D-FINE: REDEFINE REGRESSION TASK IN DETRS AS FINE-GRAINED DISTRIBUTION REFINEMENT

D-FINE:将DETR中的回归任务重新定义为细粒度分布优化

Figure 1: Comparisons with other detectors in terms of latency (left), model size (mid), and computational cost (right). We measure end-to-end latency using TensorRT FP16 on an NVIDIA T4 GPU.

图 1: 与其他检测器在延迟(左)、模型大小(中)和计算成本(右)方面的比较。我们在 NVIDIA T4 GPU 上使用 TensorRT FP16 测量端到端延迟。

ABSTRACT

摘要

We introduce D-FINE, a powerful real-time object detector that achieves outstanding localization precision by redefining the bounding box regression task in DETR models. D-FINE comprises two key components: Fine-grained Distribution Refinement (FDR) and Global Optimal Localization Self-Distillation (GO-LSD). FDR transforms the regression process from predicting fixed coordinates to iteratively refining probability distributions, providing a fine-grained intermediate representation that significantly enhances localization accuracy. GO-LSD is a bidirectional optimization strategy that transfers localization knowledge from refined distributions to shallower layers through self-distillation, while also simplifying the residual prediction tasks for deeper layers. Additionally, D-FINE incorporates lightweight optimizations in computationally intensive modules and operations, achieving a better balance between speed and accuracy. Specifically, D-FINE-L / X achieve $54.0\%/55.8\%$ AP on the COCO dataset at 124 / 78 FPS on an NVIDIA T4 GPU. When pretrained on Objects365, D-FINE-L / X attain $57.1\%/59.3\%$ AP, surpassing all existing real-time detectors. Furthermore, our method significantly enhances the performance of a wide range of DETR models by up to $5.3\%$ AP with negligible extra parameters and training costs. Our code and pretrained models: https://github.com/Peterande/D-FINE.

我们介绍了 D-FINE,这是一种强大的实时目标检测器,通过重新定义 DETR 模型中的边界框回归任务,实现了出色的定位精度。D-FINE 包含两个关键组件:细粒度分布优化 (Fine-grained Distribution Refinement, FDR) 和全局最优定位自蒸馏 (Global Optimal Localization Self-Distillation, GO-LSD)。FDR 将回归过程从预测固定坐标转变为迭代优化概率分布,提供了细粒度的中间表示,显著提高了定位精度。GO-LSD 是一种双向优化策略,通过自蒸馏将定位知识从优化后的分布传递到较浅层,同时简化了较深层的残差预测任务。此外,D-FINE 在计算密集型模块和操作中引入了轻量级优化,在速度和精度之间实现了更好的平衡。具体来说,D-FINE-L/X 在 NVIDIA T4 GPU 上以 124/78 FPS 的速度在 COCO 数据集上实现了 $54.0%/55.8%$ AP。在 Objects365 上预训练后,D-FINE-L/X 达到了 $57.1%/59.3%$ AP,超越了所有现有的实时检测器。此外,我们的方法显著提升了多种 DETR 模型的性能,AP 提升了高达 $5.3%$,且额外参数和训练成本几乎可以忽略不计。我们的代码和预训练模型:https://github.com/Peterande/D-FINE。

1 INTRODUCTION

1 引言

The demand for real-time object detection has been increasing across various applications (Arani et al., 2022). Among the most influential real-time detectors are the YOLO series (Redmon et al., 2016a; Wang et al., 2023a;b; Glenn., 2023; Wang & Liao, 2024; Wang et al., 2024a; Glenn., 2024), widely recognized for their efficiency and robust community ecosystem. As a strong competitor, the Detection Transformer (DETR) (Carion et al., 2020; Zhu et al., 2020; Liu et al., 2021; Li et al., 2022; Zhang et al., 2022) offers distinct advantages due to its transformer-based architecture, which allows for global context modeling and direct set prediction without reliance on Non-Maximum Suppression (NMS) and anchor boxes. However, DETR models are often hindered by high latency and computational demands (Zhu et al., 2020; Liu et al., 2021; Li et al., 2022; Zhang et al., 2022). RT-DETR (Zhao et al., 2024) addresses these limitations by developing a real-time variant, offering an end-to-end alternative to YOLO detectors. Moreover, LW-DETR (Chen et al., 2024) has shown that DETR can achieve higher performance ceilings than YOLO, especially when trained on large-scale datasets like Objects365 (Shao et al., 2019).

实时目标检测的需求在各种应用中不断增加 (Arani et al., 2022)。其中最具影响力的实时检测器是YOLO系列 (Redmon et al., 2016a; Wang et al., 2023a;b; Glenn., 2023; Wang & Liao, 2024; Wang et al., 2024a; Glenn., 2024),因其效率和强大的社区生态系统而广受认可。作为强有力的竞争对手,Detection Transformer (DETR) (Carion et al., 2020; Zhu et al., 2020; Liu et al., 2021; Li et al., 2022; Zhang et al., 2022) 凭借其基于Transformer的架构,提供了独特的优势,能够进行全局上下文建模,并且无需依赖非极大值抑制 (NMS) 和锚框即可直接进行集合预测。然而,它们往往受到高延迟和高计算需求的限制 (Zhu et al., 2020; Liu et al., 2021; Li et al., 2022; Zhang et al., 2022)。RT-DETR (Zhao et al., 2024) 通过开发实时变体解决了这些限制,为YOLO检测器提供了一种端到端的替代方案。此外,LW-DETR (Chen et al., 2024) 表明,DETR在诸如Objects365 (Shao et al., 2019) 等大规模数据集上训练时,可以比YOLO达到更高的性能上限。

Despite the substantial progress made in real-time object detection, several unresolved issues continue to limit the performance of detectors. One key challenge is the formulation of bounding box regression. Most detectors predict bounding boxes by regressing fixed coordinates, treating edges as precise values modeled by Dirac delta distributions (Liu et al., 2016; Ren et al., 2015; Tian et al., 2019; Lyu et al., 2022). While straightforward, this approach fails to model localization uncertainty. Consequently, models are constrained to use L1 loss and IoU loss, which provide insufficient guidance for adjusting each edge independently (Girshick, 2015). This makes the optimization process sensitive to small coordinate changes, leading to slow convergence and suboptimal performance. Although methods like GFocal (Li et al., 2020; 2021) address uncertainty through probability distributions, they remain limited by anchor dependency, coarse localization, and lack of iterative refinement. Another challenge lies in maximizing the efficiency of real-time detectors, which are constrained by limited computation and parameter budgets to maintain speed. Knowledge distillation (KD) is a promising solution, transferring knowledge from larger teachers to smaller students to improve performance without increasing costs (Hinton et al., 2015). However, traditional KD methods like Logit Mimicking and Feature Imitation have proven inefficient for detection tasks and can even cause performance drops in state-of-the-art models (Zheng et al., 2022). In contrast, localization distillation (LD) has shown better results for detection. Nevertheless, integrating LD remains challenging due to its substantial training overhead and incompatibility with anchor-free detectors.

尽管实时目标检测取得了显著进展,但一些未解决的问题仍然限制了检测器的性能。一个关键挑战是边界框回归的公式化。大多数检测器通过回归固定坐标来预测边界框,将边缘视为由狄拉克δ分布建模的精确值 (Liu et al., 2016; Ren et al., 2015; Tian et al., 2019; Lyu et al., 2022)。虽然这种方法简单直接,但未能建模定位不确定性。因此,模型被限制使用L1损失和IoU损失,这些损失无法为独立调整每个边缘提供足够的指导 (Girshick, 2015)。这使得优化过程对小的坐标变化敏感,导致收敛速度慢和性能不佳。尽管像GFocal (Li et al., 2020; 2021) 这样的方法通过概率分布解决了不确定性问题,但它们仍然受限于锚点依赖、粗略定位以及缺乏迭代优化。另一个挑战在于最大化实时检测器的效率,这些检测器受限于有限的计算和参数预算以保持速度。知识蒸馏 (Knowledge Distillation, KD) 是一种有前途的解决方案,它将知识从较大的教师模型转移到较小的学生模型,从而在不增加成本的情况下提高性能 (Hinton et al., 2015)。然而,传统的KD方法如Logit Mimicking和Feature Imitation在检测任务中已被证明效率低下,甚至可能导致最先进模型的性能下降 (Zheng et al., 2022)。相比之下,定位蒸馏 (Localization Distillation, LD) 在检测中表现出更好的效果。然而,由于LD的训练开销较大且与无锚点检测器不兼容,其集成仍然具有挑战性。

To address these issues, we propose D-FINE, a novel real-time object detector that redefines bounding box regression and introduces an effective self-distillation strategy. Our approach tackles the problems of difficult optimization in fixed-coordinate regression, the inability to model localization uncertainty, and the need for effective distillation with less training cost. We introduce Fine-grained Distribution Refinement (FDR) to transform bounding box regression from predicting fixed coordinates to modeling probability distributions, providing a more fine-grained intermediate representation. FDR refines these distributions iteratively in a residual manner, allowing for progressively finer adjustments and improving localization precision. Recognizing that deeper layers produce more accurate predictions by capturing richer localization information within their probability distributions, we introduce Global Optimal Localization Self-Distillation (GO-LSD). GO-LSD transfers localization knowledge from deeper layers to shallower ones with negligible extra training cost. By aligning shallower layers’ predictions with refined outputs from later layers, the model learns to produce better early adjustments, accelerating convergence and improving overall performance. Furthermore, we streamline computationally intensive modules and operations in existing real-time DETR architectures (Zhao et al., 2024; Chen et al., 2024), making D-FINE faster and more lightweight. While such modifications typically result in performance loss, FDR and GO-LSD effectively mitigate this degradation, achieving a better balance between speed and accuracy.

为了解决这些问题,我们提出了 D-FINE,一种新颖的实时目标检测器,它重新定义了边界框回归,并引入了一种有效的自蒸馏策略。我们的方法解决了固定坐标回归中优化困难、无法建模定位不确定性以及需要以更低的训练成本进行有效蒸馏的问题。我们引入了细粒度分布精炼 (FDR) ,将边界框回归从预测固定坐标转变为建模概率分布,提供了更细粒度的中间表示。FDR 以残差的方式迭代地精炼这些分布,允许逐步进行更精细的调整并提高定位精度。我们认识到,更深层次通过在其概率分布中捕获更丰富的定位信息来产生更准确的预测,因此我们引入了全局最优定位自蒸馏 (GO-LSD) 。GO-LSD 以几乎可以忽略的额外训练成本将定位知识从更深层次传递到更浅层次。通过将更浅层次的预测与后续层次的精炼输出对齐,模型学会产生更好的早期调整,加速收敛并提高整体性能。此外,我们简化了现有实时 DETR 架构 (Zhao et al., 2024; Chen et al., 2024) 中计算密集型的模块和操作,使 D-FINE 更快且更轻量。虽然此类修改通常会导致性能下降,但 FDR 和 GO-LSD 有效地缓解了这种退化,在速度和准确性之间实现了更好的平衡。

Experimental results on the COCO dataset (Lin et al., 2014a) demonstrate that D-FINE achieves state-of-the-art performance in real-time object detection, surpassing existing models in accuracy and efficiency. D-FINE-L and D-FINE-X achieve $54.0\%$ and $55.8\%$ AP, respectively, on COCO val2017, running at 124 FPS and 78 FPS on an NVIDIA T4 GPU. After pretraining on larger datasets like Objects365 (Shao et al., 2019), the D-FINE series attains up to $59.3\%$ AP, surpassing all existing real-time detectors and showcasing both scalability and robustness. Moreover, our method enhances a variety of DETR models by up to $5.3\%$ AP with negligible extra parameters and training costs, demonstrating its flexibility and generalizability. In conclusion, D-FINE pushes the performance boundaries of real-time detectors. By addressing key challenges in bounding box regression and distillation efficiency through FDR and GO-LSD, we offer a meaningful step forward in object detection, inspiring further exploration in the field.

在COCO数据集(Lin et al., 2014a)上的实验结果表明,D-FINE在实时目标检测中实现了最先进的性能,在准确性和效率方面超越了现有模型。D-FINE-L和D-FINE-X在COCO val2017上分别达到了$54.0%$和$55.8%$的AP(平均精度),在NVIDIA T4 GPU上分别以$124;\mathrm{FPS}$和78 FPS的速度运行。在更大的数据集(如Objects365(Shao et al., 2019))上进行预训练后,D-FINE系列的AP达到了$59.3%$,超越了所有现有的实时检测器,展示了其可扩展性和鲁棒性。此外,我们的方法通过FDR和GO-LSD解决了边界框回归和蒸馏效率的关键挑战,使各种DETR模型的AP提高了$5.3%$,而额外的参数和训练成本几乎可以忽略不计,展示了其灵活性和通用性。总之,D-FINE推动了实时检测器的性能边界,为目标检测领域提供了有意义的前进方向,并激励了该领域的进一步探索。

2 RELATED WORK

Real-Time / End-to-End Object Detectors. The YOLO series has led the way in real-time object detection, evolving through innovations in architecture, data augmentation, and training techniques (Redmon et al., 2016a; Wang et al., 2023a;b; Glenn., 2023; Wang & Liao, 2024; Glenn., 2024). While efficient, YOLOs typically rely on Non-Maximum Suppression (NMS), which introduces latency and instability in both speed and accuracy. DETR (Carion et al., 2020) revolutionizes object detection by removing the need for hand-crafted components like NMS and anchors. Traditional DETRs (Zhu et al., 2020; Meng et al., 2021; Zhang et al., 2022; Wang et al., 2022; Liu et al., 2021; Li et al., 2022; Chen et al., 2022a;c) have achieved excellent performance but at the cost of high computational demands, making them unsuitable for real-time applications. Recently, RT-DETR (Zhao et al., 2024) and LW-DETR (Chen et al., 2024) have successfully adapted DETR for real-time use. Concurrently, YOLOv10 (Wang et al., 2024a) also eliminates the need for NMS, marking a significant shift towards end-to-end detection within the YOLO series.

实时/端到端目标检测器。YOLO系列在实时目标检测领域一直处于领先地位,通过架构、数据增强和训练技术的创新不断演进 (Redmon et al., 2016a; Wang et al., 2023a;b; Glenn., 2023; Wang & Liao, 2024; Glenn., 2024)。尽管YOLO效率高,但它通常依赖于非极大值抑制 (Non-Maximum Suppression, NMS),这会在速度和准确性之间引入延迟和不稳定性。DETR (Carion et al., 2020) 通过去除NMS和锚点等手工设计的组件,彻底改变了目标检测领域。传统的DETRs (Zhu et al., 2020; Meng et al., 2021; Zhang et al., 2022; Wang et al., 2022; Liu et al., 2021; Li et al., 2022; Chen et al., 2022a;c) 虽然取得了卓越的性能,但计算需求高,不适合实时应用。最近,RTDETR (Zhao et al., 2024) 和 LW-DETR (Chen et al., 2024) 成功地将DETR应用于实时场景。同时,YOLOv10 (Wang et al., 2024a) 也消除了对NMS的需求,标志着YOLO系列向端到端检测的重大转变。

Distribution-Based Object Detection. Traditional bounding box regression methods (Redmon et al., 2016b; Liu et al., 2016; Ren et al., 2015) rely on Dirac delta distributions, treating bounding box edges as precise and fixed, which makes modeling localization uncertainty challenging. To address this, recent models have employed Gaussian or discrete distributions to represent bounding boxes (Choi et al., 2019; Li et al., 2020; Qiu et al., 2020; Li et al., 2021), enhancing the modeling of uncertainty. However, these methods all rely on anchor-based frameworks, which limits their compatibility with modern anchor-free detectors like YOLOX (Ge et al., 2021) and DETR (Carion et al., 2020). Furthermore, their distribution representations are often formulated in a coarse-grained manner and lack effective refinement, hindering their ability to achieve more accurate predictions.

基于分布的目标检测

Knowledge Distillation. Knowledge distillation (KD) (Hinton et al., 2015) is a powerful model compression technique. Traditional KD typically focuses on transferring knowledge through Logit Mimicking (Zagoruyko & Komodakis, 2017; Mirzadeh et al., 2020; Son et al., 2021). FitNets (Romero et al., 2015) first proposed Feature Imitation, which has inspired a series of subsequent works that expand upon this idea (Chen et al., 2017; Dai et al., 2021; Guo et al., 2021; Li et al., 2017; Wang et al., 2019). Most approaches for DETR (Chang et al., 2023; Wang et al., 2024b) incorporate hybrid distillation of both logits and various intermediate representations. Recently, localization distillation (LD) (Zheng et al., 2022) demonstrated that transferring localization knowledge is more effective for detection tasks. Self-distillation (Zhang et al., 2019; 2021) is a special case of KD that enables earlier layers to learn from the model’s own refined outputs, requiring far lower additional training cost since there is no need to train a separate teacher model.

知识蒸馏 (Knowledge Distillation)。知识蒸馏 (KD) (Hinton et al., 2015) 是一种强大的模型压缩技术。传统的 KD 通常专注于通过 Logit Mimicking (Zagoruyko & Komodakis, 2017; Mirzadeh et al., 2020; Son et al., 2021) 来传递知识。FitNets (Romero et al., 2015) 首次提出了特征模仿 (Feature Imitation),这一方法激发了一系列后续工作,进一步扩展了这一思路 (Chen et al., 2017; Dai et al., 2021; Guo et al., 2021; Li et al., 2017; Wang et al., 2019)。大多数 DETR (Chang et al., 2023; Wang et al., 2024b) 的方法都结合了 logit 和各种中间表示的混合蒸馏。最近,定位蒸馏 (Localization Distillation, LD) (Zheng et al., 2022) 表明,传递定位知识对于检测任务更为有效。自蒸馏 (Self-distillation) (Zhang et al., 2019; 2021) 是 KD 的一种特殊情况,它使得早期层可以从模型自身精炼的输出中学习,由于不需要单独训练教师模型,因此所需的额外训练成本大大减少。

3 PRELIMINARIES

3 预备知识

Bounding box regression in object detection has traditionally relied on modeling Dirac delta distributions, either using centroid-based $\{x,y,w,h\}$ or edge-distance $\{\mathbf{c},\mathbf{d}\}$ forms, where the distances $\mathbf{d}=\{t,b,l,r\}$ are measured from the anchor point $\mathbf{c}=\{x_{c},y_{c}\}$. However, the Dirac delta assumption, which treats bounding box edges as precise and fixed, makes it difficult to model localization uncertainty, especially in ambiguous cases. This rigid representation not only limits optimization but also leads to significant localization errors with small prediction shifts.

目标检测中的边界框回归传统上依赖于建模狄拉克δ分布,通常使用基于中心点的 ${x,y,w,h}$ 或基于边缘距离的 ${\mathbf{c},\mathbf{d}}$ 形式,其中距离 $\mathbf{d}={t,b,l,r}$ 是从锚点 $\mathbf{c}={x_{c},y_{c}}$ 测量的。然而,狄拉克δ假设将边界框边缘视为精确且固定的,这使得在模糊情况下难以建模定位不确定性。这种刚性表示不仅限制了优化,还在预测发生微小偏移时导致显著的定位误差。

To address these problems, GFocal (Li et al., 2020; 2021) regresses the distances from anchor points to the four edges using discretized probability distributions, offering a more flexible modeling of the bounding box. In practice, bounding box distances $\mathbf{d}=\{t,b,l,r\}$ are modeled as:

为了解决这些问题,GFocal (Li et al., 2020; 2021) 使用离散概率分布回归从锚点到四个边缘的距离,提供了更灵活的边界框建模。在实际应用中,边界框距离 $\mathbf{d}={t,b,l,r}$ 被建模为:

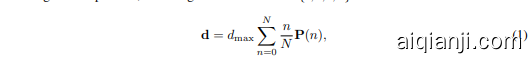

where $d_{\mathrm{max}}$ is a scalar that limits the maximum distance from the anchor center, and ${\mathbf P}(n)$ denotes the probability of each candidate distance of four edges. While GFocal introduces a step forward in handling ambiguity and uncertainty through probability distributions, specific challenges in its regression approach persist: (1) Anchor Dependency: Regression is tied to the anchor box center, limiting prediction diversity and compatibility with anchor-free frameworks. (2) No Iterative Refinement: Predictions are made in one shot without iterative refinements, reducing regression robustness.

其中 $d_{\mathrm{max}}$ 是一个标量,用于限制与锚点中心的最大距离,${\mathbf P}(n)$ 表示四条边每个候选距离的概率。尽管 GFocal 通过概率分布在处理模糊性和不确定性方面迈出了一步,但其回归方法仍存在一些特定挑战:(1) 锚点依赖性:回归与锚点框中心绑定,限制了预测的多样性以及与无锚点框架的兼容性。(2) 无迭代优化:预测是一次性完成的,没有迭代优化,降低了回归的鲁棒性。

(3) Coarse Localization: Fixed distance ranges and uniform bin intervals can lead to coarse localization, especially for small objects, because each bin represents a wide range of possible values.

(3) 粗定位:固定的距离范围和均匀的区间间隔可能导致粗定位,尤其是对于小物体,因为每个区间代表了一个广泛的可能值范围。
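
To make the GFocal-style formulation concrete, the following minimal Python sketch decodes a single edge distance as the expectation of a discrete distribution over $N+1$ uniformly spaced bins, $d = d_{\max}\sum_{n=0}^{N}\frac{n}{N}\mathbf{P}(n)$. This closed form is assumed here from the surrounding definitions (the display equation is only available as an image in this copy), and the function names and the values `d_max = 16`, `N = 16` are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_distance(logits, d_max):
    """Expected distance under a distribution over N+1 uniform bins in [0, d_max]."""
    probs = softmax(logits)
    n_bins = len(probs) - 1  # N
    return d_max * sum((n / n_bins) * p for n, p in enumerate(probs))


def bin_width(d_max, n_bins):
    """Spacing between neighbouring candidate distances (uniform bins)."""
    return d_max / n_bins


# A distribution sharply peaked at bin 4 out of bins 0..16.
peaked = [0.0] * 17
peaked[4] = 50.0
d_peaked = decode_distance(peaked, d_max=16.0)

# A flat distribution decodes to the middle of the range.
d_flat = decode_distance([0.0] * 17, d_max=16.0)
```

With a fixed range and uniform spacing, every bin covers `bin_width(d_max, N)` units regardless of object size, which is the coarse-localization issue listed in (3).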

Localization Distillation (LD) is a promising approach, demonstrating that transferring localization knowledge is more effective for detection tasks (Zheng et al., 2022). Built upon GFocal, it enhances student models by distilling valuable localization knowledge from teacher models, rather than simply mimicking classification logits or feature maps. Despite its advantages, the method still relies on anchor-based architectures and incurs additional training costs.

定位蒸馏 (Localization Distillation, LD) 是一种有前景的方法,研究表明,在检测任务中传递定位知识更为有效 (Zheng et al., 2022)。该方法基于 GFocal,通过从教师模型中蒸馏出有价值的定位知识来增强学生模型,而不是简单地模仿分类 logits 或特征图。尽管有其优势,但该方法仍然依赖于基于锚点的架构,并会产生额外的训练成本。

4 METHOD

4 方法

We propose D-FINE, a powerful real-time object detector that excels in speed, size, computational cost, and accuracy. D-FINE addresses the shortcomings of existing bounding box regression approaches by leveraging two key components: Fine-grained Distribution Refinement (FDR) and Global Optimal Localization Self-Distillation (GO-LSD), which work in tandem to significantly enhance performance with negligible additional parameters and training time cost.

我们提出了 D-FINE,一种在速度、大小、计算成本和准确性方面表现出色的实时目标检测器。D-FINE 通过利用两个关键组件来解决现有边界框回归方法的不足:细粒度分布优化 (Fine-grained Distribution Refinement, FDR) 和全局最优定位自蒸馏 (Global Optimal Localization Self-Distillation, GO-LSD),这两个组件协同工作,显著提升了性能,同时增加的参数和训练时间成本几乎可以忽略不计。

(1) FDR iteratively optimizes probability distributions that act as corrections to the bounding box predictions, providing a more fine-grained intermediate representation. This approach captures and optimizes the uncertainty of each edge independently. By leveraging the non-uniform weighting function, FDR allows for more precise and incremental adjustments at each decoder layer, improving localization accuracy and reducing prediction errors. FDR operates within an anchor-free, end-to-end framework, enabling a more flexible and robust optimization process.

(1) FDR 通过迭代优化概率分布,作为边界框预测的修正,提供更细粒度的中间表示。该方法独立捕获并优化每条边缘的不确定性。通过利用非均匀权重函数,FDR 允许在每个解码器层进行更精确和逐步的调整,从而提高定位精度并减少预测误差。FDR 在无锚框、端到端的框架内运行,实现了更灵活和鲁棒的优化过程。

(2) GO-LSD distills localization knowledge from refined distributions into shallower layers. As training progresses, the final layer produces increasingly precise soft labels. Shallower layers align their predictions with these labels through GO-LSD, leading to more accurate predictions. As early-stage predictions improve, the subsequent layers can focus on refining smaller residuals. This mutual reinforcement creates a synergistic effect, leading to progressively more accurate localization.

(2) GO-LSD 将定位知识从精炼的分布中蒸馏到较浅的层中。随着训练的进行,最终层产生越来越精确的软标签。较浅的层通过 GO-LSD 将其预测与这些标签对齐,从而产生更准确的预测。随着早期预测的改进,后续层可以专注于精炼较小的残差。这种相互强化产生了协同效应,从而逐步提高定位的准确性。

To further enhance the efficiency of D-FINE, we streamline computationally intensive modules and operations in existing real-time DETR architectures (Zhao et al., 2024), making D-FINE faster and more lightweight. Although these modifications typically result in some performance loss, FDR and GO-LSD effectively mitigate this degradation. The detailed modifications are listed in Table 3.

为进一步提升 D-FINE 的效率,我们简化了现有实时 DETR 架构 (Zhao et al., 2024) 中计算密集型的模块和操作,使 D-FINE 更快、更轻量。尽管这些修改通常会导致一些性能损失,但 FDR 和 GO-LSD 有效地缓解了这种退化。详细修改列于表 3。

4.1 FINE-GRAINED DISTRIBUTION REFINEMENT

4.1 细粒度分布优化

Fine-grained Distribution Refinement (FDR) iteratively optimizes a fine-grained distribution generated by the decoder layers, as shown in Figure 2. Initially, the first decoder layer predicts preliminary bounding boxes and preliminary probability distributions through a traditional bounding box regression head and a D-FINE head (both heads are MLPs; only their output dimensions differ). Each bounding box is associated with four distributions, one for each edge. The initial bounding boxes serve as reference boxes, while subsequent layers focus on refining them by adjusting distributions in a residual manner. The refined distributions are then applied to adjust the four edges of the corresponding initial bounding box, progressively improving its accuracy with each iteration.

细粒度分布优化 (Fine-grained Distribution Refinement, FDR) 迭代优化由解码器层生成的细粒度分布,如图 2 所示。最初,第一个解码器层通过传统的边界框回归头和一个 D-FINE 头(两者都是 MLP,仅输出维度不同)预测初步的边界框和初步的概率分布。每个边界框与四个分布相关联,每个边对应一个。初始边界框作为参考框,而后续层则通过残差方式调整分布来优化它们。优化后的分布随后用于调整相应初始边界框的四个边,逐步提高其准确性。

Mathematically, let $\mathbf{b}^{0}=\{x,y,W,H\}$ denote the initial bounding box prediction, where $\{x,y\}$ represents the predicted center of the bounding box, and $\{W,H\}$ represent the width and height of the box. We can then convert $\mathbf{b}^{0}$ into the center coordinates $\mathbf{c}^{0}=\{x,y\}$ and the edge distances $\mathbf{d}^{0}=\{t,b,l,r\}$, which represent the distances from the center to the top, bottom, left, and right edges. For the $l$-th layer, the refined edge distances $\mathbf{d}^{l}=\{t^{l},b^{l},l^{l},r^{l}\}$ are computed as:

数学上,令 $\mathbf{b}^{0}=\{x,y,W,H\}$ 表示初始的边界框预测,其中 $\{x,y\}$ 表示边界框的预测中心,$\{W,H\}$ 表示边界框的宽度和高度。然后我们可以将 $\mathbf{b}^{0}$ 转换为中心坐标 $\mathbf{c}^{0}=\{x,y\}$ 和边缘距离 $\mathbf{d}^{0}=\{t,b,l,r\}$,这些距离表示从中心到上、下、左、右边缘的距离。对于第 $l$ 层,精炼后的边缘距离 $\mathbf{d}^{l}=\{t^{l},b^{l},l^{l},r^{l}\}$ 计算如下:

$$\mathbf{d}^{l}=\mathbf{d}^{0}+\{H,H,W,W\}\cdot\sum_{n=0}^{N}W(n)\,\mathbf{Pr}^{l}(n),\quad l\in\{1,2,\dots,L\}$$

where $\mathbf{Pr}^{l}(n)=\{\mathrm{Pr}_{t}^{l}(n),\mathrm{Pr}_{b}^{l}(n),\mathrm{Pr}_{l}^{l}(n),\mathrm{Pr}_{r}^{l}(n)\}$ represents four separate distributions, one for each edge. Each distribution predicts the likelihood of candidate offset values for the corresponding edge. These candidates are determined by the weighting function $W(n)$, where $n$ indexes the discrete bins out of $N$, with each bin corresponding to a potential edge offset. The weighted sum of the distributions produces the edge offsets. These edge offsets are then scaled by the height $H$ and width $W$ of the initial bounding box, ensuring the adjustments are proportional to the box size.

其中 $\mathbf{Pr}^{l}(n)=\{\mathrm{Pr}_{t}^{l}(n),\mathrm{Pr}_{b}^{l}(n),\mathrm{Pr}_{l}^{l}(n),\mathrm{Pr}_{r}^{l}(n)\}$ 表示四个独立的分布,每个分布对应一条边。每个分布预测对应边的候选偏移值的可能性。这些候选值由加权函数 $W(n)$ 确定,其中 $n$ 索引 $N$ 个离散的区间,每个区间对应一个潜在的边偏移。分布的加权和产生边偏移。然后,这些边偏移通过初始边界框的高度 $H$ 和宽度 $W$ 进行缩放,确保调整与框的大小成比例。
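
The edge update described above, in which each edge distance of the initial box is shifted by the $W(n)$-weighted sum of its distribution and scaled by $H$ (top, bottom) or $W$ (left, right), can be sketched in plain Python. This is a minimal illustration under stated assumptions rather than the released implementation: the helper names, the three-bin `weights` list, and the example box are hypothetical, and image coordinates are assumed to grow downward.

```python
def box_to_center_and_distances(box):
    """Convert {x, y, W, H} into a center (x, y) and edge distances (t, b, l, r)."""
    x, y, w, h = box
    return (x, y), (h / 2.0, h / 2.0, w / 2.0, w / 2.0)


def refine_distances(d0, box_wh, probs_per_edge, weights):
    """Apply d^l = d^0 + {H, H, W, W} * sum_n W(n) * Pr^l(n), edge by edge."""
    w, h = box_wh
    scales = (h, h, w, w)  # top/bottom scale with H, left/right scale with W
    refined = []
    for d, scale, probs in zip(d0, scales, probs_per_edge):
        offset = sum(wn * p for wn, p in zip(weights, probs))
        refined.append(d + scale * offset)
    return tuple(refined)


def distances_to_xyxy(center, d):
    """Turn a center and (t, b, l, r) distances into (x_min, y_min, x_max, y_max)."""
    x, y = center
    t, b, l, r = d
    return (x - l, y - t, x + r, y + b)


weights = [-0.5, 0.0, 0.5]  # hypothetical candidate offsets W(n)
center, d0 = box_to_center_and_distances((50.0, 40.0, 20.0, 10.0))
probs = [
    [0.0, 1.0, 0.0],        # top: keep as is
    [0.0, 0.0, 1.0],        # bottom: push outward by 0.5 * H
    [1.0, 0.0, 0.0],        # left: pull inward by 0.5 * W
    [1 / 3, 1 / 3, 1 / 3],  # right: symmetric distribution, zero net offset
]
d1 = refine_distances(d0, (20.0, 10.0), probs, weights)
box1 = distances_to_xyxy(center, d1)
```

Because the offsets are always applied to the initial distances $\mathbf{d}^{0}$, each layer only has to produce a better distribution, not a new absolute box.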

Figure 2: Overview of D-FINE with FDR. The probability distributions that act as a more fine-grained intermediate representation are iteratively refined by the decoder layers in a residual manner. Non-uniform weighting functions are applied to allow for finer localization.

图 2: 带有 FDR 的 D-FINE 概述。作为更细粒度中间表示的概率分布通过解码器层以残差方式迭代优化。应用非均匀加权函数以实现更精细的定位。

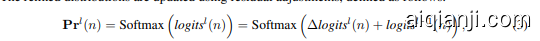

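The refined distributions are updated using residual adjustments, defined as follows:

使用残差调整更新精炼分布,定义如下:

$$\mathbf{Pr}^{l}(n)=\mathrm{Softmax}\left(logits^{l}(n)\right)=\mathrm{Softmax}\left(\Delta logits^{l}(n)+logits^{l-1}(n)\right)$$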

where logits from the previous layer $logits^{l-1}(n)$ reflect the confidence in each bin’s offset value for the four edges. The current layer predicts the residual logits $\Delta logits^{l}(n)$, which are added to the previous logits to form updated logits $logits^{l}(n)$. These updated logits are then normalized using the softmax function, producing the refined probability distributions.

其中前一层的 logits $logits^{l-1}(n)$ 反映了四个边缘每个 bin 偏移值的置信度。当前层预测残差 logits $\Delta logits^{l}(n)$ ,这些残差 logits 被加到前一层的 logits 上,形成更新的 logits $logits^{l}(n)$ 。然后使用 softmax 函数对这些更新的 logits 进行归一化,生成精炼的概率分布。
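
The residual update of the logits can be illustrated with a short, self-contained sketch. It assumes only what the paragraph states (add the residual logits to the previous logits, then apply softmax); the function names and the toy five-bin example are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def refine_distribution(prev_logits, delta_logits):
    """Return (logits^l, Pr^l) where logits^l = logits^{l-1} + delta_logits^l."""
    new_logits = [p + d for p, d in zip(prev_logits, delta_logits)]
    return new_logits, softmax(new_logits)


# Two decoder layers that each add evidence for the central bin.
logits = [0.0] * 5
center_prob_per_layer = []
for delta in ([0.0, 0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0, 0.0]):
    logits, probs = refine_distribution(logits, delta)
    center_prob_per_layer.append(probs[2])
```

Since each layer predicts only a residual, a layer that outputs all-zero residual logits leaves the distribution from the previous layer unchanged.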

To facilitate precise and flexible adjustments, the weighting function $W(n)$ is defined as:

为了便于精确和灵活调整,权重函数 $W(n)$ 定义为:

where $a$ and $c$ are hyper-parameters controlling the upper bounds and curvature of the function. As shown in Figure 2, the shape of $W(n)$ ensures that when bounding box prediction is near accurate, small curvature in $W(n)$ allows for finer adjustments. Conversely, if the bounding box prediction is far from accurate, the larger curvature near the edges and the sharp changes at the boundaries of $W(n)$ ensure sufficient flexibility for substantial corrections.

其中 $a$ 和 $c$ 是控制函数上限和曲率的超参数。如图 2 所示,$W(n)$ 的形状确保了当边界框预测接近准确时,$W(n)$ 中的小曲率允许进行更精细的调整。相反,如果边界框预测不准确,$W(n)$ 边缘附近的较大曲率和边界处的急剧变化确保了足够的灵活性以进行大幅修正。
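
As an illustration of how such a non-uniform weighting function behaves, the sketch below implements one piecewise form that is consistent with the description: symmetric about the central bin, exponentially spaced so that steps are small near zero, bounded by $\pm a$ on the interior bins, and jumping to $\pm 2a$ at the two boundary bins. The exact piecewise expression and the values `a = 0.5`, `c = 0.25`, `N = 32` are assumptions of this sketch, since the defining equation is not reproduced in this copy.

```python
def weighting_function(n_bins, a, c):
    """Return [W(0), ..., W(N)] for an even N = n_bins >= 4 (assumed form)."""
    if n_bins < 4 or n_bins % 2:
        raise ValueError("n_bins must be an even integer >= 4")
    N = n_bins
    base = a / c + 1.0
    values = []
    for n in range(N + 1):
        if n == 0:
            values.append(-2.0 * a)  # sharp jump at the left boundary
        elif n == N:
            values.append(2.0 * a)   # sharp jump at the right boundary
        elif n < N / 2:
            values.append(c - c * base ** ((N - 2 * n) / (N - 2)))
        else:
            values.append(-c + c * base ** ((2 * n - N) / (N - 2)))
    return values


W = weighting_function(32, a=0.5, c=0.25)
step_near_center = W[17] - W[16]
step_near_edge = W[31] - W[30]
step_at_boundary = W[32] - W[31]
```

In this form, the candidate offsets are densest around zero, so a nearly correct box receives fine corrections, while the boundary bins allow a large correction in a single step.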

To further improve the accuracy of our distribution predictions and align them with ground truth values, inspired by Distribution Focal Loss (DFL) (Li et al., 2020), we propose a new loss function, Fine-Grained Localization (FGL) Loss, which is computed as:

为了进一步提高我们的分布预测准确性并与真实值对齐,受分布焦点损失 (Distribution Focal Loss, DFL) (Li et al., 2020) 的启发,我们提出了一种新的损失函数,称为细粒度定位 (Fine-Grained Localization, FGL) 损失,其计算公式为:

where $\mathbf{Pr}^{l}(n)_{k}$ represents the probability distributions corresponding to the $k$-th prediction. $\phi$ is the relative offset calculated as $\phi=(\mathbf{d}^{GT}-\mathbf{d}^{0})/\{H,H,W,W\}$. $\mathbf{d}^{GT}$ represents the ground truth edge distance and $n_{\leftarrow},n_{\rightarrow}$ are the bin indices adjacent to $\phi$. The cross-entropy (CE) losses with weights $\omega_{\leftarrow}$ and $\omega_{\rightarrow}$ ensure that the interpolation between bins aligns precisely with the ground truth offset. By incorporating IoU-based weighting, FGL loss encourages distributions with lower uncertainty to become more concentrated, resulting in more precise and reliable bounding box regression.

其中 $\mathbf{Pr}^{l}(n)_{k}$ 表示第 $k$ 个预测对应的概率分布。$\phi$ 是相对偏移量,计算公式为 $\phi=(\mathbf{d}^{GT}-\mathbf{d}^{0})/\{H,H,W,W\}$。$\mathbf{d}^{GT}$ 表示真实边缘距离,$n_{\leftarrow},n_{\rightarrow}$ 是与 $\phi$ 相邻的区间索引。带有权重 $\omega_{\leftarrow}$ 和 $\omega_{\rightarrow}$ 的交叉熵 (Cross-Entropy, CE) 损失确保区间之间的插值精确对齐真实偏移量。通过引入基于 IoU 的加权,FGL 损失鼓励不确定性较低的分布更加集中,从而实现更精确和可靠的边界框回归。
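
A per-edge FGL term can be sketched as follows. The sketch assumes linear interpolation weights, $\omega_{\leftarrow}=\frac{|\phi-W(n_{\rightarrow})|}{|W(n_{\leftarrow})-W(n_{\rightarrow})|}$ and $\omega_{\rightarrow}=\frac{|\phi-W(n_{\leftarrow})|}{|W(n_{\leftarrow})-W(n_{\rightarrow})|}$, which is what "interpolation between bins" suggests but is not spelled out in this copy. The function names, the five-value `weights` list, and the example probabilities are hypothetical.

```python
import math
from bisect import bisect_right


def adjacent_bins(phi, weights):
    """Indices (n_left, n_right) of the bins whose values bracket phi.

    `weights` must be strictly increasing; phi is clamped into its range.
    """
    phi = min(max(phi, weights[0]), weights[-1])
    right = min(max(bisect_right(weights, phi), 1), len(weights) - 1)
    return right - 1, right


def fgl_term(probs, phi, weights, iou):
    """IoU-weighted cross-entropy against the two bins adjacent to phi."""
    n_l, n_r = adjacent_bins(phi, weights)
    phi = min(max(phi, weights[0]), weights[-1])
    span = weights[n_r] - weights[n_l]
    w_l = (weights[n_r] - phi) / span  # closer to the left bin -> larger w_l
    w_r = (phi - weights[n_l]) / span
    ce_l = -math.log(probs[n_l])
    ce_r = -math.log(probs[n_r])
    return iou * (w_l * ce_l + w_r * ce_r)


weights = [-1.0, -0.5, 0.0, 0.5, 1.0]  # hypothetical W(n) values
phi = 0.125                            # lies between W(2) = 0.0 and W(3) = 0.5
good = [0.01, 0.01, 0.73, 0.24, 0.01]  # mass split roughly 3:1 toward bin 2
bad = [0.01, 0.01, 0.24, 0.73, 0.01]   # mass on the wrong neighbour
loss_good = fgl_term(good, phi, weights, iou=1.0)
loss_bad = fgl_term(bad, phi, weights, iou=1.0)
```

With these weights the target is a two-bin soft label whose expectation equals $\phi$ (here $0.75\cdot 0.0+0.25\cdot 0.5=0.125$), and the IoU factor scales the term for each prediction.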

Figure 3: Overview of GO-LSD process. Localization knowledge from the final layer’s refined distributions is distilled into shallower layers through DDF loss with decoupled weighting strategies.

图 3: GO-LSD 流程概述。通过解耦加权策略的 DDF 损失,将最终层精炼分布中的定位知识蒸馏到较浅层中。

4.2 GLOBAL OPTIMAL LOCALIZATION SELF-DISTILLATION

4.2 全局最优定位自蒸馏

Global Optimal Localization Self-Distillation (GO-LSD) utilizes the final layer’s refined distribution predictions to distill localization knowledge into the shallower layers, as shown in Figure 3. This process begins by applying Hungarian Matching (Carion et al., 2020) to the predictions from each layer, identifying the local bounding box matches at every stage of the model. To perform a global optimization, GO-LSD aggregates the matching indices from all layers into a unified union set. This union set combines the most accurate candidate predictions across layers, ensuring that they all benefit from the distillation process. In addition to refining the global matches, GO-LSD also optimizes unmatched predictions during training, which improves training stability and, in turn, overall performance. Although the localization is optimized through this union set, the classification task still follows a one-to-one matching principle, ensuring that there are no redundant boxes. This strict matching means that some predictions in the union set are well-localized but have low confidence scores. These low-confidence predictions often represent candidates with precise localization, which still need to be distilled effectively.

全局最优定位自蒸馏 (GO-LSD) 利用最终层的精细化分布预测将定位知识蒸馏到较浅层,如图 3 所示。该过程首先将匈牙利匹配 (Carion et al., 2020) 应用于每一层的预测,以在模型的每个阶段识别局部边界框匹配。为了进行全局优化,GO-LSD 将所有层的匹配索引聚合到一个统一的联合集合中。该联合集合结合了跨层中最准确的候选预测,确保它们都能从蒸馏过程中受益。除了优化全局匹配外,GO-LSD 还在训练过程中优化未匹配的预测,以提高整体稳定性,从而提升整体性能。尽管定位通过该联合集合进行了优化,但分类任务仍遵循一对一匹配原则,确保没有冗余框。这种严格的匹配意味着联合集合中的一些预测定位良好但置信度较低。这些低置信度预测通常代表具有精确定位的候选框,仍然需要有效地蒸馏。
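
The aggregation of per-layer matches into a union set can be sketched in a few lines. The per-layer matches are taken as given inputs here (Hungarian matching itself is not implemented), each match is a hypothetical `(query_index, ground_truth_index)` pair, and how conflicting matches for the same query are resolved is not specified in this section, so the sketch simply keeps every pair.

```python
def union_of_matches(matches_per_layer):
    """Merge the (query_idx, gt_idx) pairs found at every decoder layer."""
    union = set()
    for layer_matches in matches_per_layer:
        union.update(layer_matches)
    return union


def split_queries(num_queries, union):
    """Partition query indices into those in the union set and the rest."""
    matched_set = {q for q, _ in union}
    matched = sorted(matched_set)
    unmatched = [q for q in range(num_queries) if q not in matched_set]
    return matched, unmatched


# Hypothetical matches from three decoder layers, two ground-truth boxes.
matches_per_layer = [
    [(0, 0), (3, 1)],
    [(0, 0), (5, 1)],
    [(2, 0), (5, 1)],
]
union = union_of_matches(matches_per_layer)
matched, unmatched = split_queries(6, union)
```

In this toy case four queries receive a localization target even though any single layer matches only two, which is the sense in which the union set widens the pool of candidates for distillation.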

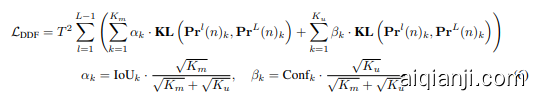

To address this, we introduce Decoupled Distillation Focal (DDF) Loss, which applies decoupled weighting strategies to ensure that high-IoU but low-confidence predictions are given appropriate weight. The DDF Loss also weights matched and unmatched predictions according to their quantity, balancing their overall contribution and individual losses. This approach results in more stable and effective distillation. The Decoupled Distillation Focal Loss $\mathcal{L}_{\mathrm{DDF}}$ is then formulated as:

为了解决这个问题,我们引入了解耦蒸馏焦点 (Decoupled Distillation Focal, DDF) 损失函数,它采用了解耦加权策略,以确保高 IoU 但低置信度的预测被赋予适当的权重。DDF 损失还根据匹配和不匹配预测的数量进行加权,平衡它们的整体贡献和个体损失。这种方法使得蒸馏过程更加稳定和有效。解耦蒸馏焦点损失 $\mathcal{L}_{\mathrm{DDF}}$ 的公式如下:

where $\mathbf{KL}$ represents the Kullback-Leibler divergence (Hinton et al., 2015), and $T$ is the temperature parameter used for smoothing logits. The distillation loss for the $k$ -th matched prediction is weighted by $\alpha_{k}$ , where $K_{m}$ and $K_{u}$ are the numbers of matched and unmatched predictions, respectively. For the $k$ -th unmatched prediction, the weight is $\beta_{k}$ , with $\mathrm{Conf}_{k}$ denoting the classification confidence.

其中 $\mathbf{KL}$ 表示 Kullback-Leibler 散度 (Hinton et al., 2015),$T$ 是用于平滑 logits 的温度参数。第 $k$ 个匹配预测的蒸馏损失由 $\alpha_{k}$ 加权,其中 $K_{m}$ 和 $K_{u}$ 分别是匹配和不匹配预测的数量。对于第 $k$ 个不匹配预测,权重为 $\beta_{k}$,$\mathrm{Conf}_{k}$ 表示分类置信度。
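
The decoupled weighting can be illustrated with a small sketch for one shallow layer. Several details are assumptions of this sketch rather than statements from the text: the weights are taken as $\alpha_{k}=\mathrm{IoU}_{k}\cdot\frac{\sqrt{K_{m}}}{\sqrt{K_{m}}+\sqrt{K_{u}}}$ and $\beta_{k}=\mathrm{Conf}_{k}\cdot\frac{\sqrt{K_{u}}}{\sqrt{K_{m}}+\sqrt{K_{u}}}$, the divergence is computed as KL(teacher ‖ student) on temperature-softened distributions, the sum is scaled by $T^{2}$, and each prediction carries a single distribution instead of four. All names and example values are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-softened, numerically stable softmax."""
    scaled = [v / temperature for v in logits]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions with strictly positive entries."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def ddf_loss(student_logits, teacher_logits, matched, ious, confs, temperature):
    """Sketch of a decoupled distillation term for one shallow layer."""
    if not matched:
        return 0.0
    k_m = sum(matched)           # number of matched predictions
    k_u = len(matched) - k_m     # number of unmatched predictions
    denom = math.sqrt(k_m) + math.sqrt(k_u)
    total = 0.0
    for s, t, is_m, iou, conf in zip(student_logits, teacher_logits,
                                     matched, ious, confs):
        kl = kl_divergence(softmax(t, temperature), softmax(s, temperature))
        if is_m:
            weight = iou * math.sqrt(k_m) / denom   # alpha_k (assumed form)
        else:
            weight = conf * math.sqrt(k_u) / denom  # beta_k (assumed form)
        total += weight * kl
    return temperature ** 2 * total


student = [[0.0, 1.0, 0.0], [2.0, 0.0, 0.0]]
teacher = [[0.0, 3.0, 0.0], [2.0, 0.0, 0.0]]
args = dict(matched=[True, False], ious=[0.8, 0.0], confs=[0.9, 0.3],
            temperature=5.0)
loss = ddf_loss(student, teacher, **args)
loss_when_equal = ddf_loss(teacher, teacher, **args)
```

Weighting matched predictions by IoU rather than by classification confidence is what lets a well-localized but low-confidence prediction still contribute to the distillation signal.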

5 EXPERIMENTS

5 实验

5.1 EXPERIMENT SETUP

5.1 实验设置

To validate the effectiveness of our proposed methods, we conduct experiments on the COCO (Lin et al., 2014a) and Objects365 (Shao et al., 2019) datasets. We evaluate our D-FINE using the standard COCO metrics, including Average Precision (AP) averaged over IoU thresholds from 0.50 to 0.95, as well as AP at specific thresholds ($\mathrm{AP}_{50}$ and $\mathrm{AP}_{75}$) and AP across different object scales: small ($\mathrm{AP}_{S}$), medium ($\mathrm{AP}_{M}$), and large ($\mathrm{AP}_{L}$). Additionally, we provide model efficiency metrics by reporting the number of parameters (#Params.), computational cost (GFLOPs), and end-to-end latency. The latency is measured using TensorRT FP16 on an NVIDIA T4 GPU.

为了验证我们提出方法的有效性,我们在 COCO (Lin et al., 2014a) 和 Objects365 (Shao et al., 2019) 数据集上进行了实验。我们使用标准的 COCO 指标评估了我们的 D-FINE,包括在 IoU 阈值从 0.50 到 0.95 之间平均的平均精度 (AP),以及特定阈值 ($\mathrm{\DeltaAP_{50}}$ 和 $\mathrm{AP_{75}}$) 下的 AP,以及不同物体尺度下的 AP:小 ($\mathrm{AP}{S}$)、中 ($\mathbf{AP}{M}$) 和大 ($\mathbf{A}\mathbf{P}_{L}$)。此外,我们还通过报告参数量 (#Params.)、计算成本 (GFLOPs) 和端到端延迟来提供模型效率指标。延迟是在 NVIDIA T4 GPU 上使用 TensorRT FP16 测量的。

Table 1: Performance comparison of various real-time object detectors on COCO val2017.

$\star$ : NMS is tuned with a confidence threshold of 0.01.

5.2 COMPARISON WITH REAL-TIME DETECTORS

5.2 与实时检测器的比较

Table 1 provides a comprehensive comparison between D-FINE and various real-time object detectors on COCO val2017. D-FINE achieves an excellent balance between efficiency and accuracy across multiple metrics. Specifically, D-FINE-L attains an AP of $54.0\%$ with 31M parameters and 91 GFLOPs, maintaining a low latency of $8.07~\mathrm{ms}$. Additionally, D-FINE-X achieves an AP of $55.8\%$ with 62M parameters and 202 GFLOPs, operating with a latency of $12.89~\mathrm{ms}$.

表 1: 提供了 D-FINE 与各种实时目标检测器在 COCO val2017 上的全面比较。D-FINE 在多个指标上实现了效率与准确性的优秀平衡。具体而言,D-FINE-L 在 31M 参数和 91 GFLOPs 的情况下达到了 $54.0%$ 的 AP,同时保持了 $8.07~\mathrm{ms}$ 的低延迟。此外,D-FINE $\mathbf{\deltaX}$ 在 62M 参数和 202 GFLOPs 的情况下达到了 $55.8%$ 的 AP,运行延迟为 $12.89,\mathrm{ms}$。
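
As a quick consistency check, the latencies above can be related to the frame rates quoted in the abstract. The sketch assumes that FPS is simply the reciprocal of the end-to-end latency for one image at a time; the function name is hypothetical.

```python
def fps_from_latency_ms(latency_ms):
    """Frames per second for a given per-image latency in milliseconds."""
    return 1000.0 / latency_ms


fps_l = fps_from_latency_ms(8.07)   # D-FINE-L
fps_x = fps_from_latency_ms(12.89)  # D-FINE-X
```

Rounded to the nearest integer, these values agree with the 124 FPS and 78 FPS reported for D-FINE-L and D-FINE-X.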

As depicted in Figure 1, which shows scatter plots of latency vs. AP, parameter count vs. AP, and FLOPs vs. AP, D-FINE consistently outperforms other state-of-the-art models across all key dimensions. D-FINE-L achieves a higher AP ($54.0\%$) compared to YOLOv10-L ($53.2\%$), RT-DETR-R50 ($53.1\%$), and LW-DETR-X ($53.0\%$), while requiring fewer computational resources (91 GFLOPs vs. 120, 136, and 174). Similarly, D-FINE-X surpasses YOLOv10-X and RT-DETR-R101 by achieving superior performance ($55.8\%$ AP vs. $54.4\%$ and $54.3\%$) and demonstrating greater efficiency in terms of lower parameter count, GFLOPs, and latency.

如图 1 所示,图中展示了延迟与 AP、参数量与 AP 以及 FLOPs 与 AP 的散点图,D-FINE 在所有关键维度上始终优于其他最先进的模型。D-FINE-L 实现了更高的 AP $(54.0%)$,而 YOLOv10-L $(53.2%)$、RTDETR-R50 $(53.1%)$ 和 LW-DETR-X $(53.0%)$ 则需要更多的计算资源(91 GFLOPs 对比 120、136 和 174)。同样,D-FINE $\mathbf{\deltaX}$ 超越了 YOLOv10-X 和 RT-DETR-R101,实现了更高的性能 $(55.8% AP 对比 54.4% 和 54.3%)$,并在更低的参数量、GFLOPs 和延迟方面表现出更高的效率。

We further pretrain D-FINE and YOLOv10 on the Objects365 dataset (Shao et al., 2019) before fine-tuning them on COCO. After pretraining, both D-FINE-L and D-FINE-X exhibit significant performance improvements, achieving AP of $57.1\%$ and $59.3\%$, respectively. These enhancements enable them to outperform YOLOv10-L and YOLOv10-X by $3.1\%$ and $4.4\%$ AP, thereby positioning them as the top-performing models in this comparison. Moreover, following the pretraining protocol of YOLOv8 (Glenn., 2023), YOLOv10 is pretrained on Objects365 for 300 epochs. In contrast, D-FINE requires only 21 epochs to achieve its substantial performance gains. These findings corroborate the conclusions of LW-DETR (Chen et al., 2024), demonstrating that DETR-based models benefit substantia