Tasks, stability, architecture, and compute: Training more effective learned optimizers, and using them to train themselves

Luke Metz Google Research, Brain Team lmetz@google.com

Niru Maheswaranathan Google Research, Brain Team nirum@google.com

C. Daniel Freeman Google Research, Brain Team cdfreeman@google.com

Ben Poole Google Research, Brain Team pooleb@google.com

Jascha Sohl-Dickstein Google Research, Brain Team jaschasd@google.com

Abstract

Much as replacing hand-designed features with learned functions has revolutionized how we solve perceptual tasks, we believe learned algorithms will transform how we train models. In this work we focus on general-purpose learned optimizers capable of training a wide variety of problems with no user-specified hyperparameters. We introduce a new, neural-network-parameterized, hierarchical optimizer with access to additional features such as validation loss to enable automatic regularization. Most learned optimizers have been trained on only a single task or a small number of tasks. We train our optimizers on thousands of tasks, using orders of magnitude more compute, which yields optimizers that generalize better to unseen tasks. The learned optimizers not only perform well but also learn behaviors that are distinct from existing first-order optimizers. For instance, they generate update steps that have implicit regularization and adapt as the problem hyperparameters (e.g. batch size) or architecture (e.g. neural network width) change. Finally, these learned optimizers show evidence of being useful for out-of-distribution tasks such as training themselves from scratch.

1 Introduction

Much of the success of modern deep learning has been driven by a shift from hand-designed features carefully curated by human experts, to domain-agnostic methods that can learn features from large amounts of data. By leveraging large-scale datasets with flexible models, we are now able to rapidly learn powerful features for new problem settings that often generalize to novel tasks. While learned features outperform hand-designed features on numerous tasks [1–4], we continue to use hand-designed optimization algorithms (such as gradient descent, momentum, and so on) for training models.

These hand-designed update rules benefit from decades of optimization research but still require extensive expert supervision to be used effectively in machine learning. For example, they fail to flexibly adapt to new problem settings and require careful tuning of learning rate schedules and momentum timescales for different model architectures and datasets [5]. In addition, most do not leverage sources of information beyond the gradient, such as the validation loss. Because classic methods separate the optimization target (training loss) from the broader goal (generalization), they require the practitioner to tune regularization and/or data augmentation strategies more carefully.

To address these drawbacks, recent work on learned optimizers aims to replace hand-designed optimizers with a parametric optimizer, trained on a set of tasks, that can then be applied more generally. Recent work in this area has focused either on augmenting existing optimizers to adapt their own hyperparameters [6–8], or on developing more expressive learned optimizers to replace existing optimizers entirely [9–15]. These latter models take in problem information (such as the current gradient of the training loss) and iteratively update parameters. However, to date, learned optimizers have proven to be brittle and ineffective at generalizing across diverse sets of problems.

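Our work identifies fundamental barriers that have limited progress in learned optimizer research and addresses several of them to train effective optimizers. Section 3 describes how we address three of these barriers: outer-optimization, the distribution of training tasks, and the optimizer architecture.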

By addressing these barriers, we develop learned optimizers that exceed prior work in scale, robustness, and out-of-distribution generalization. As a final test, we show that the learned optimizer can be used to train new learned optimizers from scratch (analogous to “self-hosting” compilers [17]). We see this final accomplishment as analogous to the first time a compiler is complete enough that it can be used to compile itself.

2 Preliminaries

Training a learned optimizer is a bilevel optimization problem that contains two loops: an inner loop that applies the optimizer to solve a task, and an outer loop that iteratively updates the parameters of the learned optimizer [18]. We use the inner- and outer- prefixes throughout to be explicit about which optimization loop we are referring to. That is, the inner-loss refers to a target task’s loss function that we wish to optimize, and the outer-loss refers to a measure of the optimizer’s performance in training the target task (inner-task). Correspondingly, we refer to the optimizer parameters as outer-parameters, and the parameters that the optimizer is updating as inner-parameters. Outer-optimization refers to the act of finding outer-parameters that perform well under some outer-loss.

For a given inner-task, we apply the learned optimizer for some number of steps (unrolling the optimizer). Each outer-iteration requires such an unroll on a target task. Ideally, we would unroll each target task until some stopping criterion is reached, but this is computationally infeasible for even moderate-scale machine learning tasks. Short (truncated) unrolls are more computationally efficient, but suffer from truncation bias [19, 14]: the outer-loss surface computed using truncated unrolls is different from (and may have different minima than) the fully unrolled outer-loss.

3 Methods: Addressing the three barriers to learned optimizers

3.1 Outer-Optimization

To train the optimizer, we minimize an outer-loss that quantifies the performance of the optimizer. This is defined as the mean of the inner-loss computed on the inner-validation set over some number of unrolled steps, averaged over inner-tasks in the outer-training taskset. Although this outer-loss is differentiable, it is costly to compute the outer-gradient (which involves backpropagating through the unrolled optimization). In addition, the outer-loss surface is badly conditioned and extremely non-smooth [14], making it difficult to optimize.
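
One way to write this outer-loss in symbols, with notation introduced here for illustration rather than taken from the paper: $\theta$ are the outer-parameters, $w_t$ the inner-parameters after $t$ steps, $U_\theta$ the learned update, $T$ the unroll length, and $\mathcal{T}$ the outer-training taskset. The features fed to $U_\theta$ are abbreviated to the gradient alone.

```latex
\begin{aligned}
w_{t+1} &= w_t + U_\theta\big(\nabla_w \ell^{\mathrm{train}}_\tau(w_t)\big), \qquad t = 0, \dots, T-1,\\
L^{\mathrm{outer}}(\theta) &= \mathbb{E}_{\tau \sim \mathcal{T}}\Big[\frac{1}{T}\sum_{t=1}^{T} \ell^{\mathrm{valid}}_\tau(w_t)\Big].
\end{aligned}
```

Every $w_t$ depends on $\theta$ through all earlier applications of $U_\theta$. Computing $\nabla_\theta L^{\mathrm{outer}}$ by backpropagation therefore means differentiating through the whole unroll, which is the cost described above.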

We deal with these issues by using derivative-free optimization, specifically evolutionary strategies (ES) [20], to minimize the outer-loss, which obviates the need to compute derivatives through the unrolled optimization process. Previous work has used unrolled derivatives [9, 10, 14], and was thus limited to small numbers of unrolled steps (e.g. 20 in Andrychowicz et al. [9] and starting at 50 in Metz et al. [14]). Using ES, we are able to use considerably longer unrolls. Initial unroll lengths were chosen to balance the communication cost between parallel workers (when updating optimizer parameters) against the computational cost of unrolling on individual workers (when estimating the local gradient with ES). We start outer-training by sampling unroll lengths uniformly between 240 and 360 steps. When performance saturates, we continue training with Persistent Evolutionary Strategies (PES) [21]. PES provides an unbiased estimate of the outer-gradient over the entire inner-task, but at the cost of higher-variance gradients.
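
The following is a minimal sketch of the ES phase of this outer-training loop. It rests on several assumptions. The "learned optimizer" is reduced to gradient descent with a single learnable log step size. The inner-task is a hypothetical 1-D quadratic. One process plays the role of all workers, and PES is not shown. The sketch does keep three details from the text: unroll lengths drawn uniformly from 240 to 360 steps, Gaussian perturbations with standard deviation 0.01, and antithetic pairs that share one unroll length, so that their loss difference reflects only the perturbation.

```python
import math
import random
from typing import Callable, List, Sequence


def toy_outer_loss(theta: Sequence[float], unroll_steps: int,
                   w0: float = 1.0, curvature: float = 1.0) -> float:
    """Mean inner-loss over one unroll of a hypothetical stand-in optimizer.

    The "learned optimizer" is gradient descent whose log step size is the
    single outer-parameter theta[0]; the inner-task is 0.5 * curvature * w**2.
    """
    lr = math.exp(theta[0])
    w, total = w0, 0.0
    for _ in range(unroll_steps):
        w -= lr * curvature * w            # inner update using the inner gradient
        total += 0.5 * curvature * w * w   # accumulate inner-loss along the unroll
    return total / unroll_steps


def es_gradient(loss_fn: Callable[[List[float], int], float], theta: Sequence[float],
                rng: random.Random, sigma: float = 0.01, num_pairs: int = 8) -> List[float]:
    """Antithetic ES estimate of the gradient of the Gaussian-smoothed outer-loss."""
    grad = [0.0] * len(theta)
    for _ in range(num_pairs):
        unroll = rng.randint(240, 360)     # unroll length ~ Uniform{240, ..., 360}
        eps = [rng.gauss(0.0, 1.0) for _ in theta]
        plus = [t + sigma * e for t, e in zip(theta, eps)]
        minus = [t - sigma * e for t, e in zip(theta, eps)]
        # Both members of a pair share the unroll length (and, in general, the task).
        diff = loss_fn(plus, unroll) - loss_fn(minus, unroll)
        for i, e in enumerate(eps):
            grad[i] += diff * e / (2.0 * sigma * num_pairs)
    return grad


def outer_train(theta: Sequence[float], steps: int = 50,
                outer_lr: float = 2.0, seed: int = 0) -> List[float]:
    """Plain SGD on the outer-parameters, driven by ES gradient estimates."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(steps):
        g = es_gradient(toy_outer_loss, theta, rng)
        theta = [t - outer_lr * gi for t, gi in zip(theta, g)]
    return theta
```

Starting from `outer_train([math.log(0.01)])`, the log step size should move upward and the mean inner-loss should fall, because on this quadratic larger steps (below the stability limit) help. The sketch leaves out the hard part, which is replacing `toy_outer_loss` with an unroll of a real learned optimizer on a sampled task.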

ES and PES have an additional benefit, in that optimizing with ES smooths the underlying loss function. This smoothing helps stabilize outer-training [14]. We set the standard deviation of the Gaussian distribution used by the ES algorithm (which also controls how much the outer-loss is smoothed) to 0.01. To deal with the high variance of the ES estimate of the gradient, we use antithetic sampling and train in parallel using 1024 multi-core CPU workers. While using more workers increases training speed, we find that beyond 1024 workers the performance gains become sub-linear. For more details see Appendix B.
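
The smoothing and the antithetic estimator can be written out as follows. This is the standard ES formulation, not a transcription of Appendix B. Here $L$ is the outer-loss, $\theta$ the outer-parameters, $\sigma = 0.01$, and $N$ the number of antithetic pairs (a symbol introduced here).

```latex
\begin{aligned}
\tilde{L}(\theta) &= \mathbb{E}_{\epsilon \sim \mathcal{N}(0, I)}\big[L(\theta + \sigma\epsilon)\big],\\
\nabla_\theta \tilde{L}(\theta) &= \frac{1}{\sigma}\,\mathbb{E}_{\epsilon}\big[L(\theta + \sigma\epsilon)\,\epsilon\big]
\approx \frac{1}{2N\sigma}\sum_{i=1}^{N}\big[L(\theta + \sigma\epsilon_i) - L(\theta - \sigma\epsilon_i)\big]\,\epsilon_i .
\end{aligned}
```

ES therefore follows the gradient of the Gaussian-smoothed $\tilde{L}$, and $\sigma$ sets the width over which $L$ is averaged. Evaluating each perturbation in both directions cancels the constant and even-order Taylor terms of $L$ around $\theta$. This removes a large source of variance at the price of one extra unroll per sample.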

3.2 Task distributions

To train the optimizer, we need to define a set of inner-tasks to use for training. The choice of training tasks is critically important for two reasons: it determines the ability of the optimizer to outer-generalize (i.e. the learned optimizer’s performance on new tasks), and it determines the computational complexity of outer-training. For improved outer-generalization, we would like our inner-problems to be representative of tasks we care about. In this work, these are state-of-the-art machine learning models such as ResNets [22] or Transformers [23]. Unfortunately, directly utilizing these large-scale models is computationally infeasible, so we outer-train on proxy tasks for speed [24].

In order to outer-train a learned optimizer capable of generalizing to new optimization tasks, we use an outer-training task set consisting of around 6,000 tasks designed after Metz et al. [16]. These tasks include RNNs [25, 26], CNNs [27], masked autoregressive flows [28], fully connected networks, language modeling, variational autoencoders [29], simple 2D test functions, quadratic bowls, and more. For tasks that require them, we additionally sample a dataset, batch size, network architecture, and initialization scheme. To keep outer-training efficient, we ensure that all tasks take less than 100 milliseconds per training step. For each task that makes use of a dataset, we create four splits of the data to prevent leakage: training data, which we compute gradients on and use to update the inner-parameters; inner-validation data, which is used to compute validation losses used by the learned optimizer; outer-validation data, which is used to update the weights of the learned optimizer; and test data, which is used to test an already trained learned optimizer. Because loss values vary in magnitude across tasks, during outer-training we normalize each outer-loss value using the initial loss value and the best loss achieved by a baseline optimizer. Note that this normalization is not used during inner-training.
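
Both bookkeeping steps in this paragraph are simple to sketch. The normalization below assumes a linear map that sends the initial loss to 1 and the best baseline loss to 0. The text says only which two reference values are used, so the exact formula (and any clipping) is an assumption. The split fractions are hypothetical, and the split is contiguous, so a real pipeline would shuffle first.

```python
from typing import Dict, List, Sequence, Tuple, TypeVar

T = TypeVar("T")

SPLIT_NAMES = ("train", "inner_valid", "outer_valid", "test")


def four_way_split(examples: Sequence[T],
                   fractions: Tuple[float, ...] = (0.7, 0.1, 0.1, 0.1)) -> Dict[str, List[T]]:
    """Partition a dataset into four disjoint splits (fractions are hypothetical)."""
    n = len(examples)
    out: Dict[str, List[T]] = {}
    start = 0
    for i, (name, frac) in enumerate(zip(SPLIT_NAMES, fractions)):
        # The last split takes the remainder so every example is used exactly once.
        end = n if i == len(SPLIT_NAMES) - 1 else start + int(round(frac * n))
        out[name] = list(examples[start:end])
        start = end
    return out


def normalize_outer_loss(loss: float, initial_loss: float, best_baseline_loss: float) -> float:
    """Assumed form: 1.0 at the initial loss, 0.0 at the best baseline loss."""
    return (loss - best_baseline_loss) / (initial_loss - best_baseline_loss)
```

Under this assumed form, values below 0 mean the learned optimizer beat the best baseline on that task, which puts losses from very different tasks on a comparable scale before averaging.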

3.3 Optimizer architecture

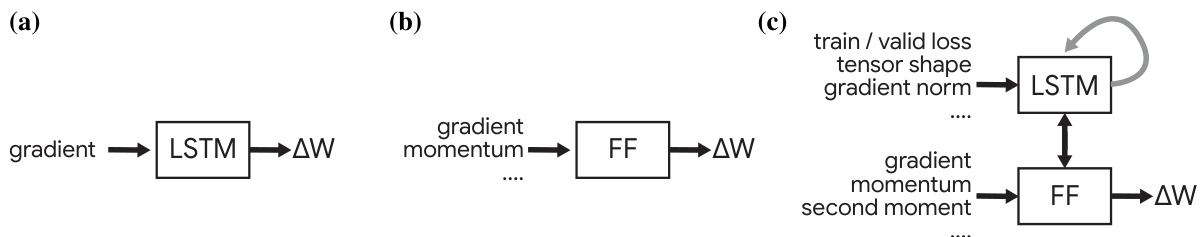

Designing a learned optimizer architecture requires balancing computational efficiency and expressivity. Past work in learned optimizers has shown that incorporating inductive biases based on existing optimization techniques such as momentum or second-moment accumulation leads to better performance [10, 14]. The optimizer we use in this work is a hierarchical optimizer similar to [10] (Figure 1). A per-tensor LSTM is run on features computed over each parameter tensor. This LSTM then forwards information to the other tensors’ LSTMs as well as to a per-parameter feed-forward neural network. The per-parameter feed-forward network additionally takes in information about gradients and parameter values, and outputs parameter updates. Additional outputs are aggregated and fed back into the per-tensor network. This information routing allows for communication across all components.
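
To make the routing concrete, here is a heavily simplified, hypothetical sketch in plain Python. It is not the paper's architecture. A tanh recurrent cell replaces each per-tensor LSTM, cross-tensor communication is reduced to averaging all tensors' states, features are not normalized, and the weights are random placeholders rather than trained outer-parameters. What the sketch keeps is the direction of information flow. Tensor summaries enter the per-tensor cell, and the cell's state conditions a per-parameter network that emits the update. An extra per-parameter output is then aggregated back to the tensor level for the next step.

```python
import math
import random
from typing import Dict, List, Tuple

Tensors = Dict[str, List[float]]


def tensor_summary(values: List[float], grads: List[float]) -> List[float]:
    """Per-tensor features: summary statistics of a tensor and its gradient."""
    n = len(values)
    g_mean = sum(grads) / n
    g_rms = math.sqrt(sum(g * g for g in grads) / n)
    v_rms = math.sqrt(sum(v * v for v in values) / n)
    return [g_mean, g_rms, v_rms, math.log(n)]  # log(size) as a crude shape feature


def _matvec(m: List[List[float]], x: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, x)) for row in m]


class ToyHierarchicalOptimizer:
    """Hypothetical, heavily simplified sketch of the routing in Figure 1(c)."""

    def __init__(self, state_dim: int = 4, hidden: int = 8, seed: int = 0):
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> List[List[float]]:
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.state_dim = state_dim
        # Cell input: own summary (4) + own state + mean state of all tensors
        # (cross-tensor sharing) + aggregated per-parameter feedback (1).
        self.cell_w = init(state_dim, 4 + 2 * state_dim + 1)
        # Per-parameter input: gradient, parameter value, per-tensor context.
        self.mlp_w1 = init(hidden, 2 + state_dim)
        # Per-parameter outputs: an update and an extra signal sent back upward.
        self.mlp_w2 = init(2, hidden)

    def init_state(self, params: Tensors) -> Tuple[Tensors, Dict[str, float]]:
        return {n: [0.0] * self.state_dim for n in params}, {n: 0.0 for n in params}

    def step(self, params: Tensors, grads: Tensors, states: Tensors,
             feedback: Dict[str, float]) -> Tuple[Tensors, Tensors, Dict[str, float]]:
        names = list(params)
        shared = [sum(states[n][i] for n in names) / len(names) for i in range(self.state_dim)]
        new_params, new_states, new_feedback = {}, {}, {}
        for n in names:
            x = tensor_summary(params[n], grads[n]) + states[n] + shared + [feedback[n]]
            s = [math.tanh(z) for z in _matvec(self.cell_w, x)]
            updated, extras = [], []
            for v, g in zip(params[n], grads[n]):
                h = [math.tanh(z) for z in _matvec(self.mlp_w1, [g, v] + s)]
                delta, extra = _matvec(self.mlp_w2, h)
                updated.append(v + delta)
                extras.append(extra)
            new_params[n], new_states[n] = updated, s
            # Extra per-parameter outputs are averaged and fed back to the tensor level.
            new_feedback[n] = sum(extras) / len(extras)
        return new_params, new_states, new_feedback
```

One step on a model with tensors `params` and gradients `grads` is `opt.step(params, grads, *opt.init_state(params))`. It returns the new parameters together with the recurrent state and feedback to pass into the next step.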

Figure 1: (a) The learned optimizer architecture proposed in Andrychowicz et al. [9], consisting of a per-parameter LSTM. (b) The learned optimizer architecture proposed in Metz et al. [14], consisting of a per-parameter fully connected (feed-forward, FF) neural network with additional input features. (c) The learned optimizer architecture proposed in this work, consisting of a per-tensor LSTM which exchanges information with a per-parameter feed-forward neural network (FF). The LSTMs associated with each tensor additionally share information with each other (shown in gray).

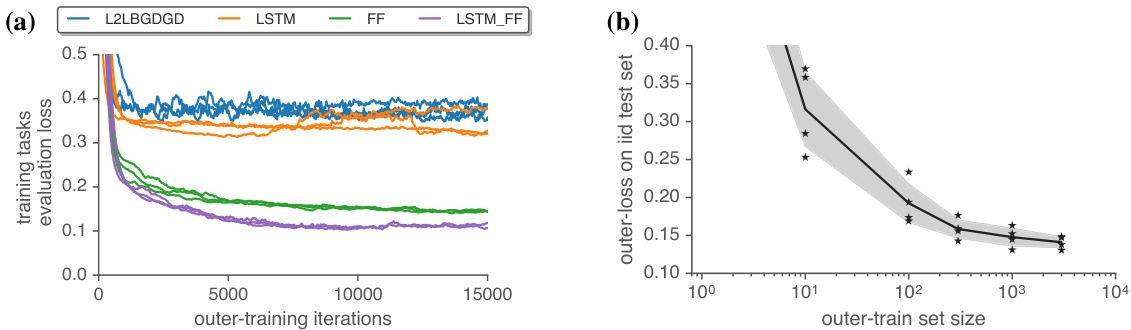

Figure 2: (a) Our proposed learned optimizer has greater sample efficiency than existing methods. On the x-axis we show outer-updates performed to outer-train each learned optimizer. On the y-axis we show outer-loss. Each point consists of using a single outer-parameter value to train 100 models for 10k inner-iterations, each with five random initializations. We show the average validation performance post-normalization averaged over all tasks, seeds, and inner-training steps. (b) Outer-generalization performance increases with an increasing number of outer-training tasks. We show the mean performance achieved after approximately 10,000 outer-training iterations (between 9,500 and 10,500), averaged over four random seeds, while varying the outer-training set size. We show each seed as a star, and the standard deviation as the shaded region. We find poor outer-generalization for smaller numbers of tasks. As we increase the number of outer-training tasks, performance becomes more consistent and lower loss values are achieved.

For per-parameter features we leverage effective inductive biases from hand-designed optimizers, and use a variety of features including the gradient, the parameter value, and momentum-like running averages of both. All features are normalized, in a fashion similar to that in RMSProp [30], or relative to the norm across the full tensor. For per-tensor features we use a variety of features built from summary statistics computed from the current tensor, the tensor’s gradient, and running average features such as momentum and second moments. We also include information about the tensor’s rank and shape. We also feed global features into the per-tensor LSTM, such as training loss and validation loss, normalized so as to have a relatively consistent scale across tasks. To compute a weight update, the per-parameter MLP outputs two values, $(a,b)$, which are used to update inner-parameters: $w^{t+1}=w^{t}+\exp(a)\,b$. See Appendix C for many more details.
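
The following is a minimal sketch of the per-parameter path. It assumes a single momentum-like running average of the gradient, whereas the text describes running averages of both the gradient and the parameter value, with details in Appendix C. The per-parameter MLP is replaced with a hypothetical fixed `toy_head`. The update follows $w^{t+1} = w^{t} + \exp(a)\,b$, applied elementwise.

```python
import math
from typing import List, Sequence, Tuple

Feature = Tuple[float, float, float]


def rms_normalize(xs: Sequence[float], eps: float = 1e-8) -> List[float]:
    """Divide a feature by its root-mean-square over the tensor (RMSProp-like)."""
    rms = math.sqrt(sum(x * x for x in xs) / len(xs))
    return [x / (rms + eps) for x in xs]


def per_parameter_features(grad: Sequence[float], param: Sequence[float],
                           momentum: Sequence[float], decay: float = 0.9):
    """Normalized gradient, parameter value and one momentum-like running average.

    Returns one feature tuple per parameter plus the updated momentum buffer.
    The single decay rate is an assumption made for this sketch.
    """
    momentum = [decay * m + (1.0 - decay) * g for m, g in zip(momentum, grad)]
    feats = list(zip(rms_normalize(grad), rms_normalize(param), rms_normalize(momentum)))
    return feats, momentum


def apply_update(param: Sequence[float], ab: Sequence[Tuple[float, float]]) -> List[float]:
    """w^{t+1} = w^t + exp(a) * b, elementwise."""
    return [w + math.exp(a) * b for w, (a, b) in zip(param, ab)]


def toy_head(feature: Feature) -> Tuple[float, float]:
    """Hypothetical stand-in for the per-parameter MLP: fixed a, b opposing the gradient."""
    g_norm, _, _ = feature
    return -3.0, -g_norm
```

Usage: with `param, grad, mom = [1.0, -2.0], [0.5, -1.0], [0.0, 0.0]`, calling `feats, mom = per_parameter_features(grad, param, mom)` and then `apply_update(param, [toy_head(f) for f in feats])` moves each parameter against its gradient. Splitting the output this way lets $a$ set the step magnitude on a log scale (always positive after exponentiation) while $b$ carries the direction. That is one plausible reason for the parameterization. The constant $a = -3$ in the sketch gives a step of about $0.05$ in normalized units.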

4 Results

4.1 Comparing learned optimizer architectures and training task set sizes

First, we show experiments comparing the performance of different learned optimizer architectures from the literature. We trained: a component-wise LSTM optimizer from Andrychowicz et al. [9] (L2LBGDGD), a modification of this LSTM with the decomposed direction and magnitude output from Metz et al. [14] (LSTM), the fully connected optimizer from Metz et al. [14] (FF), and the learned optimizer proposed in this work (§3.3) (LSTM_FF). As shown in Figure 2(a), the proposed architecture achieves the lowest outer-training loss and does so in the fewest outer-training steps. To the best of our knowledge, this is the first published comparison of different learned optimizer architectures on the same suite of tasks. Previous work only compared a proposed learned optimizer against hand-designed (baseline) optimizers.

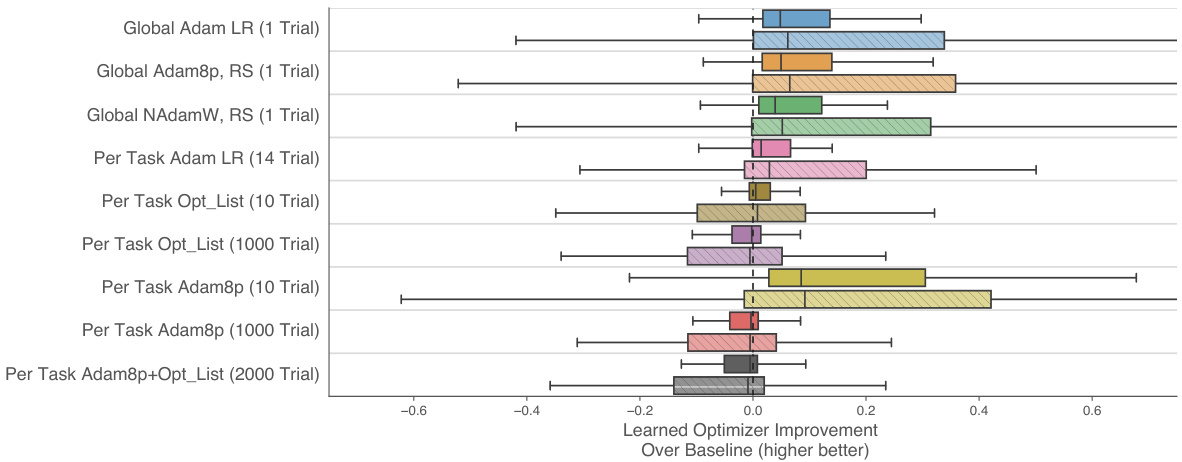

Figure 3: Learned optimizer performance as compared to a battery of different baselines. For each baseline, we show a box plot over 100 different tasks. We show both a training set (solid color), which the optimizer has seen, and a test set (hatched light color), which is sampled from the same distribution but never used for outer-training. Values to the right of the dashed line indicate the learned optimizer outperforming the corresponding baseline. The learned optimizer outperforms any single baseline optimizer with a fixed hyperparameter configuration (marked global, with a single trial). We also outperform per-task tuning of baselines when tuning is done over only a modest number of hyperparameter configurations (for instance, learning-rate-tuned Adam, which uses 14 trials). Note that the learned optimizer undergoes no per-task tuning and makes use of only one trial.

Next, we explored how increasing the number of inner-tasks used when training an optimizer affects final performance. To do this, we randomly sampled subsets of tasks from the full task set, while evaluating performance on a common held-out set of tasks. Figure 2(b) shows that increasing the number of tasks leads to large improvements.

4.2 Comparisons with hand-designed optimizers

We compare against three baseline optimizers. AdamLR is the Adam optimizer [31] with a tuned learning rate. Adam8p is a version of the Adam optimizer with eight tunable hyperparameters: learning rate, $\beta_{1}$, $\beta_{2}$, and $\epsilon$, plus additional $\ell_{1}$ and $\ell_{2}$ regularization penalties, and a learning rate schedule parameterized with a linear decay and an exponential decay. See Appendix D for more details. Our final baseline optimizer, called opt_list, consists of the NAdam optimizer [32] with “AdamW”-style weight decay [33], cosine learning rate schedules [34] and learning rate warmup (see Metz et al. [16] for more info). Instead of tuning these hyperparameters with a search procedure, however, we draw them from a sorted list of hyperparameters provided by [16] for increased sample efficiency.

Evaluation of optimizers, let alone learned optimizers, is difficult due to different tasks of interest, hyperparameter search strategies, and compute budgets [5, 35]. We structure our comparison by exploring two scenarios for how a machine learning practitioner might go about tuning the hyperparameters of an optimizer. First, we consider an “off-the-shelf” limit, where a practitioner performs a small number of optimizer evaluations using off-the-shelf methods (for instance, tuning learning rate only). This is typically done during exploration of a new machine learning model or dataset. Second, we consider a “finely tuned” limit, where a practitioner has a large compute budget devoted to tuning hyperparameters of a traditional optimizer for a particular problem of interest.

For the “off-the-shelf” limit, we consider the scenario where a practitioner has access to a limited number of optimization runs (trials), at most 10, for a particular problem. Thus, we first select a set of baseline hyperparameters using a single default value (denoted global in Fig 3) across all of the tasks. We use random search (RS) over 1000 different hyperparameter values to find the global value that performs best on average across all tasks.

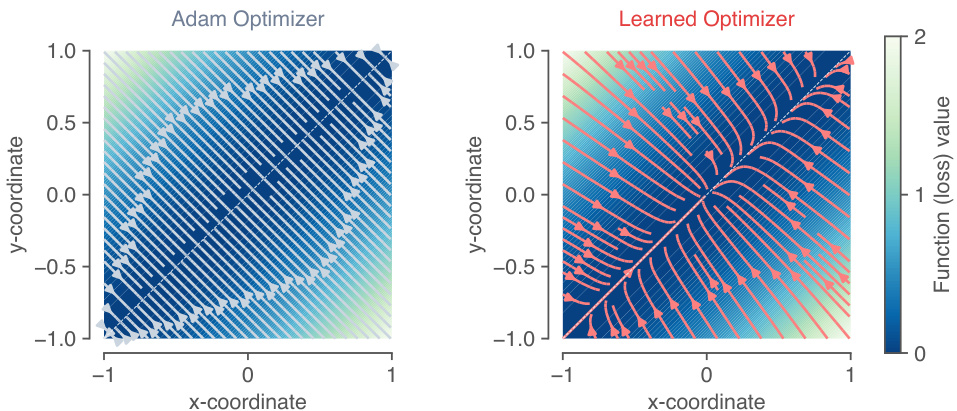

Figure 4: Implicit regularization in the learned optimizer. Panels show optimization trajectories over a 2D loss surface, $f(x,y)=\frac{1}{2}(x-y)^{2}$, that has a continuum of solutions along the diagonal. Left: Trajectories of the Adam optimizer go to the nearest point on the diagonal. Right: Trajectories of the learned optimizer go towards the diagonal, but also decay towards a single solution at the origin, as if there were an added regularization penalty on the magnitude of each parameter.

Practitioners often tune models with a small number of evaluations. As such, we include comparisons to learning-rate-tuned Adam tuned per task, the first 10 entries of opt_list, and 10 hyperparameter evaluations obtained from random search using the Adam8p hyperparameterization.

For the “finely tuned” limit, we consider task-specific hyperparameter tuning, where the hyperparameters for each baseline optimizer are selected individually for each task (denoted per-task in Fig 3).
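
The global and per-task protocols differ only in which axis the minimum is taken over. The sketch below assumes a hypothetical table `losses[h][t]` of normalized losses for hyperparameter setting `h` on task `t`. For the global baseline, the text fills the rows with 1000 random-search settings.

```python
from typing import List, Sequence


def select_global(losses: Sequence[Sequence[float]]) -> int:
    """Index of the single setting with the best mean loss across all tasks."""
    means = [sum(row) / len(row) for row in losses]
    return min(range(len(means)), key=means.__getitem__)


def select_per_task(losses: Sequence[Sequence[float]]) -> List[int]:
    """For each task, the index of the setting that is best on that task alone."""
    num_settings, num_tasks = len(losses), len(losses[0])
    return [min(range(num_settings), key=lambda h: losses[h][t]) for t in range(num_tasks)]
```

On the same table, the per-task choice can only match or beat the global one on every task. It needs a full sweep for each new task, however, whereas the global setting is chosen once and reused.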

In each row of Fig 3 we plot the distribution over tasks of the difference in performance between each baseline optimizer and the learned optimizer. First, we note that the distribution is broad, indicating that for some tasks the learned optimizer is much better, whereas for others, the baseline optimizer(s) are better. On average, we see small but consistent performance improvements over baseline optimizers, especially in the “off-the-shelf” scenario. We attribute this to the diverse set of tasks used for training the learned optimizer.

4.3 Understanding optimizer behavior

To better understand the behavior of the learned optimizer, we performed probe experiments where we compared trajectories of the learned optimizer on simple loss surfaces against baseline optimizers. The goal of these experiments was to generate insight into what the learned optimizer has learned.

In machine learning, many problems benefit from including some kind of regularization penalty, such as an $\ell_{2}$ penalty on the weights or parameters of a model. We explored whether the learned optimizer (which was trained to minimize validation loss) had any implicit regularization beyond what was specified as part of the loss. To test this, we ran optimizers on a simple 2D loss surface with a continuum of solutions along the diagonal: $f(x,y)=\frac{1}{2}(x-y)^{2}$. Although any point along the $x=y$ diagonal is a global minimum, we wanted to see whether the learned optimizer would prefer any particular solution within that set.

Figure 4 shows the resulting training trajectories on the 2D loss surface from many starting points. For a baseline optimizer (left), the trajectories find the nearest point on the diagonal. However, the learned optimizer has learned some implicit regularization: it pushes the parameters towards the solution with the smallest norm, $(0,0)$.
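
Why a baseline lands on the nearest diagonal point can be checked directly. The gradient of $f$ is $(x-y,\,-(x-y))$, which is orthogonal to $(1,1)$. Gradient descent therefore never changes $x+y$, and it converges to $\big(\tfrac{x_0+y_0}{2}, \tfrac{x_0+y_0}{2}\big)$, the orthogonal projection of the starting point onto the diagonal. The same conservation holds for Adam on this loss: the two gradient components always have equal magnitude and opposite sign, so Adam's per-coordinate updates also cancel in $x+y$. The sketch contrasts plain gradient descent with gradient descent plus an explicit $\ell_2$ penalty, which mimics the pull toward the origin seen for the learned optimizer. The penalty strength and step size are arbitrary choices, and this is an analogy, not a model of what the learned optimizer computes.

```python
from typing import Tuple


def grad_f(x: float, y: float) -> Tuple[float, float]:
    """Gradient of f(x, y) = 0.5 * (x - y)**2."""
    d = x - y
    return d, -d


def run_gd(x: float, y: float, lr: float = 0.1, steps: int = 2000,
           l2: float = 0.0) -> Tuple[float, float]:
    """Gradient descent on f, optionally adding an explicit penalty 0.5 * l2 * (x**2 + y**2)."""
    for _ in range(steps):
        gx, gy = grad_f(x, y)
        x, y = x - lr * (gx + l2 * x), y - lr * (gy + l2 * y)
    return x, y
```

From $(2, 0)$, `run_gd(2.0, 0.0)` ends at approximately $(1, 1)$, the nearest diagonal point. With the penalty, `run_gd(2.0, 0.0, l2=0.05)` ends near $(0, 0)$.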

4.4 Generalization along different task axes

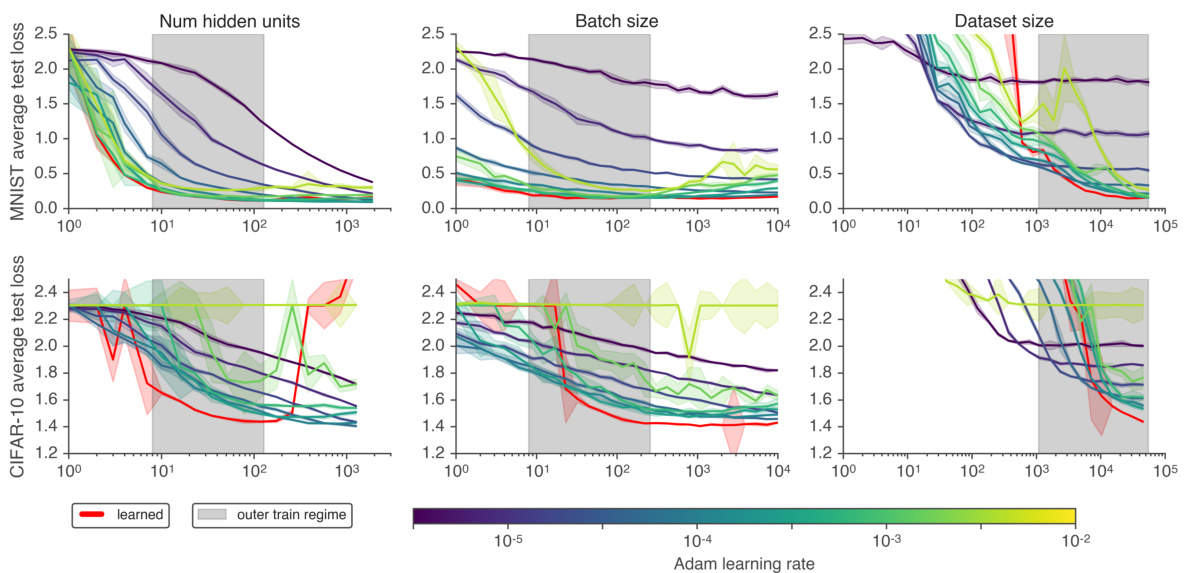

Next, we asked whether the learned optimizer could train machine learning models that differ in their architectural and training hyperparameters. To test this type of generalization, we trained fully connected neural networks on CIFAR-10 and MNIST, and swept three model hyperparameters: the number of hidden units per layer (network width), the batch size, and the number of training examples (dataset size, formed by subsampling the full dataset). For each sweep, we compare the learned optimizer to a baseline optimizer, Adam, over a grid of eight different learning rates logarithmically spaced from $10^{-5.5}$ to $10^{-2}$.
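
The baseline grid is easy to reproduce. This sketch assumes half-order-of-magnitude spacing, as stated in the Figure 5 caption, which yields exactly eight values between $10^{-5.5}$ and $10^{-2}$.

```python
from typing import List


def log_spaced_grid(lo_exp: float = -5.5, hi_exp: float = -2.0, step: float = 0.5) -> List[float]:
    """Learning rates 10**e for e = lo_exp, lo_exp + step, ..., hi_exp."""
    n = int(round((hi_exp - lo_exp) / step)) + 1
    return [10.0 ** (lo_exp + i * step) for i in range(n)]
```

`log_spaced_grid()` returns eight rates, from about 3.2e-6 up to 0.01.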

Figure 5: We show outer-generalization of the learned optimizer in a controlled setting by varying hyperparameters of the task being trained. We vary parameters around two types of models. Top row: a two-hidden-layer, 32-unit fully connected network trained on MNIST with batch size 128. Bottom row: a two-hidden-layer, 64-unit fully connected network trained on CIFAR-10 with batch size 128. The columns vary, in turn, the width of the underlying network, the batch size, and the dataset size. Each point on each curve shows the mean test loss averaged over 10k inner steps. We show median performance over five random task initializations. Error bars denote one standard deviation away from the median. Color denotes different learning rates for Adam, log-spaced every half order of magnitude, with purple representing $10^{-6}$ and yellow $10^{-2}$. We find that the learned optimizer is able to generalize outside of the outer-training distribution (indicated by the shaded patch) in some cases.

The results of these experiments are in Fig. 5. As we vary the number of hidden units (left column) or batch size (middle column), the learned optimizer generalizes outside of the range of values used during outer-training (indicated by the shaded regions). In addition, the learned optimizer matches the performance of the best learning-rate-tuned Adam optimizer. On CIFAR-10, as we move further away from the outer-training task distribution, the learned optimizer diverges. For dataset size (right column), we find that the learned optimizer is more sensitive to the amount of data present (performance drops off more quickly as the dataset size decreases). These experiments demonstrate that the learned optimizer is capable of adapting to some aspects of target tasks that differ from its outer-training distribution, without additional tuning.

4.5 Generalization to large-scale problems

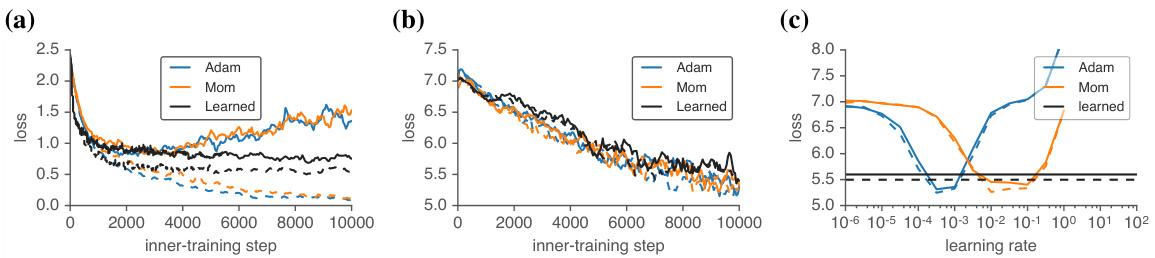

We test the learned optimizer on large-scale machine learning tasks. We use two ResNet V2 [36] architectures: a 14-layer residual network trained on CIFAR-10 [37], and a 35-layer residual network trained on ImageNet [38] resized to 64x64. We train both networks with the learned optimizer and compare the performance to learning-rate-tuned Adam and Momentum (Fig. 6); details are in Appendix D. For CIFAR-10, we find that the learned optimizer achieves performance similar to the baselines but does not overfit later in inner-training. For ImageNet, we find that the learned optimizer performs slightly worse.

Note that our baselines only include learning rate tuning. More specialized hyperparameter configurations designed specifically for these tasks, such as learning rate schedules and data augmentation strategies, will perform better. An extensive study of learned optimizer performance on a wide range of state-of-the-art models is a subject for future work.

Figure 6: Learned optimizers are able to optimize ResNet models. (a) Inner-learning curves using the learned optimizer to train a small ResNet on CIFAR-10, compared to learning-rate-tuned Adam and Momentum. Solid lines denote test performance; dashed lines denote training performance. (b) Inner-learning curves using the learned optimizer to train a small ResNet on 64x64 resized ImageNet, compared to learning-rate-tuned Adam and Momentum. (c) Performance of Adam and Momentum, averaged between 9.5k and 10k inner-iterations, as a function of learning rate on the same task as in (b). Despite requiring no tuning, the learned optimizer performs similarly to these baselines after tuning.

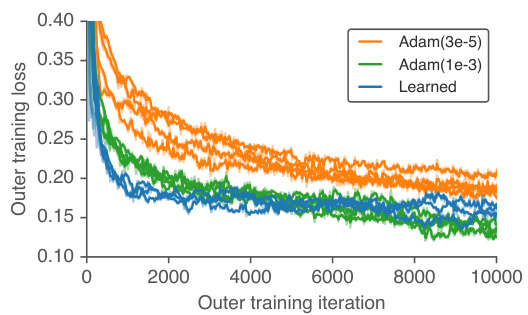

Figure 7: The learned optimizers can be used to train themselves about as efficiently as hand-designed methods. The x-axis shows the number of weight updates applied to the learned optimizer; the y-axis shows the outer-loss. Each point consists of inner-training 100 models, each with five random initializations, for 10k inner-iterations. We show the average validation performance, post-normalization, averaged over all tasks, seeds, and inner-training steps. Each line represents a different randomly initialized learned optimizer. In orange we show Adam with a learning rate of 3e-5, the same value used to train the optimizers in this work. In green we show Adam with a learning rate of 1e-3, the learning rate that performed best in this 10k outer-iteration regime. In blue we show our learned optimizer.

4.6 Learned optimizers training themselves

Finally, we performed an experiment to test whether a learned optimizer can be used to train new learned optimizers. Figure 8 shows that this “self-optimized” training curve is similar to the training curve obtained with our hand-tuned training setup (using the Adam optimizer). We interpret this as evidence of unexpectedly effective generalization, as training a learned optimizer is unlike anything in the set of tasks used to train the optimizer. We show 10k outer-training iterations, matching the number of inner-iterations used when outer-training the learned optimizer. If outer-training is continued beyond 10k iterations, learned optimizer performance worsens (see Appendix G), suggesting that more inner-iterations are needed when outer-training.

5 Discussion

In this work, we train a learned optimizer using a larger and more diverse set of training tasks, better optimization techniques, an improved optimizer architecture, and more compute than previous work. The resulting optimizer outperforms hand-designed optimizers constrained to a single set of hyperparameters, and performs comparably to hand-designed optimizers after a modest hyperparameter search. Further, it demonstrates some ability to generalize to optimization tasks unlike those it was trained on. Most dramatically, it demonstrates an ability to train itself.

However, the learned optimizer we develop is not without limitations. Below we summarize areas for future work.

Generalization: While it performs well on tasks like those in TaskSet, we do not yet fully understand its outer-generalization capabilities. It shows a promising ability to generalize to out-of-distribution problems, such as training itself and ResNet models, but it does not outperform competing algorithms in all settings on simple “out-of-distribution” optimization tasks, like those in Figure 5.

Optimization / Compute: Currently it takes significant compute to train a learned optimizer at this scale, resulting in a nontrivial carbon footprint. Ideally, training can be run once and the resulting learned optimizer used broadly, as is the case with BERT [39].

Learned optimizer architectures: We have shown there is considerable improvement to be had by incorporating better inductive biases both in outer-optimization speed and capacity. Improving these architectures, and leveraging additional features will hopefully lead to more performant learned optimizers. Additionally, the current optimizer, while compute efficient, is memory inefficient requiring $>5\times$ more storage per-parameter than Adam. We do not believe this poses a fundamental barrier, but modifications similar to those in Shazeer and Stern [40] will be needed to train larger models.
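For an n by m weight matrix, Adam keeps n·m second-moment entries. The factoring idea in Shazeer and Stern [40] keeps only the row and column sums and reconstructs a rank-1 estimate. The sketch below illustrates that idea on plain Python lists; it is not the modification the authors would make to this learned optimizer (whose state layout is not specified here), and the function names are hypothetical.

```python
def factor_second_moments(v):
    """Compress a non-negative n x m matrix into n row sums and m column sums."""
    row_sums = [sum(row) for row in v]
    col_sums = [sum(col) for col in zip(*v)]
    return row_sums, col_sums


def reconstruct_second_moments(row_sums, col_sums):
    """Rank-1 estimate: v_hat[i][j] = row_sums[i] * col_sums[j] / sum(row_sums)."""
    total = sum(row_sums)
    return [[r * c / total for c in col_sums] for r in row_sums]


# A rank-1 matrix of squared gradients (outer product of [1, 2] and [1, 2, 3]).
v = [[1.0, 2.0, 3.0],
     [2.0, 4.0, 6.0]]
rows, cols = factor_second_moments(v)
print(len(rows) + len(cols), "stored values instead of", len(v) * len(v[0]))
print(reconstruct_second_moments(rows, cols))
```

The estimate is exact for rank-1 matrices like this example; in general it is an approximation, traded for n + m stored values instead of n·m.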

Outer-training task distribution: We have shown that training on larger tasksets leads to better generalization. We have not studied which tasks should be included so as to aid outer-generalization.

Acknowledgements

We would like to thank Alex Alemi, Eric Jang, Diogo Moitinho de Almeida, Timothy Nguyen, Alec Radford, Ruoxi Sun, Paul Vicol and Wojciech Zaremba for discussion related to this work, as well as the Brain Team for providing a supportive research environment. We would also like to thank the authors of NumPy [41–43], Seaborn [44], and Matplotlib [45].

Broader Impact

Machine learning training and inference are major energy consumers, and training models likely dominates most individual machine learning researchers’ carbon emissions [46]. By meta-learning optimizers, we hope to amortize the cost of training ML models, and thus reduce the environmental impact of training a single model. We hope to achieve this both by reducing the need for extensive hyperparameter tuning as models are developed, and by making training more efficient for the best hyperparameters.

With this work, and more generally with meta-learning algorithms, we hope to give researchers access to more performant, easier-to-use optimizers for their problems. Improving the technology for doing machine learning will accelerate its impact, for better or worse. We believe machine learning technologies will be beneficial to humanity on the whole, and thus by improving the ability to optimize models we are moving toward this goal.

Our optimizer is trained on a mixture of different tasks and datasets. Our goal is to construct an outer-training distribution from which the optimizer learns inductive biases relevant to the machine learning tasks of interest. However, we currently do not have good methods to decipher exactly what types of inductive biases a learned optimizer might learn. Thus, future work is needed to explore what kinds of information are absorbed by learned optimizers. In the meantime, care should be taken when employing learned optimizers, as the inductive biases of a particular learned optimizer may not be appropriate for an end user’s goals.

In fact, it has been suggested that learned optimizers could be a source of alignment drift in the development of Artificial General Intelligence (AGI) – leading to AGI that does not perform as its creators hoped [47]. While we do not feel that is an immediate danger from the present work, it is a consideration that should be kept in mind as learned optimizers become increasingly powerful.

A Extended Related Work

We categorize progress in learned optimizers into three categories: parameterizations, data distributions, and outer-training methodology. We review these previous efforts below.

A.1 Parameterizations

Broadly, authors attack the problem of learned optimization at a high level using different parameterizations. We review these different choices below.

A.1.1 Controller-based parameterization

One class of learned optimizers parameterizes some function, usually a neural network, that returns hyperparameters for an existing hand-designed method. These methods impose a strong inductive bias: all inner-learning must be done via manipulation of existing methods. By enforcing a constrained structure, these methods limit the types of learning rules that can be expressed. This limitation does inject strong priors into the model, which both makes outer-optimization easier and produces better outer-generalization. These methods often additionally make use of features derived from the model currently being trained, such as loss values, the variance of function evaluations, and the variance of weight matrices.

There are a number of existing works that explore different types of features and architectures. Daniel et al. [6] explore using a simple, linear policy that maps from hand-designed features to a log learning rate, which is then used with SGD, RMSProp, or momentum. Xu et al. [7] learn a small LSTM network that takes the current training loss as input and predicts a learning rate for SGD. Xu et al. [8] use an LSTM or an MLP with features computed during training and output a scalar that is multiplied by the previous learning rate.
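A minimal sketch of the controller-based setup, in the style of the linear policy of Daniel et al. [6]: a linear map from features to a log learning rate that then drives plain SGD. The feature choice, the weight values, and the function names are hypothetical, and how the controller's weights are outer-trained is not shown.

```python
import math


def log_lr_from_features(features, weights, bias):
    """Linear controller: hand-designed features -> log learning rate."""
    return bias + sum(w * f for w, f in zip(weights, features))


def controlled_sgd_step(params, grads, features, weights, bias):
    """One SGD step whose learning rate is set by the linear controller."""
    lr = math.exp(log_lr_from_features(features, weights, bias))
    return [p - lr * g for p, g in zip(params, grads)]


# Hypothetical features: current training loss and gradient variance.
features = [0.8, 0.05]
weights = [0.0, 0.0]       # outer-parameters; zeros give a constant learning rate
bias = math.log(0.1)       # so this controller always picks lr = 0.1
new_params = controlled_sgd_step([1.0, -2.0], [0.5, 1.0], features, weights, bias)
```

Predicting the log learning rate keeps the learning rate positive and lets a linear policy move it across orders of magnitude.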

A.1.2 Symbolic parameterizations

Existing learning rules are often symbolic in nature. All first-order optimizers used today are symbolic and expressible by a small collection of mathematical operations. Bello et al. [48] take inspiration from this and parameterize optimizers as collections of mathematical primitives. This parameterization can lead to interpretable optimizers. For example, Bello et al. [48] show common subpatterns discovered based on multiplying the sign of the gradient by the sign of the momentum value. Not all computations lend themselves to symbolic regression, however. Finding symbolic formulas to solve increasingly difficult tasks starts to resemble program synthesis, which is notoriously difficult.
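The sign-agreement pattern can be written as two tiny symbolic rules. The forms below follow the spirit of the AddSign and PowerSign rules reported by Bello et al. [48], written from our reading of that work and without their decay schedules, so they should be taken as illustrative. A caller would subtract lr * update from each parameter.

```python
import math


def sign(x):
    """Return -1, 0, or 1."""
    return (x > 0) - (x < 0)


def addsign_update(grad, momentum, alpha=1.0):
    """(alpha + sign(g) * sign(m)) * g: larger step when g and m agree."""
    return (alpha + sign(grad) * sign(momentum)) * grad


def powersign_update(grad, momentum, base=math.e):
    """base ** (sign(g) * sign(m)) * g: scale by e when signs agree, by 1/e otherwise."""
    return base ** (sign(grad) * sign(momentum)) * grad


# Signs agree: AddSign doubles the step. Signs disagree: AddSign cancels it.
agree = addsign_update(0.5, 0.2)
disagree = addsign_update(0.5, -0.2)
```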

A.1.3 Continuous parameterizations

The last family of parameterizations is based on continuously parameterized function approximations. Older work often makes use of simple or linear combinations of features, while more recent work makes use of neural networks. There are a few classes of update rule parameterizations.

Bengio et al. [49] propose 7-parameter and 16-parameter update rules parameterized by mixing various biologically inspired learning signals. Runarsson and Jonsson [50] use a simple continuous parameterization that operates on error signals and produces changes in inner-parameters.

Recently, there has been renewed interest in learned optimizers, in particular using neural network parameterizations of the learning rules. Andrychowicz et al. [9] make use of an LSTM [25], possibly with global average pooling to enable concepts like $\ell_{2}$ gradient clipping to be implemented [51]. They also explore parameterizations similar to Neural Turing Machines [52], specifically designed for low-rank memory updates, so that they can learn algorithms like LBFGS [53, 54]. In contrast, Metz et al. [14] make use of a per-parameter MLP instead of an LSTM.
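A minimal sketch of a per-parameter MLP learned optimizer in the spirit of Metz et al. [14]: one small network, with outer-parameters theta, is applied to each scalar independently, so the same weights work for any number of inner-parameters. The features (gradient, momentum, weight), the sizes, and the tanh nonlinearity are our assumptions, not the architecture of [14].

```python
import math
import random


def init_mlp(n_features, n_hidden, rng):
    """Weights of one tiny MLP that is shared by every scalar parameter."""
    w1 = [[rng.gauss(0.0, 0.1) for _ in range(n_features)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
    return w1, b1, w2


def mlp_step_size(theta, features):
    """Map one parameter's feature vector to a scalar update."""
    w1, b1, w2 = theta
    hidden = [math.tanh(sum(w * f for w, f in zip(row, features)) + b)
              for row, b in zip(w1, b1)]
    return sum(w * h for w, h in zip(w2, hidden))


def per_parameter_update(theta, params, grads, momenta):
    """Apply the same MLP independently to every scalar's (gradient, momentum, weight)."""
    return [p - mlp_step_size(theta, [g, m, p])
            for p, g, m in zip(params, grads, momenta)]


theta = init_mlp(n_features=3, n_hidden=4, rng=random.Random(0))
params, grads, momenta = [0.5, -1.0, 2.0], [0.1, -0.3, 0.2], [0.05, -0.2, 0.1]
new_params = per_parameter_update(theta, params, grads, momenta)
```

Because nothing is shared across parameters, permuting the inputs simply permutes the outputs; this is what makes the update independent of the number of parameters, and also why no computation can be shared.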

One critical design choice when designing neural network parameterized learned optimizers is the input features. When training neural network models, it is critical that the inputs have similar scales to aid optimization. Andrychowicz et al. [9] train on raw gradients, rescaled by decomposing each scalar into a sign and a magnitude before it is fed into the LSTM. Lv et al. [11] use the gradient and momentum normalized by the rolling average of squared gradients (similar to the rescaling done by Adam). Wichrowska et al. [10] also make use of momentum, but at multiple timescales, normalized in a similar way. In addition to these momentum terms, Metz et al. [14] use other features, such as the weight value, and additionally apply a normalization based on the second moment of the features.
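The two scale-fixing tricks can be sketched as follows. log_sign_preprocess follows, as we recall it, the log-magnitude and sign preprocessing in the appendix of Andrychowicz et al. [9], with the cutoff p = 10 used there. second_moment_normalize is one plausible reading of the second-moment normalization of Metz et al. [14]; taking the mean over a whole tensor is our assumption.

```python
import math


def log_sign_preprocess(x, p=10.0):
    """Map one gradient scalar to two features of comparable scale."""
    if abs(x) >= math.exp(-p):
        return math.log(abs(x)) / p, math.copysign(1.0, x)
    # Tiny values: a constant flag plus a rescaled copy of x.
    return -1.0, math.exp(p) * x


def second_moment_normalize(values, eps=1e-8):
    """Divide a feature by its root-mean-square over all entries of a tensor."""
    rms = math.sqrt(sum(v * v for v in values) / len(values) + eps)
    return [v / rms for v in values]


print(log_sign_preprocess(1.0))      # gradient of magnitude 1
print(log_sign_preprocess(-1e-3))    # small negative gradient
print(second_moment_normalize([3.0, 4.0]))
```

Both transforms map gradients spanning many orders of magnitude into features of roughly unit scale, which is what the learned optimizer's network needs in order to train well.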

In [9, 11, 12], all methods employ a per-parameter update. The updates are independent of the number of parameters¹. While expressive, this is expensive, as no computation can be shared. Wichrowska et al. [10] improve upon this by additionally using per-layer LSTMs and a global LSTM.

When designing learned optimizer architectures, there are a number of design decisions to keep in mind. One must balance the compute cost of the learned optimizer against its expressibility. Often this pushes models to be considerably smaller than those used in supervised learning. Wichrowska et al. [10], for example, make use of an 8-hidden-unit LSTM per parameter. The selection of features to feed into the learned optimizer is also critical. While deep learning traditionally involves learning every feature, this learning comes at the cost of increased compute, which is often not feasible.
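To make the compute trade-off concrete, here is a back-of-the-envelope count for an LSTM applied to every parameter, using the 8-hidden-unit size mentioned above. The input size of 4 features is a hypothetical choice, and the count ignores the elementwise gate nonlinearities.

```python
def lstm_cell_macs(input_dim, hidden):
    """Multiply-adds for one LSTM step: 4 gates, each mapping (input + hidden) -> hidden."""
    return 4 * hidden * (input_dim + hidden)


def lstm_cell_weights(input_dim, hidden):
    """Weights plus biases of the same cell."""
    return 4 * hidden * (input_dim + hidden + 1)


# An 8-hidden-unit LSTM run per parameter, with a hypothetical 4 input features.
macs_per_param = lstm_cell_macs(input_dim=4, hidden=8)   # 384
print(macs_per_param * 1_000_000, "multiply-adds per step for a 1M-parameter model")
```

By comparison, Adam's per-parameter update is on the order of ten elementwise operations, so even this tiny LSTM multiplies the optimizer's cost by more than an order of magnitude.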

A.2 Data distribution of tasks

There are no agreed-upon standards for defining datasets for training learned optimizers. The community is ad hoc, training on whatever dataset is available or whatever best suits the goals (training a particular model, creating more general optimizers). Constructing large distributions of tasks is labor intensive and thus not often done.

Metz et al. [14] draw inspiration from the few-shot learning literature and construct 10-way classification problems by sampling different classes from ImageNet.
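A minimal sketch of sampling one N-way classification task from a large label set. The class ids are hypothetical stand-ins for ImageNet classes. Only the label remapping is shown; selecting the images for each class, and any preprocessing used in [14], are omitted.

```python
import random


def sample_n_way_task(class_names, n_way=10, rng=None):
    """Choose n_way distinct classes and relabel them 0 .. n_way - 1."""
    rng = rng or random.Random()
    chosen = rng.sample(class_names, n_way)
    return {name: label for label, name in enumerate(chosen)}


# Hypothetical stand-ins for the 1000 ImageNet class ids.
classes = [f"class_{i:04d}" for i in range(1000)]
task = sample_n_way_task(classes, rng=random.Random(0))
```

Each call yields a new task, so a single dataset supplies a large distribution of related classification problems.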

Wichrowska et al. [10] leverage a large distribution of synthetic tasks. These tasks are designed to represent different types of loss surfaces that might be encountered in practice.
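A minimal sketch of a distribution over synthetic loss surfaces. The two families (randomly conditioned diagonal quadratics and the Rosenbrock function) and the 50/50 mixing are our illustrative choices, not a reproduction of the task set in [10].

```python
import math
import random


def random_quadratic(dim, rng):
    """Diagonal quadratic 0.5 * sum(h_i * x_i^2) with log-uniform curvatures h_i."""
    h = [math.exp(rng.uniform(-3.0, 3.0)) for _ in range(dim)]

    def loss(x):
        return 0.5 * sum(hi * xi * xi for hi, xi in zip(h, x))

    return loss


def rosenbrock(x):
    """Classic narrow curved valley; minimum 0 at x = (1, ..., 1)."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))


def sample_synthetic_task(rng, dim=5):
    """Draw one loss function from a small mixture of synthetic problem families."""
    if rng.random() < 0.5:
        return random_quadratic(dim, rng)
    return rosenbrock


rng = random.Random(0)
tasks = [sample_synthetic_task(rng) for _ in range(4)]
```

Spreading the curvatures over several orders of magnitude varies the conditioning the optimizer must handle, while Rosenbrock adds a curved valley that a quadratic cannot express.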

TaskSet [16] is a dataset of tasks specifically designed for learned optimizer research. We use this dataset throughout our work.

There is a trade-off between performance on the tasks and the ability to outer-train. Selecting the types of problems we want to train on is often not enough: outer-training on the closest task possible will neither produce the best optimizer nor even converge. Training on distributions with increased variation smooths the outer-loss surface and makes exploration simpler. This mirrors phenomena found in RL [55, 56].

A.3 Outer-Optimization methods

The outer-optimization problem consists of finding a particular set of weights, or configuration of a learned optimizer. A number of different strategies have been proposed.

A.3.1 Hyperparameter optimization

One of the most common outer-learning methods is hyperparameter optimization. In the context of learned optimizers, this can be seen as finding optimizer hyperparameters, e.g. the learning rate, for a particular task instance. Numerous methods exist to do this, ranging from Bayesian hyperparameter optimization [57] to grid search to genetic algorithms. See Golovin et al. [58] for a more complete description.
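The simplest of these methods is a grid search over log-spaced learning rates, in the spirit of the learning-rate sweeps used for the baselines in this paper. The toy below tunes SGD's learning rate on a one-dimensional quadratic; the objective, the grid, and the function names are hypothetical.

```python
import math


def final_loss_sgd_quadratic(lr, curvature=4.0, x0=1.0, steps=100):
    """Run SGD on f(x) = 0.5 * c * x^2 and return the final loss (inf if it diverges)."""
    x = x0
    for _ in range(steps):
        x -= lr * curvature * x
        if abs(x) > 1e6:
            return math.inf
    return 0.5 * curvature * x * x


def grid_search(candidates, evaluate):
    """Evaluate every candidate and return (best_candidate, best_score)."""
    return min(((c, evaluate(c)) for c in candidates), key=lambda pair: pair[1])


# Log-spaced learning rates from 1e-5 to 1, two points per decade.
learning_rates = [10 ** (k / 2) for k in range(-10, 1)]
best_lr, best_loss = grid_search(learning_rates, final_loss_sgd_quadratic)
```

Here every candidate requires a full training run and the search covers a single scalar; the outer-problems of learned optimizers differ from this in the ways described next.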

The outer-learning problems encountered with learned optimizers are different in that the evaluation function is often an expectation over some distribution. Additionally, the number of outer-parameters is often far larger than the few hyperparameters found in hyperparameter search. Nevertheless, we include this here to show the similarity to learned optimizer research.

A.3.2 Reinforcement learning

Learned optimizers can naturally be cast as a sequential decision process. The state of the system consists of the inner-parameter values, the action space is the steps taken, and the reward is achieving a low loss in the future. A number of works have taken this viewpoint. Li and Malik [59, 60] make use of the guided policy search algorithm. Xu et al. [8] use PPO (Schulman et al. [61]), and Daniel et al. [6] make use of Relative Entropy Policy Search [62]. The exact algorithm used is a function of the underlying parameterization.
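A minimal sketch of this framing on a hypothetical toy quadratic: the state is the inner-parameters, the action is the step added to them, and the reward is the negative loss after the step. The class and its interface are our own, not taken from any of the cited works; an RL algorithm such as those cited would replace the fixed gradient-descent policy with a learned one.

```python
class OptimizationEnv:
    """Learned optimization as a sequential decision process on a toy quadratic.

    state  = current inner-parameters (observed together with their gradient)
    action = the update step added to the inner-parameters
    reward = negative loss after the step
    """

    def __init__(self, curvatures, x0, horizon):
        self.curvatures = list(curvatures)
        self.x0 = list(x0)
        self.horizon = horizon
        self.reset()

    def loss(self, x):
        return 0.5 * sum(c * xi * xi for c, xi in zip(self.curvatures, x))

    def observe(self):
        grad = [c * xi for c, xi in zip(self.curvatures, self.x)]
        return tuple(self.x), grad

    def reset(self):
        self.x, self.t = list(self.x0), 0
        return self.observe()

    def step(self, action):
        self.x = [xi + a for xi, a in zip(self.x, action)]
        self.t += 1
        return self.observe(), -self.loss(self.x), self.t >= self.horizon


# A fixed hand-designed "policy": gradient descent with step size 0.1.
env = OptimizationEnv(curvatures=[1.0, 4.0], x0=[1.0, 1.0], horizon=20)
obs, done, episode_return = env.reset(), False, 0.0
while not done:
    _, grad = obs
    obs, reward, done = env.step([-0.1 * g for g in grad])
    episode_return += reward
```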

A.3.3 Neural Architecture Search Style

Instead of learning the policy directly, Bello et al. [48] make use of reinforcement learning (PPO [61]) to learn a controller that produces the symbolic learned optimizer. This is distinct from §A.3.2, as it does not leverage the sequential nature of the inner problem. Instead, it treats the environment as a bandit problem.

A.3.4 Backpropagation / Gradient-based

Gradient-based methods leverage local perturbations in parameter space. Computing derivatives through learning procedures has been explored in the context of hyperparameter optimization in [63–65]. Andrychowicz et al. [9] were the first to make use of gradient-based learning for this application.

To train a learned optimizer, ideally one would compute the derivative of the entire training run with respect to the optimizer parameters. This is often referred to as unrolling: the entire training procedure is unrolled into one large graph, and reverse-mode automatic differentiation is run on it. Not only is this often too expensive in practice, but the resulting loss surface can also be poorly conditioned [14]. As such, approximations are often made.
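For a single scalar outer-parameter, the derivative of a whole training run can be written out by hand. The sketch below unrolls 50 steps of SGD on a hypothetical one-dimensional quadratic and carries d x_t / d lr forward through every step. For one scalar, this forward-mode computation gives the same derivative that reverse-mode differentiation of the unrolled graph would, without storing the graph.

```python
def unrolled_loss_and_lr_grad(lr, curvature=2.0, x0=1.0, steps=50):
    """Final loss of an SGD run on f(x) = 0.5 * c * x^2 and its derivative w.r.t. lr.

    dx carries d x_t / d lr through every step of the run (forward-mode
    differentiation of the whole unrolled computation).
    """
    x, dx = x0, 0.0
    for _ in range(steps):
        # x_{t+1} = x_t - lr * c * x_t, differentiated with the product rule.
        x, dx = x - lr * curvature * x, dx - curvature * x - lr * curvature * dx
    loss = 0.5 * curvature * x * x
    return loss, curvature * x * dx


loss, grad = unrolled_loss_and_lr_grad(0.1)
# Check against a central finite difference of the whole training run.
h = 1e-6
fd = (unrolled_loss_and_lr_grad(0.1 + h)[0] - unrolled_loss_and_lr_grad(0.1 - h)[0]) / (2 * h)
```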

One common family of approximations is based on truncated backpropagation through time. The core idea is to break long unrolled computations into shorter sequences, so that errors are never propagated back through the entire unrolled computation graph [66, 67]. This approximation is used widely in neural network parameterized learned optimizers [9–11, 14]. Unlike in language modeling, truncated backprop has been shown to lead to dramatically worse solutions for meta-learning applications [19, 14].
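On a hypothetical one-dimensional quadratic trained with SGD, the sketch below resets the tangent d x_t / d lr at every truncation boundary, which is the forward-mode analogue of truncated backpropagation; only the derivative of the final loss is shown. In this toy, the truncated derivative works out to exactly truncation / steps times the true one (a factor of 0.1 for five-step truncations of a 50-step run), a simple instance of the bias that truncation introduces.

```python
def truncated_lr_grad(lr, truncation, curvature=2.0, x0=1.0, steps=50):
    """d(final loss)/d(lr) when derivatives are cut at every truncation boundary.

    The tangent dx is reset to zero at the start of each truncation, so the
    state entering a truncation is treated as a constant (truncated backprop).
    """
    x, dx = x0, 0.0
    for t in range(steps):
        if t % truncation == 0:
            dx = 0.0
        x, dx = x - lr * curvature * x, dx - curvature * x - lr * curvature * dx
    return curvature * x * dx


full = truncated_lr_grad(0.1, truncation=50)   # one truncation: the exact derivative
short = truncated_lr_grad(0.1, truncation=5)   # ten truncations of five steps each
```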

A second family of approximations involves first-order gradient calculations. Andrychowicz et al. [9] use the first-order gradient calculation, whereas subsequent work [10] does not and instead computes the full gradient. The trade-offs between these two gradient estimators have been discussed in the few-shot learning literature in the context of MAML / Reptile [68, 69].
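The difference between the two estimators can be seen on a hypothetical one-dimensional quadratic trained with SGD: the first-order version drops the dependence of each inner gradient on earlier updates (the Hessian term), while the full version keeps it. This only illustrates the distinction; it is not how [9] or [10] implement their estimators.

```python
def lr_grad(lr, first_order, curvature=2.0, x0=1.0, steps=50):
    """d(final loss)/d(lr) for SGD on f(x) = 0.5 * c * x^2, full or first-order."""
    x, dx = x0, 0.0
    for _ in range(steps):
        grad = curvature * x
        # Full: the inner gradient depends on lr through x, d(grad)/d(lr) = c * dx
        # (a Hessian-vector product). First-order: treat the gradient as a constant.
        dgrad = 0.0 if first_order else curvature * dx
        x, dx = x - lr * grad, dx - grad - lr * dgrad
    return curvature * x * dx


full = lr_grad(0.1, first_order=False)
first = lr_grad(0.1, first_order=True)
```

In this toy the first-order estimate has the right sign but a magnitude thousands of times larger, because it ignores how a larger learning rate also shrinks the later gradients.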

Computing gradients through iterative, non-linear dynamics has been shown to produce chaotic behavior. Pearlmutter [70] and Maclaurin et al. [65] showed that performance after multiple steps of unrolled optimization is highly sensitive to the learning rate. Metz et al. [14] show this issue for neural network parameterized learned optimizers and propose a solution based on variational optimization and multiple gradient estimators.

Despite these improvements, many considerations must be taken into account when doing gradient-based training.

A.3.5 Evolutionary Strategies

An alternative way to estimate gradients is to use black-box methods such as Evolutionary Strategies [71, 20, 72–74]. These methods are memory efficient, requiring no storage of intermediate states, but can suffer from high variance. For learned optimizer optimization, however, these methods can result in lower-variance gradient estimators [14]. Hybrid approaches that leverage both gradients and ES, such as Guided ES [75], have also been proposed for meta-optimization. This work leverages one of the simplest forms of evolutionary strategies, described in [74], which uses a fixed standard deviation.
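A minimal sketch of ES with a fixed standard deviation. The antithetic (mirrored) sampling of eps and -eps, the value of sigma, and the function names are our assumptions; see [74] for the method itself. Only evaluations of the objective are needed, which is why no intermediate states have to be stored.

```python
import random


def es_gradient(f, theta, sigma=0.1, num_pairs=1000, rng=None):
    """Antithetic ES estimate of the gradient of E[f(theta + sigma * eps)], eps ~ N(0, I)."""
    rng = rng or random.Random(0)
    grad = [0.0] * len(theta)
    for _ in range(num_pairs):
        eps = [rng.gauss(0.0, 1.0) for _ in theta]
        f_plus = f([t + sigma * e for t, e in zip(theta, eps)])
        f_minus = f([t - sigma * e for t, e in zip(theta, eps)])
        scale = (f_plus - f_minus) / (2.0 * sigma * num_pairs)
        grad = [g + scale * e for g, e in zip(grad, eps)]
    return grad


def sphere(x):
    return sum(xi * xi for xi in x)


# The true gradient of sum(x^2) at (1, -2) is (2, -4).
estimate = es_gradient(sphere, [1.0, -2.0], num_pairs=4000)
```

Each pair costs two objective evaluations; in the learned-optimizer setting each evaluation is an inner-training unroll with perturbed outer-parameters.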

B Outer Optimization Details

In this work, as with Metz et al. [12], we use asynchronous, batched training. Each task has a different complexity and thus produces outer-gradient estimates at a different rate. We use asynchronous minibatched training because synchronous training with these heterogeneous workloads would be too slow and wasteful. We tie the outer batch size to the number of workers. To prevent stale gradients, we additionally throw away all outer-gradient estimates computed from weights more than five outer-iterations behind the current weights.
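The staleness rule can be sketched on the outer-trainer side as below. Tagging each estimate with the outer-iteration of the weights it was computed from, and averaging exactly batch_size fresh estimates, are our simplifications; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class OuterGradient:
    weights_step: int      # outer-iteration of the weights the worker used
    values: List[float]


def try_make_outer_batch(incoming: List[OuterGradient], current_step: int,
                         batch_size: int, max_staleness: int = 5) -> Optional[List[float]]:
    """Average batch_size fresh estimates, discarding ones computed on stale weights."""
    fresh = [g for g in incoming if current_step - g.weights_step <= max_staleness]
    if len(fresh) < batch_size:
        return None  # wait for more workers to report
    batch = fresh[:batch_size]
    return [sum(vals) / batch_size for vals in zip(*(g.values for g in batch))]


incoming = [OuterGradient(10, [1.0, 2.0]), OuterGradient(4, [9.0, 9.0]),
            OuterGradient(9, [3.0, 4.0])]
update = try_make_outer_batch(incoming, current_step=10, batch_size=2)
```

The estimate computed at step 4 is six outer-iterations old and is dropped, so the update averages only the two fresh estimates.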

We optimize all models with Adam. We sweep learning rates between 3e-5 and 3e-3 for all experiments. We find that the optimal learning rate is very sensitive and changes depending on how long outer-training runs. We have made preliminary explorations into learning rate schedules but have not yet been able to improve on a constant schedule. For all outer-training experiments, we always run more than one random seed: due to the relatively small number of units and the biased gradient estimators, performance depends on the random seed. For all experiments we use gradient clipping of 0.1 applied to each weight independently. Without this clipping, no training occurs. This surprised us, as our gradient estimator is evolutionary strategies, which will not typically have exploding gradients. Upon further investigation, however, we found that the outer-gradient variance is much larger without this clipping.
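A sketch of per-weight clipping followed by a standard Adam step on the outer-parameters. Reading "applied to each weight independently" as elementwise clipping of the outer-gradient is our interpretation; the learning rate is taken from the swept range, and the function names are hypothetical.

```python
import math


def clip_per_weight(grad, limit=0.1):
    """Clip every outer-gradient entry independently to [-limit, limit]."""
    return [max(-limit, min(limit, g)) for g in grad]


def adam_step(params, grad, state, lr, b1=0.9, b2=0.999, eps=1e-8):
    """Standard Adam update on flat lists; state is (step, first moment, second moment)."""
    t, m, v = state
    t += 1
    m = [b1 * mi + (1 - b1) * g for mi, g in zip(m, grad)]
    v = [b2 * vi + (1 - b2) * g * g for vi, g in zip(v, grad)]
    new_params = []
    for p, mi, vi in zip(params, m, v):
        m_hat = mi / (1 - b1 ** t)
        v_hat = vi / (1 - b2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
    return new_params, (t, m, v)


outer_params = [1.0, -1.0, 0.5]
state = (0, [0.0] * 3, [0.0] * 3)
raw_outer_grad = [5.0, -0.02, -3.0]           # e.g. a noisy ES estimate
clipped = clip_per_weight(raw_outer_grad)      # [0.1, -0.02, -0.1]
outer_params, state = adam_step(outer_params, clipped, state, lr=3e-5)
```

Clipping bounds the influence of any single noisy entry before Adam's moment estimates see it, consistent with the observation that clipping mainly reduces outer-gradient variance.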

When computing outer-gradients, we follow [14] and compute an outer-loss based on multiple minibatches of data; in our work we use 5. Note that inner-training always uses a single minibatch of inner-training data, as well as a single batch of inner-validation data when used.

We first train with unrolls of length 240-360 over a maximum of 10k inner-steps. While training, we logged 10k-length unrolls from 100 tasks sampled from the outer-training distribution and saved outer-parameters every hour. We also monitor performance across all outer-learning rates and all seeds on the outer-training distribution. When training plateaus, we manually look through these evaluations and select a candidate set of optimizers to train further with an increasing truncation schedule. Not all optimizers fine-tune in the same way despite having the same performance on the outer-training data, so selecting more than one is critical. At this point we are unsure where this phenomenon comes from.
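A sketch of how one inner-training run of at most 10k steps can be cut into consecutive 240-360 step unrolls, each of which yields one outer-gradient estimate. Drawing the lengths uniformly is our assumption, since the text gives only the range; the function name is hypothetical.

```python
import random


def sample_truncations(max_inner_steps=10_000, min_len=240, max_len=360, rng=None):
    """Split one inner-training run into consecutive unrolls of random length."""
    rng = rng or random.Random()
    truncations, start = [], 0
    while start < max_inner_steps:
        length = rng.randint(min_len, max_len)   # inclusive of both ends
        end = min(start + length, max_inner_steps)
        truncations.append((start, end))
        start = end
    return truncations


segments = sample_truncations(rng=random.Random(0))
```

Randomizing the lengths keeps the truncation boundaries of different workers from lining up at the same inner-steps.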

Next, we fine-tune these models in an unbiased fashion. We explored two methods. The first is based on an increasing truncation schedule: we tested linearly increasing the truncation length from 300 to 10k steps over the course of 30k or 10k steps, and found that the faster increase (10k steps) performs best. Second, we explored fine-tuning with Persisted Evolutionary Strategies, an unbiased gradient estimator [21]. We found this achieved similar final performance in half the time. When fine-tuning, we also make use of different learning rates. We find that higher learning rates make progress faster but can be unstable, in that performance varies as a function of outer-training step. Additionally, the best learning rate depends on the learning rate used in the first training phase. Before fine-tuning, we ‘warm up’ the Adam internal rolling statistics. While this might not be strictly required, it ensures that there is no decrease in performance early in outer-training. This can be done by simply setting the outer-learning rate to zero for the first 300 outer-iterations.
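Both fine-tuning ingredients are simple schedules. In the sketch below, measuring the 10k-step ramp in outer-iterations is our reading of the text, and the function names are hypothetical. During warm-up, an Adam step with learning rate zero still updates its moment estimates while leaving the outer-parameters unchanged.

```python
def truncation_length(outer_step, start=300, end=10_000, ramp_steps=10_000):
    """Truncation length grown linearly from start to end over ramp_steps outer-steps."""
    frac = min(outer_step / ramp_steps, 1.0)
    return round(start + frac * (end - start))


def outer_learning_rate(outer_step, base_lr, warmup_steps=300):
    """Zero learning rate while Adam's rolling statistics warm up, then base_lr."""
    return 0.0 if outer_step < warmup_steps else base_lr
```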

C Learned Optimizer Architecture Details

In this section we describe the learned optimizer architecture in detail. For ease of understanding, we show a mix of Python-based pseudo-code and textual descriptions rather than mathematical expressions. Finally, for clarity, we describe our optimizer as a series of stateless, pure functions.

We used this architecture for all of our experiments except Fig. 2b, which used an older version of the architecture with additional features that were dropped from the final version.

C.1 High level structure of the optimizer

The learned optimizer has two main components: a function that maps from some set of inputs, a state, and parameters to some new state and new parameters.

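```python
from __future__ import annotations  # Inputs, State, and Params are defined below

from typing import Tuple


class Optimizer:
    def next_state(self, inputs: Inputs, state: State,
                   parameters: Params) -> Tuple[State, Params]:
        """Map (inputs, state, parameters) to a new state and new parameters."""
        raise NotImplementedError
```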

And a function to produce an initial state from the given inner-parameters.

```python
# Also a method of the Optimizer class.
def initial_state(self, params: Params) -> State:
    """Produce an initial state from the given inner-parameters."""
    raise NotImplementedError
```

Parameters are stored as a dictionary of different tensors keyed by name.

For convenience, we also define gradients to be the same type as Params:

```python
from typing import Any, Dict, Text

Tensor = Any  # placeholder for the framework's array type
Params = Dict[Text, Tensor]
Grads = Params
```

The state consists of multiple values that we will discuss in detail further. For now, however, we list the full state with high level comments.

```python
from __future__ import annotations  # the component state types are defined elsewhere

from typing import List, NamedTuple


class State(NamedTuple):
    # Current inner-training iteration.
    training_step: int
    # The statistics for the rolling averages.
    rolling_features: RollingFeatureState
    # Hidden state of the LSTM.
    lstm_hidden_state: LSTMHiddenState
    # Activations passed from the MLP to the LSTM; each has shape (from_mlp_size,).
    from_mlp: List[Tensor]
    # Activations from the LSTM passed to the LSTM; shape (from_lstm_size,).
    from_lstm: Tensor
    # Rolling statistics of the train loss value.
    train_loss_accum: LossAccumState
    # Rolling statistics of the valid loss value.
    valid_loss_accum: LossAccumState
    # State to manage inner-gradient clipping.
    dynamic_clip: RollingClipState
    # Parameter values from the nearest 100 steps in the past.
```

Both from_lstm_size and from_mlp_size are hyperparameters of the learned optimizer that control how much information is sent from the LSTM and from the MLP, respectively.

The input to the next_state function consists of inner-gradients computed on an inner-training batch of data, the corresponding training loss, and, optionally, a validation loss, which is passed in every 10 iterations. For computational efficiency, we do not pass in validation data at every step.

```python
import collections

Inputs = collections.namedtuple("Inputs", [
    "inner_grads",  # Grads: gradient from the task; same type as the parameters
    "train_loss",   # float: training loss computed from a mini-batch
    "valid_loss",   # Optional[float]: validation loss from a mini-batch of validation data
])
```

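Each task specifies a function that samples a parameter initialization, as well as a function that produces the optimizer inputs (gradients, training loss, and optional validation loss) for a given set of parameters.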

```python
from typing import Optional, Tuple

class Task:
  def initial_params(self) -> Params:
    ...

  def inputs_for_params(self, params: Params) -> Tuple[
      Grads,             # inner-training gradients
      float,             # inner-training loss
      Optional[float]]:  # optional inner-training validation loss
    ...
```

For outer-training, each task also includes a second loss function, which computes the task's loss on the outer-validation split of the data. Note that this split is different from the validation data used by the previous function.

Inner training / application of the learned optimizer looks like:
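A minimal sketch of such a loop is given below. It assumes only the interfaces defined above and is not the authors' listing. QuadraticTask, MomentumOptimizer, and inner_train are hypothetical names. A hand-designed momentum rule stands in for the learned optimizer, and parameters are dicts of floats rather than tensors. As described earlier, the validation loss is passed only every 10 steps.

```python
from collections import namedtuple
from typing import Dict, Optional, Tuple

Params = Dict[str, float]  # toy stand-in: one float per named "tensor"
Grads = Params
Inputs = namedtuple("Inputs", ["inner_grads", "train_loss", "valid_loss"])


class QuadraticTask:
    """Hypothetical toy task: minimize sum_k (p[k] - target[k])**2."""

    def __init__(self, target: Params):
        self.target = target

    def initial_params(self) -> Params:
        return {k: 0.0 for k in self.target}

    def loss(self, params: Params) -> float:
        return sum((params[k] - t) ** 2 for k, t in self.target.items())

    def inputs_for_params(
            self, params: Params) -> Tuple[Grads, float, Optional[float]]:
        grads = {k: 2.0 * (params[k] - t) for k, t in self.target.items()}
        # The toy reuses the training loss as its "validation" loss; a real
        # task would evaluate a held-out batch here.
        return grads, self.loss(params), self.loss(params)


class MomentumOptimizer:
    """Hand-designed stand-in exposing the same interface as Optimizer."""

    def __init__(self, lr: float = 0.1, beta: float = 0.5):
        self.lr, self.beta = lr, beta

    def initial_state(self, params: Params) -> Params:
        return {k: 0.0 for k in params}  # one momentum buffer per parameter

    def next_state(self, inputs: Inputs, state: Params,
                   parameters: Params) -> Tuple[Params, Params]:
        new_state = {k: self.beta * state[k] + g
                     for k, g in inputs.inner_grads.items()}
        new_params = {k: parameters[k] - self.lr * new_state[k]
                      for k in parameters}
        return new_state, new_params


def inner_train(task, opt, num_steps: int = 100, valid_every: int = 10):
    """Applies opt to task; returns final params and #steps given a valid loss."""
    params = task.initial_params()
    state = opt.initial_state(params)
    num_valid = 0
    for step in range(num_steps):
        grads, train_loss, valid_loss = task.inputs_for_params(params)
        # Only pass validation information every valid_every steps.
        if step % valid_every != 0:
            valid_loss = None
        else:
            num_valid += 1
        inputs = Inputs(inner_grads=grads, train_loss=train_loss,
                        valid_loss=valid_loss)
        state, params = opt.next_state(inputs, state, params)
    return params, num_valid


task = QuadraticTask({"w": 3.0, "b": -1.0})
final_params, num_valid = inner_train(task, MomentumOptimizer())
print(final_params, num_valid)
```

Swapping MomentumOptimizer for a learned optimizer leaves the loop unchanged, because the loop only touches initial_state and next_state.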

Computing the outer objective and outer-gradients from inner-initialization looks like the following:
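The following is a hedged sketch of computing an outer objective and outer-gradient from an inner-initialization. It rests on three assumptions. First, the outer objective is the mean outer-validation loss over a short unroll. Second, the toy "learned optimizer" is a one-parameter rule, p - exp(log_lr) * grad. Third, the outer-gradient is estimated with antithetic evolution strategies, a black-box estimator swapped in so that the example needs no automatic differentiation. outer_objective and es_outer_grad are hypothetical names.

```python
import math
import random


def outer_objective(theta, num_steps=20, train_target=3.0, valid_target=2.9):
    """Mean outer-validation loss along one inner-training unroll.

    theta holds the outer-parameters of a toy update rule (here just a log
    learning rate). The inner problem is min_p (p - train_target)**2, and the
    outer-validation split is modeled as a shifted target (an assumption).
    """
    lr = math.exp(theta["log_lr"])
    p = 0.0  # inner-initialization
    total = 0.0
    for _ in range(num_steps):
        grad = 2.0 * (p - train_target)
        p = p - lr * grad  # toy update standing in for the learned optimizer
        total += (p - valid_target) ** 2
    return total / num_steps


def es_outer_grad(theta, sigma=0.01, num_pairs=8, seed=0):
    """Antithetic evolution-strategies estimate of the outer-gradient."""
    rng = random.Random(seed)
    grad = {k: 0.0 for k in theta}
    for _ in range(num_pairs):
        eps = {k: rng.gauss(0.0, 1.0) for k in theta}
        plus = {k: v + sigma * eps[k] for k, v in theta.items()}
        minus = {k: v - sigma * eps[k] for k, v in theta.items()}
        diff = outer_objective(plus) - outer_objective(minus)
        for k in theta:
            grad[k] += diff * eps[k] / (2.0 * sigma * num_pairs)
    return grad


theta = {"log_lr": math.log(0.01)}
initial = outer_objective(theta)
for outer_step in range(100):
    g = es_outer_grad(theta, seed=outer_step)
    theta = {k: v - 0.1 * g[k] for k, v in theta.items()}  # outer SGD step
print(initial, outer_objective(theta), math.exp(theta["log_lr"]))
```

Because theta is one-dimensional here, every antithetic pair contributes an estimate with the sign of the true gradient, up to O(sigma^2) terms. The outer loop therefore raises the learning rate from its small starting value.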

C.2 Utilities / components

We first describe the individual components and utilities, and then describe the full update function.

C.2.1 Rolling Features

These are moving averages of the gradients and of their second moments, computed similarly to Adam and RMSProp. The rolling state consists of tensors containing momentum values (ms) and second-moment values (rms):

```python
import collections

RollingFeaturesState = collections.namedtuple("RollingFeaturesState", [
    "ms",   # Dict[Text, Tensor]: rolling momentum (first-moment) values
    "rms",  # Dict[Text, Tensor]: rolling second-moment values
])
```

The Text keys are parameter names. Each maps to a tensor whose shape matches the corresponding inner-parameter, with an additional trailing axis that tracks multiple decay values. While it is possible to outer-learn these decay values, we fix them at 0.5, 0.9, 0.99, 0.999, and 0.9999.
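As a rough guide to what these fixed decays capture, a standard rule of thumb for exponential moving averages is that decay $\beta$ averages over a window of about $1/(1-\beta)$ recent steps. Under that rule, the five accumulators span timescales from about 2 to about $10^4$ inner steps:

$$
\tau(\beta) \approx \frac{1}{1-\beta}, \qquad
\tau(0.5) = 2,\; \tau(0.9) = 10,\; \tau(0.99) = 100,\; \tau(0.999) = 10^{3},\; \tau(0.9999) = 10^{4}.
$$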

To update these values, we construct a helper class.

```python
import tensorflow as tf

class RollingState:
  def __init__(self, decay_values):
    # e.g. (0.5, 0.9, 0.99, 0.999, 0.9999)
    self.decay_values = decay_values
```

We define an initial state, which is simply zeros:

```python
  def initial_state(self, params: Params) -> RollingFeaturesState:
    n_dims = len(self.decay_values)
    # One zero accumulator per decay value, stacked along a new trailing axis.
    ms = {k: tf.zeros(list(v.shape) + [n_dims]) for k, v in params.items()}
    rms = {k: tf.zeros(list(v.shape) + [n_dims]) for k, v in params.items()}
    return RollingFeaturesState(ms=ms, rms=rms)
```

To update these values, we follow a procedure similar to the RMSProp update equations:

```python
  def update_state(self, state: RollingFeaturesState,
                   grads: Grads) -> RollingFeaturesState:
    # Decay values broadcast against the trailing (decay) axis of each accumulator.
    decays = tf.constant(self.decay_values, dtype=tf.float32)
    ret_ms, ret_rms = {}, {}
    for k, g in grads.items():
      g = g[..., None]  # append an axis so g broadcasts over the decay axis
      ret_ms[k] = state.ms[k] * decays + (1. - decays) * g
      ret_rms[k] = state.rms[k] * decays + (1. - decays) * tf.square(g)
    return RollingFeaturesState(ms=ret_ms, rms=ret_rms)
```
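For intuition, here is a dependency-free sketch of the same update for a single scalar parameter. It is an assumption made so that the example runs without TensorFlow, and rolling_update is a hypothetical name. Each accumulator follows $m \leftarrow \beta m + (1-\beta) g$ and $v \leftarrow \beta v + (1-\beta) g^2$ with its own decay $\beta$.

```python
DECAYS = (0.5, 0.9, 0.99, 0.999, 0.9999)


def rolling_update(ms, rms, g, decays=DECAYS):
    """One rolling-feature step for a single scalar gradient g.

    ms and rms hold one accumulator per decay value, playing the role of
    the extra trailing axis in the tensor version.
    """
    new_ms = [d * m + (1.0 - d) * g for d, m in zip(decays, ms)]
    new_rms = [d * v + (1.0 - d) * g * g for d, v in zip(decays, rms)]
    return new_ms, new_rms


# Start from the zero initial state and feed a constant gradient of 1.0.
ms, rms = [0.0] * len(DECAYS), [0.0] * len(DECAYS)
for _ in range(10):
    ms, rms = rolling_update(ms, rms, 1.0)

# After n steps of a constant gradient, each average equals 1 - decay**n:
# the fast averages are near 1.0 while the slow ones are still near 0.
print([round(m, 4) for m in ms])
```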

C.2.2 LossAccum

This component is how loss information enters the learned optimizer. Loss values have no predetermined scale and can span many orders of magnitude. We must therefore standardize them so that the inputs are bounded and easy for neural networks to use. We do this by tracking a rolling mean and variance of the mini-batch losses and normalizing losses with their bias-corrected values.

```python
import collections

LossAccumState = collections.namedtuple("LossAccumState", [
    "mean",     # float: rolling mean loss value
    "var",      # float: rolling second-moment loss value
    "updates",  # int: number of updates performed so far
])
```

The class that manages these states is parameterized by the decay of the rolling window.

```python
import tensorflow as tf

class LossAccum:
  def __init__(self, decay):
    self.decay = decay
```

The initial state is simply zeros.

```python
  def initial_state(self) -> LossAccumState:
    return LossAccumState(
        mean=tf.constant(0., dtype=tf.float32),
        var=tf.constant(0., dtype=tf.float32),
        updates=tf.constant(0, dtype=tf.int64))
```

To compute updates, we maintain a rolling mean and a rolling variance.

```python
  def next_state(self, state: LossAccumState, loss: float) -> LossAccumState:
    new_mean = self.decay * state.mean + (1.0 - self.decay) * loss
    new_var = (self.decay * state.var
               + (1.0 - self.decay) * tf.square(new_mean - loss))
    new_updates = state.updates + 1
    return LossAccumState(mean=new_mean, var=new_var, updates=new_updates)
```

Two additional functions normalize loss values for use in neural networks. First, we compute a “corrected” mean (similar to the bias correction in Adam) for a given LossAccumState.

```python
  def corrected_mean(self, state: LossAccumState) -> float:
    # Adam-style bias correction for the zero-initialized rolling mean.
    c = 1. / (1 - self.decay ** tf.cast(state.updates, tf.float32) + 1e-8)
    return state.mean * c
```
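As a worked check on the factor c, the standard Adam-style bias correction, consider a constant loss $L$. The rolling mean starts at zero, so after $t$ updates it equals $(1-\beta^t)L$ rather than $L$, and multiplying by $c$ undoes this. The $10^{-8}$ term only keeps $c$ finite at $t = 0$. Here $\beta$ corresponds to self.decay and $t$ to state.updates:

$$
m_t = \beta\, m_{t-1} + (1-\beta) L,\quad m_0 = 0
\;\;\Longrightarrow\;\;
m_t = (1-\beta^{t}) L,
\qquad
c\, m_t = \frac{(1-\beta^{t}) L}{1-\beta^{t} + 10^{-8}} \approx L .
$$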

Second, we have a function that standardizes one loss using the statistics of a (possibly different) LossAccumState. We use it to normalize the validation loss against the training-loss statistics, which allows the learned optimizer to detect overfitting.

```python
  def weight_loss(self, state: LossAccumState, loss: float) -> float:
    c = 1. / (1 - self.decay ** tf.cast(state.updates, tf.float32) + 1e-8)
    cor_mean = state.mean * c
    cor_var = state.var * c
    # Standardize the loss, then clip so the network input stays bounded.
    l = (loss - cor_mean) * tf.math.rsqrt(cor_var + 1e-8)
    return tf.clip_by_value(l, -5., 5.)
```
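The following dependency-free sketch mirrors the class on Python floats rather than TensorFlow scalars. It illustrates why this normalization helps: multiplying every loss by a constant leaves the standardized output essentially unchanged. LossAccumSketch, normalized_last_loss, and the example loss values are hypothetical.

```python
import math
from collections import namedtuple

LossAccumState = namedtuple("LossAccumState", ["mean", "var", "updates"])


class LossAccumSketch:
    def __init__(self, decay):
        self.decay = decay

    def initial_state(self):
        return LossAccumState(mean=0.0, var=0.0, updates=0)

    def next_state(self, state, loss):
        new_mean = self.decay * state.mean + (1.0 - self.decay) * loss
        new_var = (self.decay * state.var
                   + (1.0 - self.decay) * (new_mean - loss) ** 2)
        return LossAccumState(new_mean, new_var, state.updates + 1)

    def weight_loss(self, state, loss):
        # Bias-correct the rolling statistics, standardize, then clip to [-5, 5].
        c = 1.0 / (1.0 - self.decay ** state.updates + 1e-8)
        cor_mean = state.mean * c
        cor_var = state.var * c
        l = (loss - cor_mean) / math.sqrt(cor_var + 1e-8)
        return max(-5.0, min(5.0, l))


def normalized_last_loss(losses, scale, decay=0.9):
    """Accumulate all but the last loss, then standardize the last one."""
    acc = LossAccumSketch(decay)
    state = acc.initial_state()
    for loss in losses[:-1]:
        state = acc.next_state(state, scale * loss)
    return acc.weight_loss(state, scale * losses[-1])


losses = [2.0, 1.8, 1.7, 1.5, 1.6, 1.4, 1.3]
small = normalized_last_loss(losses, scale=1.0)
large = normalized_last_loss(losses, scale=1000.0)
print(small, large)
```

The clip to [-5, 5] also bounds the input when the loss suddenly spikes far outside its recent range.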

C.2.3 Rolling Clip State

Gradient clipping is an often-used technique in deep learning. We found large benefits from applying a form of learned clipping of inner-gradient values in our learned optimizer. We cannot simply select a default threshold, because gradient norms vary across problems. Instead, we meta-learn parts of a dynamic gradient clipping algorithm. This is our first iteration of this concept, and we expect that a better scheme could yield large gains.

This algorithm is stateful and therefore needs a state container:

```python
from typing import Tuple

# RollingClipState = (t, snd), where
#   t:   the number of times this state has been updated (int), and
#   snd: rolling average of the mean of squared gradient values (float).
RollingClipState = Tuple[int, float]
```

This class is parameterized by the decay constant of the rolling average and by a multiplier that determines when clipping starts. Both values are outer-learned together with the rest of the learned optimizer.

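```python
import tensorflow as tf

class RollingGradClip:
  def __init__(self, alpha=0.99, clip_mult=10):
    self.alpha = alpha          # decay constant of the rolling average
    self.clip_mult = clip_mult  # multiplier that determines when clipping starts
```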

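The initial state sets the update count to 1 and the rolling average to $1-\alpha$, so that its bias-corrected value $(1-\alpha)/(1-\alpha^{1})$ equals 1.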

```python
  def initial_state(self) -> RollingClipState:
    return (tf.constant(1, dtype=tf.float32),
            tf.constant(1.0, dtype=tf.float32) * (1 - self.alpha))
```

We provide a normalization function that both updates the RollingClipState and returns clipped gradients.

```python
  def next_state_and_normalize(
      self, state: RollingClipState,
      grads: Grads) -> Tuple[RollingClipState, Grads]:
    def _normalize(state: RollingClipState, grads: Grads):
      t, snd = state
      # Bias-corrected rolling statistic, scaled by the learned multiplier.
      clip_amount = (snd / (1 - self.alpha ** t)) * self.clip_mult
      clipgs = [tf.clip_by_value(g, -clip_amount, clip_amount)
                for g in grads.values()]
      return clipgs
```
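The listing above computes the clipping threshold but does not show how the state is advanced. The dependency-free sketch below therefore rests on an explicit assumption: after clipping, the count t is incremented, and snd is updated as an exponential moving average of the mean squared (unclipped) gradient. Gradients are plain lists of floats, and RollingGradClipSketch is a hypothetical name. With the initial state (1, 1 - alpha), the first threshold equals clip_mult.

```python
class RollingGradClipSketch:
    """Dependency-free sketch of RollingGradClip (hypothetical name)."""

    def __init__(self, alpha=0.99, clip_mult=10.0):
        self.alpha = alpha
        self.clip_mult = clip_mult

    def initial_state(self):
        # (update count t, rolling mean of squared gradients), as in the listing.
        return (1.0, 1.0 * (1.0 - self.alpha))

    def clip_amount(self, state):
        t, snd = state
        # Bias-corrected rolling statistic times clip_mult, as in the listing.
        return (snd / (1.0 - self.alpha ** t)) * self.clip_mult

    def next_state_and_normalize(self, state, grads):
        amount = self.clip_amount(state)
        clipped = {k: [max(-amount, min(amount, x)) for x in g]
                   for k, g in grads.items()}
        # ASSUMPTION (not shown in the listing): advance the state with an
        # exponential moving average of the mean squared (unclipped) gradient.
        values = [x for g in grads.values() for x in g]
        mean_sq = sum(x * x for x in values) / len(values)
        t, snd = state
        new_state = (t + 1.0, self.alpha * snd + (1.0 - self.alpha) * mean_sq)
        return new_state, clipped


clipper = RollingGradClipSketch(alpha=0.99, clip_mult=10.0)
state = clipper.initial_state()
print(clipper.clip_amount(state))  # the first threshold equals clip_mult
state, clipped = clipper.next_state_and_normalize(
    state, {"w": [0.5, -50.0, 3.0]})
print(clipped["w"])
```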

C.3 Learned optimizer specific / putting it all together

The learned optimizer has two main components. The first is the Optimizer class, which manages everything surrounding inner-learning and has no learnable outer-parameters. The second is what we call “theta_mod”, which contains all of the outer-variables and functions that we learn.
