Atlas: Few-shot Learning with Retrieval Augmented Language Models

Atlas: 基于检索增强的大语言模型的少样本学习

Abstract

摘要

Large language models have shown impressive few-shot results on a wide range of tasks. However, when knowledge is key for such results, as is the case for tasks such as question answering and fact checking, massive parameter counts to store knowledge seem to be needed. Retrieval augmented models are known to excel at knowledge intensive tasks without the need for as many parameters, but it is unclear whether they work in few-shot settings. In this work we present Atlas, a carefully designed and pre-trained retrieval augmented language model able to learn knowledge intensive tasks with very few training examples. We perform evaluations on a wide range of tasks, including MMLU, KILT and Natural Questions, and study the impact of the content of the document index, showing that it can easily be updated. Notably, Atlas reaches over 42% accuracy on Natural Questions using only 64 examples, outperforming a 540B parameters model by 3% despite having 50x fewer parameters.

大语言模型在广泛任务上展现了令人印象深刻的少样本学习能力。然而,当任务结果高度依赖知识时(如问答和事实核查),似乎需要海量参数来存储知识。检索增强模型虽以较少参数就能在知识密集型任务中表现优异,但其在少样本环境下的有效性尚不明确。本研究提出的Atlas是一个经过精心设计和预训练的检索增强语言模型,能够通过极少量训练样本掌握知识密集型任务。我们在MMLU、KILT和Natural Questions等多样化任务上展开评估,并分析文档索引内容的影响,证明其可轻松更新。值得注意的是,Atlas仅用64个样本就在Natural Questions任务中达到42%以上的准确率,以50倍的参数劣势超越5400亿参数模型3个百分点。

1 Introduction

1 引言

Large language models (LLMs) are impressive few-shot learners (Brown et al., 2020; Rae et al., 2021; Hoffmann et al., 2022; Chowdhery et al., 2022). They are able to learn new tasks with very few examples or even from instructions alone. For this generalisation ability to emerge, the key ingredients are scaling both the parameter count of the model, and the size of the training data. Large language models owe this improvement to both a larger computational budget, enabling more complex reasoning, and the ability to memorize more information related to downstream tasks from the larger training data. While it is intuitive to assume that increased reasoning abilities lead to better generalisation, and hence few-shot learning, the same is not true for in-parameter mem or is ation. Specifically, it is unclear to what extent effective few-shot learning requires vast knowledge in the parameters of the model.

大语言模型 (LLMs) 是出色的少样本学习者 (Brown et al., 2020; Rae et al., 2021; Hoffmann et al., 2022; Chowdhery et al., 2022)。它们能够通过极少量示例甚至仅凭指令学习新任务。这种泛化能力的关键在于同步扩展模型参数量与训练数据规模。大语言模型的提升既得益于更大的计算资源预算(支持更复杂的推理能力),也得益于从海量训练数据中记忆更多下游任务相关信息。虽然增强推理能力会带来更好的泛化表现(即少样本学习)这一假设符合直觉,但模型参数内的记忆机制却并非如此。具体而言,目前尚不清楚有效的少样本学习究竟需要模型参数中存储多大体量的知识。

In this paper, we investigate whether few-shot learning requires models to store a large amount of information in their parameters, and if mem or is ation can be decoupled from generalisation. To do so, we leverage the fact that memory can be outsourced and replaced by an external non-parametric knowledge source by employing a retrieval-augmented architecture. These models employ a non-parametric memory, e.g. a neural retriever over a large, external, potentially non-static knowledge source to enhance a parametric language model. In addition to their mem or is ation abilities, such architectures are attractive due to a number of other established advantages in terms of adaptability, interpret ability and efficiency (Guu et al., 2020; Lewis et al., 2020; Yogatama et al., 2021; Borgeaud et al., 2021, inter alia). However, retrieval-augmented models have yet to demonstrate compelling few-shot learning capabilities. In this work we address this gap, and present Atlas, a retrieval-augmented language model capable of strong few-shot learning, despite having lower parameter counts than other powerful recent few-shot learners.

本文研究了少样本学习是否需要模型在参数中存储大量信息,以及记忆能力是否可与泛化能力解耦。为此,我们利用记忆可被外包至外部非参数化知识源的特性,采用检索增强架构。这类模型通过神经检索器等非参数化记忆组件,从大规模外部(可能动态更新的)知识源中获取信息,从而增强参数化语言模型的性能。除记忆能力外,此类架构还具备适应性、可解释性和效率等多重优势 (Guu et al., 2020; Lewis et al., 2020; Yogatama et al., 2021; Borgeaud et al., 2021等) 。然而,检索增强模型尚未展现出显著的少样本学习能力。本研究填补了这一空白,提出Atlas——一个参数量少于当前先进少样本学习模型,却具备强大少样本学习能力的检索增强语言模型。

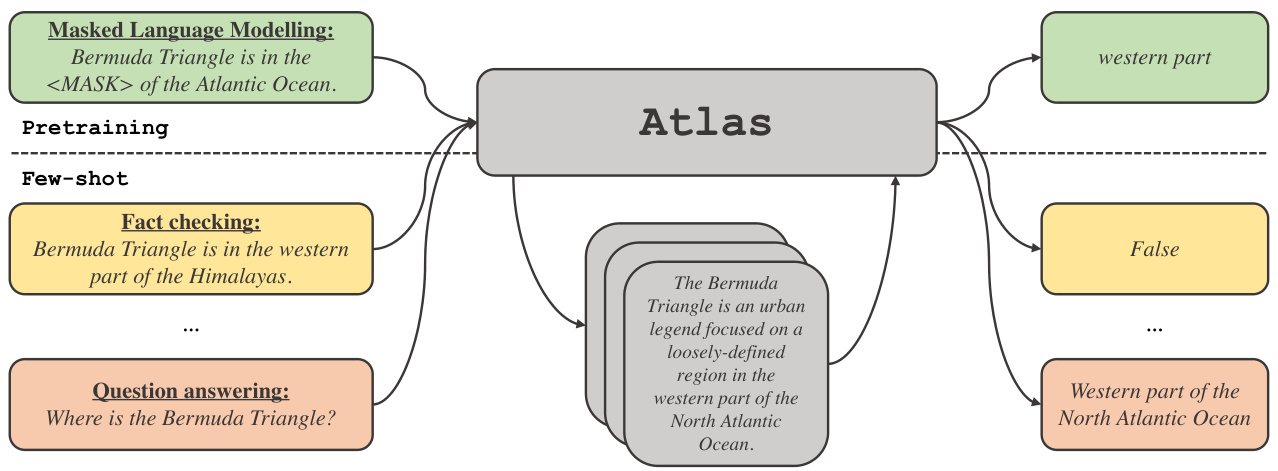

Figure 1: We introduce Atlas, a retrieval-augmented language model that exhibits strong few-shot perfor mance on knowledge tasks, and uses retrieval during both pre-training and fine-tuning.

图 1: 我们介绍了Atlas,这是一个检索增强的大语言模型,在知识任务上表现出强大的少样本性能,并在预训练和微调期间都使用了检索。

Atlas retrieves relevant documents based on the current context by using a general-purpose dense retriever using a dual-encoder architecture, based on the Contriever (Izacard et al., 2022). The retrieved documents are processed, along with the current context, by a sequence-to-sequence model using the Fusion-in-Decoder architecture (Izacard $\text{&}$ Grave, 2020) that generates the corresponding output. We study the impact of different techniques to train Atlas on its few-shot performance on a range of downstream tasks, including question answering and fact checking. We find that jointly pre-training the components is crucial for few-shot performance, and we carefully evaluate a number of existing and novel pre-training tasks and schemes for this purpose. Atlas achieves strong downstream performance in both few-shot and resource-rich settings. For example, with only 11B parameters, Atlas achieves an accuracy of $42.4%$ on Natural Questions using 64 training examples (45.1% with a Wikipedia-only index), outperforming PaLM (Chowdhery et al., 2022), a 540B parameter model by almost 3 points, and $64.0%$ in a full-dataset setting with a Wikipedia index, establishing a new state of the art by 8 points.

Atlas 通过基于 Contriever (Izacard et al., 2022) 的双编码器架构通用密集检索器,根据当前上下文检索相关文档。检索到的文档与当前上下文一起,由采用 Fusion-in-Decoder 架构 (Izacard & Grave, 2020) 的序列到序列模型处理并生成相应输出。我们研究了不同训练技术对 Atlas 在问答和事实核查等一系列下游任务中少样本性能的影响,发现联合预训练各组件对少样本性能至关重要,为此我们系统评估了多种现有及新颖的预训练任务与方案。Atlas 在少样本和资源充足场景下均展现出强劲的下游任务性能。例如,仅用 110 亿参数的 Atlas 在使用 64 个训练样本时,在 Natural Questions 上达到 42.4% 准确率(仅使用维基百科索引时为 45.1%),以近 3 个百分点的优势超越 5400 亿参数的 PaLM (Chowdhery et al., 2022);在维基百科索引的全数据集设置中达到 64.0% 准确率,以 8 个百分点的优势创下新纪录。

In summary we make the following contributions:

我们主要贡献如下:

Our code, pretrained Atlas checkpoints, and various supporting data are available at https://github.com/ facebook research/atlas

我们的代码、预训练的Atlas检查点以及各种支持数据可在 https://github.com/facebookresearch/atlas 获取

Figure 2: Examples of query and output pairs for different tasks from KILT.

| Task | Query | Output |

| Fact Checking | Bermuda Triangle is in the western part of the HiP malayas. | False |

| QuestionAnswering | who is playing the halftime show at super bowl 2016 | Coldplay |

| Entity Linking | NTFS-3G is an open source | Cross-platformsoftware |

图 2: KILT中不同任务的查询与输出示例。

| 任务 | 查询 | 输出 |

|---|---|---|

| 事实核查 | 百慕大三角位于喜马拉雅山脉西部。 | 错误 |

| 问答 | 谁在2016年超级碗中场秀表演 | Coldplay |

| 实体链接 | NTFS-3G是一个开源的跨平台实现,支持读写Microsoft Windows NTFS文件系统。 | 跨平台软件 |

2 Method

2 方法

Our approach follows the text-to-text framework (Raffel et al., 2019). This means that all the tasks are framed as follows: the system gets a text query as input, and generates a text output. For example, in the case of question answering, the query corresponds to the question and the model needs to generate the answer. In the case of classification tasks, the query corresponds to the textual input, and the model generates the lexical i zed class label, i.e. the word corresponding to the label. We give more examples of downstream tasks, from the KILT benchmark in Figure 2. As many natural language processing tasks require knowledge, our goal is to enhance standard text-to-text models with retrieval, which, as we hypothesis e in the introduction, may be crucial to endow models with few-shot capabilities.

我们的方法遵循文本到文本(text-to-text)框架(Raffel et al., 2019)。这意味着所有任务都被构造成如下形式:系统接收文本查询作为输入,并生成文本输出。例如在问答任务中,查询对应问题而模型需要生成答案;在分类任务中,查询对应文本输入,模型则生成词汇化的类别标签(即与标签对应的单词)。我们在图2中展示了来自KILT基准测试的更多下游任务示例。由于许多自然语言处理任务需要知识,我们的目标是通过检索机制增强标准文本到文本模型——正如引言中假设的,这对于赋予模型少样本能力可能至关重要。

2.1 Architecture

2.1 架构

Our model is based on two sub-models: the retriever and the language model. When performing a task, from question answering to generating Wikipedia articles, our model starts by retrieving the top-k relevant documents from a large corpus of text with the retriever. Then, these documents are fed to the language model, along with the query, which in turns generates the output. Both the retriever and the language model are based on pre-trained transformer networks, which we describe in more detail below.

我们的模型基于两个子模型:检索器(Retriever)和大语言模型(Language Model)。在执行任务时,无论是问答还是生成维基百科文章,模型首先通过检索器从大型文本语料库中检索出前k个相关文档。随后,这些文档与查询语句一起输入大语言模型,由后者生成最终输出。检索器和大语言模型均基于预训练的Transformer网络架构,下文将对此进行更详细的说明。

Retriever. Our retriever module is based on the Contriever (Izacard et al., 2022), an information retrieval technique based on continuous dense embeddings. The Contriever uses a dual-encoder architecture, where the query and documents are embedded independently by a transformer encoder (Huang et al., 2013; Karpukhin et al., 2020). Average pooling is applied over the outputs of the last layer to obtain one vector representation per query or document. A similarity score between the query and each document is then obtained by computing the dot product between their corresponding embeddings. The Contriever model is pre-trained using the MoCo contrastive loss (He et al., 2020), and uses unsupervised data only. As shown in the following section, an advantage of dense retrievers is that both query and document encoders can be trained without document annotation, using standard techniques such as gradient descent and distillation.

检索器。我们的检索器模块基于Contriever (Izacard等人,2022),这是一种基于连续密集嵌入的信息检索技术。Contriever采用双编码器架构,其中查询和文档通过Transformer编码器 (Huang等人,2013;Karpukhin等人,2020) 独立嵌入。通过对最后一层输出应用平均池化,获得每个查询或文档的向量表示。然后通过计算相应嵌入之间的点积,获得查询与每个文档之间的相似度分数。Contriever模型使用MoCo对比损失 (He等人,2020) 进行预训练,并且仅使用无监督数据。如下一节所示,密集检索器的一个优势是,无需文档标注,即可使用梯度下降和蒸馏等标准技术训练查询和文档编码器。

Language model. For the language model, we rely on the T5 sequence-to-sequence architecture (Raffel et al., 2019). We rely on the Fusion-in-Decoder modification of sequence-to-sequence models, and process each document independently in the encoder (Izacard $\text{&}$ Grave, 2020). We then concatenate the outputs of the encoder corresponding to the different documents, and perform cross-attention over this single sequence in the decoder. Following Izacard & Grave (2020), we concatenate the query to each document in the encoder. Another way to process the retrieved documents in the language model would be to concatenate the query and all the documents, and to use this long sequence as input of the model. Unfortunately, this approach does not scale with the number of documents, since the self-attention in the encoder results in a quadratic complexity with respect to the number of documents.

语言模型。对于语言模型,我们采用T5序列到序列架构(Raffel et al., 2019)。我们基于序列到序列模型的Fusion-in-Decoder改进方案,在编码器中独立处理每个文档(Izacard $\text{&}$ Grave, 2020)。然后将不同文档对应的编码器输出进行拼接,在解码器中对这个单一序列执行交叉注意力机制。遵循Izacard & Grave(2020)的方法,我们在编码器中将查询语句与每个文档拼接。另一种处理检索文档的方式是将查询语句与所有文档拼接,并将这个长序列作为模型输入。但这种方法会随着文档数量增加而面临可扩展性问题,因为编码器中的自注意力机制会导致计算复杂度随文档数量呈平方级增长。

2.2 Training objectives for the retriever

2.2 检索器的训练目标

In this section, we discuss four different loss functions to train the retriever jointly with the language model. We consider loss functions that leverage the language model to provide supervisory signal to train the retriever. In other words, if the language model finds a document useful when generating the output, the retriever objective should encourage the retriever to rank said document higher. This allows us to train models using only query and output pairs from the task of interest, without relying on document annotations. For example, in the case of fact checking, a model only requires pairs of claims and corresponding verdicts but no documents containing the evidence to back up the verdict. In practice, we can apply this approach on any task, including self-supervised pre-training. As shown in the experimental section, pre-training is critical for obtaining models that exhibit few-shot learning abilities.

在本节中,我们讨论四种不同的损失函数,用于联合训练检索器与大语言模型。我们考虑的损失函数利用大语言模型提供监督信号来训练检索器。换句话说,如果大语言模型在生成输出时发现某个文档有用,检索器的目标函数应鼓励检索器将该文档排名更高。这使得我们仅使用目标任务中的查询和输出对即可训练模型,而无需依赖文档标注。例如,在事实核查任务中,模型仅需要声明和相应判定组成的配对,而不需要包含支撑判定的证据文档。实践中,该方法可应用于包括自监督预训练在内的任何任务。如实验部分所示,预训练对于获得具备少样本学习能力的模型至关重要。

Attention Distillation (ADist). The first loss that we consider is based on the attention scores of the language model, and is heavily inspired by Izacard $\text{&}$ Grave (2021). The main idea is that the cross-attention scores between the input documents and the output, can be used as a proxy of the importance of each input document when generating the output. In particular, Izacard $\text{&}$ Grave (2021) showed that these scores can be aggregated across attention heads, layers and tokens for a given document to obtain a single score for each document. Then, these scores can be distilled into the retriever by minimizing the KL-divergence with the probability distribution $p_{\mathrm{RETR}}$ over the top-K documents ${\mathbf{d}_ {k}}_{1,\ldots,K}$ obtained from the retriever:

注意力蒸馏 (ADist)。我们考虑的第一个损失函数基于语言模型的注意力分数,其灵感主要来自 Izacard $\text{&}$ Grave (2021)。核心思想是:输入文档与输出之间的交叉注意力分数,可以作为生成输出时各输入文档重要性的代理指标。具体而言,Izacard $\text{&}$ Grave (2021) 证明这些分数可以跨注意力头、网络层和 token 进行聚合,从而为每个文档计算单一分数。随后,通过最小化这些分数与检索器返回的 top-K 文档 ${\mathbf{d}_ {k}}_ {1,\ldots,K}$ 概率分布 $p_{\mathrm{RETR}}$ 之间的 KL 散度,即可将注意力分数蒸馏到检索器中:

$$

p_{\mathrm{RETR}}\left(\mathbf{d}\mid\mathbf{q}\right)=\frac{\exp(s(\mathbf{d},\mathbf{q})/\theta)}{\sum_{k=1}^{K}\exp(s(\mathbf{d}_{k},\mathbf{q})/\theta)},

$$

$$

p_{\mathrm{RETR}}\left(\mathbf{d}\mid\mathbf{q}\right)=\frac{\exp(s(\mathbf{d},\mathbf{q})/\theta)}{\sum_{k=1}^{K}\exp(s(\mathbf{d}_{k},\mathbf{q})/\theta)},

$$

where $s$ is the dot-product between the query and documents vectors and $\theta$ is a temperature hyper-parameter.

其中 $s$ 是查询向量与文档向量的点积,$\theta$ 是温度超参数。

In the original paper, it was proposed to use the pre-softmax scores from the decoder cross-attentions, and average across heads, layers and tokens. Here, we propose an alternative which gives slightly stronger results, which relies on the following observation. In the attention mechanism, as defined by

在原论文中,提出使用解码器交叉注意力层的预softmax分数,并在注意力头、层和token之间取平均。我们在此提出一个能获得略好结果的替代方案,该方案基于以下观察:在注意力机制中,如定义所述

$$

\mathbf{y}=\sum_{n=1}^{N}\alpha_{n}\mathbf{v}_{n},

$$

$$

\mathbf{y}=\sum_{n=1}^{N}\alpha_{n}\mathbf{v}_{n},

$$

the contribution to the output $\mathbf{y}$ of a particular token $n$ cannot be evaluated from the attention score $\alpha_{n}$ alone, but should also take the norm of the value ${\bf v}_ {n}$ into account. Hence, we use the quantity $\alpha_{n}|\mathbf{v}_ {n}|_ {2}$ as the measure of relevance for token $n$ . Following Izacard $\text{&}$ Grave (2021), we average these scores over all attention heads, layers, and tokens to obtain a score for each document. We apply the Softmax operator over the resulting scores, to obtain a distribution $p_{\mathrm{ATTN}}(\mathbf{d}_ {k})$ over the top-K retrieved documents. We then minimize the KL-divergence between $p_{\mathrm{ATTN}}(\mathbf{d}_ {k})$ , and the distribution $p_{\mathrm{RETR}}$ from the retriever defined in Equation 1:

特定token $n$ 对输出 $\mathbf{y}$ 的贡献不能仅通过注意力分数 $\alpha_{n}$ 来评估,还应考虑值向量 ${\bf v}_ {n}$ 的范数。因此,我们使用 $\alpha_{n}|\mathbf{v}_ {n}|_ {2}$ 作为token $n$ 的相关性度量。遵循Izacard $\text{&}$ Grave (2021) 的方法,我们对所有注意力头、层和token的分数进行平均,从而为每个文档获得一个分数。我们对结果分数应用Softmax算子,得到前K个检索文档上的分布 $p_{\mathrm{ATTN}}(\mathbf{d}_ {k})$。然后,我们最小化 $p_{\mathrm{ATTN}}(\mathbf{d}_ {k})$ 与检索器在公式1中定义的分布 $p_{\mathrm{RETR}}$ 之间的KL散度:

$$

\mathrm{KL}(p_{\mathrm{ATTN}}\parallel p_{\mathrm{RETR}})=\sum_{k=1}^{K}p_{\mathrm{ATTN}}(\mathbf{d}_ {k})\log\left(\frac{p_{\mathrm{ATTN}}(\mathbf{d}_ {k})}{p_{\mathrm{RETR}}(\mathbf{d}_{k})}\right).

$$

$$

\mathrm{KL}(p_{\mathrm{ATTN}}\parallel p_{\mathrm{RETR}})=\sum_{k=1}^{K}p_{\mathrm{ATTN}}(\mathbf{d}_ {k})\log\left(\frac{p_{\mathrm{ATTN}}(\mathbf{d}_ {k})}{p_{\mathrm{RETR}}(\mathbf{d}_{k})}\right).

$$

Here, this loss is only used to optimize the parameters of the retriever, and not the language model. When using recent deep learning frameworks, this is achieved by applying a Stop Gradient operator on $p_{\mathrm{ATTN}}$ .

在这里,该损失仅用于优化检索器的参数,而不用于优化语言模型。在使用现代深度学习框架时,这是通过在 $p_{\mathrm{ATTN}}$ 上应用 Stop Gradient 算子来实现的。

End-to-end training of Multi-Document Reader and Retriever (EMDR2). Next, we consider the method introduced by Sachan et al. (2021), which is inspired by the expectation-maximization algorithm, treating retrieved documents as latent variables. Given a query $\mathbf{q}$ , the corresponding output $\mathbf{a}$ and the set $\mathcal{D}_{K}$ of top-K retrieved documents with the current retriever, the EMDR $^2$ loss to train the retriever is

端到端多文档阅读器与检索器联合训练 (EMDR2)。接下来我们分析 Sachan 等人 (2021) 提出的方法,该方法受期望最大化算法启发,将检索文档视为隐变量。给定查询 $\mathbf{q}$、对应输出 $\mathbf{a}$ 以及当前检索器返回的 top-K 文档集合 $\mathcal{D}_{K}$,训练检索器的 EMDR$^2$ 损失函数为

$$

\log\left[\sum_{k=1}^{K}p_{\mathrm{LM}}({\bf a}\mid{\bf q},{\bf d}_ {k})p_{\mathrm{RETR}}({\bf d}_{k}\mid{\bf q})\right],

$$

$$

\log\left[\sum_{k=1}^{K}p_{\mathrm{LM}}({\bf a}\mid{\bf q},{\bf d}_ {k})p_{\mathrm{RETR}}({\bf d}_{k}\mid{\bf q})\right],

$$

where $p_{\mathrm{RETR}}$ is again the probability over the top-K documents obtained with the retriever, as defined by Equation 1. Again, only the parameters of the retriever are updated by applying a Stop Gradient operator around $p_{\mathrm{LM}}$ . One should note that the probability distribution over documents that maximizes this loss function is an indicator of the document corresponding to the highest probability of the output according to the language model. Finally, in practice, the EMDR $_{,^{-}}^{2}$ loss function is applied at the token level, and not at the sequence level.

其中 $p_{\mathrm{RETR}}$ 仍为检索器获取的Top-K文档概率(由公式1定义)。同样地,仅通过应用 $p_{\mathrm{LM}}$ 周围的Stop Gradient算子来更新检索器参数。需注意,使该损失函数最大化的文档概率分布,即为语言模型输出最高概率对应文档的指示器。实际应用中,EMDR$_{,^{-}}^{2}$ 损失函数是在token级别而非序列级别进行计算的。

Perplexity Distillation (PDist). Third, we discuss a simpler loss function which is loosely inspired by the objectives from the attention distillation and EMDR $^2$ methods (Izacard & Grave, 2021; Sachan et al., 2021). More precisely, we want to train the retriever to predict how much each document would improve the language model perplexity of the output, given the query. To this end, we minimize the KL-divergence between the documents distribution of the retriever (Eqn. 1), and the documents posterior distribution according to the language model, using a uniform prior:

困惑度蒸馏 (PDist) 。第三,我们讨论了一种更简单的损失函数,其灵感大致来自注意力蒸馏和EMDR$^2$方法 (Izacard & Grave, 2021; Sachan et al., 2021) 的目标。具体而言,我们希望训练检索器在给定查询时预测每篇文档能多大程度改善输出的大语言模型困惑度。为此,我们最小化检索器文档分布 (公式1) 与大语言模型文档后验分布之间的KL散度,其中先验分布采用均匀分布:

$$

p_{k}\propto p_{L M}(\mathbf{a}\mid\mathbf{d}_{k},\mathbf{q}).

$$

$$

p_{k}\propto p_{L M}(\mathbf{a}\mid\mathbf{d}_{k},\mathbf{q}).

$$

Using the Softmax operator, we have that

使用 Softmax 算子时,我们有

$$

p_{k}=\frac{\exp(\log p_{L M}(\mathbf{a}\mid\mathbf{d}_ {k},\mathbf{q}))}{\sum_{i=1}^{K}\exp(\log p_{L M}(\mathbf{a}\mid\mathbf{d}_{i},\mathbf{q}))}.

$$

$$

p_{k}=\frac{\exp(\log p_{L M}(\mathbf{a}\mid\mathbf{d}_ {k},\mathbf{q}))}{\sum_{i=1}^{K}\exp(\log p_{L M}(\mathbf{a}\mid\mathbf{d}_{i},\mathbf{q}))}.

$$

Leave-one-out Perplexity Distillation (LOOP). Finally, we propose an objective based on how much worse the prediction of the language model gets, when removing one of the top $\mathrm{k\Omega}$ retrieved documents. To do so, we compute the log probability of the output for each subset of k-1 documents, and use the negative value as relevance score for each document. Following the previous loss function, we use the softmax operator to obtain a probability distribution over documents:

留一困惑度蒸馏 (LOOP)。最后,我们提出一种基于当移除前 $\mathrm{k\Omega}$ 篇检索文档中的一篇时,语言模型预测结果变差程度的评估目标。具体实现时,我们计算每组 k-1 篇文档子集对应输出的对数概率,并将该负值作为每篇文档的相关性分数。沿用先前损失函数的思路,我们使用 softmax 算子得到文档的概率分布:

$$

p_{\mathrm{LOOP}}(\mathbf{d}_ {k})=\frac{\exp(-\log p_{L M}(\mathbf{a}\mid\mathcal{D}_ {K}\setminus{\mathbf{d}_ {k}},\mathbf{q}))}{\sum_{i=1}^{K}\exp(-\log p_{L M}(\mathbf{a}\mid\mathcal{D}_ {K}\setminus{\mathbf{d}_{i}},\mathbf{q}))}.

$$

$$

p_{\mathrm{LOOP}}(\mathbf{d}_ {k})=\frac{\exp(-\log p_{L M}(\mathbf{a}\mid\mathcal{D}_ {K}\setminus{\mathbf{d}_ {k}},\mathbf{q}))}{\sum_{i=1}^{K}\exp(-\log p_{L M}(\mathbf{a}\mid\mathcal{D}_ {K}\setminus{\mathbf{d}_{i}},\mathbf{q}))}.

$$

As before, we then minimize the KL-divergence between this distribution, and the one obtained with retriever. This loss is more expensive to compute than PDist and EMDR, but, like ADist, employs the language model more closely to the way it is trained i.e. the LM is trained to be conditioned on a set of $K$ documents. For LOOP, the language model is conditioned on $(K-1)$ documents, rather than a single document as in EMDR $^2$ and PDist.

与之前一样,我们随后最小化该分布与通过检索器获得分布之间的KL散度。该损失的计算成本高于PDist和EMDR,但与ADist类似,它更贴近语言模型的训练方式,即语言模型被训练为以一组$K$篇文档为条件。对于LOOP,语言模型以$(K-1)$篇文档为条件,而非像EMDR$^2$和PDist那样仅以单篇文档为条件。

For all losses, we can also use a temperature hyper-parameter when computing the target or retriever distributions to control the distribution’s peakiness of, which might be important for some tasks or losses. Indeed, for PDist and LOOP, the perplexity of the output may not vary much when conditioning on different documents, especially in the case of long outputs.

对于所有损失函数,在计算目标分布或检索器分布时,我们也可以使用温度超参数来控制分布的峰值程度,这对某些任务或损失函数可能很重要。实际上,对于PDist和LOOP而言,当基于不同文档进行条件生成时,输出的困惑度可能变化不大,尤其是在生成长文本的情况下。

2.3 Pretext tasks

2.3 前置任务

In this section, we describe pretext tasks that can be used to jointly pre-train the retriever and the language model using only unsupervised data.

在本节中,我们将介绍仅使用无监督数据即可联合预训练检索器与大语言模型的代理任务 (pretext tasks)。

Prefix language modeling. First, we consider a standard language modeling task as potential pre-training objective. To cast language modeling in the text-to-text framework, we consider a chunk of $N$ words, and split this chunk in two sub-sequences of equal length $N/2$ . Then, the first sub-sequence is used as the query, and the second corresponds to the output. We thus retrieve relevant documents by using the first sub-sequence of $N/2$ tokens, to generate the output.

前缀语言建模。首先,我们将标准语言建模任务视为潜在的预训练目标。为了在文本到文本框架中实现语言建模,我们考虑一个包含 $N$ 个单词的块,并将其拆分为两个长度均为 $N/2$ 的子序列。然后,第一个子序列用作查询,第二个子序列对应输出。因此,我们通过使用前 $N/2$ 个 token 的子序列来检索相关文档以生成输出。

Masked language modeling. Second, we consider masked language modeling, as formulated by Raffel et al. (2019). Again, starting from a chunk of $N$ words, we sample $k$ spans of average length 3 tokens, leading to a masking ratio of $15%$ . We then replace each span by a different special token. The model is then trained to generate the masked spans, each span beginning with the special sentinel mask token that was inserted in the input sequence. We retrieve documents using the masked query, but replace the special mask tokens with a mask token supported by the retriever vocabulary.

掩码语言建模。其次,我们考虑由Raffel等人 (2019) 提出的掩码语言建模方法。同样,从包含$N$个单词的文本块出发,我们采样$k$个平均长度为3个token的文本片段,掩码比例达到$15%$。随后,每个片段被替换为不同的特殊token。模型被训练用于生成被掩码的片段,每个片段以输入序列中插入的特殊哨兵掩码token开头。我们使用掩码查询检索文档,但需将特殊掩码token替换为检索器词表支持的掩码token。

Title to section generation. Finally, we consider a more abstract ive generation task, generating sections from Wikipedia articles, given the article and section title. Here, the query corresponds to the title of the article, together with the title of the section, and the output corresponds to the text of the section. We exclude sections “See also”, “References”, “Further reading” and “External links”.

标题生成章节。最后,我们考虑一个更抽象的生成任务:在给定文章和章节标题的情况下,从维基百科文章中生成章节内容。此处的查询对应文章标题与章节标题的组合,输出则对应章节正文。我们排除了"参见"、"参考文献"、"延伸阅读"和"外部链接"等章节。

2.4 Efficient retriever fine-tuning

2.4 高效检索器微调

Retrieval is facilitated by using a document index, which is a pre-computed collection of the document embeddings for all the documents in the retrieval corpus. When jointly training the retriever and language model, the index needs to be updated regularly, otherwise, the embeddings of the documents stored in the index become stale relative to the updated retriever. This means that we need to recompute the embeddings for the full collection of documents regularly during training to keep the index fresh, which can be computationally expensive for large indices. This is particularly true at fine-tuning time, where the number of training examples could be small relative to the number of documents in the index. Training the retriever could thus add an important computational overhead compared to standard language model finetuning. In this section, we analyse strategies that might make this process more efficient, alleviating the need to re-compute the embeddings of all the documents too often.

通过使用文档索引可以方便检索,该索引是检索语料库中所有文档的预计算文档嵌入集合。在联合训练检索器和语言模型时,需要定期更新索引,否则索引中存储的文档嵌入会相对于更新后的检索器变得过时。这意味着我们需要在训练期间定期为整个文档集合重新计算嵌入以保持索引新鲜,对于大型索引来说这可能带来高昂的计算成本。在微调阶段尤其如此,此时训练样本数量可能远少于索引中的文档数量。因此与传统语言模型微调相比,训练检索器可能带来显著的计算开销。本节我们将分析可能提升该过程效率的策略,从而减少频繁重新计算所有文档嵌入的需求。

Full index update. Let us start by analysing the overhead due to updating the index, compared to using a fixed retriever. To compare the computation time of different models, we will make the following assumption: the time required to perform a forward pass on a document with a model of $P$ parameters is $O(P)$ . While this computation model may seem naive, the main assumption is that document sizes are constant.1 Since we split long documents into passages with similar number of words, and use padding when processing documents of different sizes, this assumption is reasonable in practice. Let $K$ be the number of documents that are retrieved and processed by the language model, $P_{\mathrm{LM}}$ be the number of parameters of the language model and $B$ the batch size. Each training step has a complexity of $4\times B\times K\times P_{\mathrm{LM}}$ .2

完整索引更新。我们首先分析相比使用固定检索器,更新索引带来的开销。为了比较不同模型的计算时间,我们做出以下假设:在参数量为 $P$ 的模型上对文档执行前向传播所需时间为 $O(P)$。虽然这个计算模型看似简单,但核心假设是文档大小恒定。由于我们将长文档分割为词数相近的段落,并在处理不同大小文档时使用填充(padding),该假设在实践中是合理的。设 $K$ 为语言模型检索处理的文档数量,$P_{\mathrm{LM}}$ 为语言模型的参数量,$B$ 为批量大小。每个训练步骤的计算复杂度为 $4\times B\times K\times P_{\mathrm{LM}}$。

Next, let $N$ be the number of documents in the index, and $P_{\mathrm{RETR}}$ be the number of parameters of the retriever. Then, re-computing the full index has a complexity of $N\times P_{\mathrm{RETR}}$ . If we refresh the index every $R$ training steps, we obtain the following overhead:

接下来,设 $N$ 为索引中的文档数量,$P_{\mathrm{RETR}}$ 为检索器的参数量。那么,重新计算完整索引的复杂度为 $N\times P_{\mathrm{RETR}}$。若每 $R$ 个训练步骤刷新一次索引,则得到以下开销:

$$

\frac{N\times P_{\mathrm{RETR}}}{4\times B\times K\times P_{\mathrm{LM}}\times R}.

$$

$$

\frac{N\times P_{\mathrm{RETR}}}{4\times B\times K\times P_{\mathrm{LM}}\times R}.

$$

If we use the BERT-base architecture for our retriever and T5-XL for our language model, we get $\begin{array}{r}{\frac{P_{\mathrm{RETR}}}{P_{\mathrm{LM}}}\approx\frac{1}{25}}\end{array}$ lading to the overhead:

如果我们使用BERT-base架构作为检索器,T5-XL作为大语言模型,就会得到 $\begin{array}{r}{\frac{P_{\mathrm{RETR}}}{P_{\mathrm{LM}}}\approx\frac{1}{25}}\end{array}$ 从而导致开销:

$$

\frac{N}{100\times B\times K\times R}.

$$

$$

\frac{N}{100\times B\times K\times R}.

$$

If we use an index containing $37M$ documents (the size of our Wikipedia index), train with a batch size of 64 with 20 retrieved documents and refresh the index every 1000 steps, this results in an overhead of $\sim30%$ .

如果我们使用包含 $37M$ 个文档的索引(维基百科索引的大小),以 64 的批量大小训练并检索 20 个文档,每 1000 步刷新一次索引,这将导致约 $\sim30%$ 的开销。

Re-ranking. A second strategy is to retrieve a larger number of documents $L$ with the retriever, and to re-embed and rerank these documents with the up-to-date retriever, and pass the resulting top $K$ to the language model. In that case, the overhead of reranking the top $L$ documents is equal to $B\times L\times P_{\mathrm{RETR}}$ Since we perform this operation at every time step, the overhead is equal to

重排序。第二种策略是使用检索器检索更多文档$L$,并用最新的检索器对这些文档重新嵌入和排序,然后将排名前$K$的文档传递给大语言模型。在这种情况下,对前$L$个文档进行重排序的开销等于$B\times L\times P_{\mathrm{RETR}}$。由于我们在每个时间步都执行此操作,因此总开销为

$$

\frac{L\times P_{\mathrm{RETR}}}{4\times K\times P_{\mathrm{LM}}}.

$$

$$

\frac{L\times P_{\mathrm{RETR}}}{4\times K\times P_{\mathrm{LM}}}.

$$

Using the same assumption as before, we finally get that the overhead is of the order of 100L×K . If we re-rank 10x more documents than what the language model processes (i.e., $L=10\times K$ ), we get an overhead of $10%$ . However, note that if many updates are performed on the retriever, the index might still need to be fully updated, as the true top $\mathrm{k\Omega}$ documents may not be retrieved in the top-L results from the stale index. In practice, it is possible to track the positions of the top-K re-ranked documents in the top-L, and estimate when the index needs to be updated.

采用与之前相同的假设,我们最终得出开销约为100L×K量级。若对大语言模型处理文档量的10倍进行重排序(即$L=10\times K$),则会产生$10%$的开销。但需注意,若对检索器进行多次更新,索引可能仍需完整更新,因为在过期索引的top-L结果中可能无法检索到真实的top-$\mathrm{k\Omega}$文档。实际应用中,可通过追踪top-K重排文档在top-L中的位置来预估索引更新时间。

Query-side fine-tuning. Finally, the last strategy is to decouple the encoding of the queries and documents. In this case, we fix the parameters corresponding to the document encoder, and only train the parameters corresponding to the query encoder. Thus, the embeddings of documents are fixed, and we do not need to refresh the index, and thus there is no computational overhead. As we will see in practice, the impact of fixing the documents encoder varies greatly for different tasks when a large training dataset is available. For most of the few-shot settings that we consider, query-side finetuning does not have large performance impact, and sometimes even slightly improves performance.

查询端微调。最后一种策略是将查询和文档的编码解耦。在这种情况下,我们固定文档编码器对应的参数,仅训练查询编码器对应的参数。因此,文档的嵌入向量保持不变,无需刷新索引,也就没有计算开销。实际应用中我们会发现,当拥有大规模训练数据集时,固定文档编码器对不同任务的影响差异很大。在我们考察的大多数少样本场景中,查询端微调对性能影响不大,有时甚至能略微提升性能。

3 Related work

3 相关工作

3.1 Retrieval in natural language processing

3.1 自然语言处理中的检索

Retrieval for knowledge intensive tasks. Previous work has shown that retrieval improves performance across a variety of tasks such as question answering (Voorhees et al., 1999; Chen et al., 2017; Kwiatkowski et al., 2019), fact checking (Thorne et al., 2018), dialogue (Dinan et al., 2019) or citation recommendation (Petroni et al., 2022). Historically, this information retrieval step was implemented using term-matching methods, such as TF-IDF or BM25 (Jones, 1972; Robertson et al., 1995). For open-domain question answering (Voorhees et al., 1999), documents are often retrieved from Wikipedia (Chen et al., 2017). Recently, dense retrievers based on neural networks have become popular. These usually follow a dual-encoder architecture (Yih et al., 2011; Huang et al., 2013; Shen et al., 2014), where queries and passages are encoded independently as vectors, and relevance is computed using the inner product or Euclidean distance. Popular supervised retrievers include DPR (Karpukhin et al., 2020), which is trained to discriminate the relevant passage among negative passages, and extensions such as ANCE (Xiong et al., 2020) which improved the hard negatives mining process. We refer the reader to Yates et al. (2021) for a survey of dense retrieval techniques.

知识密集型任务中的检索。先前的研究表明,检索能提升多种任务的性能,例如问答 (Voorhees et al., 1999; Chen et al., 2017; Kwiatkowski et al., 2019)、事实核查 (Thorne et al., 2018)、对话 (Dinan et al., 2019) 或引文推荐 (Petroni et al., 2022)。传统上,这一信息检索步骤通过词项匹配方法实现,例如 TF-IDF 或 BM25 (Jones, 1972; Robertson et al., 1995)。在开放域问答任务中 (Voorhees et al., 1999),文档通常从维基百科中检索 (Chen et al., 2017)。近年来,基于神经网络的密集检索器逐渐流行,这类方法通常采用双编码器架构 (Yih et al., 2011; Huang et al., 2013; Shen et al., 2014),将查询和段落独立编码为向量,并通过内积或欧氏距离计算相关性。常见的监督式检索器包括 DPR (Karpukhin et al., 2020)——其训练目标是区分相关段落与负样本段落,以及改进硬负样本挖掘过程的扩展方法如 ANCE (Xiong et al., 2020)。关于密集检索技术的综述可参阅 Yates et al. (2021)。

After retrieval, the relevant documents are processed to produce the final output. In open-domain QA, models can extract a span of text from retrieved documents as the answer (Chen et al., 2017; Clark & Gardner, 2018; Wang et al., 2019; Karpukhin et al., 2020), a method inspired by reading comprehension (Richardson, 2013; Rajpurkar et al., 2016). Recently, generating the answer as free-form text, using a seq2seq model conditioned on retrieved documents have become prevalent (Lewis et al., 2020; Izacard & Grave, 2020; Min et al., 2020). These architectures have also been shown to reduce hallucination in dialogue agents (Shuster et al., 2021).

检索后,相关文档经过处理生成最终输出。在开放域问答中,模型可以从检索到的文档中提取一段文本作为答案 (Chen et al., 2017; Clark & Gardner, 2018; Wang et al., 2019; Karpukhin et al., 2020),这种方法受到阅读理解任务的启发 (Richardson, 2013; Rajpurkar et al., 2016)。近年来,基于检索文档的序列到序列 (seq2seq) 模型生成自由形式答案的方法逐渐流行 (Lewis et al., 2020; Izacard & Grave, 2020; Min et al., 2020)。这些架构也被证明可以减少对话智能体中的幻觉现象 (Shuster et al., 2021)。

Retriever training. The need for expensive query-document annotations for training the retriever can be bypassed, by leveraging signals from the language model, or using unsupervised learning. REALM (Guu et al., 2020) and RAG (Lewis et al., 2020) jointly train the retriever and language model by modelling documents as latent variable, and minimizing the objective with gradient descent. REALM pre-trains end-to-end with an MLM approach but uses an extractive BERT-style model (Devlin et al., 2019). Guu et al. (2020) also explore a query-side finetuning at finetuning time to avoid index refreshes, which is also explored in the context of phrase-based retrieval by Lee et al. (2021b). Izacard & Grave (2020) proposed to use cross-attention scores as supervision with knowledge distillation. Sachan et al. (2021) perform joint training of the reader and the retriever by leveraging the perplexity of the output generated by the reader. Sachan et al. (2021) and Lee et al. (2021a) both employ salient span masking to pre-train retrievers, leveraging the perplexity and attention scores from the language model. The inverse cloze task was proposed by Lee et al. (2019) to pre-train dense retrievers in an unsupervised way. Paranjape et al. (2021) propose a method to train retrieval-augmented generators using a second “informed” retriever with access to the output, which the test-time retriever can be distilled from, and Hofstätter et al. (2022) recently proposed a training set filtering/weighting approach to train stronger retrieval-augmented generators. Izacard et al. (2022) explored different contrastive learning methods to train retrievers, while Ram et al. (2022) used recurring spans within a document to create pseudo-positive query-document pairs.

检索器训练。通过利用语言模型的信号或采用无监督学习,可以绕过训练检索器时对昂贵查询-文档标注的需求。REALM (Guu等,2020) 和 RAG (Lewis等,2020) 通过将文档建模为隐变量,并用梯度下降最小化目标函数,联合训练检索器和语言模型。REALM采用MLM方法进行端到端预训练,但使用的是抽取式BERT风格模型 (Devlin等,2019)。Guu等 (2020) 还在微调阶段探索了查询侧微调以避免索引更新,Lee等 (2021b) 在基于短语的检索场景中也研究了该方法。Izacard & Grave (2020) 提出使用交叉注意力分数作为知识蒸馏的监督信号。Sachan等 (2021) 通过利用阅读器生成输出的困惑度,实现了阅读器与检索器的联合训练。Sachan等 (2021) 和 Lee等 (2021a) 都采用显著片段掩码来预训练检索器,利用了语言模型的困惑度和注意力分数。Lee等 (2019) 提出逆完形填空任务,以无监督方式预训练稠密检索器。Paranjape等 (2021) 提出使用能访问输出结果的第二"知情"检索器来训练检索增强生成器的方法,测试时检索器可从中蒸馏知识,Hofstätter等 (2022) 最近提出通过训练集过滤/加权方法来训练更强的检索增强生成器。Izacard等 (2022) 探索了不同对比学习方法来训练检索器,而Ram等 (2022) 则利用文档内重复出现的片段创建伪正例查询-文档对。

Retrieval-augmented language models. Continuous cache models (Grave et al., 2017b) defines a probability distribution over recent tokens, by computing the similarity between previous and current representations of tokens. This distribution is then interpolated with the distribution of the language model, to improve predictions. Later, the amount of tokens used to compute this distribution was extended to a much larger memory by leveraging approximate nearest neighbors search (Grave et al., 2017a). The related kNN-LM model (Khandelwal et al., 2020) replaced LSTMs by transformer networks, and scaled the memory to billions of tokens, leading to strong performance improvements. More recently, RETRO (Borgeaud et al., 2021) extended these by scaling the retrieval memory to trillions of tokens, and changing the model architecture to take retrieved documents as input.

检索增强的语言模型。连续缓存模型 (Grave et al., 2017b) 通过计算token历史表示与当前表示的相似度,定义了一个针对近期token的概率分布。该分布随后与大语言模型的分布进行插值,以提升预测效果。后续研究 (Grave et al., 2017a) 通过近似最近邻搜索算法,将用于计算该分布的token数量扩展至更大的存储规模。相关研究kNN-LM模型 (Khandelwal et al., 2020) 用Transformer网络替代了LSTM,并将记忆规模扩展至数十亿token,实现了显著的性能提升。最新进展RETRO模型 (Borgeaud et al., 2021) 进一步将检索记忆规模扩展至万亿级token,并通过修改模型架构将检索文档作为输入。

Retrieval-Augmentation with Search Engines. Recently, different works have proposed to train large language models to interact with a search engine, by generating text queries, and using the retrieved documents as additional context (Nakano et al., 2021; Thoppilan et al., 2022; Shuster et al., 2022). In the context of few-shot question answering, Lazaridou et al. (2022) used the question to perform a search query, and retrieved documents are added to the prompt of a large language model performing in-context learning.

搜索引擎增强检索。近期多项研究提出训练大语言模型与搜索引擎交互,通过生成文本查询并将检索到的文档作为附加上下文 (Nakano et al., 2021; Thoppilan et al., 2022; Shuster et al., 2022)。在少样本问答场景中,Lazaridou et al. (2022) 将问题作为搜索查询,并将检索到的文档添加至执行上下文学习的大语言模型提示中。

3.2 Few-shot learning

3.2 少样本学习

Few-shot learning, the task of learning from very few examples, has been studied for decades (Thrun & Pratt, 1998; Fink, 2005; Vinyals et al., 2016), but has recently seen an explosion of interest in NLP with the arrival of large pre-trained models, which exhibit emergent few-shot learning abilities (Wei et al., 2022).

少样本学习 (Few-shot learning) 是指从极少量样本中学习的任务,已研究数十年 (Thrun & Pratt, 1998; Fink, 2005; Vinyals et al., 2016),但随着具备涌现少样本学习能力的大规模预训练模型的出现 (Wei et al., 2022),该领域近期在自然语言处理领域引发了研究热潮。

In-context Learning with large Language models. Providing language models with natural language descriptions of tasks, as proposed by Radford et al. (2019) has led to significant developments in few-shot learning. GPT-3 (Brown et al., 2020) demonstrated the ability of large language models to perform few-shot predictions, where the model is given a description of the task in natural language with few examples. Scaling model size, data and compute is crucial to enable this learning ability, leading to the further development of large models (Lieber et al., 2021; Rae et al., 2021; Smith et al., 2022; Chowdhery et al., 2022; Smith et al., 2022). Hoffmann et al. (2022) revisited the scaling law from Kaplan et al. (2020), suggesting that training on more data with a smaller model may be more effective, resulting in Chinchilla, a 70B parameter model with improved parameter efficiency.

大语言模型的上下文学习。Radford等人(2019)提出的通过自然语言任务描述来增强语言模型的方法,推动了少样本学习的重大发展。GPT-3(Brown等人,2020)展示了大语言模型进行少样本预测的能力,即模型仅需少量自然语言示例即可理解任务。扩大模型规模、数据量和计算资源对这种学习能力至关重要,这促进了大模型的进一步发展(Lieber等人,2021; Rae等人,2021; Smith等人,2022; Chowdhery等人,2022; Smith等人,2022)。Hoffmann等人(2022)重新审视了Kaplan等人(2020)提出的扩展定律,指出使用较小模型在更多数据上训练可能更有效,由此诞生了参数效率更高的700亿参数模型Chinchilla。

Few-shot finetuning and prompt-based learning. The above models perform few-shot learning with in-context instructions without training the parameters of the language model. Few-shot learning can also be accomplished by combining textual templates (“prompts”) and various forms of model finetuning, either fully updating a model’s parameters, e.g. for classification (Schick & Schütze, 2021a; Schick & Schutze, 2021; Gao et al., 2021; Tam et al., 2021) or generation (Schick & Schütze, 2021b). Prompts themselves can be optimized, for example by search (Jiang et al., 2020; Shin et al., 2020) or by only updating parts of the model (Logan et al., 2021), or learning “soft-prompts” (Lester et al., 2021; Li & Liang, 2021). Due to its simplicity, in this work we either employ simple prompts or simply feed in inputs without preprocessing, and perform full-model finetuning, a method similar to Le Scao & Rush (2021).

少样本微调 (few-shot finetuning) 和基于提示的学习。上述模型通过上下文指令进行少样本学习,无需训练语言模型的参数。少样本学习也可以通过结合文本模板("提示")和多种形式的模型微调来实现,既可以完全更新模型参数(例如用于分类 [Schick & Schütze, 2021a; Schick & Schutze, 2021; Gao et al., 2021; Tam et al., 2021] 或生成任务 [Schick & Schütze, 2021b]),也可以仅优化提示本身——例如通过搜索 [Jiang et al., 2020; Shin et al., 2020]、仅更新模型部分参数 [Logan et al., 2021] 或学习"软提示" [Lester et al., 2021; Li & Liang, 2021]。出于简便性考虑,本研究采用简单提示或直接输入未预处理数据,并执行全模型微调,该方法与 Le Scao & Rush (2021) 类似。

4 Experiments

4 实验

In this section, we report empirical evaluations of our language models on few-shot learning. We start by introducing our experimental setup, describing our evaluation benchmarks in section 4.1, and giving the training details of our models in section 4.2. Then, we perform an ablation study to compare the different technical choices leading to our main model. We finally evaluate this model, called Atlas, on different natural language understanding tasks in few-shot and full dataset settings.

在本节中,我们报告了大语言模型在少样本学习上的实证评估。首先介绍实验设置,在第4.1节描述评估基准,并在第4.2节给出模型训练细节。随后通过消融实验比较了影响主模型性能的不同技术选择。最终,我们在少样本和全数据集两种设定下,评估了名为Atlas的模型在多种自然语言理解任务上的表现。

4.1 Benchmarks

4.1 基准测试

To evaluate our retrieval-augmented language models we consider the following benchmarks, which include different tasks.

为了评估我们的检索增强语言模型,我们考虑了以下包含不同任务的基准测试。

Knowledge-Intensive Language Tasks (KILT). First, we use the KILT evaluation suite (Petroni et al., 2020), containing 11 datasets corresponding to 5 tasks: fact checking, question answering, dialog generation, entity linking and slot-filling. These different tasks require knowledge about the world to be solved, which can be found in Wikipedia. We evaluate our model on the following tasks and datasets included in KILT: question answering: Natural Questions (Kwiatkowski et al., 2019), TriviaQA (Joshi et al., 2017) and HotpotQA (Yang et al., 2018); slot filling: Zero Shot RE (Levy et al., 2017) and T-REx (Elsahar et al., 2018); entity linking: AIDA CoNLL-YAGO (Hoffart et al., 2011); dialogue: Wizard of Wikipedia (Dinan et al., 2019); and fact checking: FEVER (Thorne et al., 2018). The KILT versions of these datasets differ from their original versions, as instances requiring knowledge not present in the August 2019 Wikipedia dump have been removed.

知识密集型语言任务 (KILT) 。首先,我们使用 KILT 评估套件 (Petroni et al., 2020) ,其中包含对应 5 类任务的 11 个数据集:事实核查、问答、对话生成、实体链接和槽填充。这些不同任务需要利用关于世界的知识来解决,而这些知识可以在维基百科中找到。我们在 KILT 包含的以下任务和数据集上评估模型:问答任务:Natural Questions (Kwiatkowski et al., 2019) 、TriviaQA (Joshi et al., 2017) 和 HotpotQA (Yang et al., 2018) ;槽填充任务:Zero Shot RE (Levy et al., 2017) 和 T-REx (Elsahar et al., 2018) ;实体链接任务:AIDA CoNLL-YAGO (Hoffart et al., 2011) ;对话任务:Wizard of Wikipedia (Dinan et al., 2019) ;事实核查任务:FEVER (Thorne et al., 2018) 。这些数据集的 KILT 版本与其原始版本不同,因为需要 2019 年 8 月维基百科转储中不存在知识的实例已被移除。

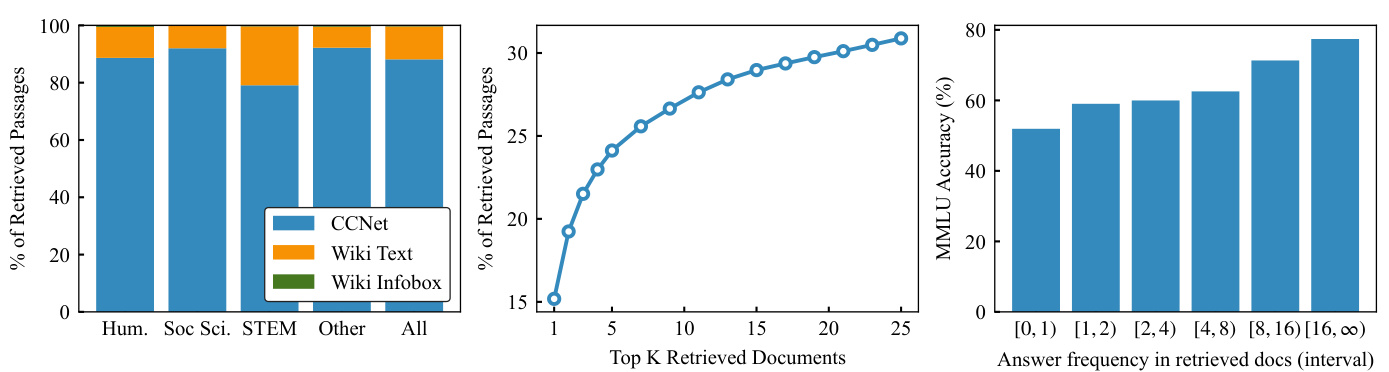

Massively-Multitask Language Understanding (MMLU). Our second main evaluation benchmark is MMLU (Hendrycks et al., 2021), which contains 57 multi-choice question answering datasets (referred to as domains), sourced from real examinations designed for humans. These cover a very broad range of topics, e.g. high school mathematics, professional law, logical fallacies and clinical knowledge and can be broadly categorized in four subsets: humanities, social sciences, STEM and “other”. We focus on few-shot learning, and the authors of the benchmarks suggest to use 5 training examples per domain. Beyond the 5-shot setting, We also consider three additional settings. The first is a zero-shot setting, with no training data at all. The second, which we call multi-task few-shot, is where we train a single model on the 6-shot data from all tasks, hence leading to a training set of 285 examples. The last, which we call transfer learning, leverages additional training examples from other multiple-choice QA tasks provided by the MMLU authors, namely MCTest (Richardson, 2013), RACE (Lai et al., 2017), ARC (Clark et al., 2018) and OBQA (Mihaylov et al., 2018) leading to a training set of 95k examples.

大规模多任务语言理解 (MMLU)。我们的第二个主要评估基准是 MMLU (Hendrycks et al., 2021),它包含57个多选问答数据集(称为领域),这些数据源自为人类设计的真实考试。这些数据集涵盖非常广泛的主题,例如高中数学、专业法律、逻辑谬误和临床知识,并大致分为四个子集:人文、社会科学、STEM和“其他”。我们专注于少样本学习,基准作者建议每个领域使用5个训练示例。除了5样本设置外,我们还考虑了另外三种设置。第一种是零样本设置,完全没有训练数据。第二种称为多任务少样本,我们在所有任务的6样本数据上训练单一模型,从而形成包含285个示例的训练集。最后一种称为迁移学习,利用MMLU作者提供的其他多选问答任务(即 MCTest (Richardson, 2013)、RACE (Lai et al., 2017)、ARC (Clark et al., 2018) 和 OBQA (Mihaylov et al., 2018))的额外训练示例,最终形成包含95k示例的训练集。

Additional benchmarks. Additionally, we report results on the original open-domain versions of the popular Natural Questions (Kwiatkowski et al., 2019), and TriviaQA (Joshi et al., 2017) datasets. We also evaluate our model on the original version of FEVER (Thorne et al., 2018), which presents fact checking as a three-way classification problem for textual claims (either “Supported”: the text is supported by evidence in Wikipedia, “refuted”: the claim is not consistent with evidence in Wikipedia, or “not enough info”, where there is insufficient evidence to make a judgement). We also perform experiments to assess temporal sensitivity of our models. Here, we construct a dataset from TempLAMA (Dhingra et al., 2022), consisting of a set of time-sensitive cloze questions on a range of topics, where the answer changes from 2017 to 2020. We assess the accuracy of our models when supplied with a index from 2017 vs 2020 to assess to what degree models faithfully reflect the content of the index supplied to them at test time, and how effective updating the index is as a continual learning or model update ability method.

其他基准测试。此外,我们还在流行的Natural Questions (Kwiatkowski等人,2019) 和TriviaQA (Joshi等人,2017) 数据集的原始开放域版本上报告了结果。我们还评估了模型在FEVER (Thorne等人,2018) 原始版本上的表现,该任务将事实核查作为文本声明的三分类问题("支持":文本有维基百科证据支撑,"反驳":声明与维基百科证据不一致,或"信息不足":证据不足以做出判断)。我们还进行了实验来评估模型的时间敏感性。为此,我们从TempLAMA (Dhingra等人,2022) 构建了一个数据集,包含一系列主题的时间敏感性完形填空问题,其答案在2017至2020年间会发生变化。我们通过对比模型在2017年和2020年索引下的准确率,评估模型在测试时对给定索引内容的忠实反映程度,以及更新索引作为持续学习或模型更新方法的有效性。

4.2 Technical details

4.2 技术细节

We now describe the procedure for pre-training and fine-tuning our models. We focus on the setting used for the ablation studies performed in Section 4.3 and Section 4.4. We give more details about the hyper parameters used for our final model later.

我们现在描述模型的预训练和微调流程。重点介绍第4.3节和第4.4节消融实验所使用的配置方案。关于最终模型采用的超参数细节将在后文详述。

Pre-training. For the pre-training, we initialize the retriever module using the unsupervised Contriever model, which uses the BERT-base architecture. We initialize the language model with the T5 pre-trained weight. As the original T5 pre-trained model included supervised data in the training set, we use the version 1.1 models which were trained on unlabeled text only. Specifically, we initialize from the T5-lm-adapt variants due to their improved stability.

预训练。在预训练阶段,我们使用基于BERT-base架构的无监督Contriever模型初始化检索模块,并采用T5预训练权重初始化语言模型。由于原始T5预训练模型包含监督数据,我们选用仅基于无标注文本训练的1.1版本模型。具体而言,我们选用T5-lm-adapt变体进行初始化,因其具有更好的稳定性。

For the ablation studies performed in Section 4.3 and Section 4.4, we use T5-XL which contains 3B weights. We pre-train all our models for 10,000 iterations, using AdamW with a batch size of 64 and a learning rate of $10^{-4}$ for the reader and $10^{-5}$ for the retriever with linear decay and 1,000 warmup steps. We refresh the index every 1,000 steps. This means that the index is recomputed 10 times during the pre-training, leading to an overhead of around $30%$ , compared to training with a fixed retriever. We set the number of retrieved documents to 20. We detail the hyper parameters used for the training of our final model at the beginning of Section 4.5.

在第4.3节和第4.4节进行的消融研究中,我们使用包含30亿参数的T5-XL模型。所有模型均预训练10,000次迭代,采用AdamW优化器:阅读器(reader)的批量大小为64、学习率为$10^{-4}$,检索器(retriever)学习率为$10^{-5}$,并应用线性衰减和1,000步预热。每1,000步更新一次索引,这意味着预训练期间会重新计算10次索引,相比固定检索器训练会产生约$30%$的额外开销。设置检索文档数量为20篇。第4.5节开头详细列出了最终模型的训练超参数。

Table 1: Retriever loss ablation. We compare different loss functions to pre-train the retriever jointly with the language model. We use the prefix MLM task, and the December 2021 Wikipedia dump for both the index and pre-training data. Fine-tuning is performed with query-side fine-tuning and the loss used for pre-training. Best result is bold, second highest underlined.

| 64-shot | 1024-shot | ||||||||

| MLM | NQ | WoW | FEVER | Avg. | NQ | WoW | FEVER | Avg. | |

| Closed-book | 1.083 | 6.5 | 14.1 | 59.0 | 26.5 | 10.7 | 16.5 | 75.3 | 34.2 |

| No Joint pre-training | 9.0 | 14.1 | 67.0 | 30.0 | 9.9 | 16.6 | 78.3 | 34.9 | |

| Fixed retriever | 0.823 | 39.9 | 14.3 | 72.4 | 42.2 | 45.3 | 17.9 | 90.0 | 51.1 |

| ADist | 0.780 | 40.9 | 14.4 | 73.8 | 43.0 | 46.2 | 17.2 | 90.9 | 51.4 |

| EMDR2 | 0.783 | 43.3 | 14.6 | 72.1 | 43.3 | 44.9 | 18.3 | 85.7 | 49.6 |

| PDist | 0.783 | 45.0 | 15.0 | 77.0 | 45.7 | 44.9 | 17.9 | 90.2 | 51.0 |

| LOOP | 0.766 | 41.8 | 15.0 | 74.4 | 43.7 | 47.1 | 17.9 | 87.5 | 50.8 |

表 1: 检索器损失消融实验。我们比较了不同损失函数在预训练检索器与语言模型联合训练时的效果。实验使用前缀 MLM 任务,索引和预训练数据均采用 2021 年 12 月的维基百科数据快照。微调采用查询端微调策略,并沿用预训练阶段的损失函数。最优结果加粗显示,次优结果添加下划线。

| MLM | NQ | WoW | FEVER | Avg. | NQ | WoW | FEVER | Avg. | |

|---|---|---|---|---|---|---|---|---|---|

| Closed-book | 1.083 | 6.5 | 14.1 | 59.0 | 26.5 | 10.7 | 16.5 | 75.3 | 34.2 |

| No Joint pre-training | 9.0 | 14.1 | 67.0 | 30.0 | 9.9 | 16.6 | 78.3 | 34.9 | |

| Fixed retriever | 0.823 | 39.9 | 14.3 | 72.4 | 42.2 | 45.3 | 17.9 | 90.0 | 51.1 |

| ADist | 0.780 | 40.9 | 14.4 | 73.8 | 43.0 | 46.2 | 17.2 | 90.9 | 51.4 |

| EMDR2 | 0.783 | 43.3 | 14.6 | 72.1 | 43.3 | 44.9 | 18.3 | 85.7 | 49.6 |

| PDist | 0.783 | 45.0 | 15.0 | 77.0 | 45.7 | 44.9 | 17.9 | 90.2 | 51.0 |

| LOOP | 0.766 | 41.8 | 15.0 | 74.4 | 43.7 | 47.1 | 17.9 | 87.5 | 50.8 |

Fine-tuning. When performing a downstream task, either in a few-shot setting or with a large training set, we employ fine-tuning to adapt our models to these tasks. For the few-shot KILT ablation experiments, we perform a fixed number of fine-tuning iterations, instead of using early-stopping. More precisely, we decided to use 50 iterations for the 64-shot setting and 200 iterations in the 1024-shot setting. In both cases, we use a batch size of 32 examples, a learning rate of $4\times10^{-5}$ with linear decay and 5 warmup steps for both the reader and the retriever.

微调 (Fine-tuning)。在执行下游任务时,无论是少样本场景还是大规模训练集场景,我们都采用微调技术使模型适配具体任务。在少样本KILT消融实验中,我们采用固定次数的微调迭代,而非早停机制。具体而言,64样本场景设置50次迭代,1024样本场景设置200次迭代。两种情况下均使用32样本的批量大小,学习率设为$4\times10^{-5}$并采用线性衰减策略,同时为阅读器(retriever)和检索器(reader)设置5次预热步数。

Unlabeled datasets. Finally, we discuss the unlabeled text datasets that we use to train our models, which form the retrieval index. First, we consider the Dec. 20, 2021 Wikipedia dump, for which we keep the lists and infoboxes, which are linearized by adding a semi-colon separator between the entries. We split articles by section, and split long sections into passages of equal sizes and containing less than 200 words. This leads to a total of 37M passages, containing 78 words in average. We also use documents from the 2020-10 common crawl dump, pre processed with the CCNet pipeline (Wenzek et al., 2020). We perform additional document filtering, in a similar fashion to Gopher (Rae et al., 2021). More precisely, we filter documents based on document length, average word length, ratio of alphanumeric characters and number of repeated tokens. This leads to a total of 350M passages. The same passages are used for the index and model pre-training. During pre-training, we ensure the passage we are training on is filtered out from the retrieved documents, to prevent the model from simply retrieving the passage it is de-nosing/generating, and trivially using it to solve the pre-training task.

无标注数据集。最后,我们讨论用于训练模型并构成检索索引的无标注文本数据集。首先,我们采用2021年12月20日的维基百科数据转储,保留列表和信息框,并通过在条目间添加分号分隔符进行线性化处理。文章按章节分割,过长的章节被切分为长度相等且不超过200词的段落,最终得到总计3700万段落,平均每段含78词。此外,我们还使用2020年10月Common Crawl转储的文档,这些数据经过CCNet流程预处理(Wenzek et al., 2020)。我们参照Gopher(Rae et al., 2021)的方法进行额外文档过滤,具体包括基于文档长度、平均词长、字母数字字符比例及重复token数量的筛选,最终获得3.5亿段落。这些段落同时用于索引构建和模型预训练。在预训练阶段,我们会确保当前训练段落已从检索文档中排除,以防止模型直接检索到正在去噪/生成的段落并借此简单完成预训练任务。

4.3 Pre-training loss and tasks

4.3 预训练损失与任务

We start our ablation study by comparing different pre-training tasks, and objective functions to jointly train the retriever and the language model. Our goal here is to answer the following research questions:

我们通过比较不同的预训练任务和目标函数来联合训练检索器和大语言模型,从而开始消融研究。我们的目标是回答以下研究问题:

(RQ 1) Does jointly pre-training the whole model lead to better few-shot performance? (RQ 2) What is the best objective function for the retriever, and the best pretext task?

(RQ 1) 联合预训练整个模型是否能带来更好的少样本性能?

(RQ 2) 检索器的最佳目标函数和最佳前置任务是什么?

We start by comparing the training objectives of the retriever, introduced in Section 2.2, by pre-training models using the masked language modelling task. We evaluate these models on a subset of the 64-shot and 1024-shot KILT benchmark: Natural Questions, FEVER and Wizard of Wikipedia, along with two baselines: a ‘closed-book” (i.e. non-augmented T5) baseline, pre-trained on the same data, and initialized from Contriever and T5-lm-adapt. We report results in Table 1. First, we note the poor performance of the closed-book baseline, indicating the importance of augmentation. Next, we observe that pre-training our model with retrieval is important to obtain good performance on few-shot tasks. Indeed, all models that include retrieval during pre-training strongly outperform the baseline without joint pre-training. Next, we compare a model that was pre-trained with a fixed retriever, and models using the various retriever training objectives. On the MLM validation metric corresponding to the pre-training objective, we observe that jointly training the retriever leads to strong improvements. This effect tends to be less marked on 64-shot downstream tasks, and almost non-existent for 1024-shot. We believe that this is evidence that the biggest impact of pre-training is on the language model, which learns to use and aggregate information from the retrieved documents. Lastly, we do not observe significant systematic differences between the different retriever training objectives. We thus decide adopt use Perplexity Distillation for subsequent experiments, as it tends to be more stable than EMDR $_{,^{-}}^{2}$ or ADist, and more computationally efficient than LOOP.

我们首先通过使用掩码语言建模任务预训练模型,比较第2.2节介绍的检索器训练目标。在64样本和1024样本的KILT基准测试子集(Natural Questions、FEVER和Wizard of Wikipedia)上评估这些模型,同时设置两个基线:基于相同数据预训练的"闭卷"(即未增强的T5)基线,以及从Contriever和T5-lm-adapt初始化的模型。结果如表1所示。首先,闭卷基线的较差表现印证了增强的重要性。其次,我们发现通过检索进行模型预训练对少样本任务的良好表现至关重要——所有在预训练阶段包含检索的模型都显著优于未联合预训练的基线。接着比较了使用固定检索器预训练的模型与采用不同检索器训练目标的模型。在与预训练目标对应的MLM验证指标上,联合训练检索器带来了显著提升,但这种效果在64样本下游任务中较弱,在1024样本任务中几乎消失。我们认为这表明预训练最主要的影响是让语言模型学会利用和整合检索文档的信息。最后,不同检索器训练目标之间未呈现显著系统性差异,因此后续实验采用Perplexity Distillation方法,因其比EMDR$_{,^{-}}^{2}$或ADist更稳定,且计算效率高于LOOP。

Table 2: Pretext task ablation. We compare different pretext tasks, used to jointly pre-train our models. Examples are randomly sampled from the training set of the KILT version of the dataset. We report the exact match on Natural Questions, the F1 score on Wizard of Wikipedia and the accuracy on FEVER.

| 64-shot | 1024-shot | |||||||

| NQ | Wow | FEVER | Avg. | NQ | WoW | FEVER | Avg. | |

| Prefix Language Modelling | 41.0 | 14.5 | 64.9 | 40.1 | 44.7 | 17.9 | 86.0 | 49.5 |

| Masked Language Modelling | 42.7 | 14.9 | 69.7 | 42.4 | 44.7 | 18.3 | 88.8 | 50.6 |

| Title-to-section generation | 41.1 | 15.2 | 66.1 | 40.8 | 45.4 | 17.9 | 84.6 | 49.3 |

表 2: 预训练任务消融实验。我们比较了用于联合预训练模型的不同预训练任务。示例随机采样自数据集KILT版本的训练集。我们报告了Natural Questions的精确匹配率、Wizard of Wikipedia的F1分数以及FEVER的准确率。

| 64-shot | 1024-shot | |||||||

|---|---|---|---|---|---|---|---|---|

| NQ | WoW | FEVER | Avg. | NQ | WoW | FEVER | Avg. | |

| Prefix Language Modelling | 41.0 | 14.5 | 64.9 | 40.1 | 44.7 | 17.9 | 86.0 | 49.5 |

| Masked Language Modelling | 42.7 | 14.9 | 69.7 | 42.4 | 44.7 | 18.3 | 88.8 | 50.6 |

| Title-to-section generation | 41.1 | 15.2 | 66.1 | 40.8 | 45.4 | 17.9 | 84.6 | 49.3 |

Table 3: Index content ablation. In this table, we report results for models where the content of the index was changed between the pre-training and the fine-tuning.

| 64-shot | 1024-shot | ||||||||

| Index | Training data | NQ | WoW | FEVER | Avg. | NQ | WoW | FEVER | Avg. |

| Wiki | Wiki | 42.7 | 14.9 | 69.7 | 42.4 | 44.7 | 18.3 | 88.8 | 50.6 |

| Wiki | CC | 40.9 | 15.3 | 67.3 | 41.2 | 44.8 | 18.4 | 88.1 | 50.4 |

| CC | Wiki | 32.9 | 14.5 | 72.1 | 39.8 | 37.8 | 17.1 | 85.8 | 46.9 |

| CC | CC | 38.4 | 14.9 | 70.1 | 41.1 | 42.0 | 17.3 | 88.9 | 49.4 |

表 3: 索引内容消融实验。本表展示了在预训练和微调阶段改变索引内容后的模型结果。

| Index | Training data | NQ | WoW | FEVER | Avg. | NQ | WoW | FEVER | Avg. |

|---|---|---|---|---|---|---|---|---|---|

| Wiki | Wiki | 42.7 | 14.9 | 69.7 | 42.4 | 44.7 | 18.3 | 88.8 | 50.6 |

| Wiki | CC | 40.9 | 15.3 | 67.3 | 41.2 | 44.8 | 18.4 | 88.1 | 50.4 |

| CC | Wiki | 32.9 | 14.5 | 72.1 | 39.8 | 37.8 | 17.1 | 85.8 | 46.9 |

| CC | CC | 38.4 | 14.9 | 70.1 | 41.1 | 42.0 | 17.3 | 88.9 | 49.4 |

Next, we compare the different self-supervised pretext tasks introduced in Section 2.3 in Table 2. Here we observe similar results for all three tasks, with a small advantage for masked language modelling. Thus, in what follows, we adopt masked language modelling for pre-training.

接下来,我们在表2中对比第2.3节介绍的三种自监督预训练任务。结果显示所有任务表现相近,其中掩码语言建模 (masked language modelling) 略占优势。因此后续实验均采用掩码语言建模进行预训练。

Finally, we consider different combinations of data sources—Wikipedia and common crawl—for the index and training data during pre-training. In all cases, we use the Wikipedia 2021 dump as the index when performing few-shot fine-tuning. We report results in Table 3. First, we observe that using a Wikipedia-based index leads to better downstream performance. There could be two explanations for this: first, as we use Wikipedia for the few-shot tasks, the model might be better adapted when trained using the same data. Another explanation might be that Wikipedia is a higher-quality and denser source of knowledge than common crawl. Second, when using a common crawl index, we observe that pre-training on Wikipedia data leads to lower performance than using common crawl data. We believe that the primary reason is that the distribution mismatch between the two domains leads to generally-less relevant retrieved documents. In turn, this probably means that the pre-training is less efficient, because the language model does not leverage as much information from the documents. In the following, we thus decide to combine the data from both domains for both the index and the pre-training data.

最后,我们考虑了预训练阶段索引数据和训练数据的不同组合——维基百科和Common Crawl。在所有情况下,执行少样本微调时均使用2021年版维基百科转储文件作为索引。结果如表3所示:首先发现基于维基百科的索引能带来更好的下游性能,可能有两个原因:(1) 由于少样本任务采用维基百科数据,模型在相同数据训练时适应度更高;(2) 维基百科相比Common Crawl具有更高质量和更密集的知识密度。其次,当使用Common Crawl索引时,预训练采用维基百科数据的效果反而低于Common Crawl数据。我们认为主要原因是两个领域的分布差异导致检索文档的相关性普遍降低,进而使得预训练效率下降——因为语言模型无法从文档中充分提取有效信息。基于此,我们最终决定在索引和预训练数据中同时采用两个领域的数据组合。

表3:

4.4 Fine-tuning

4.4 微调

In this section, we perform an ablation study on how to apply our models on downstream tasks, which relies on fine-tuning. In particular, we want to investigate the following research question:

在本节中,我们对如何将模型应用于依赖微调的下游任务进行了消融研究。具体而言,我们重点探究以下研究问题:

(RQ 3) How to efficiently fine-tune Atlas on tasks with limited training data?

(RQ 3) 如何在训练数据有限的任务上高效微调Atlas?

Table 4: Retriever fine-tuning ablation. Here, we compare different strategies to fine-tune the retriever in a few-shot setting.

| 64-shot | 1024-shot | |||||||

| NQ | Wow | FEVER | Avg. | NQ | Wow | FEVER | Avg. | |

| Standard fine-tuning | 44.3 | 14.9 | 73.2 | 44.1 | 47.0 | 18.4 | 89.7 | 51.7 |

| Top-100 re-ranking | 44.2 | 14.6 | 75.4 | 44.7 | 47.1 | 18.7 | 88.9 | 51.6 |

| Query-side fine-tuning | 45.0 | 15.0 | 77.0 | 45.7 | 44.9 | 17.9 | 90.2 | 51.0 |

| Fixed retriever | 36.8 | 14.5 | 72.0 | 41.1 | 38.0 | 17.7 | 89.3 | 48.3 |

表 4: 检索器微调消融实验。这里我们比较了在少样本设置下微调检索器的不同策略。

| 64-shot | 1024-shot | |||||||

|---|---|---|---|---|---|---|---|---|

| NQ | Wow | FEVER | Avg. | NQ | Wow | FEVER | Avg. | |

| 标准微调 | 44.3 | 14.9 | 73.2 | 44.1 | 47.0 | 18.4 | 89.7 | 51.7 |

| Top-100重排序 | 44.2 | 14.6 | 75.4 | 44.7 | 47.1 | 18.7 | 88.9 | 51.6 |

| 查询端微调 | 45.0 | 15.0 | 77.0 | 45.7 | 44.9 | 17.9 | 90.2 | 51.0 |

| 固定检索器 | 36.8 | 14.5 | 72.0 | 41.1 | 38.0 | 17.7 | 89.3 | 48.3 |

Table 5: Performance on MMLU as a function of model size.

| 5-shot | 5-shot t (multi-task) | Full / Transfer | |||||||

| 770M | 3B | 11B | 770M | 3B | 11B | 770M | 3B | 11B | |

| Closed-book T5 | 29.2 | 35.7 | 36.1 | 26.5 | 40.0 | 43.5 | 42.4 | 50.4 | 54.0 |

| ATLAS | 38.9 | 42.3 | 43.4 | 42.1 | 48.7 | 56.4 | 56.3 | 59.9 | 65.8 |

| △ | +9.8 | +6.6 | +7.3 | +15.6 | +8.7 | +12.9 | +13.9 | +9.5 | +11.8 |

表 5: MMLU性能随模型规模的变化

| 5-shot | 5-shot t (multi-task) | Full / Transfer | |||||||

|---|---|---|---|---|---|---|---|---|---|

| 770M | 3B | 11B | 770M | 3B | 11B | 770M | 3B | 11B | |

| Closed-book T5 | 29.2 | 35.7 | 36.1 | 26.5 | 40.0 | 43.5 | 42.4 | 50.4 | 54.0 |

| ATLAS | 38.9 | 42.3 | 43.4 | 42.1 | 48.7 | 56.4 | 56.3 | 59.9 | 65.8 |

| △ | +9.8 | +6.6 | +7.3 | +15.6 | +8.7 | +12.9 | +13.9 | +9.5 | +11.8 |

To answer this question, we compare the different strategies to fine-tune the retriever module, described in Section 2.4. We report results in Table 4. First, as for pre-training, we observe that keeping the retriever fixed during fine-tuning leads to a significant performance drops, for both 64- and 1024-shot settings. Second, the re-ranking strategy (row 2) leads to very similar results to fully updating the index (row 1), while being significantly more efficient. Lastly, fine-tuning only the query encoder also leads to strong results: in particular, in the 64-shot setup, this is slightly stronger than performing full fine-tuning, which we attribute to there being less opportunity for over-fitting. On the other hand, on the 1024-shot setting, performing a full fine-tuning leads to stronger results, especially on Natural Questions. In the following, we thus use query-side fine-tuning for experiments with small numbers of examples, and standard fine-tuning for larger datasets.

为回答这个问题,我们比较了第2.4节中描述的微调检索器模块的不同策略。结果如表4所示。首先,与预训练类似,我们观察到在微调期间固定检索器会导致性能显著下降,无论是64样本还是1024样本设置。其次,重排序策略(第2行)与完全更新索引(第1行)的结果非常接近,但效率显著更高。最后,仅微调查询编码器也能取得强劲效果:特别是在64样本设置中,其表现略优于完全微调,我们认为这是因为过拟合的机会更少。另一方面,在1024样本设置中,完全微调能带来更好的结果,尤其在Natural Questions数据集上。因此,后续实验中我们对少量样本采用查询端微调,对较大数据集采用标准微调。

4.5 Training and evaluating Atlas

4.5 Atlas的训练与评估

In this section, we apply the findings from the ablations of the previous sections to train a family of Atlas models, ranging from 770M to 11B parameters. More specifically, we use the Perplexity Distillation objective function, along with the masked language modelling pretext task. We pre-train these models using a mix of Wikipedia and Common Crawl data, for both the training data and content of the index. We retrieve 20 documents, and update the index every 2,500 steps and perform re-ranking of the top-100 documents. We pre-train models for 10,000 iterations using AdamW with a batch size of 128.

在本节中,我们应用前几章节的消融实验结果,训练了参数量从7.7亿到110亿不等的Atlas模型系列。具体而言,我们采用困惑度蒸馏(Perplexity Distillation)目标函数,并结合掩码语言建模(masked language modelling)前置任务。预训练数据同时使用Wikipedia和Common Crawl的混合数据集作为训练数据与索引内容,每次检索20篇文档,每2,500步更新一次索引并对Top-100文档进行重排序。所有模型均采用AdamW优化器,以128的批量大小进行了10,000次迭代预训练。

4.5.1 MMLU Results

4.5.1 MMLU 结果

As mentioned in section 4.1, we consider four setting for MMLU: 1) a zero-shot setting where we directly apply the pretrained model with no few-shot finetuning 2) a 5-shot setting, where we finetune a model using 5 training examples for each of the 57 domains 3) a 5-shot multitask setting, where, rather than finetuning a model independently for each domain, we train a single model to perform all tasks and 4) a setting with access to a number of auxiliary datasets, with 95K total training examples. We train the models to generate the letter corresponding to the correct answer option (‘A’, ‘B’, ‘C’ or ‘D’), and pick the answer with the most likely of the 4 letters at test time. Full technical details can be found in appendix A.1.

如第4.1节所述,我们为MMLU考虑了四种设置:1) 零样本设置,直接应用未经少样本微调的预训练模型;2) 5样本设置,针对57个领域中的每个领域使用5个训练样本进行模型微调;3) 5样本多任务设置,不独立微调每个领域的模型,而是训练单一模型处理所有任务;4) 使用包含9.5万训练样本的辅助数据集。我们将模型训练为生成对应正确答案选项的字母('A'、'B'、'C'或'D'),并在测试时选择4个字母中概率最高的答案。完整技术细节见附录A.1。

Performance vs Parameters. We start by comparing Atlas to closed-book models of different sizes for 5-shot, 5-shot multitask and the full setting, and report results in Table 5. Across these settings, Atlas outperforms the closed-book baselines by between 6.6 and 15.6 points, demonstrating consistent utility of retrieval for few-shot language understanding across 57 domains. The closed-book T5 struggles to perform significantly better than random $(25%)$ in few-shot settings with 770M parameters, whereas the equivalent Atlas achieves around $40%$ , significantly better than random, despite its small size. All models improve with more data, but interestingly, the 770M models do not benefit as much from few-shot multitask learning compared to larger models (for closed-book, it actually loses 3 points) suggesting smaller models struggle to grasp the synergies between the tasks in the few-shot setting. Larger models exploit the multi-task setting well, with Atlas improving more than closed-book. For example, Atlas-11B improves by 13 points ( $43.4\rightarrow$ 56.4), but equivalent closed-book only improves by 7 $36.1\rightarrow43.5$ ). Finally, on the transfer learning setting, all models improve, but the relative gaps between closed-book at Atlas models remain similar.

性能与参数对比。我们首先将Atlas与不同规模的闭卷模型在5样本、5样本多任务及完整设置下进行对比,结果如表5所示。在这些设置中,Atlas以6.6至15.6分的优势超越闭卷基线模型,证明了检索机制在57个领域的少样本语言理解任务中具有持续效用。拥有7.7亿参数的闭卷T5模型在少样本设置中表现仅略高于随机水平$(25%)$,而同等规模的Atlas模型能达到约$40%$的准确率,显著优于随机水平。所有模型都随数据量增加而提升,但有趣的是,与更大规模模型相比,7.7亿参数模型从少样本多任务学习中获益较少(闭卷版本反而下降3分),这表明小模型难以在少样本设置中把握任务间的协同效应。大规模模型能更好地利用多任务设置,其中Atlas的改进幅度大于闭卷模型。例如Atlas-110亿参数版本提升13分($43.4\rightarrow$56.4),而同规模闭卷模型仅提升7分($36.1\rightarrow43.5$)。在迁移学习设置中,所有模型均有提升,但闭卷模型与Atlas模型之间的相对差距保持稳定。

Table 6: Standard vs de-biased inference for MMLU These results are reported for Atlas-11B, using cyclic permutations for de-biasing, which increases inference costs by a factor of $4\times$ .

| Zero-shot | 5-shot | 5-shot (multi-task) | Full 1/T Transfer | |

| Standard Inference | 36.8 | 43.4 | 56.4 | 65.8 |

| De-biased Inference | 47.1 | 47.9 | 56.6 | 66.0 |

表 6: MMLU标准推理与去偏推理对比 这些结果是针对Atlas-11B模型报告的,使用循环排列进行去偏处理,这会使推理成本增加 $4\times$ 。

| 零样本 | 少样本(5次) | 少样本(5次,多任务) | 完整1/T迁移 | |

|---|---|---|---|---|

| 标准推理 | 36.8 | 43.4 | 56.4 | 65.8 |

| 去偏推理 | 47.1 | 47.9 | 56.6 | 66.0 |

De-biasing. When finetuning, we permute which answer option appears with which answer letter to reduce over-fitting and encourage a uniform prior over answer letters. However, the model may still exhibit a bias towards some letters, especially in few-shot settings, so we also include a second ‘de-biased’ inference mode in addition the standard inference used above. Here, we run 4 forward passes, one for each cyclic permutation of the answer letter-answer option assignment in the question, e.g. the answer option assigned to letter ‘A’ becomes ‘B’, what was ‘B’ becomes ‘C’ etc.3 We then sum the 4 probabilities to obtain the final prediction, which reduces spurious bias towards one of the answer letters (further details in appendix A.1). The results are shown in Table 6. We find that in zero-shot and 5-shot settings, de-biasing is very effective, improving results by 10.3 and 4.5 points respectively. When more training data is available, the need for de-biasing decreases, leading to only 0.2 point improvement in the multi-task and full data settings.

去偏。在微调时,我们会随机排列答案选项与字母的对应关系,以减少过拟合并促使模型对答案字母保持均匀先验。然而,模型仍可能对某些字母表现出偏好(尤其在少样本场景下),因此除上述标准推理模式外,我们还引入了第二种"去偏"推理模式。该模式下,我们会执行4次前向计算,每次对应问题中答案字母-选项分配的循环置换(例如原分配给字母'A'的选项改为'B','B'改为'C'等)[3]。最终将4次概率求和得到预测结果,以此降低对特定字母的虚假偏好(详见附录A.1)。结果如表6所示:零样本和5样本场景下去偏效果显著,分别提升10.3和4.5个百分点;当训练数据量增加时(多任务和全数据场景),去偏收益降至仅0.2个百分点。

Comparison to published works Next, we compare our Atlas-11B results with de-biasing to recently reported results with state-of-the-art large language models such as GPT-3 or Chinchilla, which required significantly more amount of computation to train. We report results in Table 7. We find that Atlas is able to perform significantly better than random in zero-shot, and in conjunction with de-biased inference, achieves zero-shot scores that exceed 5-shot results reported with GPT3 in the literature (47.1% vs $43.9%$ ) (Hendrycks et al., 2021). For the 5-shot setting, Atlas outperforms GPT-3 by 4%, while using $15\times$ less parameters, and $10\times$ less pre-training compute.4 When multitask-training on the combined 5-shot data, Atlas improves to $56.6%$ close to the 5-shot performance of Gopher $(60.0%)$ ). Finally, on the full data setting, where we train on auxiliary data recommended by the MMLU authors, Atlas reaches an overall accuracy of $65.6%$ , close to the state-of-the-art. Interestingly, in this setup, Atlas significantly outperforms GPT-3, while on the 5-shot setting, their performance is similar.

与已发表工作的对比

接下来,我们将经过去偏处理的Atlas-11B模型结果与最新报道的先进大语言模型(如GPT-3或Chinchilla)进行对比,后者的训练计算量显著更大。结果如表7所示。我们发现Atlas在零样本场景下的表现显著优于随机基线,结合去偏推理后,其零样本得分(47.1%)超越了文献中GPT-3的5样本结果(43.9%)(Hendrycks et al., 2021)。在5样本设定下,Atlas以少15倍的参数量和10倍的预训练计算量,性能仍高出GPT-3达4%。当在组合5样本数据上进行多任务训练时,Atlas提升至56.6%,接近Gopher的5样本表现(60.0%)。最后,在MMLU作者推荐的辅助数据上进行全数据训练时,Atlas达到65.6%的整体准确率,接近当前最优水平。值得注意的是,在此设定下Atlas显著优于GPT-3,而在5样本设定中两者性能相近。

(注:根据规则要求,已对特殊符号/公式保持原样,表格引用格式转换为"表7",引用文献保留原始标注[Hendrycks et al., 2021],技术术语如"zero-shot"译为"零样本"并在首次出现时标注英文)

4.5.2 Open-domain Question Answering Results

4.5.2 开放域问答结果

Next we evaluate Atlas on two open-domain question answering benchmarks: Natural Questions and TriviaQA. We compare to prior work, both in a few-shot setting using 64 examples, and using the full training set, and report results in Table 8. On these benchmarks, which require high-degree of mem or is ation, we clearly see the benefits of retrieval-augmentation. Atlas-11B obtains state-of-the-art results on 64-shot question answering, for both Natural Questions and TriviaQA. In particular, it outperforms significantly larger models, such as PaLM, or models that required significantly more training compute such as Chinchilla. When using the full training set, Atlas also obtains state-of-the-art results, for example improving the accuracy on Natural Questions from $55.9%$ to $60.4%$ . This result is obtained using an index comprised of CCNet and the December 2021 Wikipedia corpora, our default setting for the index. In section 5.2 we consider using indexes composed of Wikipedia corpus archived at different dates, and demonstrate an additional $+3.6%$ on Natural Questions when using an index which is temporally matched to Natural Questions. We report performance as a function of model size as well as detailed hyper parameters in Appendix A.2.

接下来我们在两个开放域问答基准测试上评估Atlas:Natural Questions和TriviaQA。我们将与先前的工作进行比较,包括使用64个示例的少样本设置和使用完整训练集的情况,并在表8中报告结果。在这些需要高度记忆化的基准测试上,我们清楚地看到了检索增强的优势。Atlas-11B在64样本问答任务上取得了最先进的结果,无论是在Natural Questions还是TriviaQA上。特别是,它的表现显著优于规模更大的模型,如PaLM,或需要更多训练计算的模型,如Chinchilla。当使用完整训练集时,Atlas同样取得了最先进的结果,例如将Natural Questions的准确率从$55.9%$提升至$60.4%$。这一结果是使用由CCNet和2021年12月维基百科语料库组成的索引(我们的默认索引设置)获得的。在第5.2节中,我们考虑了使用不同日期存档的维基百科语料库构建索引,并展示了当使用与Natural Questions时间匹配的索引时,准确率可进一步提高$+3.6%$。我们在附录A.2中报告了性能随模型规模变化的函数以及详细的超参数。

Table 7: Comparison to state-of-the-art on MMLU. ∗For the 5-shot setting, Atlas uses fine-tuning, while previous works use in-context learning. The Atlas model uses de-biased inference. Train FLOPS refers to total the amount of computation necessary to train the model, including pre-training and/or fine-tuning.

| Setting | Model | Params | Train FLOPS | All | Hum. | Soc. Sci. | STEM | Other |

| zero-shot | ATLAS | 11B | 3.5e22 | 47.1 | 43.6 | 54.1 | 38.0 | 54.4 |

| 5-shot | GPT-3 | 175B | 3.1e23 | 43.9 | 40.8 | 50.4 | 36.7 | 48.8 |

| Gopher | 280B | 5.0e23 | 60.0 | 56.2 | 71.9 | 47.4 | 66.1 | |

| Chinchilla | 70B | 5.0e23 | 67.5 | 63.6 | 79.3 | 55.0 | 73.9 | |

| ATLAS | 11B | 3.5e22 | 47.9 | 46.1 | 54.6 | 38.8 | 52.8 | |

| 5-shot (multi-task) | ATLAS | 11B | 3.5e22 | 56.6 | 50.1 | 66.4 | 46.4 | 66.2 |

| Full / Transfer | UnifiedQA | 11B | 3.3e22 | 48.9 | 45.6 | 56.6 | 40.2 | 54.6 |

| GPT-3 | 175B | 3.1e23 | 53.9 | 52.5 | 63.9 | 41.4 | 57.9 | |

| ATLAS | 11B | 3.5e22 | 66.0 | 61.1 | 77.2 | 53.2 | 74.4 |

表 7: MMLU 最新技术对比。*在 5-shot 设置中,Atlas 使用微调方法,而先前工作采用上下文学习。Atlas 模型使用去偏推理。训练 FLOPS 指训练模型所需的总计算量,包括预训练和/或微调。

| 设置 | 模型 | 参数量 | 训练 FLOPS | 综合 | 人文 | 社科 | STEM | 其他 |

|---|---|---|---|---|---|---|---|---|

| 零样本 | ATLAS | 11B | 3.5e22 | 47.1 | 43.6 | 54.1 | 38.0 | 54.4 |

| 5-shot | GPT-3 | 175B | 3.1e23 | 43.9 | 40.8 | 50.4 | 36.7 | 48.8 |

| 5-shot | Gopher | 280B | 5.0e23 | 60.0 | 56.2 | 71.9 | 47.4 | 66.1 |

| 5-shot | Chinchilla | 70B | 5.0e23 | 67.5 | 63.6 | 79.3 | 55.0 | 73.9 |

| 5-shot | ATLAS | 11B | 3.5e22 | 47.9 | 46.1 | 54.6 | 38.8 | 52.8 |

| 5-shot (多任务) | ATLAS | 11B | 3.5e22 | 56.6 | 50.1 | 66.4 | 46.4 | 66.2 |

| 全量/迁移 | UnifiedQA | 11B | 3.3e22 | 48.9 | 45.6 | 56.6 | 40.2 | 54.6 |

| 全量/迁移 | GPT-3 | 175B | 3.1e23 | 53.9 | 52.5 | 63.9 | 41.4 | 57.9 |

| 全量/迁移 | ATLAS | 11B | 3.5e22 | 66.0 | 61.1 | 77.2 | 53.2 | 74.4 |

Table 8: Comparison to state-of-the-art on question answering. We report results on Natural Questions, and on TriviaQA for both the filtered set, commonly used for open-domain question answering and the unfiltered hidden set for which evaluation is accessible online: https://competitions.codalab.org/ competitions/17208. For the 64-shot setting, our model uses fine-tuning, while the other models use prompting.

| Model | NQ | TriviaQA filtered | TriviaQA unfiltered | |||

| 64-shot | Full | 64-shot | Full | 64-shot | Full | |

| GPT-3 Brown et al.,2020 | 29.9 | 71.2 | ||||

| Gopher (Rae et al.,2021) | 28.2 | 57.2 | 61.3 | |||

| Chinchilla (Hoffmann et al., 2022 | 35.5 | 64.6 | 72.3 | |||

| PaLM (Chowdhery et al., 2022) | 39.6 | 81.4 | ||||

| RETRO (Borgeaud et al., 2021) | 45.5 | |||||

| FiD (Izacard & Grave, 2020) | 51.4 | 67.6 | 80.1 | |||

| FiD-KD Izacard & Grave, 2021 | 54.7 | 73.3 | ||||

| R2-D2 (] (Fajcik et al., 2021) | 55.9 | 69.9 | ||||

| ATLAS | 42.4 | 60.4 | 74.5 | 79.8 | 84.7 | 89.4 |

表 8: 问答任务与当前最优模型的对比。我们在Natural Questions和TriviaQA数据集上报告结果,其中TriviaQA包含常用于开放域问答的过滤集和可在线上评估的非过滤隐藏集:https://competitions.codalab.org/competitions/17208。在64样本设置中,我们的模型使用微调方法,其他模型则使用提示方法。

| 模型 | NQ 64-shot | NQ Full | TriviaQA filtered 64-shot | TriviaQA filtered Full | TriviaQA unfiltered 64-shot | TriviaQA unfiltered Full |

|---|---|---|---|---|---|---|

| GPT-3 (Brown et al., 2020) | 29.9 | 71.2 | ||||

| Gopher (Rae et al., 2021) | 28.2 | 57.2 | 61.3 | |||

| Chinchilla (Hoffmann et al., 2022) | 35.5 | 64.6 | 72.3 | |||

| PaLM (Chowdhery et al., 2022) | 39.6 | 81.4 | ||||

| RETRO (Borgeaud et al., 2021) | 45.5 | |||||

| FiD (Izacard & Grave, 2020) | 51.4 | 67.6 | 80.1 | |||

| FiD-KD (Izacard & Grave, 2021) | 54.7 | 73.3 | ||||

| R2-D2 (Fajcik et al., 2021) | 55.9 | 69.9 | ||||

| ATLAS | 42.4 | 60.4 | 74.5 | 79.8 | 84.7 | 89.4 |

Atlas also compares favorably to recent work exploring retrieval-augmented few-shot question answering with very large models. Lazaridou et al. (2022) explore Natural Questions in a 15-shot setup using Gopher, augmenting questions with 50 passages retrieved using Google Search. This method consists of generating 4 candidate answers from each retrieved passages, and then re-ranking using either a score inspired by RAG (Lewis et al., 2020) or a more expensive approach. This method (not shown in our tables) achieves exact match scores of 32.7% (RAG) and $38.4%$ (Ensemble), requiring 50 (RAG) or 450 (Ensemble) forward passes of Gopher-280B per test-time question. Atlas, using the same 15 training examples and 50 passages achieves 38.7 EM, despite having 25 $\times$ fewer parameters, and requiring comparatively negligible compute.

Atlas在与近期探索检索增强少样本问答的超大模型研究对比中表现优异。Lazaridou等(2022) 使用Gopher模型在15样本设置下研究Natural Questions数据集,通过Google Search检索50个段落增强问题。该方法对每个检索段落生成4个候选答案,再使用RAG (Lewis等, 2020) 启发的评分或更高成本方法进行重排序。该方案 (未在表格中显示) 取得32.7% (RAG) 和$38.4%$ (集成) 的精确匹配分数,每个测试问题需调用Gopher-280B模型50次 (RAG) 或450次 (集成) 前向计算。而Atlas在相同15个训练样本和50个段落条件下,以25$\times$更少的参数量实现38.7 EM分数,且所需计算量相对可忽略。

4.5.3 FEVER Results

4.5.3 FEVER 结果

We report results on the original 3-class FEVER fact checking test set in Table 9. We consider a 64-shot setting, with training examples uniformly sampled from the full training set. Unlike the development and test sets, the train set is imbalanced, with more positive labels than negative, posing a challenge for few-shot learning. In this setting, we achieve an accuracy of $64.3%$ . We also report a 15-shot setting, with 5 examples uniformly sampled from each class to compare with published results from Gopher (Rae et al., 2021), where Atlas scores $56.2%$ , outperforming Gopher by 5.1 points. Lastly we fine-tune our model on the full training set, and achieve a score of 78%, within $1.5%$ of the ProoFVer, which uses a specialized architecture, a retriever trained with sentence-level annotations, and is supplied with the Wikipedia corpus released with FEVER, whereas Atlas retrieves from CCNet and the December 2021 Wikipedia dump. If we give Atlas an index comprised of the FEVER Wikipedia corpus, we set a new state-of-the-art of $80.1%$

我们在表9中报告了原始3类FEVER事实核查测试集的结果。我们采用64样本设置,从完整训练集中均匀采样训练样本。与开发和测试集不同,训练集存在类别不平衡问题,正例标签多于负例,这对少样本学习提出了挑战。在此设置下,我们取得了64.3%的准确率。我们还报告了15样本设置(每类均匀采样5个样本)的结果,Atlas达到56.2%的准确率,比Gopher (Rae et al., 2021)发表的成果高出5.1个百分点。最后,我们在完整训练集上微调模型,获得78%的分数,与采用专用架构、基于句子级标注训练的检索器、并使用FEVER配套维基百科语料的ProoFVer相比,差距仅1.5%。值得注意的是,Atlas的检索源是CCNet和2021年12月维基百科快照。当为Atlas提供FEVER维基百科语料构建的索引时,我们以80.1%的准确率创造了新纪录。