DETRs with Collaborative Hybrid Assignments Training

基于协作混合分配训练的DETR模型

Abstract

摘要

In this paper, we observe that in DETR with one-to-one set matching, too few queries are assigned as positive samples. This leads to sparse supervision on the encoder's output, which considerably hurts the discriminative feature learning of the encoder, and likewise hurts attention learning in the decoder. To alleviate this, we present a novel collaborative hybrid assignments training scheme, namely Co-DETR, to learn more efficient and effective DETR-based detectors from versatile label assignment manners. This new training scheme can easily enhance the encoder's learning ability in end-to-end detectors by training multiple parallel auxiliary heads supervised by one-to-many label assignments such as ATSS and Faster RCNN. In addition, we construct extra customized positive queries by extracting the positive coordinates from these auxiliary heads to improve the training efficiency of positive samples in the decoder. In inference, these auxiliary heads are discarded, so our method introduces no additional parameters or computational cost to the original detector and requires no hand-crafted non-maximum suppression (NMS). We conduct extensive experiments to evaluate the effectiveness of the proposed approach on DETR variants, including DAB-DETR, Deformable-DETR, and DINO-Deformable-DETR. The state-of-the-art DINO-Deformable-DETR with Swin-L can be improved from $58.5\%$ to $59.5\%$ AP on COCO val. Surprisingly, incorporated with a ViT-L backbone, we achieve $66.0\%$ AP on COCO test-dev and $67.9\%$ AP on LVIS val, outperforming previous methods by clear margins with much smaller model sizes. Codes are available at https://github.com/Sense-X/Co-DETR.

本文提出一个观察结论:在采用一对一集合匹配的DETR中,被分配为正样本的查询过少会导致编码器输出的监督信号稀疏,从而严重损害编码器的判别性特征学习能力,反之亦然地影响解码器中的注意力学习。为此,我们提出一种创新的协作混合分配训练方案Co-DETR,通过多样化标签分配策略来学习更高效、更强大的基于DETR的检测器。该方案利用ATSS和Faster RCNN等一对多标签分配方法监督多个并行辅助头,可显著增强端到端检测器中编码器的学习能力。此外,通过从这些辅助头提取正样本坐标生成定制化正查询,从而提升解码器中正样本的训练效率。推理时这些辅助头会被移除,因此本方法既不会引入额外参数量和计算成本,也无需人工设计非极大值抑制(NMS)。我们在DAB-DETR、Deformable-DETR和DINO-DeformableDETR等变体上进行了广泛实验:采用Swin-L的先进模型DINO-DeformableDETR在COCO val上的AP从58.5%提升至59.5%;结合ViT-L主干网络时,在COCO test-dev和LVIS val上分别达到66.0%和67.9% AP,以更小模型尺寸显著超越现有方法。代码已开源:https://github.com/Sense-X/Co-DETR。

1. Introduction

1. 引言

Object detection is a fundamental task in computer vision, which requires us to localize each object and classify its category. The seminal R-CNN families [11, 14, 27] and a series of variants [31, 37, 44] such as ATSS [41], RetinaNet [21], FCOS [32], and PAA [17] led to significant breakthroughs in object detection. One-to-many label assignment is their core scheme: each ground-truth box is assigned to multiple coordinates in the detector's output as the supervised target, in cooperation with proposals [11, 27], anchors [21], or window centers [32]. Despite their promising performance, these detectors rely heavily on many hand-designed components such as a non-maximum suppression procedure or anchor generation [1]. To build a more flexible end-to-end detector, DEtection TRansformer (DETR) [1] views object detection as a set prediction problem and introduces the one-to-one set matching scheme based on a transformer encoder-decoder architecture. In this manner, each ground-truth box is assigned to only one specific query, and multiple hand-designed components that encode prior knowledge are no longer needed. This approach introduces a flexible detection pipeline and has encouraged many DETR variants to further improve it. However, the performance of the vanilla end-to-end object detector is still inferior to that of traditional detectors with one-to-many label assignments.

目标检测是计算机视觉中的一项基础任务,要求我们定位物体并分类其类别。开创性的R-CNN系列[11, 14, 27]及其变体[31, 37, 44](如ATSS[41]、RetinaNet[21]、FCOS[32]和PAA[17]推动了目标检测任务的重大突破。这些方法的核心是一对多标签分配机制,即每个真实框会被分配给检测器输出中的多个坐标作为监督目标,与候选框[11, 27]、锚点[21]或窗口中心[32]协同工作。尽管性能优异,这些检测器严重依赖非极大值抑制、锚点生成[1]等人工设计组件。为实现更灵活的端到端检测器,DEtection TRansformer (DETR)[1]将目标检测视为集合预测问题,并基于Transformer编码器-解码器架构引入一对一集合匹配机制。这种方式下,每个真实框仅分配给特定查询(query),无需编码先验知识的多个人工设计组件。该方法开创了灵活的检测流程,催生了许多改进型DETR变体。然而,原始端到端目标检测器的性能仍逊色于采用一对多标签分配的传统检测器。

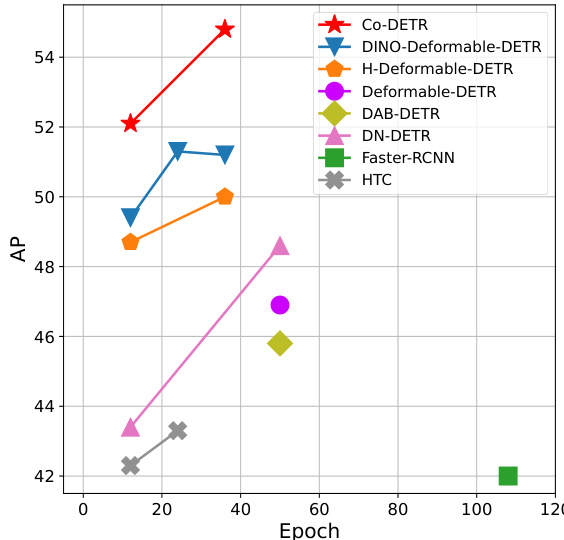

Figure 1. Performance of models with ResNet-50 on COCO val. $\mathcal{C}\mathrm{o}$-DETR outperforms other counterparts by a large margin.

图 1: 使用 ResNet-50 的模型在 COCO val 上的性能。$\mathcal{C}\mathrm{o}$ -DETR 以显著优势超越其他对比模型。

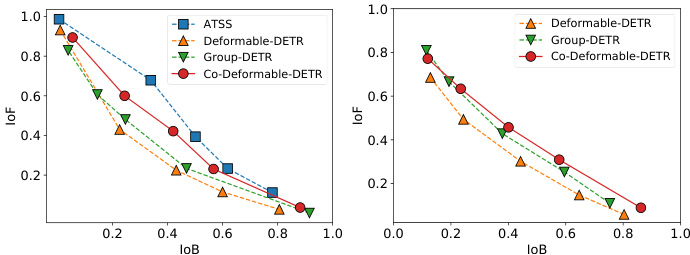

Figure 2. IoF-IoB curves for the feature discriminability score in the encoder and the attention discriminability score in the decoder.

图 2: 编码器中特征判别力得分与解码器中注意力判别力得分的 IoF-IoB 曲线。

In this paper, we try to make DETR-based detectors superior to conventional detectors while maintaining their end-to-end merit. To address this challenge, we focus on an intuitive drawback of one-to-one set matching: it explores fewer positive queries, which leads to severely inefficient training. We analyze this in detail from two aspects, the latent representation generated by the encoder and the attention learning in the decoder. We first compare the discriminability score of the latent features between Deformable-DETR [43] and a one-to-many label assignment method in which we simply replace the decoder with the ATSS head. The feature $l^{2}$-norm at each spatial coordinate is used to represent the discriminability score. Given the encoder's output $\mathcal{F}\in\mathbb{R}^{C\times H\times W}$, we can obtain the discriminability score map $S\in\mathbb{R}^{1\times H\times W}$. An object can be better detected when the scores in the corresponding area are higher. As shown in Figure 2, we plot the IoF-IoB curve (IoF: intersection over foreground, IoB: intersection over background) by applying different thresholds to the discriminability scores (details in Section 3.4). The higher IoF-IoB curve of ATSS indicates that it distinguishes foreground from background more easily. We further visualize the discriminability score map $S$ in Figure 3. The features in some salient areas are fully activated in the one-to-many label assignment method but less explored in one-to-one set matching. To explore decoder training, we also plot the IoF-IoB curve of the cross-attention score in the decoder for Deformable-DETR and for Group-DETR [5], which introduces more positive queries into the decoder. Figure 2 shows that too few positive queries also harm attention learning, and that adding more positive queries in the decoder slightly alleviates this.

本文致力于在保持端到端优势的同时,使基于DETR的检测器性能超越传统检测器。针对这一挑战,我们聚焦于一对一集合匹配的固有缺陷——正样本查询(positive queries)探索不足,这会导致严重的训练低效问题。我们从编码器生成的潜在表示和解码器的注意力学习两方面展开详细分析。

首先比较了Deformable-DETR [43]与一对多标签分配方法(仅将解码器替换为ATSS头)的潜在特征判别力得分。利用每个空间坐标中特征的$l^{2}$-范数表征判别力得分,给定编码器输出$\mathcal{F}\in\mathbb{R}^{C\times H\times W}$,可获得判别力得分图$S\in\mathbb{R}^{1\times H\times W}$。当对应区域得分越高时,目标检测效果越好。如图2所示,通过对判别力得分施加不同阈值(详见3.4节),我们绘制了IoF-IoB曲线(IoF:前景交并比,IoB:背景交并比)。ATSS中更高的IoF-IoB曲线表明其更易区分前景与背景。图3进一步可视化判别力得分图$s$,可见一对多方法能充分激活显著区域特征,而一对一集合匹配对此探索不足。

针对解码器训练分析,我们还基于Deformable-DETR和引入更多正样本查询的Group-DETR [5],绘制了解码器交叉注意力得分的IoF-IoB曲线。图2表明过少的正样本查询会影响注意力学习,而增加解码器中的正样本查询可略微缓解此问题。

This significant observation motivates us to present a simple but effective method, a collaborative hybrid assignment training scheme (Co-DETR). The key insight of $\mathcal{C}\mathrm{o}$-DETR is to use versatile one-to-many label assignments to improve the training efficiency and effectiveness of both the encoder and the decoder. More specifically, we attach auxiliary heads to the output of the transformer encoder. These heads can be supervised by versatile one-to-many label assignments such as ATSS [41], FCOS [32], and Faster RCNN [27]. Different label assignments enrich the supervision on the encoder's output, which forces it to be discriminative enough to support the training convergence of these heads. To further improve the training efficiency of the decoder, we elaborately encode the coordinates of positive samples in these auxiliary heads, including the positive anchors and positive proposals. They are sent to the original decoder as multiple groups of positive queries to predict the pre-assigned categories and bounding boxes. The positive coordinates in each auxiliary head serve as an independent group that is isolated from the other groups. Versatile one-to-many label assignments can introduce abundant (positive query, ground-truth) pairs to improve the decoder's training efficiency. Note that only the original decoder is used during inference, so the proposed training scheme introduces extra overhead only during training.

这一重要发现促使我们提出了一种简单而有效的方法——协作式混合分配训练方案 (Co-DETR)。Co-DETR 的核心思想是通过多样化的一对多标签分配 (one-to-many label assignments) 来提升编码器和解码器的训练效率与效果。具体而言,我们将辅助头 (auxiliary heads) 与 Transformer 编码器的输出进行集成。这些辅助头可以由 ATSS [41]、FCOS [32] 和 Faster RCNN [27] 等多种一对多标签分配方案进行监督。不同的标签分配方式丰富了编码器输出的监督信号,迫使其具备足够判别性以支持这些辅助头的训练收敛。为了进一步提升解码器的训练效率,我们精心编码了这些辅助头中正样本(包括正锚框和正提议框)的坐标信息,并将它们作为多组正查询 (positive queries) 输入原始解码器,用于预测预分配的类别和边界框。每个辅助头中的正样本坐标作为独立组别与其他组别隔离。多样化的一对多标签分配能够引入丰富的(正查询,真实标注)配对,从而提升解码器的训练效率。值得注意的是,推理阶段仅使用原始解码器,因此所提出的训练方案仅在训练时引入额外开销。

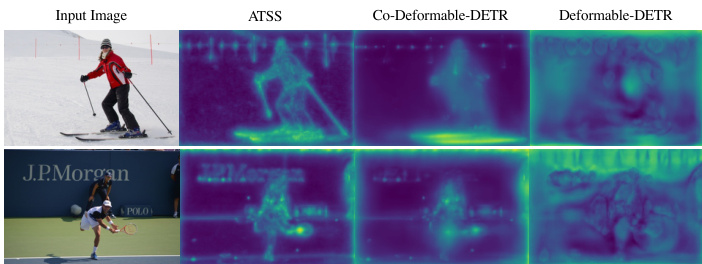

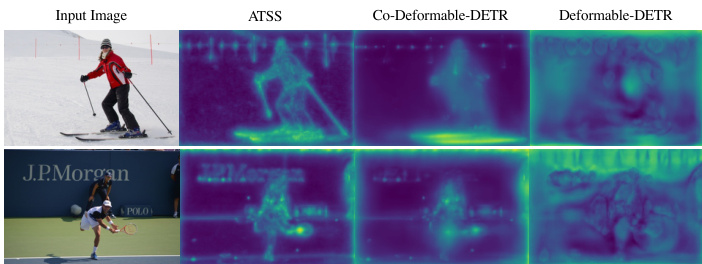

Figure 3. Visualizations of discriminability scores in the encoder.

图 3: 编码器中判别性得分的可视化。

We conduct extensive experiments to evaluate the efficiency and effectiveness of the proposed method. As illustrated in Figure 3, $\mathcal{C}\mathrm{o}$-DETR greatly alleviates the poor feature learning of the encoder under one-to-one set matching. As a plug-and-play approach, it combines easily with different DETR variants, including DAB-DETR [23], Deformable-DETR [43], and DINO-Deformable-DETR [39]. As shown in Figure 1, $\mathcal{C}\mathrm{o}$-DETR achieves faster training convergence and even higher performance. Specifically, we improve the basic Deformable-DETR by $5.8\%$ AP in 12-epoch training and $3.2\%$ AP in 36-epoch training. The state-of-the-art DINO-Deformable-DETR with Swin-L [25] can still be improved from $58.5\%$ to $59.5\%$ AP on COCO val. Surprisingly, incorporated with a ViT-L [8] backbone, we achieve $66.0\%$ AP on COCO test-dev and $67.9\%$ AP on LVIS val, establishing a new state-of-the-art detector with a much smaller model size.

我们进行了大量实验来评估所提方法的效率和效果。如图3所示,$\mathcal{C}\mathrm{o}$-DETR显著改善了一对一集合匹配中编码器特征学习不佳的问题。作为一种即插即用方案,我们轻松将其与多种DETR变体结合,包括DAB-DETR [23]、DeformableDETR [43]和DINO-Deformable-DETR [39]。如图1所示,$\mathcal{C}\mathrm{o}$-DETR实现了更快的训练收敛和更高性能。具体而言,我们将基础版Deformable-DETR在12轮训练中AP提升了5.8%,在36轮训练中AP提升了3.2%。采用Swin-L [25]的顶尖DINO-Deformable-DETR在COCO验证集上仍可从58.5% AP提升至59.5% AP。令人惊讶的是,结合ViT-L [8]骨干网络,我们在COCO测试集上达到66.0% AP,在LVIS验证集上达到67.9% AP,以更小的模型规模建立了新的检测器标杆。

2. Related Works

2. 相关工作

One-to-many label assignment. For one-to-many label assignment in object detection, multiple box candidates can be assigned to the same ground-truth box as positive samples in the training phase. In classic anchor-based detectors, such as Faster-RCNN [27] and RetinaNet [21], the sample selection is guided by the predefined IoU threshold and matching IoU between anchors and annotated boxes. The anchor-free FCOS [32] leverages the center priors and assigns spatial locations near the center of each bounding box as positives. Moreover, the adaptive mechanism is incorporated into one-to-many label assignments to overcome the limitation of fixed label assignments. ATSS [41] performs adaptive anchor selection by the statistical dynamic IoU values of top $k$ closest anchors. PAA [17] adaptively separates anchors into positive and negative samples in a probabilistic manner. In this paper, we propose a collaborative hybrid assignment scheme to improve encoder representations via auxiliary heads with one-to-many label assignments.

一对多标签分配。在目标检测的一对多标签分配中,训练阶段可以将多个候选框分配给同一个真实框作为正样本。经典基于锚点的检测器(如Faster-RCNN [27] 和 RetinaNet [21])通过预定义的IoU阈值以及锚点与标注框之间的匹配IoU来指导样本选择。无锚点检测器FCOS [32] 利用中心先验,将每个边界框中心附近的空间位置分配为正样本。此外,自适应机制被引入一对多标签分配以克服固定分配方式的局限性:ATSS [41] 通过统计前$k$个最近锚点的动态IoU值进行自适应锚点选择;PAA [17] 以概率方式自适应地将锚点划分为正负样本。本文提出协作式混合分配方案,通过配备一对多标签分配的辅助头来提升编码器表征能力。

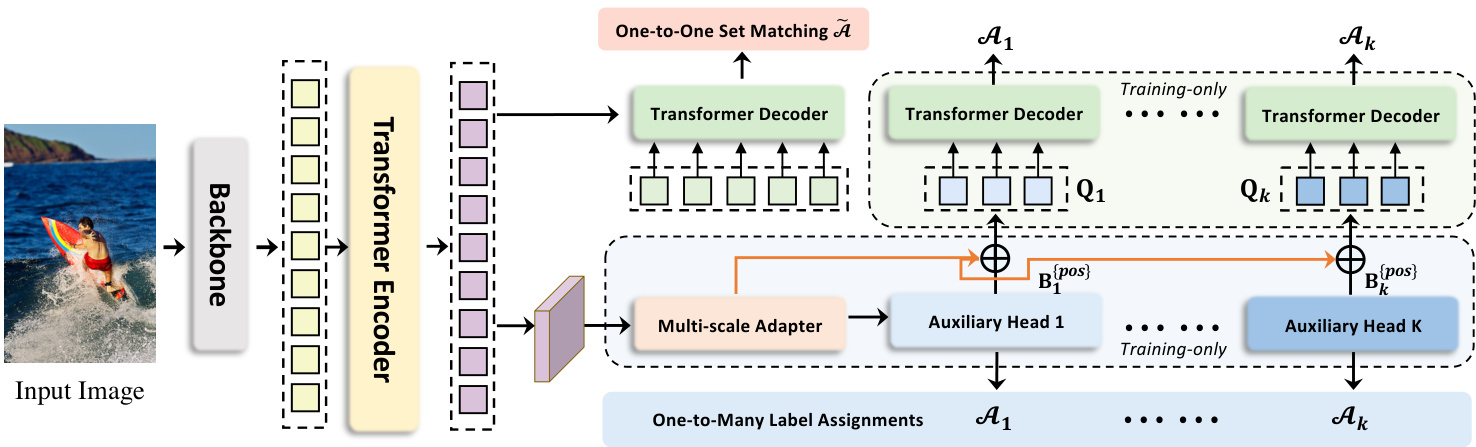

Figure 4. Framework of our Collaborative Hybrid Assignment Training. The auxiliary branches are discarded during evaluation.

图 4: 我们的协作混合分配训练框架。评估阶段会丢弃辅助分支。

One-to-one set matching. The pioneering transformer-based detector, DETR [1], incorporates the one-to-one set matching scheme into object detection and performs fully end-to-end object detection. The one-to-one set matching strategy first calculates the global matching cost via Hungarian matching and assigns only one positive sample, the one with the minimum matching cost, to each ground-truth box. DN-DETR [18] shows that slow convergence results from the instability of one-to-one set matching, and introduces denoising training to address this issue. DINO [39] inherits the advanced query formulation of DAB-DETR [23] and incorporates an improved contrastive denoising technique to achieve state-of-the-art performance. Group-DETR [5] constructs group-wise one-to-many label assignment to exploit multiple positive object queries, which is similar to the hybrid matching scheme in $\mathcal{H}$-DETR [16]. In contrast with the above follow-up works, we present a new perspective of collaborative optimization for one-to-one set matching.

一对一集合匹配。开创性的基于Transformer的检测器DETR [1]将一对一集合匹配方案引入目标检测任务,实现了完全端到端的目标检测。该策略首先通过匈牙利匹配计算全局匹配代价,并为每个真实标注框仅分配一个具有最小匹配代价的正样本。DNDETR [18]揭示了因一对一集合匹配不稳定性导致的收敛缓慢问题,进而引入去噪训练来解决这一缺陷。DINO [39]继承了DAB-DETR [23]的高级查询构建方法,结合改进的对比去噪技术实现了最先进的性能。Group-DETR [5]构建了分组式一对多标签分配机制以利用多个正样本对象查询,其思路类似于$\mathcal{H}$-DETR [16]中的混合匹配方案。与上述后续研究不同,我们提出了一种针对一对一集合匹配的协同优化新视角。
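To make the one-to-one assignment concrete, here is a minimal sketch that finds the minimum-cost matching by exhaustive search. It is an illustration only: the cost values are invented, `one_to_one_match` is a hypothetical helper, and practical detectors use the Hungarian algorithm rather than enumerating permutations.

```python
from itertools import permutations


def one_to_one_match(cost):
    """Return (assignment, total), where assignment maps gt index -> query index.

    cost[q][g] is the matching cost between query q and ground-truth box g.
    Exhaustive search over permutations; only viable for tiny inputs.
    """
    num_queries = len(cost)
    num_gt = len(cost[0])
    best, best_total = None, float("inf")
    # Try every ordered choice of num_gt distinct queries.
    for queries in permutations(range(num_queries), num_gt):
        total = sum(cost[q][g] for g, q in enumerate(queries))
        if total < best_total:
            best, best_total = queries, total
    return dict(enumerate(best)), best_total


# Hypothetical costs for 4 queries (rows) and 2 ground-truth boxes (columns).
cost = [
    [0.9, 0.2],
    [0.1, 0.8],
    [0.5, 0.6],
    [0.4, 0.3],
]
assignment, total = one_to_one_match(cost)
positives = set(assignment.values())
negatives = set(range(len(cost))) - positives
```

With two ground-truth boxes, only two of the four queries become positives and the rest are treated as negatives, which is the sparsity the paper targets.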

3. Method

3. 方法

3.1. Overview

3.1. 概述

Following the standard DETR protocol, the input image is fed into the backbone and encoder to generate latent features. Multiple predefined object queries then interact with these features in the decoder via cross-attention. We introduce $\mathcal{C}\mathrm{o}$-DETR to improve the feature learning in the encoder and the attention learning in the decoder via the collaborative hybrid assignments training scheme and the customized positive queries generation. We describe these modules in detail and give insights into why they work well.

遵循标准DETR协议,输入图像通过主干网络和编码器生成潜在特征。随后多个预定义物体查询(query)在解码器中通过交叉注意力机制与这些特征交互。我们提出的$\mathcal{C}\mathrm{o}$-DETR通过协作式混合分配训练方案和定制化正查询生成机制,改进了编码器的特征学习与解码器的注意力学习。下文将详细阐述这些模块并解析其有效性原理。

3.2. Collaborative Hybrid Assignments Training

3.2. 协作式混合分配训练

To alleviate the sparse supervision on the encoder's output caused by the small number of positive queries in the decoder, we incorporate versatile auxiliary heads with different one-to-many label assignment paradigms, e.g., ATSS and Faster R-CNN. Different label assignments enrich the supervision on the encoder's output, which forces it to be discriminative enough to support the training convergence of these heads. Specifically, given the encoder's latent feature $\mathcal{F}$, we first transform it into the feature pyramid $\{\mathcal{F}_{1},\cdots,\mathcal{F}_{J}\}$ via the multi-scale adapter, where $\mathcal{F}_{j}$ denotes the feature map with a downsampling stride of $2^{2+j}$. Similar to ViTDet [20], the feature pyramid is constructed from a single feature map in the single-scale encoder, while we use bilinear interpolation and $3\times3$ convolution for upsampling. For instance, with the single-scale feature from the encoder, we successively apply downsampling ($3\times3$ convolution with stride 2) or upsampling operations to produce a feature pyramid. As for the multi-scale encoder, we only downsample the coarsest feature in the multi-scale encoder features $\mathcal{F}$ to build the feature pyramid. We define $K$ collaborative heads with corresponding label assignment manners $\mathcal{A}_{k}$. For the $i$-th collaborative head, $\{\mathcal{F}_{1},\cdots,\mathcal{F}_{J}\}$ is sent to it to obtain the predictions $\hat{\mathbf{P}}_{i}$. At the $i$-th head, $\mathcal{A}_{i}$ is used to compute the supervised targets for the positive and negative samples in $\mathbf{P}_{i}$. Denoting $\mathbf{G}$ as the ground-truth set, this procedure can be formulated as:

为缓解解码器中正样本查询较少导致编码器输出监督稀疏的问题,我们引入了采用不同一对多标签分配范式(如ATSS和Faster R-CNN)的多功能辅助头。不同的标签分配方式丰富了编码器输出的监督信号,迫使编码器具备足够判别性以支持这些辅助头的训练收敛。具体而言,给定编码器的潜在特征$\mathcal{F}$,我们首先通过多尺度适配器将其转换为特征金字塔$\{\mathcal{F}_{1},\cdots,\mathcal{F}_{J}\}$,其中$\mathcal{F}_{j}$表示下采样步长为$2^{2+j}$的特征图。与ViTDet [20]类似,该特征金字塔由单尺度编码器中的单一特征图构建,但我们采用双线性插值和$3\times3$卷积进行上采样。例如,对于编码器的单尺度特征,我们连续应用步长为2的$3\times3$下采样卷积或上采样操作来生成特征金字塔。对于多尺度编码器,我们仅对多尺度编码器特征$\mathcal{F}$中最粗糙的特征进行下采样以构建特征金字塔。定义$K$个具有相应标签分配方式$\mathcal{A}_{k}$的协作头,对于第$i$个协作头,将$\{\mathcal{F}_{1},\cdots,\mathcal{F}_{J}\}$输入其中以获得预测结果$\hat{\mathbf{P}}_{i}$。在第$i$个头中,$\mathcal{A}_{i}$用于计算$\mathbf{P}_{i}$中正负样本的监督目标。设$\mathbf{G}$为真实标签集,该过程可表述为:

$$
\mathbf{P}_{i}^{\{pos\}},\mathbf{B}_{i}^{\{pos\}},\mathbf{P}_{i}^{\{neg\}}=\mathcal{A}_{i}(\hat{\mathbf{P}}_{i},\mathbf{G}),
$$

where $\{pos\}$ and $\{neg\}$ denote the sets of pairs ($j$, positive coordinates or negative coordinates in $\mathcal{F}_{j}$) determined by $\mathcal{A}_{i}$, and $j$ is the feature index in $\{\mathcal{F}_{1},\cdots,\mathcal{F}_{J}\}$. $\mathbf{B}_{i}^{\{pos\}}$ is the set of spatial positive coordinates. $\mathbf{P}_{i}^{\{pos\}}$ and $\mathbf{P}_{i}^{\{neg\}}$ are the supervised targets at the corresponding coordinates, including the categories and regressed offsets. The detailed information about each variable is given in Table 1. The loss functions can be defined as:

其中 $\{pos\}$ 和 $\{neg\}$ 表示由 $\mathcal{A}_{i}$ 确定的 ($j$, $\mathcal{F}_{j}$ 中正坐标或负坐标) 的配对集合。$j$ 表示 $\{\mathcal{F}_{1},\cdots,\mathcal{F}_{J}\}$ 中的特征索引。$\mathbf{B}_{i}^{\{pos\}}$ 是空间正坐标的集合。$\mathbf{P}_{i}^{\{pos\}}$ 和 $\mathbf{P}_{i}^{\{neg\}}$ 是对应坐标中的监督目标,包括类别和回归偏移量。具体来说,我们在表1中描述了每个变量的详细信息。损失函数可以定义为:

Table 1. Detailed information of auxiliary heads. The auxiliary heads include Faster-RCNN [27], ATSS [41], RetinaNet [21], and FCOS [32]. If not otherwise specified, we follow the original implementations, e.g., anchor generation.

表 1: 辅助头部的详细信息。辅助头部包括 Faster-RCNN [27]、ATSS [41]、RetinaNet [21] 和 FCOS [32]。除非另有说明,否则我们遵循原始实现,例如锚点生成。

| Head $i$ | Loss $\mathcal{L}_{i}$ | Assignment $\mathcal{A}_{i}$ |
|---|---|---|
| Faster-RCNN [27] | cls: CE loss; reg: GIoU loss | {pos}: IoU(proposal, gt) > 0.5; {neg}: IoU(proposal, gt) < 0.5 |
| ATSS [41] | cls: Focal loss; reg: GIoU, BCE loss | {pos}: IoU(anchor, gt) > (mean+std); {neg}: IoU(anchor, gt) < (mean+std) |
| RetinaNet [21] | cls: Focal loss; reg: GIoU loss | {pos}: IoU(anchor, gt) > 0.5; {neg}: IoU(anchor, gt) < 0.4 |
| FCOS [32] | cls: Focal loss; reg: GIoU, BCE loss | {pos}: points inside gt center area; {neg}: points outside gt center area |
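The assignment rules in Table 1 can be sketched in a few lines. The following is a simplified illustration, not the original implementations: `assign_fixed` applies the RetinaNet-style thresholds from the table (treating IoUs between the two thresholds as ignored, which is how RetinaNet is usually described), and `assign_adaptive` applies only the mean+std rule of the ATSS row, omitting the top-$k$ candidate selection and using the population standard deviation as an assumption. All function names and boxes are hypothetical.

```python
from statistics import mean, pstdev


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_fixed(anchors, gt, pos_thr=0.5, neg_thr=0.4):
    """Fixed thresholds: positive above pos_thr, negative below neg_thr, else ignored."""
    labels = []
    for anchor in anchors:
        v = iou(anchor, gt)
        labels.append("pos" if v > pos_thr else "neg" if v < neg_thr else "ignore")
    return labels


def assign_adaptive(anchors, gt):
    """Adaptive threshold: mean + std of the candidate IoUs (simplified ATSS rule)."""
    ious = [iou(anchor, gt) for anchor in anchors]
    thr = mean(ious) + pstdev(ious)
    return ["pos" if v > thr else "neg" for v in ious]


# One ground-truth box and five hypothetical anchors.
gt = (0.0, 0.0, 10.0, 10.0)
anchors = [
    (0.0, 0.0, 10.0, 10.0),    # IoU 1.0
    (0.0, 0.0, 10.0, 8.0),     # IoU 0.8
    (5.0, 0.0, 15.0, 10.0),    # IoU 1/3
    (0.0, 0.0, 10.0, 4.5),     # IoU 0.45
    (20.0, 20.0, 30.0, 30.0),  # IoU 0.0
]
fixed_labels = assign_fixed(anchors, gt)
adaptive_labels = assign_adaptive(anchors, gt)
```

Unlike one-to-one matching, both rules can mark several anchors as positive for the same ground-truth box, so the number of positives depends on the anchor layout rather than being fixed at one.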

$$
\mathcal{L}_{i}^{enc}=\mathcal{L}_{i}(\hat{\mathbf{P}}_{i}^{\{pos\}},\mathbf{P}_{i}^{\{pos\}})+\mathcal{L}_{i}(\hat{\mathbf{P}}_{i}^{\{neg\}},\mathbf{P}_{i}^{\{neg\}}),
$$

Note that the regression loss is discarded for negative samples. The training objective of the optimization for $K$ auxiliary heads is formulated as follows:

请注意,对于负样本会舍弃回归损失。针对 $K$ 个辅助头的优化训练目标定义如下:

$$
\mathcal{L}^{enc}=\sum_{i=1}^{K}\mathcal{L}_{i}^{enc}.
$$

3.3. Customized Positive Queries Generation

3.3. 定制化正向查询生成

In the one-to-one set matching paradigm, each ground-truth box is assigned to only one specific query as the supervised target. Too few positive queries lead to inefficient cross-attention learning in the transformer decoder, as shown in Figure 2. To alleviate this, we elaborately generate sufficient customized positive queries according to the label assignment $\mathcal{A}_{i}$ in each auxiliary head. Specifically, given the positive coordinates set $\mathbf{B}_{i}^{\{pos\}}\in\mathbb{R}^{M_{i}\times4}$ in the $i$-th auxiliary head, where $M_{i}$ is the number of positive samples, the extra customized positive queries $\bar{\mathbf{Q}}_{i}\in\mathbb{R}^{M_{i}\times C}$ can be generated by:

在一对一集合匹配范式中,每个真实框只会被分配给一个特定查询作为监督目标。如图2所示,过少的正样本查询会导致Transformer解码器中的交叉注意力学习效率低下。为缓解这一问题,我们根据每个辅助头中的标签分配$\mathcal{A}_{i}$精心生成足量的定制化正样本查询。具体而言,给定第$i$个辅助头中的正样本坐标集$\mathbf{B}_{i}^{\{pos\}}\in\mathbb{R}^{M_{i}\times4}$(其中$M_{i}$为正样本数量),可通过以下方式生成额外的定制化正样本查询$\bar{\mathbf{Q}}_{i}\in\mathbb{R}^{M_{i}\times C}$:

$$
\mathbf{Q}_{i}=\operatorname{Linear}(\mathrm{PE}(\mathbf{B}_{i}^{\{pos\}}))+\operatorname{Linear}(\mathrm{E}(\{\mathcal{F}_{*}\},\{pos\})).
$$

where $\mathrm{PE}(\cdot)$ stands for positional encodings, and we select the corresponding features from $\mathrm{E}(\cdot)$ according to the index pair ($j$, positive coordinates or negative coordinates in $\mathcal{F}_{j}$).

其中 $\mathrm{PE}(\cdot)$ 表示位置编码 (positional encodings),我们根据索引对 ($j$, $\mathcal{F}_{j}$ 中正坐标或负坐标) 从 $\mathrm{E}(\cdot)$ 中选择对应的特征。
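The query construction above can be sketched as follows. This is a toy illustration under several assumptions: boxes are normalized `(cx, cy, w, h)` tuples, `sine_encode` is one common sinusoidal encoding rather than the exact one used by the authors, the linear layers are randomly initialized instead of learned, and `pos_feats` stands for the features already gathered at the positive coordinates, i.e. the output of $\mathrm{E}(\cdot)$. All names are hypothetical.

```python
import math
import random


def sine_encode(box, num_feats=4, temperature=10000.0):
    """Sinusoidal encoding of a normalized (cx, cy, w, h) box -> 4 * num_feats values."""
    out = []
    for v in box:
        for k in range(num_feats // 2):
            freq = temperature ** (2 * k / num_feats)
            angle = 2 * math.pi * v / freq
            out.extend([math.sin(angle), math.cos(angle)])
    return out


def linear(x, weight, bias):
    """y = W x + b for one vector x; weight has shape (out_dim, in_dim)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def random_linear(in_dim, out_dim, rng):
    """Randomly initialized (weight, bias) pair standing in for a learned layer."""
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    return weight, [0.0] * out_dim


def positive_queries(pos_boxes, pos_feats, pos_proj, feat_proj):
    """Q_i = Linear(PE(B_i^pos)) + Linear(E(F, pos)), one query per positive sample."""
    queries = []
    for box, feat in zip(pos_boxes, pos_feats):
        positional = linear(sine_encode(box), *pos_proj)
        content = linear(feat, *feat_proj)
        queries.append([p + c for p, c in zip(positional, content)])
    return queries


rng = random.Random(0)
C = 8  # query / feature dimension in this toy example
pos_boxes = [(0.5, 0.5, 0.2, 0.3), (0.25, 0.75, 0.1, 0.1), (0.8, 0.4, 0.3, 0.6)]
pos_feats = [[rng.gauss(0.0, 1.0) for _ in range(C)] for _ in pos_boxes]
queries = positive_queries(
    pos_boxes, pos_feats, random_linear(16, C, rng), random_linear(C, C, rng)
)
```

Each of the $M_{i}$ positive coordinates yields exactly one $C$-dimensional query, so the resulting group has shape $M_{i}\times C$ as stated in the text.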

As a result, there are $K+1$ groups of queries during training, which contribute to a single one-to-one set matching branch and $K$ branches with one-to-many label assignments. The auxiliary one-to-many label assignment branches share the same parameters with the $L$ decoder layers in the original main branch. All the queries in the auxiliary branches are regarded as positive queries, so the matching process is discarded. To be specific, the loss of the $l$-th decoder layer in the $i$-th auxiliary branch can be formulated as:

因此,在训练过程中,有 $K+1$ 组查询分别贡献于一个一对一集合匹配分支和 $K$ 个一对多标签分配分支。辅助的一对多标签分配分支与原始主分支中的 $L$ 个解码器层共享相同参数。辅助分支中的所有查询均被视为正样本查询,因此省略了匹配过程。具体而言,第 $i$ 个辅助分支中第 $l$ 个解码器层的损失可表示为:

$$
\mathcal{L}_{i,l}^{dec}=\widetilde{\mathcal{L}}(\widetilde{\mathbf{P}}_{i,l},\mathbf{P}_{i}^{\{pos\}}).
$$

$\widetilde{\mathbf{P}}_{i,l}$ refers to the output predictions of the $l$-th decoder layer in the $i$-th auxiliary branch. Finally, the training objective for $\mathcal{C}\mathrm{o}$-DETR is:

$\widetilde{\mathbf{P}}_{i,l}$ 表示第 $i$ 个辅助分支中第 $l$ 个解码器层的输出预测。最终,$\mathcal{C}\mathrm{o}$-DETR 的训练目标为:

$$
\mathcal{L}^{global}=\sum_{l=1}^{L}(\widetilde{\mathcal{L}}_{l}^{dec}+\lambda_{1}\sum_{i=1}^{K}\mathcal{L}_{i,l}^{dec}+\lambda_{2}\mathcal{L}^{enc}),
$$

where $\widetilde{\mathcal{L}}_{l}^{dec}$ stands for the loss in the original one-to-one set matching branch [1], and $\lambda_{1}$ and $\lambda_{2}$ are the coefficients balancing the losses.

其中 $\widetilde{\mathcal{L}}_{l}^{dec}$ 表示原始一对一集合匹配分支 [1] 中的损失,$\lambda_{1}$ 和 $\lambda_{2}$ 是用于平衡损失的系数。
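As a small worked example of the objective, the sketch below evaluates $\mathcal{L}^{global}$ literally as written, so the encoder term is added once per decoder layer. The loss values are invented, `global_loss` is a hypothetical helper, and the default coefficients follow the $\{1.0, 2.0\}$ setting reported in the implementation details.

```python
def global_loss(main_dec_losses, aux_dec_losses, enc_loss, lambda1=1.0, lambda2=2.0):
    """Sum over decoder layers of: main loss + lambda1 * aux losses + lambda2 * enc loss.

    main_dec_losses[l]   : one-to-one matching loss at decoder layer l
    aux_dec_losses[i][l] : loss of auxiliary branch i at decoder layer l
    enc_loss             : summed loss of the K auxiliary heads on the encoder output
    """
    total = 0.0
    for layer, main in enumerate(main_dec_losses):
        aux = sum(branch[layer] for branch in aux_dec_losses)
        total += main + lambda1 * aux + lambda2 * enc_loss
    return total


# Toy values for L = 2 decoder layers and K = 2 auxiliary heads.
per_head_enc_losses = [0.5, 0.25]
enc_loss = sum(per_head_enc_losses)   # L^enc = sum_i L_i^enc
main_dec_losses = [1.0, 0.5]
aux_dec_losses = [[0.25, 0.25], [0.5, 0.25]]
loss = global_loss(main_dec_losses, aux_dec_losses, enc_loss)
```

Here layer 1 contributes $1.0 + 0.75 + 1.5 = 3.25$ and layer 2 contributes $0.5 + 0.5 + 1.5 = 2.5$, giving a total of $5.75$.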

3.4. Why Co-DETR works

3.4. 为什么Co-DETR有效

$\mathcal{C}\mathrm{o}$-DETR leads to evident improvements for DETR-based detectors. In the following, we investigate its effectiveness qualitatively and quantitatively. We conduct a detailed analysis based on Deformable-DETR with a ResNet-50 [15] backbone using the 36-epoch setting.

$\mathcal{C}\mathrm{o}$ -DETR显著提升了基于DETR的检测器性能。下文我们将从定性和定量两个维度探究其有效性。实验采用ResNet50 [15] 主干网络和36训练周期的Deformable-DETR框架进行详细分析。

Enrich the encoder's supervisions. Intuitively, too few positive queries lead to sparse supervision, as only one query is supervised by the regression loss for each ground-truth box. The positive samples in one-to-many label assignment manners receive more localization supervision, which helps enhance the latent feature learning. To further explore how sparse supervision impedes model training, we investigate the latent features produced by the encoder in detail. We introduce the IoF-IoB curve to quantify the discriminability score of the encoder's output. Specifically, given the latent feature $\mathcal{F}$ of the encoder, inspired by the feature visualization in Figure 3, we compute the IoF (intersection over foreground) and IoB (intersection over background). Given the encoder's feature $\mathcal{F}_{j}\in\mathbb{R}^{C\times H_{j}\times W_{j}}$ at level $j$, we first calculate the $l^{2}$-norm $\widehat{\mathcal{F}}_{j}\in\mathbb{R}^{1\times H_{j}\times W_{j}}$ and resize it to the image size $H\times W$. The discriminability score $\mathcal{D}(\mathcal{F})$ is computed by averaging the scores from all levels:

增强编码器的监督信号。直观来看,过少的正样本查询会导致监督稀疏,因为每个真实标注仅通过回归损失监督一个查询。在一对多标签分配机制中,正样本能获得更多定位监督,从而促进潜在特征学习。为深入探究稀疏监督如何阻碍模型训练,我们详细分析了编码器生成的潜在特征。通过引入IoF-IoB曲线来量化编码器输出的判别力得分:给定编码器的潜在特征$\mathcal{F}$,受图3特征可视化的启发,我们计算前景交并比(IoF)与背景交并比(IoB)。对于第$j$层编码器特征$\mathcal{F}_{j}\in\mathbb{R}^{C\times H_{j}\times W_{j}}$,先计算其$l^{2}$范数$\widehat{\mathcal{F}}_{j}\in\mathbb{R}^{1\times H_{j}\times W_{j}}$并缩放到图像尺寸$H\times W$。判别力得分$\mathcal{D}(\mathcal{F})$通过对所有层级得分取平均得到:

$$
\mathcal{D}(\mathcal{F})=\frac{1}{J}\sum_{j=1}^{J}\frac{\widehat{\mathcal{F}}_{j}}{\max(\widehat{\mathcal{F}}_{j})},
$$

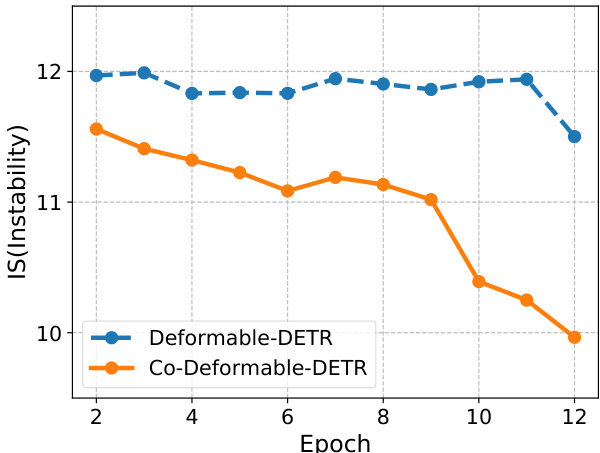

Figure 5. The instability (IS) [18] of Deformable-DETR and $\mathcal{C}\mathrm{o}$-Deformable-DETR on the COCO dataset. These detectors are trained for 12 epochs with ResNet-50 backbones.

图 5: Deformable-DETR 与 $\mathcal{C}\mathrm{o}$-Deformable-DETR 在 COCO 数据集上的不稳定性 (IS) [18]。这些检测器均采用 ResNet-50 骨干网络训练 12 个 epoch。

where the resize operation is omitted. We visualize the discriminability scores of ATSS, Deformable-DETR, and our $\mathcal{C}\mathrm{o}$-Deformable-DETR in Figure 3. Compared with Deformable-DETR, both ATSS and $\mathcal{C}\mathrm{o}$-Deformable-DETR have a stronger ability to distinguish the areas of key objects, while Deformable-DETR is largely disturbed by the background. Consequently, we define the indicators for foreground and background as $\mathbb{1}(\mathcal{D}(\mathcal{F})>S)\in\mathbb{R}^{H\times W}$ and $\mathbb{1}(\mathcal{D}(\mathcal{F})<S)\in\mathbb{R}^{H\times W}$, respectively. $S$ is a predefined score threshold, and $\mathbb{1}(x)$ is 1 if $x$ is true and 0 otherwise. As for the foreground mask $\mathcal{M}^{fg}\in\mathbb{R}^{H\times W}$, the element $\mathcal{M}_{h,w}^{fg}$ is 1 if the point $(h,w)$ is inside the foreground and 0 otherwise. The area of intersection over foreground (IoF) $\mathcal{T}^{fg}$ can be computed as:

其中省略了调整大小的操作。我们在图3中可视化了ATSS、Deformable-DETR以及我们的$\mathcal{C}\mathrm{o}$-Deformable-DETR的判别力得分。与Deformable-DETR相比,ATSS和$\mathcal{C}\mathrm{o}$-Deformable-DETR都具备更强的区分关键目标区域的能力,而Deformable-DETR几乎被背景干扰。因此,我们分别将前景和背景的指示器定义为$\mathbb{1}(\mathcal{D}(\mathcal{F})>S)\in\mathbb{R}^{H\times W}$和$\mathbb{1}(\mathcal{D}(\mathcal{F})<S)\in\mathbb{R}^{H\times W}$。$S$是预定义的分数阈值,$\mathbb{1}(x)$在$x$为真时为1,否则为0。至于前景掩码$\mathcal{M}^{fg}\in\mathbb{R}^{H\times W}$,若点$(h,w)$位于前景内,则元素$\mathcal{M}_{h,w}^{fg}$为1,否则为0。前景交并比(IoF) $\mathcal{T}^{fg}$可计算为:

$$
\mathcal{T}^{fg}=\frac{\sum_{h=1}^{H}\sum_{w=1}^{W}(\mathbb{1}(\mathcal{D}(\mathcal{F}_{h,w})>S)\cdot\mathcal{M}_{h,w}^{fg})}{\sum_{h=1}^{H}\sum_{w=1}^{W}\mathcal{M}_{h,w}^{fg}}.
$$

Concretely, we compute the area of intersection over background (IoB) in a similar way and plot the IoF-IoB curve by varying $S$ in Figure 2. ATSS and $\mathcal{C}\mathrm{o}$-Deformable-DETR obtain higher IoF values than both Deformable-DETR and Group-DETR at the same IoB values, which demonstrates that the encoder representations benefit from the one-to-many label assignment.

具体来说,我们以类似方式计算背景区域交并比(IoB),并通过调整$S$在图2中绘制IoF和IoB曲线。显然,在相同IoB值下,ATSS和$\mathcal{C}\mathrm{o}$-Deformable-DETR获得的IoF值均高于Deformable-DETR和Group-DETR,这表明编码器表征受益于一对多标签分配策略。
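The discriminability score and the IoF-IoB pairs can be sketched with plain Python lists. The code below assumes that every feature level has already been resized to the same $H\times W$ grid, that each level has a non-zero maximum norm, and that IoB mirrors IoF using the background indicator $\mathbb{1}(\mathcal{D}(\mathcal{F})<S)$ over the background mask; the last point is our reading of "in a similar way", not something the text spells out. Function names and the toy feature values are hypothetical.

```python
import math


def l2_norm_map(feat):
    """feat[c][h][w] -> map[h][w] holding the channel-wise L2 norm at each location."""
    channels, height, width = len(feat), len(feat[0]), len(feat[0][0])
    return [
        [math.sqrt(sum(feat[c][h][w] ** 2 for c in range(channels))) for w in range(width)]
        for h in range(height)
    ]


def discriminability(levels):
    """Average of max-normalized norm maps over all levels (resize step omitted)."""
    maps = []
    for feat in levels:
        norm = l2_norm_map(feat)
        peak = max(max(row) for row in norm)  # assumed to be non-zero
        maps.append([[v / peak for v in row] for row in norm])
    height, width = len(maps[0]), len(maps[0][0])
    return [
        [sum(m[h][w] for m in maps) / len(maps) for w in range(width)]
        for h in range(height)
    ]


def iof_iob(score, fg_mask, thr):
    """Return (IoF, IoB) for one threshold; fg_mask[h][w] is 1 inside the foreground."""
    fg_hit = fg_total = bg_hit = bg_total = 0
    for score_row, mask_row in zip(score, fg_mask):
        for s, m in zip(score_row, mask_row):
            if m:
                fg_total += 1
                fg_hit += s > thr   # foreground pixel scored above the threshold
            else:
                bg_total += 1
                bg_hit += s < thr   # background pixel scored below the threshold
    return fg_hit / fg_total, bg_hit / bg_total


# One toy level with C = 2 channels on a 2 x 2 grid.
feat = [
    [[3.0, 0.0], [0.0, 0.0]],
    [[4.0, 0.0], [1.0, 1.0]],
]
score = discriminability([feat])
fg_mask = [[1, 0], [0, 1]]
iof, iob = iof_iob(score, fg_mask, thr=0.5)
curve = [iof_iob(score, fg_mask, t / 10) for t in range(1, 10)]
```

Sweeping the threshold, as in the last line, produces the list of (IoF, IoB) pairs from which a curve like the one in Figure 2 would be drawn.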

Improve the cross-attention learning by reducing the instability of Hungarian matching. Hungarian matching is the core scheme in one-to-one set matching. Cross-attention is an important operation that helps the positive queries encode abundant object information, and it requires sufficient training to do so. We observe that Hungarian matching introduces uncontrollable instability, since the ground-truth box assigned to a specific positive query in the same image changes during the training process. Following [18], we present the comparison of instability in Figure 5, where we find that our approach contributes to a more stable matching process. Furthermore, to quantify how well cross-attention is being optimized, we also calculate the IoF-IoB curve for the attention score. Similar to the feature discriminability score computation, we set different thresholds on the attention score to get multiple IoF-IoB pairs. The comparisons between Deformable-DETR, Group-DETR, and $\mathcal{C}\mathrm{o}$-Deformable-DETR are shown in Figure 2. We find that the IoF-IoB curves of DETRs with more positive queries are generally above that of Deformable-DETR, which is consistent with our motivation.

通过降低匈牙利匹配的不稳定性来改进交叉注意力学习。匈牙利匹配是一对一集合匹配的核心方案。交叉注意力是帮助正查询(positive queries)编码丰富目标信息的重要操作,需要充分训练才能实现。我们观察到匈牙利匹配会引入不可控的不稳定性,因为在训练过程中,同一图像中分配给特定正查询的真实标签(ground-truth)会不断变化。参照[18],我们在图5中展示了不稳定性对比,发现我们的方法能实现更稳定的匹配过程。此外,为量化交叉注意力的优化效果,我们还计算了注意力分数的IoF-IoB曲线。与特征可区分性得分的计算类似,我们为注意力分数设置不同阈值以获得多组IoF-IoB值。可变形DETR(Deformable-DETR)、分组DETR(Group-DETR)和协同可变形DETR(CoDeformable-DETR)的对比结果如图2所示。我们发现具有更多正查询的DETR模型,其IoF-IoB曲线通常位于可变形DETR上方,这与我们的设计动机一致。
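The instability measure can be illustrated with a short sketch. It follows our understanding of the metric in [18], namely the number of queries whose matched ground-truth index differs between two consecutive epochs for the same image; the exact definition should be checked against that reference. The helper name and the match lists are hypothetical.

```python
def instability(prev_match, curr_match):
    """Count queries whose matched ground-truth index changed between two epochs.

    Each list holds, per query, the index of the matched ground-truth box,
    or -1 when the query is matched to "no object".
    """
    return sum(p != c for p, c in zip(prev_match, curr_match))


# Matches for 4 queries on the same image at two consecutive epochs.
epoch_1 = [0, -1, 1, -1]
epoch_2 = [-1, 0, 1, -1]   # ground-truth 0 moved from query 0 to query 1
changes = instability(epoch_1, epoch_2)
```

In this toy case a single ground-truth box switching queries changes two entries, which is why unstable matching gives each query an inconsistent training target.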

3.5. Comparison with other methods

3.5. 与其他方法的对比

Differences between our method and other counterparts. Group-DETR, $\mathcal{H}$-DETR, and SQR [2] perform one-to-many assignments by one-to-one matching with duplicate groups and repeated ground-truth boxes. $\mathcal{C}\mathrm{o}$-DETR explicitly assigns multiple spatial coordinates as positives for each ground-truth box. Accordingly, these dense supervision signals are directly applied to the latent feature map to make it more discriminative. By contrast, Group-DETR, $\mathcal{H}$-DETR, and SQR lack this mechanism. Although more positive queries are introduced in these counterparts, the one-to-many assignments implemented by Hungarian matching still suffer from the instability issues of one-to-one matching. Our method benefits from the stability of off-the-shelf one-to-many assignments and inherits their specific matching manner between positive queries and ground-truth boxes. Group-DETR and $\mathcal{H}$-DETR fail to reveal the complementarities between one-to-one matching and traditional one-to-many assignment. To the best of our knowledge, we are the first to give a quantitative and qualitative analysis of detectors with traditional one-to-many assignment and one-to-one matching. This helps us better understand their differences and complementarities, so that we can naturally improve DETR's learning ability by leveraging off-the-shelf one-to-many assignment designs without requiring additional specialized one-to-many design experience.

我们的方法与其他方法的差异。Group-DETR、$\mathcal{H}$-DETR和SQR [2]通过单组重复和真实框复现的一对一匹配实现一对多分配。$\mathcal{C}\mathrm{o}$-DETR显式地为每个真实框分配多个空间坐标作为正样本,这些密集监督信号直接作用于潜在特征图以增强其判别性。相比之下,Group-DETR、$\mathcal{H}$-DETR和SQR缺乏这一机制。尽管这些方法引入了更多正样本查询(query),但基于匈牙利匹配实现的一对多分配仍受限于一对一匹配的不稳定性。我们的方法受益于现成一对多分配的稳定性,并继承了正样本查询与真实框间的特定匹配方式。Group-DETR和$\mathcal{H}$-DETR未能揭示一对一匹配与传统一对多分配的互补性。据我们所知,我们首次对传统一对多分配与一对一匹配的检测器进行了定量与定性分析,这有助于理解二者的差异与互补关系,从而无需额外专业设计经验即可自然提升DETR的学习能力。

No negative queries are introduced in the decoder. Duplicate object queries inevitably bring large amounts of negative queries for the decoder and a significant increase in GPU memory. However, our method only processes the positive coordinates in the decoder, thus consuming less memory as shown in Table 7.

解码器中没有引入负查询。重复的对象查询不可避免地会给解码器带来大量负查询,并显著增加GPU内存占用。然而,我们的方法仅处理解码器中的正坐标,因此内存消耗更低,如表7所示。

4. Experiments

4. 实验

4.1. Setup

4.1. 配置

Datasets and Evaluation Metrics. Our experiments are conducted on the MS COCO 2017 dataset [22] and LVIS v1.0 dataset [12]. The COCO dataset consists of 115K labeled images for training and 5K images for validation. We report the detection results by default on the val subset. The results of our largest model evaluated on the test-dev (20K images) are also reported. LVIS v1.0 is a large-scale and long-tail dataset with 1203 categories for large vocabulary instance segmentation. To verify the scalability of $\mathcal{C}\mathrm{o}$ -DETR, we further apply it to a large-scale object detection benchmark, namely Objects365 [30]. There are 1.7M labeled images used for training and 80K images for validation in the Objects365 dataset. All results follow the standard mean Average Precision(AP) under IoU thresholds ranging from 0.5 to 0.95 at different object scales.

数据集与评估指标。我们在MS COCO 2017数据集[22]和LVIS v1.0数据集[12]上进行实验。COCO数据集包含11.5万张训练图像和5000张验证图像,默认在验证集上报告检测结果,同时汇报最大模型在test-dev子集(2万张图像)上的评估结果。LVIS v1.0是一个包含1203个类别的大规模长尾分布数据集,用于大词汇量实例分割任务。为验证$\mathcal{C}\mathrm{o}$-DETR的扩展性,我们将其应用于Objects365[30]大规模目标检测基准,该数据集包含170万训练图像和8万验证图像。所有结果均采用标准平均精度均值(mAP)指标,在0.5至0.95交并比(IoU)阈值范围内评估不同尺度目标的性能。
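The metric described above can be illustrated with a small sketch. It assumes the usual COCO convention of ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, and it simplifies the protocol considerably: a single image, a single class, all-point interpolation of the precision-recall curve instead of COCO's fixed recall grid, and no per-scale breakdown. The function names are hypothetical; reported numbers should come from the official evaluation tools.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, thr):
    """AP at one IoU threshold. dets: list of (score, box); gts: list of boxes."""
    if not gts:
        return 0.0
    ranked = sorted(dets, key=lambda d: d[0], reverse=True)
    matched, flags = set(), []
    for _, box in ranked:
        # Greedily match each detection to the best still-unmatched ground truth.
        best_g, best_v = None, thr
        for g, gt in enumerate(gts):
            v = iou(box, gt)
            if g not in matched and v >= best_v:
                best_g, best_v = g, v
        if best_g is not None:
            matched.add(best_g)
        flags.append(best_g is not None)
    precisions, recalls, tp = [], [], 0
    for rank, flag in enumerate(flags, start=1):
        tp += flag
        precisions.append(tp / rank)
        recalls.append(tp / len(gts))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def coco_style_ap(dets, gts):
    """Mean of AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [(50 + 5 * i) / 100 for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)


gts = [(0, 0, 10, 10)]
dets = [(0.9, (0, 0, 10, 8))]    # IoU with the ground truth is 0.8
print(coco_style_ap(dets, gts))  # 0.7
```

The single detection counts as a true positive at the seven thresholds up to 0.80 and as a false positive at the three stricter ones, so the averaged AP is 0.7. This shows why the metric rewards tight localization and not only detection.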

| Method | K | #epochs | AP |
|---|---|---|---|
| Conditional DETR-C5 [26] | 0 | 36 | 39.4 |
| Conditional DETR-C5 [26] | 1 | 36 | 41.5 (+2.1) |
| Conditional DETR-C5 [26] | 2 | 36 | 41.8 (+2.4) |
| DAB-DETR-C5 [23] | 0 | 36 | 41.2 |
| DAB-DETR-C5 [23] | 1 | 36 | 43.1 (+1.9) |
| DAB-DETR-C5 [23] | 2 | 36 | 43.5 (+2.3) |
| Deformable-DETR [43] | 0 | 12 | 37.1 |
| Deformable-DETR [43] | 1 | 12 | 42.3 (+5.2) |
| Deformable-DETR [43] | 2 | 12 | 42.9 (+5.8) |
| Deformable-DETR [43] | 0 | 36 | 43.3 |
| Deformable-DETR [43] | 1 | 36 | 46.8 (+3.5) |
| Deformable-DETR [43] | 2 | 36 | 46.5 (+3.2) |

Table 2. Results of plain baselines on COCO val.

| 方法 | K | 训练轮数 | AP |
|---|---|---|---|
| Conditional DETR-C5 [26] | 0 | 36 | 39.4 |
| Conditional DETR-C5 [26] | 1 | 36 | 41.5 (+2.1) |
| Conditional DETR-C5 [26] | 2 | 36 | 41.8 (+2.4) |
| DAB-DETR-C5 [23] | 0 | 36 | 41.2 |
| DAB-DETR-C5 [23] | 1 | 36 | 43.1 (+1.9) |
| DAB-DETR-C5 [23] | 2 | 36 | 43.5 (+2.3) |
| Deformable-DETR [43] | 0 | 12 | 37.1 |
| Deformable-DETR [43] | 1 | 12 | 42.3 (+5.2) |
| Deformable-DETR [43] | 2 | 12 | 42.9 (+5.8) |
| Deformable-DETR [43] | 0 | 36 | 43.3 |
| Deformable-DETR [43] | 1 | 36 | 46.8 (+3.5) |
| Deformable-DETR [43] | 2 | 36 | 46.5 (+3.2) |

Implementation Details. We incorporate our $\mathcal{C}\mathrm{o}$-DETR into the current DETR-like pipelines and keep the training setting consistent with the baselines. We adopt ATSS and Faster-RCNN as the auxiliary heads for $K=2$ and only keep ATSS for $K=1$. More details about our auxiliary heads can be found in the supplementary materials. We set the number of learnable object queries to 300 and set $\{\lambda_{1},\lambda_{2}\}$ to $\lbrace1.0,2.0\rbrace$ by default. For $\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR$^{++}$, we use large-scale jitter with copy-paste [10].

实现细节。我们将$\mathcal{C}\mathrm{o}$-DETR集成到当前类DETR流程中,并保持与基线一致的训练设置。对于$K=2$的情况采用ATSS和Faster-RCNN作为辅助头,$K=1$时仅保留ATSS。辅助头的更多细节详见补充材料。我们将可学习目标查询数量设为300,默认设置$\{\lambda_{1},\lambda_{2}\}$为$\lbrace1.0,2.0\rbrace$。对于$\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR$^{++}$,采用带copy-paste[10]的大尺度抖动增强。
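The default settings above can be collected into a small configuration sketch. The values (auxiliary heads for $K=2$ and $K=1$, 300 queries, $\lambda_1=1.0$, $\lambda_2=2.0$) come from the text. The class name, field names, and the way the loss terms are combined are assumptions made for illustration: this section does not restate the loss, so the sketch assumes $\lambda_1$ weights the losses of the customized positive queries and $\lambda_2$ weights the auxiliary head losses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoDetrTrainConfig:
    """Hypothetical container for the defaults quoted in the text."""
    aux_heads: tuple = ("atss", "faster_rcnn")  # K = 2; use ("atss",) for K = 1
    num_queries: int = 300
    lambda1: float = 1.0
    lambda2: float = 2.0


def total_loss(decoder_loss, aux_query_losses, aux_head_losses, cfg):
    """Assumed combination of the training losses.

    decoder_loss: loss of the original one-to-one decoder branch.
    aux_query_losses: one loss per auxiliary head for its customized positive queries.
    aux_head_losses: one loss per auxiliary head for its one-to-many supervision.
    """
    if not (len(aux_query_losses) == len(aux_head_losses) == len(cfg.aux_heads)):
        raise ValueError("expected one loss of each kind per auxiliary head")
    return (decoder_loss
            + cfg.lambda1 * sum(aux_query_losses)
            + cfg.lambda2 * sum(aux_head_losses))


cfg = CoDetrTrainConfig()
print(len(cfg.aux_heads))                                # K
print(total_loss(1.0, [0.5, 0.5], [0.25, 0.25], cfg))    # 1.0 + 1.0*1.0 + 2.0*0.5
```

Since the auxiliary heads are discarded at inference, a configuration like this only affects training; the deployed detector keeps the baseline's parameters and cost.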

4.2. Main Results

4.2. 主要结果

In this section, we empirically analyze the effectiveness and generalization ability of $\mathcal{C}\mathrm{o}$-DETR on different DETR variants in Table 2 and Table 3. All results are reproduced using MMDetection [4]. We first apply the collaborative hybrid assignments training to single-scale DETRs with C5 features. Surprisingly, Conditional-DETR and DAB-DETR obtain $2.4%$ and $2.3%$ AP gains over the baselines, respectively, with a long training schedule. For Deformable-DETR with multi-scale features, the detection performance is significantly boosted from $37.1%$ to $42.9%$ AP. The overall improvements $(+3.2%$ AP) still hold when the training time is increased to 36 epochs. Moreover, we conduct experiments on the improved Deformable-DETR (denoted as Deformable-DETR$^{++}$) following [16], where a $+2.4%$ AP gain is observed. The state-of-the-art DINO-Deformable

在本节中,我们通过实证分析$\mathcal{C}\mathrm{o}$-DETR在不同DETR变体上的有效性和泛化能力,结果如表2和表3所示。所有实验均使用MMDetection [4]复现。我们首先将协同混合分配训练应用于具有C5特征的单尺度DETR。令人惊讶的是,Conditional-DETR和DAB-DETR在长训练周期下分别获得$2.4%$和$2.3%$的AP提升。对于具有多尺度特征的Deformable-DETR,检测性能从$37.1%$显著提升至$42.9%$ AP。当训练周期延长至36轮时,整体改进$(+3.2%$ AP)仍然保持。此外,我们在改进版Deformable-DETR(记为Deformable-DETR$^{++}$)上按照[16]进行实验,观察到$+2.4%$ AP提升。当前最先进的DINO-Deformable

| Method | K | #epochs | AP |
|---|---|---|---|
| Deformable-DETR++ [43] | 0 | 12 | 47.1 |
| Deformable-DETR++ [43] | 1 | 12 | 48.7 (+1.6) |
| Deformable-DETR++ [43] | 2 | 12 | 49.5 (+2.4) |
| DINO-Deformable-DETR [39] | 0 | 12 | 49.4 |
| DINO-Deformable-DETR$^{\dagger}$ [39] | 1 | 12 | 51.0 (+1.6) |
| DINO-Deformable-DETR$^{\dagger}$ [39] | 2 | 12 | 51.2 (+1.8) |
| Deformable-DETR++$^{\ddagger}$ [43] | 0 | 12 | 55.2 |
| Deformable-DETR++$^{\ddagger}$ [43] | 1 | 12 | 56.4 (+1.2) |
| Deformable-DETR++$^{\ddagger}$ [43] | 2 | 12 | 56.9 (+1.7) |
| DINO-Deformable-DETR$^{\ddagger}$ [39] | 0 | 12 | 58.5 |
| DINO-Deformable-DETR$^{\ddagger}$ [39] | 1 | 12 | 59.3 (+0.8) |
| DINO-Deformable-DETR$^{\ddagger}$ [39] | 2 | 12 | 59.5 (+1.0) |

Table 3. Results of strong baselines on COCO val. Methods with $\dagger$ use 5 feature levels. $\ddagger$ refers to Swin-L backbone.

| 方法 | K | 训练轮数 | AP |
|---|---|---|---|
| Deformable-DETR++ [43] | 0 | 12 | 47.1 |
| Deformable-DETR++ [43] | 1 | 12 | 48.7 (+1.6) |
| Deformable-DETR++ [43] | 2 | 12 | 49.5 (+2.4) |
| DINO-Deformable-DETR [39] | 0 | 12 | 49.4 |
| DINO-Deformable-DETR$^{\dagger}$ [39] | 1 | 12 | 51.0 (+1.6) |
| DINO-Deformable-DETR$^{\dagger}$ [39] | 2 | 12 | 51.2 (+1.8) |
| Deformable-DETR++$^{\ddagger}$ [43] | 0 | 12 | 55.2 |
| Deformable-DETR++$^{\ddagger}$ [43] | 1 | 12 | 56.4 (+1.2) |
| Deformable-DETR++$^{\ddagger}$ [43] | 2 | 12 | 56.9 (+1.7) |
| DINO-Deformable-DETR$^{\ddagger}$ [39] | 0 | 12 | 58.5 |
| DINO-Deformable-DETR$^{\ddagger}$ [39] | 1 | 12 | 59.3 (+0.8) |
| DINO-Deformable-DETR$^{\ddagger}$ [39] | 2 | 12 | 59.5 (+1.0) |

表 3: COCO验证集上的强基线结果。带$\dagger$的方法使用5个特征层级,$\ddagger$表示采用Swin-L骨干网络。

DETR equipped with our method can achieve $51.2%$ AP, which is $+1.8%$ AP higher than the competitive baseline.

采用我们方法的DETR可实现51.2% AP (average precision) ,较竞争基线提升+1.8% AP。

We further scale up the backbone capacity from ResNet-50 to Swin-L [25] based on two state-of-the-art baselines. As presented in Table 3, $\mathcal{C}\mathrm{o}$-DETR achieves $56.9%$ AP and surpasses the Deformable-DETR++ baseline by a large margin $(+1.7%$ AP). The performance of DINO-Deformable-DETR with Swin-L can still be boosted from $58.5%$ to $59.5%$ AP.

我们基于两个最先进的基线模型,将骨干网络容量从ResNet-50扩展到Swin-L [25]。如表3所示,Co-DETR实现了56.9%的平均精度(AP),大幅超越Deformable-DETR++基线(+1.7% AP)。采用Swin-L的DINO-Deformable-DETR性能仍可从58.5%提升至59.5% AP。
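The gains quoted in this section are plain differences between the $K>0$ rows and the $K=0$ baseline of the same detector. As a quick sanity check, the short sketch below recomputes them from the AP values of Table 3; the dictionary keys and the helper name `gains` are only illustrative labels.

```python
# AP values from Table 3, ordered as K = 0 (baseline), K = 1, K = 2.
table3_ap = {
    "Deformable-DETR++ (R50)": [47.1, 48.7, 49.5],
    "DINO-Deformable-DETR (R50)": [49.4, 51.0, 51.2],
    "Deformable-DETR++ (Swin-L)": [55.2, 56.4, 56.9],
    "DINO-Deformable-DETR (Swin-L)": [58.5, 59.3, 59.5],
}


def gains(aps):
    """AP improvement of each K > 0 setting over the K = 0 baseline."""
    baseline = aps[0]
    return [round(ap - baseline, 1) for ap in aps[1:]]


for name, aps in table3_ap.items():
    print(name, gains(aps))
```

The recomputed values match the parenthesized deltas in the table, for example +1.7 AP for Deformable-DETR++ with Swin-L and +1.0 AP for DINO-Deformable-DETR with Swin-L at $K=2$.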

4.3. Comparisons with the state-of-the-art

4.3. 与现有最优技术的比较

We apply our method with $K=2$ to Deformable-DETR++ and DINO. Besides, the quality focal loss [19] and NMS are adopted for our $\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR. We report the comparisons on COCO val in Table 4. Compared with other competitive counterparts, our method converges much faster. For example, $\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR readily achieves $52.1%$ AP when using only 12 epochs with the ResNet-50 backbone. Our method with Swin-L can obtain $58.9%$ AP for the $1\times$ scheduler, even surpassing other state-of-the-art frameworks on the $3\times$ scheduler. More importantly, our best model $\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR++ achieves $54.8%$ AP with ResNet-50 and $60.7%$ AP with Swin-L under 36-epoch training, outperforming all existing detectors with the same backbone by clear margins.

我们将 $K=2$ 的方法应用于 Deformable-DETR++ 和 DINO。此外,我们的 $\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR 采用了质量焦点损失 [19] 和非极大值抑制 (NMS)。表 4 展示了在 COCO val 上的对比结果。与其他竞争方法相比,我们的方法收敛速度显著更快。例如,$\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR 仅使用 12 个训练周期和 ResNet-50 骨干网络即可达到 $52.1%$ AP。采用 Swin-L 骨干网络时,我们的方法在 $1\times$ 调度器下可获得 $58.9%$ AP,甚至超越了其他使用 $3\times$ 调度器的先进框架。更重要的是,我们的最佳模型 $\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR++ 在 36 周期训练下,使用 ResNet-50 和 Swin-L 骨干网络分别达到 $54.8%$ 和 $60.7%$ AP,显著优于所有采用相同骨干网络的现有检测器。

To further explore the scalability of our method, we extend the backbone capacity to 304 million parameters. This large-scale backbone ViT-L [7] is pre-trained using a self-supervised learning method (EVA-02 [8]). We first pre-train $\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR with ViT-L on Objects365 for 26 epochs, then fine-tune it on the COCO dataset for 12 epochs. In the fine-tuning stage, the input resolution is randomly selected between $480\times2400$ and $1536\times2400$. The detailed settings are available in supplementary materials. Our results are evaluated with test-time augmentation. Table 5 presents the state-of-the-art comparisons on the

为了进一步探索我们方法的可扩展性,我们将骨干网络参数量扩展至3.04亿。这个大规模骨干网络ViT-L [7]采用自监督学习方法(EVA-02 [8])进行预训练。我们首先在Objects365数据集上用ViT-L预训练$\mathcal{C}\mathrm{o}$-DINO-Deformable-DETR 26个周期,然后在COCO数据集上微调12个周期。微调阶段输入分辨率在$480\times2400$和$1536\times2400$之间随机选择。详细设置见补充材料。我们的结果采用测试时增强进行评估。表5展示了当前最先进的

Table 4. Comparison to the state-of-the-art DETR variants on COCO val.

| Method | Backbone | Multi-scale | #query | #epochs | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|---|---|
| Conditional-DETR [26] | R50 | | 300 | 108 | 43.0 | 64.0 | 45.7 | 22.7 | 46.7 | 61.5 |
| Anchor-DETR [35] | R50 | | 300 | 50 | 42.1 | 63.1 | 44.9 | 22.3 | 46.2 | 60.0 |
| DAB-DETR [23] | R50 | | 900 | 50 | 45.7 | 66.2 | 49.0 | 26.1 | 49.4 | 63.1 |
| AdaMixer [9] | R50 | | 300 | 36 | 47.0 | 66.0 | 51.1 | 30.1 | 50.2 | 61.8 |
| Deformable-DETR [43] | R50 | | 300 | 50 | 46.9 | 65.6 | 51.0 | 29.6 | 50.1 | 61.6 |
| DN-Deformable-DETR [18] | R50 | | 300 | 50 | 48.6 | 67.4 | 52.7 | 31.0 | 52.0 | 63.7 |
| DINO-Deformable-DETR [39] | R50 | | 900 | 12 | 49.4 | 66.9 | 53.8 | 32.3 | 52.5 | 63.9 |
| DINO-Deformable-DETR$^{\dagger}$ [39] | R50 | | 900 | 36 | 51.2 | 69.0 | 55.8 | 35.0 | 54.3 | 65.3 |
| DINO-Deformable-DETR [39] | Swin-L (IN-22K) | | 900 | 36 | 58.5 | 77.0 | 64.1 | 41.5 | 62.3 | 74.0 |
| Group-DINO-Deformable-DETR [5] | Swin-L (IN-22K) | | 900 | 36 | 58.4 | - | - | 41.0 | 62.5 | 73.9 |
| H-Deformable-DETR [16] | R50 | | 300 | 12 | 48.7 | 66.4 | 52.9 | 31.2 | 51.5 | 63.5 |
| H-Deformable-DETR [16] | Swin-L (IN-22K) | | 900 | 36 | 57.9 | 76.8 | 63.6 | 42.4 | 61.9 | 73.4 |
| Co-Deformable-DETR | R50 | | 300 | 12 | 49.5 | 67.6 | 54.3 | 32.4 | 52.7 | 63.7 |
| Co-Deformable-DETR | Swin-L (IN-22K) | | 900 | 36 | 58.5 | 77.1 | 64.5 | 42.4 | 62.4 | 74.0 |
| Co-DINO-Deformable-DETR$^{\dagger}$ | R50 | | 900 | 12 | 52.1 | 69.4 | 57.1 | 35.4 | 55.4 | 65.9 |
| Co-DINO-Deformable-DETR$^{\dagger}$ | Swin-L (IN-22K) | | 900 | 12 | 58.9 | 76.9 | 64.8 | 42.6 | 62.7 | 75.1 |
| Co-DINO-Deformable-DETR$^{\dagger}$ | Swin-L (IN-22K) | | 900 | 24 | 59.8 | 77.7 | 65.5 | 43.6 | 63.5 | 75.5 |
| Co-DINO-Deformable-DETR$^{\dagger}$ | Swin-L (IN-22K) | | 900 | 36 | 60.0 | 77.7 | 66.1 | 44.6 | 63.9 | 75.7 |
| Co-DINO-Deformable-DETR++$^{\dagger}$ | R50 | | 900 | 12 | 52.1 | 69.3 | 57.3 | 35.4 | 55.5 | 67.2 |
| Co-DINO-Deformable-DETR++$^{\dagger}$ | R50 | | 900 | 36 | 54.8 | 72.5 | 60.1 | 38.3 | 58.4 | 69.6 |
| Co-DINO-Deformable-DETR++$^{\dagger}$ | Swin-L (IN-22K) | | 900 | 12 | 59.3 | 77.3 | 64.9 | 43.3 | 63.3 | 75.5 |
| Co-DINO-Deformable-DETR++$^{\dagger}$ | Swin-L (IN-22K) | | 900 | 24 | 60.4 | 78.3 | 66.4 | 44.6 | 64.2 | 76.5 |
| Co-DINO-Deformable-DETR++$^{\dagger}$ | Swin-L (IN-22K) | | 900 | 36 | 60.7 | 78.5 | 66.7 | 45.1 | 64.7 | 76.4 |

$\dagger$: 5 feature levels.

表 4: 在COCO验证集上与最先进DETR变体的对比

| 方法 | 主干网络 | 多尺度 | 查询数 | 训练轮数 | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|---|---|
| Conditional-DETR [26] | R50 | | 300 | 108 | 43.0 | 64.0 | 45.7 | 22.7 | 46.7 | 61.5 |
| Anchor-DETR [35] | R50 | | 300 | 50 | 42.1 | 63.1 | 44.9 | 22.3 | 46.2 | 60.0 |
| DAB-DETR [23] | R50 | | 900 | 50 | 45.7 | 66.2 | 49.0 | 26.1 | 49.4 | 63.1 |
| AdaMixer [9] | R50 | | 300 | 36 | 47.0 | 66.0 | 51.1 | 30.1 | 50.2 | 61.8 |
| Deformable-DETR [43] | R50 | | 300 | 50 | 46.9 | 65.6 | 51.0 | 29.6 | 50.1 | 61.6 |
| DN-Deformable-DETR [18] | R50 | | 300 | 50 | 48.6 | 67.4 | 52.7 | 31.0 | 52.0 | 63.7 |
| DINO-Deformable-DETR [39] | R50 | | 900 | 12 | 49.4 | 66.9 | 53.8 | 32.3 | 52.5 | 63.9 |
| DINO-Deformable-DETR$^{\dagger}$ [39] | R50 | | 900 | 36 | 51.2 | 69.0 | 55.8 | 35.0 | 54.3 | 65.3 |
| DINO-Deformable-DETR [39] | Swin-L (IN-22K) | | 900 | 36 | 58.5 | 77.0 | 64.1 | 41.5 | 62.3 | 74.0 |
| Group-DINO-Deformable-DETR [5] | Swin-L (IN-22K) | | 900 | 36 | 58.4 | - | - | 41.0 | 62.5 | 73.9 |
| H-Deformable-DETR [16] | R50 | | 300 | 12 | 48.7 | 66.4 | 52.9 | 31.2 | 51.5 | 63.5 |
| H-Deformable-DETR [16] | Swin-L (IN-22K) | | 900 | 36 | 57.9 | 76.8 | 63.6 | 42.4 | 61.9 | 73.4 |
| Co-Deformable-DETR | R50 | | 300 | 12 | 49.5 | 67.6 | 54.3 | 32.4 | 52.7 | 63.7 |
| Co-Deformable-DETR | Swin-L (IN-22K) | | 900 | 36 | 58.5 | 77.1 | 64.5 | 42.4 | 62.4 | 74.0 |
| Co-DINO-Deformable-DETR$^{\dagger}$ | R50 | | 900 | 12 | 52.1 | 69.4 | 57.1 | 35.4 | 55.4 | 65.9 |
| Co-DINO-Deformable-DETR$^{\dagger}$ | Swin-L (IN-22K) | | 900 | 12 | 58.9 | 76.9 | 64.8 | 42.6 | 62.7 | 75.1 |
| Co-DINO-Deformable-DETR$^{\dagger}$ | Swin-L (IN-22K) | | 900 | 24 | 59.8 | 77.7 | 65.5 | 43.6 | 63.5 | 75.5 |
| Co-DINO-Deformable-DETR$^{\dagger}$ | Swin-L (IN-22K) | | 900 | 36 | 60.0 | 77.7 | 66.1 | 44.6 | 63.9 | 75.7 |

| Co |