MEDAGENTS: Large Language Models as Collaborators for Zero-shot Medical Reasoning

MEDAGENTS: 大语言模型作为零样本医学推理的协作伙伴

Abstract

摘要

Large language models (LLMs), despite their remarkable progress across various general domains, encounter significant barriers in medicine and healthcare. This field faces unique challenges such as domain-specific terminologies and reasoning over specialized knowledge. To address these issues, we propose MEDAGENTS, a novel multi-disciplinary collaboration framework for the medical domain. MedAgents leverages LLM-based agents in a role-playing setting that participate in a collaborative multi-round discussion, thereby enhancing LLM proficiency and reasoning capabilities. This training-free framework encompasses five critical steps: gathering domain experts, proposing individual analyses, summa rising these analyses into a report, iterating over discussions until a consensus is reached, and ultimately making a decision. Our work focuses on the zeroshot setting, which is applicable in realworld scenarios. Experimental results on nine datasets (MedQA, MedMCQA, PubMedQA, and six subtasks from MMLU) establish that our proposed MEDAGENTS framework excels at mining and harnessing the medical expertise within LLMs, as well as extending its reasoning abilities. Our code can be found at https: //github.com/ger stein lab/MedAgents.

大语言模型 (LLMs) 虽然在多个通用领域取得了显著进展,但在医学和医疗健康领域仍面临重大障碍。该领域存在特定领域术语和专业知识的推理等独特挑战。为解决这些问题,我们提出了MEDAGENTS,一种面向医学领域的新型多学科协作框架。MedAgents利用基于LLM的智能体在角色扮演环境中参与协作式多轮讨论,从而提升LLM的专业水平和推理能力。这个无需训练的框架包含五个关键步骤:召集领域专家、提出独立分析、汇总分析形成报告、迭代讨论直至达成共识、最终做出决策。我们的研究聚焦于适用于现实场景的零样本设置。在九个数据集(MedQA、MedMCQA、PubMedQA以及MMLU的六项子任务)上的实验结果表明,我们提出的MEDAGENTS框架擅长挖掘和利用LLM中的医学专业知识,并能扩展其推理能力。代码详见https://github.com/gersteinlab/MedAgents。

1 Introduction

1 引言

Large language models (LLMs) (Brown et al., 2020; Scao et al., 2022; Chowdhery et al., 2022; Touvron et al., 2023; OpenAI, 2023) have exhibited notable generalization abilities across a wide range of tasks and applications (Lu et al., 2023; Zhou et al., 2023; Park et al., 2023), with these capabilities stemming from their extensive training on vast comprehensive corpora covering diverse topics. However, in real-world scenarios, LLMs tend to encounter domain-specific tasks that necessitate a combination of domain expertise and complex reasoning abilities (Moor et al., 2023; Wu et al., 2023b; Singhal et al., 2023a; Yang et al., 2023). Amidst this backdrop, a noteworthy research topic lies in the adoption of LLMs in the medical field, which has gained increasing prominence recently (Zhang et al., 2023b; Bao et al., 2023; Singhal et al., 2023a).

大语言模型 (LLMs) (Brown 等人, 2020; Scao 等人, 2022; Chowdhery 等人, 2022; Touvron 等人, 2023; OpenAI, 2023) 在广泛的任务和应用中展现出显著的泛化能力 (Lu 等人, 2023; Zhou 等人, 2023; Park 等人, 2023), 这些能力源于其对涵盖多元主题的海量综合语料库的广泛训练。然而在现实场景中, 大语言模型往往会遇到需要结合领域专业知识和复杂推理能力的特定领域任务 (Moor 等人, 2023; Wu 等人, 2023b; Singhal 等人, 2023a; Yang 等人, 2023)。在此背景下, 一个值得关注的研究主题是大语言模型在医疗领域的应用, 这一方向近期正获得越来越多的重视 (Zhang 等人, 2023b; Bao 等人, 2023; Singhal 等人, 2023a)。

Two major challenges prevent LLMs from effectively handling tasks in the medical sphere: (i) Limited volume and specificity of medical training data compared to the vast general text data, due to cost and privacy concerns (Thiru nav uk a rasu et al., 2023).1 (ii) The demand for extensive domain knowledge (Schmidt and Rikers, 2007) and advanced reasoning skills (Liévin et al., 2022) makes eliciting medical expertise via simple prompting challenging (Kung et al., 2023; Singhal et al., 2023a). Although numerous attempts, particularly within math and coding, have been made to enhance prompting methods (Besta et al., 2023; Lála et al., 2023; Wang et al., 2023b), strategies used in the medical field have been shown to induce hallucination (Umapathi et al., 2023; Harris, 2023; Ji et al., 2023), indicating the need for more robust approaches.

大语言模型在医疗领域有效处理任务面临两大挑战:(i) 由于成本和隐私问题 (Thirunavukarasu et al., 2023)[1],医疗训练数据的数量和特异性相较于海量通用文本数据更为有限。(ii) 对广泛领域知识 (Schmidt and Rikers, 2007) 和高级推理能力 (Liévin et al., 2022) 的需求,使得通过简单提示激发医疗专业知识具有挑战性 (Kung et al., 2023; Singhal et al., 2023a)。尽管在数学和编程等领域已有大量改进提示方法的尝试 (Besta et al., 2023; Lála et al., 2023; Wang et al., 2023b),但医疗领域采用的策略已被证明会引发幻觉现象 (Umapathi et al., 2023; Harris, 2023; Ji et al., 2023),这表明需要更稳健的方法。

Meanwhile, recent research has surprisingly witnessed the success of multi-agent collaboration (Xi et al., 2023; Wang et al., 2023d) which highlights the simulation of human activities (Du et al., 2023; Liang et al., 2023; Park et al., 2023) and optimizes the collective power of multiple agents (Chen et al., 2023; Li et al., 2023d; Hong et al., 2023). Through such design, the expertise implicitly embedded within LLMs, or that the model has encountered during its training, which may not be readily accessible via traditional prompting, is effectively brought to the fore.

与此同时,近期研究意外见证了多智能体协作的成功 (Xi et al., 2023; Wang et al., 2023d) ,这种协作凸显了对人类活动的模拟 (Du et al., 2023; Liang et al., 2023; Park et al., 2023) ,并优化了多个智能体的集体力量 (Chen et al., 2023; Li et al., 2023d; Hong et al., 2023) 。通过这种设计,大语言模型中隐性嵌入的专业知识,或模型在训练过程中接触过的、传统提示方法难以调用的知识,被有效地激发出来。

A 66-year-old male with a history of heart attack and recurrent stomach ulcers is experiencing persistent cough and chest pain, and recent CT scans indicate a possible lung tumor. Designing a treatment plan that minimizes risk and maximizes outcomes is the current concern due to his deteriorating health and medical history.

一名66岁男性患者,有心脏病发作史和复发性胃溃疡,现出现持续性咳嗽和胸痛症状,近期CT扫描提示可能存在肺部肿瘤。鉴于其健康状况恶化和复杂病史,当前需制定一个风险最小化、疗效最大化的治疗方案。

Figure 1: Diagram of our proposed MEDAGENTS framework. Given a medical question as input, the framework performs reasoning in five stages: (i) expert gathering, (ii) analysis proposition, (iii) report sum mari z ation, (iv) collaborative consultation, and (v) decision making.

图 1: 我们提出的MEDAGENTS框架示意图。该框架以医疗问题作为输入,通过五个阶段进行推理:(i) 专家召集,(ii) 分析提案,(iii) 报告汇总,(iv) 协作会诊,(v) 决策制定。

This process subsequently enhances the model’s reasoning capabilities throughout multiple rounds of interaction (Wang et al., 2023c; Fu et al., 2023).

这一过程随后在多轮交互中增强了模型的推理能力 (Wang et al., 2023c; Fu et al., 2023)。

Motivated by these notions, we pioneer a multi-disciplinary collaboration framework (MedAgents) specifically tailored to the clinical domain. Our objective centers on unveiling the intrinsic medical knowledge embedded in LLMs and reinforcing reasoning proficiency in a training-free manner. As shown in Figure 1, the MEDAGENTS framework gathers experts from diverse disciplines and reaches consistent conclusions through collaborative discussions.

受这些理念启发,我们首创了一个专为临床领域定制的多学科协作框架(MedAgents)。该框架的核心目标是揭示大语言模型(LLM)中内嵌的医学知识,并以免训练方式增强推理能力。如图1所示,MEDAGENTS框架汇集了来自不同学科的专家,通过协作讨论达成一致结论。

Based on our MEDAGENTS framework, we conduct experiments on nine datasets, including MedQA (Jin et al., 2021), MedMCQA (Pal et al., 2022), PubMedQA (Jin et al., 2019) and six medical subtasks from MMLU (Hendrycks et al., 2020).2 To better align with real-world application scenarios, our study focuses on the zero-shot setting, which can serve as a plug-and-play method to supplement existing medical LLMs such as MedPaLM 2 (Singhal et al., 2023b). Encouragingly, our proposed approach outperforms settings for both chain-of-thought (CoT) and self-consistency (SC) prompting methods. Most notably, our approach achieves better performance under the zero-shot setting compared with the 5-shot baselines.

基于我们的MEDAGENTS框架,我们在九个数据集上进行了实验,包括MedQA (Jin et al., 2021)、MedMCQA (Pal et al., 2022)、PubMedQA (Jin et al., 2019)以及来自MMLU (Hendrycks et al., 2020)的六个医学子任务。为了更好地贴合实际应用场景,我们的研究聚焦于零样本设置,这种方法可作为即插即用的方案来补充现有医疗大语言模型,如MedPaLM 2 (Singhal et al., 2023b)。令人鼓舞的是,我们提出的方法在思维链(CoT)和自我一致性(SC)提示方法上均表现更优。最显著的是,我们的方法在零样本设置下甚至超越了5样本基线模型的性能。

Based on our results, we further investigate the influence of agent numbers and conduct human evaluations to pinpoint the limitations and issues prevalent in our approach. We find four common categories of errors: (i) lack of domain knowledge, (ii) mis-retrieval of domain knowledge, (iii) consistency errors, and (iv) CoT errors. Targeted refinements focused on mitigating these particular shortcomings would enhance the model’s proficiency and reliability.

根据我们的结果,我们进一步研究了智能体数量的影响,并通过人工评估来找出我们方法中普遍存在的局限性和问题。我们发现错误主要分为四类:(i) 缺乏领域知识,(ii) 领域知识检索错误,(iii) 一致性错误,以及 (iv) 思维链 (CoT) 错误。针对这些特定缺陷进行有针对性的改进,将提高模型的熟练度和可靠性。

Our contributions are summarized as follows:

我们的贡献总结如下:

(i) To the best of our knowledge, we are the first to propose a multi-agent framework within the medical domain and explore how multi-agent communication within the medical setting can lead to a consensus decision, adding a novel dimension to the current literature on medical question answering.

(i) 据我们所知,我们是首个在医疗领域提出多智能体框架并探索医疗场景中多智能体通信如何达成共识决策的研究,为当前医学问答文献增添了新维度。

(ii) Our proposed MEDAGENTS framework enjoys enhanced faithfulness and interpret ability by harnessing role-playing and collaborative agent discussion. And we demonstrate that role-playing allows LLM to explicitly reason with accurate knowledge, without the need for retrieval augmented generation (RAG). Examples illustrating interpret ability are shown in Appendix B.

(ii) 我们提出的MEDAGENTS框架通过角色扮演和智能体协作讨论,显著提升了忠实度和可解释性。研究表明,角色扮演能使大语言模型显式运用准确知识进行推理,而无需依赖检索增强生成(RAG)。可解释性示例如附录B所示。

(iii) Experimental results on nine datasets

(iii) 在九个数据集上的实验结果

Question: A 3-month-old infant is brought to her pediatrician because she coughs and seems to have difficulty breathing while feeding. In addition, she seems to have less energy compared to other babies and appears listless throughout the day. She was born by cesarean section to a G1P1 woman with no prior medical history and had a normal APGAR score at birth. Her parents say that she has never been observed to turn blue. Physical exam reveals a high-pitched hol o systolic murmur that is best heard at the lower left sternal border. The most likely cause of this patient's symptoms is associated with which of the following abnormalities? Options: (A) 22q11 deletion (B) Deletion of genes on chromosome 7 (C) Lithium exposure in utero (D) Retinoic acid exposure in utero

问题:一名3个月大的女婴因喂养时咳嗽和呼吸困难被带到儿科医生处就诊。此外,她比其他婴儿精力差,整天显得无精打采。该婴儿通过剖宫产出生,母亲为G1P1(初次妊娠初次分娩),无既往病史,出生时APGAR评分正常。父母表示从未观察到婴儿出现发绀。体格检查发现胸骨左下缘可闻及高调全收缩期杂音。该患者症状最可能的原因与以下哪种异常相关?

选项:

(A) 22q11缺失

(B) 7号染色体基因缺失

(C) 子宫内锂暴露

(D) 子宫内维甲酸暴露

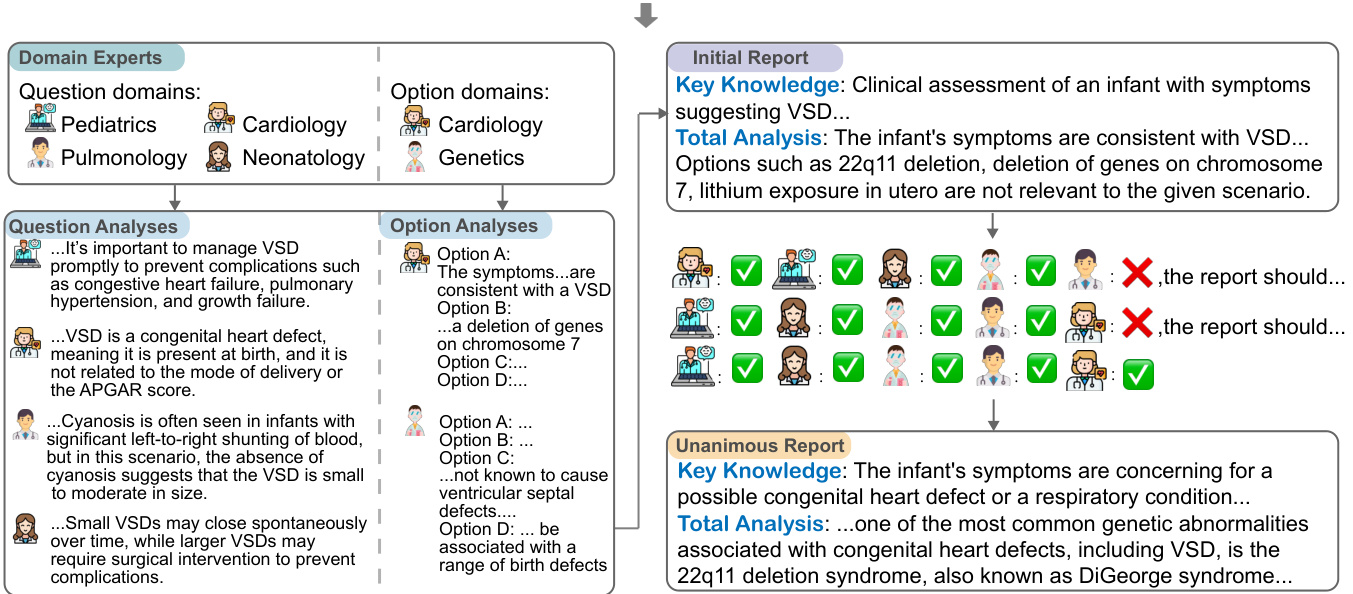

Figure 2: Illustrative example of our proposed MedAgents, a multi-disciplinary collaboration framework. The questions and options are first presented, with domain experts subsequently gathered. The recruited experts conduct thorough Question and Option analyses based on their respective fields. An initial report synthesizing these analyses is then prepared to concisely represent the performed evaluations. The assembled LLM experts, possessing respective disciplinary backgrounds, engage in a discussion over the initial report, voicing agreements and disagreements. Ultimately, after iterative refinement and consultation, a unanimous report is generated that best represents the collective expert knowledge and reasoning on the given medical problem.

图 2: 我们提出的MedAgents框架示意图,这是一个多学科协作框架。首先呈现问题与选项,随后召集领域专家。被招募的专家根据各自领域进行全面的问题与选项分析。接着汇总这些分析形成初步报告,简明呈现已完成的评估。具备相应学科背景的大语言模型专家们围绕初步报告展开讨论,表达赞同或反对意见。最终经过迭代优化与协商,生成一份代表集体专家知识及医学问题推理的共识报告。

demonstrate the general effectiveness of our proposed MEDAGENTS framework. Besides, we identify and categorize common error types in our approach through rigorous human evaluation to shed light on future studies.

我们提出的MEDAGENTS框架展现了普遍有效性。此外,通过严格的人工评估,我们识别并分类了该方法中的常见错误类型,为未来研究提供了启示。

2 Method

2 方法

This section presents the details of our proposed multi-disciplinary collaboration MEDAGENTS framework. Figure 1 and 2 give an overview and an illustrative example of its pipeline, respectively. Our proposed MEDAGENTS framework works in five stages: (i) expert gathering: assemble experts from various disciplines based on the clinical question; (ii) analysis proposition: domain experts present their own analyses with their expertise; (iii) report sum mari z ation: develop a report summary on the basis of previous analyses; (iv) collaborative consultation: hold a consultation over the summarized report with the experts. The report will be revised repeatedly until every expert has given their approval. (v) decision making: derive a final decision from the unanimous report.3

本节详细介绍我们提出的多学科协作MEDAGENTS框架。图1和图2分别展示了其流程概览和示例。我们提出的MEDAGENTS框架包含五个阶段:(i) 专家召集:根据临床问题组建跨学科专家团队;(ii) 分析提案:领域专家运用专业知识提出各自分析;(iii) 报告汇总:基于前期分析形成报告摘要;(iv) 协作会诊:组织专家对汇总报告进行协商。报告将反复修改直至获得所有专家认可。(v) 决策制定:从达成共识的报告中得出最终结论。3

2.1 Expert Gathering

2.1 专家收集

Given a clinical question $q$ and a set of options $o p={o_ {1},o_ {2},\ldots,o_ {k}}$ where $k$ is the number of options, the goal of the Expert Gathering stage is to recruit a group of question domain experts $\mathcal{Q}\mathcal{D}={q d_ {1},q d_ {2},\ldots,q d_ {m}}$ and option domain experts $\begin{array}{l l l}{{\mathcal O D}}&{{=}}&{{{o d_ {1},o d_ {2},\dots,o d_ {n}}}}\end{array}$ where $m$ and $n$ represent the number of question domain experts and option domain experts, respectively. Specifically, we assign a role to the model and provide instructions to guide the model output to the corresponding domains based on the input question and options, respectively:

给定一个临床问题 $q$ 和一组选项 $o p={o_ {1},o_ {2},\ldots,o_ {k}}$ ,其中 $k$ 是选项数量,专家召集阶段的目标是招募一组问题领域专家 $\mathcal{Q}\mathcal{D}={q d_ {1},q d_ {2},\ldots,q d_ {m}}$ 和选项领域专家 $\begin{array}{l l l}{{\mathcal O D}}&{{=}}&{{{o d_ {1},o d_ {2},\dots,o d_ {n}}}}\end{array}$ ,其中 $m$ 和 $n$ 分别表示问题领域专家和选项领域专家的数量。具体而言,我们为模型分配角色,并根据输入问题和选项分别提供指导,引导模型输出到相应领域:

$$

\begin{array}{r l}&{\mathcal{Q}\mathcal{D}=\mathrm{LLM}\left(q,\boldsymbol{\mathsf{r}}_ {\mathsf{q d}},\mathsf{p r o m p t}_ {\mathsf{q d}}\right),}\ &{\mathcal{O}\mathcal{D}=\mathrm{LLM}\left(q,o p,\boldsymbol{\mathsf{r}}_ {\mathsf{o d}},\mathsf{p r o m p t}_ {\mathsf{o d}}\right),}\end{array}

$$

$$

\begin{array}{r l}&{\mathcal{Q}\mathcal{D}=\mathrm{LLM}\left(q,\boldsymbol{\mathsf{r}}_ {\mathsf{q d}},\mathsf{p r o m p t}_ {\mathsf{q d}}\right),}\ &{\mathcal{O}\mathcal{D}=\mathrm{LLM}\left(q,o p,\boldsymbol{\mathsf{r}}_ {\mathsf{o d}},\mathsf{p r o m p t}_ {\mathsf{o d}}\right),}\end{array}

$$

where $\left(\boldsymbol{\Gamma}_ {\mathrm{qd}},\mathsf{p r o m p t}_ {\mathrm{qd}}\right)$ and $\left(\Gamma_ {\tt o d},\tt p r o m p t_ {\tt o d}\right)$ stand for the system role and guideline prompt to gather domain experts for the question $q$ and options $o p$ .

其中 $(\boldsymbol{\Gamma}_ {\mathrm{qd}},\mathsf{p r o m p t}_ {\mathrm{qd}})$ 和 $(\Gamma_ {\tt o d},\tt p r o m p t_ {\tt o d})$ 分别表示用于召集领域专家针对问题 $q$ 和选项 $o p$ 的系统角色与指导提示。

Table 1: Summary of the Datasets. Part of the values are from the appendix of (Singhal et al., 2023a).

| Dataset | Format | Choice | TestingSize | Domain |

| MedQA | Question+ Answer | A/B/C/D | 1273 | USMedical LicensingExamination |

| MedMCQA | Question+Answer | A/B/C/DandExplanations | 6.1K | AIIMSandNEETPGentranceexams |

| PubMedQA | Question+Context+Answer | Yes/No/Maybe | 500 | PubMedpaper abstracts |

| MMLU | Question+Answer | A/B/C/D | 1089 | GraduateRecordExamination & US Medical Licensing Examination |

表 1: 数据集摘要。部分数据来自 (Singhal et al., 2023a) 的附录。

| 数据集 | 格式 | 选项 | 测试集大小 | 领域 |

|---|---|---|---|---|

| MedQA | 问题+答案 | A/B/C/D | 1273 | 美国医师执照考试 |

| MedMCQA | 问题+答案 | A/B/C/D及解释 | 6.1K | AIIMS和NEETPG入学考试 |

| PubMedQA | 问题+上下文+答案 | 是/否/可能 | 500 | PubMed论文摘要 |

| MMLU | 问题+答案 | A/B/C/D | 1089 | 研究生入学考试 & 美国医师执照考试 |

2.2 Analysis Proposition

2.2 分析命题

After gathering domain experts for the question $q$ and options $o p$ , we aim to inquire experts to generate corresponding analyses prepared for later reasoning: $\mathcal{Q A}={q a_ {1},q a_ {2},\dots,q a_ {m}}$ and $\mathcal{O A}={o a_ {1},o a_ {2},...,o a_ {n}}$ .

在针对问题$q$和选项$op$召集领域专家后,我们的目标是请专家生成相应分析,为后续推理做准备:$\mathcal{QA}={qa_ {1},qa_ {2},\dots,qa_ {m}}$和$\mathcal{OA}={oa_ {1},oa_ {2},...,oa_ {n}}$。

Question Analyses Given a question $q$ and a question domain $q d_ {i}\in\mathcal{Q}\mathcal{D}$ , we ask LLM to serve as an expert specialized in domain $q d_ {i}$ and derive the analyses for the question $q$ following the guideline prompt prompt $\mathbf{q}\mathsf{a}$ :

问题分析

给定一个问题 $q$ 和一个问题领域 $q d_ {i}\in\mathcal{Q}\mathcal{D}$,我们要求大语言模型 (LLM) 作为专注于领域 $q d_ {i}$ 的专家,并按照指导提示 prompt $\mathbf{q}\mathsf{a}$ 对问题 $q$ 进行分析:

$$

q a_ {i}=\mathrm{LLM}\left(q,q d_ {i},\mathbf{r}_ {\mathbf{q}\mathbf{a}},\mathsf{p r o m p t}_ {\mathbf{q}\mathbf{a}}\right).

$$

$$

q a_ {i}=\mathrm{LLM}\left(q,q d_ {i},\mathbf{r}_ {\mathbf{q}\mathbf{a}},\mathsf{p r o m p t}_ {\mathbf{q}\mathbf{a}}\right).

$$

Option Analyses Now that we have an option domain $o d_ {i}$ and question analyses $\mathcal{Q A}$ , we can further analyze the options by taking into account both the relationship between the options and the relationship between the options and question. Concretely, we deliver the question $q$ , the options $o p$ , a specific option domain $o d_ {i}\in\mathcal{O}\mathcal{D}$ , and the question analyses $\mathcal{Q A}$ to the LLM:

选项分析

现在我们有了一个选项域 $o d_ {i}$ 和问题分析 $\mathcal{Q A}$,我们可以通过考虑选项之间的关系以及选项与问题之间的关系来进一步分析选项。具体来说,我们将问题 $q$、选项 $o p$、特定选项域 $o d_ {i}\in\mathcal{O}\mathcal{D}$ 以及问题分析 $\mathcal{Q A}$ 输入到大语言模型中:

$$

o a_ {i}=\mathrm{LLM}\left(q,o p,o d_ {i},\mathcal{Q}\mathcal{A},\mathfrak{r}_ {\mathsf{o a}},\mathsf{p r o m p t}_ {\mathsf{o a}}\right).

$$

$$

o a_ {i}=\mathrm{LLM}\left(q,o p,o d_ {i},\mathcal{Q}\mathcal{A},\mathfrak{r}_ {\mathsf{o a}},\mathsf{p r o m p t}_ {\mathsf{o a}}\right).

$$

2.3 Report Sum mari z ation

2.3 报告摘要

In the Report Sum mari z ation stage, we attempt to summarize and synthesize previous analyses from various domain experts $\mathcal{Q A}\cup\mathcal{O A}$ . Given question analyses $\mathcal{Q A}$ and option analyses $\mathcal{O A}$ , we ask LLMs to play the role of a medical report assistant, allowing it to generate a synthesized report by extracting key knowledge and total analysis based on previous analyses:

在报告汇总阶段,我们尝试综合各领域专家的先前分析$\mathcal{Q A}\cup\mathcal{O A}$。基于问题分析$\mathcal{Q A}$和选项分析$\mathcal{O A}$,我们让大语言模型扮演医疗报告助手的角色,使其能够通过提取关键知识并整合先前分析来生成综合报告:

$$

R e p o=\mathrm{LLM}\left(\mathcal{Q A},\mathcal{O A},\mathfrak{r}_ {r s},\mathfrak{p r o m p t}_ {r s}\right).

$$

$$

R e p o=\mathrm{LLM}\left(\mathcal{Q A},\mathcal{O A},\mathfrak{r}_ {r s},\mathfrak{p r o m p t}_ {r s}\right).

$$

2.4 Collaborative Consultation

2.4 协作咨询

Since we have a preliminary summary report Repo, the objective of the Collaborative Consultation stage is to engage distinct domain experts in multiple rounds of discussions and ultimately render a summary report that is recognized by all

由于我们已有一份初步的总结报告Repo,协作咨询阶段的目标是让不同领域的专家参与多轮讨论,最终形成一份获得各方认可的总结报告

Algorithm 1: Collaborative Consultation

算法 1: 协同咨询

experts. The overall procedure of this phase is presented in Algorithm 1. During each round of discussions, the experts give their votes $(y e s/n o)$ : $v o t e=\mathsf{L L M}\left(R e p o,\mathsf{r_ {v o t e}},\mathsf{p r o m p t_ {v o t e}}\right)$ , as well as modification opinions if they vote no for the current report. Afterward, the report will be revised based on the modification opinions. Specifically, during the $j$ -th round of discussion, we note the modification comments from the experts as $M o d_ {j}$ , then we can acquire the updated report as $R e p o_ {j}=$ LLM $(R e p o_ {j-1},M o d_ {j}$ , $\mathsf{p r o m p t}_ {\mathsf{m o d}},$ . In this way, the discussions are held iterative ly until all experts vote yes for the final report $R e p o_ {f}$ or the discussion number attains the maximum attempts threshold.

专家。该阶段的整体流程如算法1所示。在每轮讨论中,专家会给出投票结果$(是/否)$: $vote=\mathsf{大语言模型}\left(Repo,\mathsf{r_ {vote}},\mathsf{prompt_ {vote}}\right)$,若对当前报告投反对票则同时提出修改意见。随后报告将根据修改意见进行修订。具体而言,在第$j$轮讨论中,我们将专家的修改意见记为$Mod_ {j}$,则可通过$Repo_ {j}=$大语言模型$(Repo_ {j-1},Mod_ {j}$,$\mathsf{prompt_ {mod}},$获得更新后的报告。如此迭代进行讨论,直至所有专家对最终报告$Repo_ {f}$投赞成票,或讨论次数达到最大尝试阈值。

2.5 Decision Making

2.5 决策制定

In the end, we demand LLM act as a medical decision maker to derive the final answer to the clinical question $q$ referring to the unanimous

最终,我们要求大语言模型 (LLM) 扮演医疗决策者的角色,根据一致意见推导出临床问题 $q$ 的最终答案。

report $R e p o_ {f}$

报告 $R e p o_ {f}$

$$

a n s=\mathrm{LLM}\left(q,o p,R e p o_ {f},\mathsf{p r o m p t}_ {\mathsf{d m}}\right).

$$

$$

a n s=\mathrm{LLM}\left(q,o p,R e p o_ {f},\mathsf{p r o m p t}_ {\mathsf{d m}}\right).

$$

3 Experiments

3 实验

3.1Setup

3.1 设置

Tasks and Datasets. We evaluate our MEDAGENTS framework on three benchmark datasets MedQA (Jin et al., 2021), MedMCQA (Pal et al., 2022), and PubMedQA (Jin et al., 2019), as well as six subtasks most relevant to the medical domain from MMLU datasets (Hendrycks et al., 2020) including anatomy, clinical knowledge, college medicine, medical genetics, professional medicine, and college biology. Table 1 summarizes the data statistics. More information about the evaluated datasets is presented in Appendix C.

任务与数据集。我们在三个基准数据集MedQA (Jin et al., 2021)、MedMCQA (Pal et al., 2022)和PubMedQA (Jin et al., 2019)上评估MEDAGENTS框架,同时选取MMLU数据集 (Hendrycks et al., 2020)中与医学领域最相关的六个子任务:解剖学、临床知识、大学医学、医学遗传学、专业医学和大学生物学。表1总结了数据统计信息,更多评估数据集详情见附录C。

Implementation. We utilize the popular and publicly available GPT-3.5-Turbo and GPT-4 (OpenAI, 2023) from Azure OpenAI Service.5 All experiments are conducted in the zero-shot setting. The temperature is set to 1.0 and top_ $p$ to 1.0 for all generations. The iteration number and temperature of SC are 5 and 0.7, respectively. The number $k$ of options is 4 except for PubMedQA (3). The numbers of domain experts for the question and options are set as: $m=5,n=2$ except for PubMedQA $(m=4,n=2)$ . The number of maximum attempts $t$ is set as 5. We randomly sample 300 examples for each dataset and conduct experiments on them. Statistically, the cost of our method is $\$1.41$ for $100\mathrm{QA}$ examples (about $\mathbf{\Sigma}_ {\phi1.4}$ per question) and the inference time per example is about $40s$ .6

实现。我们使用了Azure OpenAI服务中广受欢迎且公开可用的GPT-3.5-Turbo和GPT-4 (OpenAI, 2023)。所有实验均在零样本设置下进行。生成过程中,温度参数设为1.0,top_ $p$ 设为1.0。SC (Self-Consistency) 的迭代次数和温度分别设置为5和0.7。选项数量 $k$ 默认为4(PubMedQA数据集为3)。问题和选项的领域专家数量设置为 $m=5,n=2$ (PubMedQA为 $(m=4,n=2)$ )。最大尝试次数 $t$ 设为5。每个数据集随机抽取300个样本进行实验。统计显示,本方法处理100个QA样本的成本为 $\$1.41$ (约 $\mathbf{\Sigma}_ {\phi1.4}$ /问题),单样本推理时间约 $40s$。

Baselines. We have utilized models that are readily accessible through public APIs with the following baselines:

基线模型。我们使用了可通过公共API轻松访问的以下基准模型:

Setting w/o CoT: Zero-shot (Kojima et al., 2022) appends the prompt /emphA: The answer is to a given question and utilizes it as the input fed into LLMs. Few-shot (Brown et al., 2020) introduces several manually templated demonstrations, structured as [Q: q, A: The answer is a], preceding the input question.

无思维链设置:零样本 (Kojima et al., 2022) 在给定问题后追加提示符 /emphA: The answer is 并将其作为大语言模型的输入。少样本 (Brown et al., 2020) 则在输入问题前引入若干人工模板化的示例,其结构为 [Q: q, A: The answer is a]。

Setting w/ CoT: Zero-shot CoT (Kojima et al., 2022) directly incorporates the prompt Let’s think step by step after a question to facilitate inference. Few-shot CoT (Wei et al., 2022) adopts comparable methodologies to Few-shot but distinguishes itself by integrating rationales before deducing the answer.

设置 CoT 方法:零样本 CoT (Kojima et al., 2022) 直接在问题后加入提示词 "Let’s think step by step" 以促进推理。少样本 CoT (Wei et al., 2022) 采用与少样本类似的方法,但在推导答案前会整合推理过程以形成差异。

• Setting w/ SC: SC (Wang et al., 2022) serves as an additional sampling method on Zero-shot CoT and Few-shot CoT, which yields the majority answer by sampling multiple chains.

• 设置 w/ SC: SC (Wang et al., 2022) 作为零样本 CoT 和少样本 CoT 的额外采样方法,通过采样多条链生成多数答案。

3.2 Main Results

3.2 主要结果

Table 2 presents the main results on the nine datasets, including MedQA, MedMCQA, PubMedQA, and six subtasks from MMLU. We compare our method with several baselines in both zero-shot and few-shot settings. Notably, our proposed MEDAGENTS framework outperforms the zero-shot baseline methods by a large margin, indicating the effectiveness of our MEDAGENTS framework in real-world application scenarios. Furthermore, our approach achieves comparable performance under the zero-shot setting compared with the strong baseline Few-shot $C o T{+}S C$ .

表 2: 展示了在九个数据集上的主要结果,包括 MedQA、MedMCQA、PubMedQA 以及 MMLU 的六个子任务。我们将所提方法与零样本和少样本设置下的多个基线方法进行了比较。值得注意的是,我们提出的 MEDAGENTS 框架大幅优于零样本基线方法,这表明 MEDAGENTS 框架在实际应用场景中的有效性。此外,在零样本设置下,我们的方法与强基线 Few-shot $C o T{+}S C$ 相比也取得了相当的性能。

Interestingly, the introduction of CoT occasionally leads to a surprising degradation in performance.7 We have found that reliance on CoT in isolation can inadvertently result in hallucinations - spurious outputs typically associated with the misapplication of medical terminologies. In contrast, our multi-agent roleplaying methodology effectively mitigates these issues, thus underscoring its potential as a more robust approach in medically oriented LLM applications.

有趣的是,引入思维链 (CoT) 偶尔会导致性能出现意外的下降[7]。我们发现,单独依赖思维链可能会无意中导致幻觉现象——即通常与医学术语误用相关的虚假输出。相比之下,我们的多智能体角色扮演方法有效缓解了这些问题,从而凸显了其在医学导向的大语言模型应用中作为一种更稳健方法的潜力。

4 Analysis

4 分析

4.1 Ablation Study

4.1 消融实验

Since our MEDAGENTS framework simulates a multi-disciplinary collaboration process that contains multiple intermediate steps, a natural question is whether each intermediate step contributes to the ultimate result. To investigate this, we ablate three major processes, namely analysis proposition, report sum mari z ation and collaborative consultation. Results in Table 3 show that all of these processes are non-trivial. Notably, the proposition of MEDAGENTS substantially boosts the performance (i.e., $55.0%{\rightarrow}62.0%$ ), whereas the subsequent processes achieve relatively slight improvements over the previous one (i.e., $62.0%{\rightarrow}65.0/67.0%)$ . This suggests that the initial role-playing agents are responsible for exploring medical knowledge of various levels and aspects within LLMs, while the following processes play a role in further verification and revision.

由于我们的MEDAGENTS框架模拟了一个包含多个中间步骤的多学科协作流程,一个自然的问题是每个中间步骤是否对最终结果有所贡献。为了研究这一点,我们消融了三个主要流程,即分析提案、报告总结和协作咨询。表3中的结果显示所有这些流程都不可忽视。值得注意的是,MEDAGENTS的提案显著提升了性能(即$55.0%{\rightarrow}62.0%$),而后续流程相对于前一步仅实现了相对较小的改进(即$62.0%{\rightarrow}65.0/67.0%)$。这表明初始的角色扮演智能体负责在大语言模型中探索不同层次和方面的医学知识,而后续流程则起到进一步验证和修正的作用。

| Method | MedQA MedMCQA PubMedQA Anatomy | Clinical knowledge medicine genetics | College Medical Professional College medicine | biology | Avg. | |||||

| Flan-Palm | ||||||||||

| Few-shot CoT | 60.3 | 53.6 | 77.2 | 66.7 | 77.0 | 83.3 | 75.0 | 76.5 | 71.1 | 71.2 |

| Few-shot CoT + SC | 67.6 | 57.6 | 75.2 | 71.9 | 80.4 | 88.9 | 74.0 | 83.5 | 76.3 | 75.0 |

| GPT-3.5 | ||||||||||

| * few-shot setting | ||||||||||

| Few-shot | 54.7 | 56.7 | 67.6 | 65.9 | 71.3 | 59.0 | 72.0 | 75.7 | 73.6 | 66.3 |

| Few-shot CoT | 55.3 | 54.7 | 71.4 | 48.1 | 65.7 | 55.5 | 57.0 | 69.5 | 61.1 | 59.8 |

| Few-shot CoT + SC | 62.1 | 58.3 | 73.4 | 70.4 | 76.2 | 69.8 | 78.0 | 79.0 | 77.2 | 71.6 |

| * zero-shot setting | ||||||||||

| Zero-shot | 54.3 | 56.3 | 73.7 | 61.5 | 76.2 | 63.6 | 74.0 | 75.4 | 75.0 | 67.8 |

| Zero-shot CoT | 44.3 | 47.3 | 61.3 | 63.7 | 61.9 | 53.2 | 66.0 | 62.1 | 65.3 | 58.3 |

| Zero-shot CoT + SC | 61.3 | 52.5 | 75.7 | 71.1 | 75.1 | 68.8 | 76.0 | 82.3 | 75.7 | 70.9 |

| MedAgents (Ours) | 64.1 | 59.3 | 72.9 | 65.2 | 77.7 | 69.8 | 79.0 | 82.1 | 78.5 | 72.1 |

| GPT-4 | ||||||||||

| * few-shot setting | ||||||||||

| Few-shot | 70.1 | 89.5 | 75.6 | 93.0 | 91.5 | 91.7 | 82.3 | |||

| Few-shot CoT | 76.6 | 73.4 | 79.3 | 63.2 | 73.3 | |||||

| Few-shot CoT + SC | 73.3 | 63.2 | 74.9 | 75.6 | 89.9 | 61.0 | 79.0 | 79.8 | ||

| 82.9 | 73.1 | 75.6 | 80.7 | 90.0 | 88.2 | 90.0 | 95.2 | 93.0 | 85.4 | |

| * zero-shot setting | ||||||||||

| Zero-shot | 73.0 | 69.0 | 76.2 | 78.5 | 83.3 | 75.6 | 90.0 | 90.0 | 90.0 | 80.6 |

| Zero-shot CoT | 61.8 | 69.0 | 71.0 | 82.1 | 85.2 | 80.8 | 92.0 | 93.5 | 91.7 | 80.8 |

| Zero-shot CoT + SC | 74.5 | 70.1 | 75.3 | 80.0 | 86.3 | 81.2 | 93.0 | 94.8 | 91.7 | 83.0 |

| MedAgents (Ours) | 83.7 | 74.8 | 76.8 | 83.5 | 91.0 | 87.6 | 93.0 | 96.0 | 94.3 | 86.7 |

Table 2: Main results (Acc). SC denotes the self-consistency prompting method. Results in bold are the best performances.

| 方法 | MedQA | MedMCQA | PubMedQA | Anatomy | 临床知识 | 医学遗传学 | 大学医学专业 | 大学生物学 | 平均分 |

|---|---|---|---|---|---|---|---|---|---|

| * * Flan-Palm* * | |||||||||

| 少样本思维链 (Few-shot CoT) | 60.3 | 53.6 | 77.2 | 66.7 | 77.0 | 83.3 | 75.0 | 76.5 | 71.1 |

| 少样本思维链+自洽 (Few-shot CoT + SC) | 67.6 | 57.6 | 75.2 | 71.9 | 80.4 | 88.9 | 74.0 | 83.5 | 76.3 |

| * * GPT-3.5* * | |||||||||

| * 少样本设定 | |||||||||

| 少样本 | 54.7 | 56.7 | 67.6 | 65.9 | 71.3 | 59.0 | 72.0 | 75.7 | 73.6 |

| 少样本思维链 | 55.3 | 54.7 | 71.4 | 48.1 | 65.7 | 55.5 | 57.0 | 69.5 | 61.1 |

| 少样本思维链+自洽 | 62.1 | 58.3 | 73.4 | 70.4 | 76.2 | 69.8 | 78.0 | 79.0 | 77.2 |

| * 零样本设定 | |||||||||

| 零样本 | 54.3 | 56.3 | 73.7 | 61.5 | 76.2 | 63.6 | 74.0 | 75.4 | 75.0 |

| 零样本思维链 | 44.3 | 47.3 | 61.3 | 63.7 | 61.9 | 53.2 | 66.0 | 62.1 | 65.3 |

| 零样本思维链+自洽 | 61.3 | 52.5 | 75.7 | 71.1 | 75.1 | 68.8 | 76.0 | 82.3 | 75.7 |

| MedAgents (Ours) | 64.1 | 59.3 | 72.9 | 65.2 | 77.7 | 69.8 | 79.0 | 82.1 | 78.5 |

| * * GPT-4* * | |||||||||

| * 少样本设定 | |||||||||

| 少样本 | 70.1 | 89.5 | 75.6 | 93.0 | 91.5 | 91.7 | |||

| 少样本思维链 | 76.6 | 73.4 | 79.3 | 63.2 | |||||

| 少样本思维链+自洽 | 73.3 | 63.2 | 74.9 | 75.6 | 89.9 | 61.0 | 79.0 | 79.8 | |

| 82.9 | 73.1 | 75.6 | 80.7 | 90.0 | 88.2 | 90.0 | 95.2 | 93.0 | |

| * 零样本设定 | |||||||||

| 零样本 | 73.0 | 69.0 | 76.2 | 78.5 | 83.3 | 75.6 | 90.0 | 90.0 | 90.0 |

| 零样本思维链 | 61.8 | 69.0 | 71.0 | 82.1 | 85.2 | 80.8 | 92.0 | 93.5 | 91.7 |

| 零样本思维链+自洽 | 74.5 | 70.1 | 75.3 | 80.0 | 86.3 | 81.2 | 93.0 | 94.8 | 91.7 |

| MedAgents (Ours) | 83.7 | 74.8 | 76.8 | 83.5 | 91.0 | 87.6 | 93.0 | 96.0 | 94.3 |

表 2: 主要结果 (准确率)。SC表示自洽提示方法。加粗结果为最佳表现。

Table 3: Ablation study for different processes on MedQA. Anal: Analysis proposition, Summ: Report sum mari z ation, Cons: Collaborative consultation.

| Method | Accuracy(%) |

| Direct Prompting | 49.0 |

| CoTPrompting | 55.0 |

| w/MedAgents | |

| + Anal | 62.0(↑ 7.0) |

| +Anal&Summ | 65.0(↑ 10.0) |

| +Anal&Summ&Cons | 67.0(↑ 12.0) |

表 3: MedQA不同处理流程的消融研究。Anal: 分析命题,Summ: 报告总结,Cons: 协作会诊。

| 方法 | 准确率(%) |

|---|---|

| Direct Prompting | 49.0 |

| CoTPrompting | 55.0 |

| w/MedAgents | |

| + Anal | 62.0(↑ 7.0) |

| +Anal&Summ | 65.0(↑ 10.0) |

| +Anal&Summ&Cons | 67.0(↑ 12.0) |

Table 4: Comparison with open-source medical models.

| Method | MedQA | MedMCQA |

| MEDAGENTS(GPT-3.5) | 64.1 | 59.3 |

| MEDAGENTS (GPT-4) | 83.7 | 74.8 |

| MedAlpaca-7B | 55.2 | 45.8 |

| BioMedGPT-10B | 50.4 | 42.2 |

| BioMedLM-2.7B | 50.3 | - |

| BioBERT (large) | 36.7 | 37.1 |

| SciBERT (large) | 39.2 | |

| BERT (large) | 33.6 |

Table 6: Domain variation study. The results are based on GPT-3.5.

表 4: 开源医疗模型对比

| 方法 | MedQA | MedMCQA |

|---|---|---|

| MEDAGENTS(GPT-3.5) | 64.1 | 59.3 |

| MEDAGENTS (GPT-4) | 83.7 | 74.8 |

| MedAlpaca-7B | 55.2 | 45.8 |

| BioMedGPT-10B | 50.4 | 42.2 |

| BioMedLM-2.7B | 50.3 | - |

| BioBERT (large) | 36.7 | 37.1 |

| SciBERT (large) | 39.2 | |

| BERT (large) | 33.6 |

表 6: 领域差异研究 (基于 GPT-3.5)

Table 5: Optimal number of agents on MedQA, MedMCQA, PubMedQA, and MMLU.

| Dataset | MedQA MedMCQA PubMedQA MMLU | |||

| #Questionagents | 5 | 5 | 4 | 5 |

| #Option agents | 2 | 2 | 2 | 2 |

表 5: MedQA、MedMCQA、PubMedQA 和 MMLU 上的最优智能体数量

| 数据集 | MedQA | MedMCQA | PubMedQA | MMLU |

|---|---|---|---|---|

| 问题智能体数量 | 5 | 5 | 4 | 5 |

| 选项智能体数量 | 2 | 2 | 2 | 2 |

| Method | MedQA | MedMCQA |

| MEDAGENTS | 63.8 | 58.9 |

| Removemostrelevant | 60.5 | 55.4 |

| Removeleastrelevant | 66.2 | 61.5 |

| Remove randomly | 62.2 | 56.3 |

| 方法 | MedQA | MedMCQA |

|---|---|---|

| MEDAGENTS | 63.8 | 58.9 |

| Removemostrelevant | 60.5 | 55.4 |

| Removeleastrelevant | 66.2 | 61.5 |

| Remove randomly | 62.2 | 56.3 |

4.2 Comparison with Open-source Medical Models

4.2 与开源医疗模型的对比

We conduct a comprehensive comparison between our proposed MEDAGENTS framework with more baseline methods, including open-source domainadapted models such as MedAlpaca-7B (Han et al., 2023), BioMedGPT-10B (Luo et al., 2023), BioMedLM-2.7B (Bolton et al., 2024), BioBERT (large) (Lee et al., 2020), SciBERT (large) (Beltagy et al., 2019) and BERT (large). We leverage them in the early stages of our work for preliminary attempts. Results in Table 4 demonstrate that the open-source methods fell short of the baseline in Table 2, which leads us to focus on the more effective methods.

我们对提出的MEDAGENTS框架与更多基线方法进行了全面比较,包括开源领域适配模型如MedAlpaca-7B (Han et al., 2023)、BioMedGPT-10B (Luo et al., 2023)、BioMedLM-2.7B (Bolton et al., 2024)、BioBERT (large) (Lee et al., 2020)、SciBERT (large) (Beltagy et al., 2019)和BERT (large)。我们在工作初期利用这些模型进行了初步尝试。表4结果显示,开源方法未达到表2中的基线水平,这促使我们聚焦于更有效的方法。

Figure 3: Influence of the number of question and option agents on various datasets.

图 3: 问题和选项智能体数量对不同数据集的影响。

4.3 Number of agents

4.3 智能体数量

As our MEDAGENTS framework involves multiple agents that play certain roles to acquire the ultimate answer, we explore how the number of collaborating agents influences the overall performance. We vary the number of question and option agents while fixing other variables to observe the performance trends on the MedQAdataset. Figure 3 and Table 5 illustrate the corresponding trend and the optimal number of different agents. Our key observation lies in that the performance improves significantly with the introduction of any number of expert agents compared to our baseline, thus verifying the consistent contribution of multiple expert agents. We find that the optimal number of agents is relatively consistent across different datasets, pointing to its potential applicability to other datasets beyond those we test on.

由于我们的MEDAGENTS框架涉及多个扮演特定角色以获取最终答案的智能体,我们探究了协作智能体数量如何影响整体性能。我们在固定其他变量的情况下,调整问题和选项智能体的数量,观察MedQA数据集上的性能趋势。图3和表5展示了相应趋势及不同智能体的最优数量。关键发现表明:与基线相比,引入任意数量的专家智能体都能显著提升性能,由此验证了多专家智能体的持续贡献。我们发现最优智能体数量在不同数据集间相对一致,这表明该框架可能适用于我们测试范围之外的其他数据集。

4.4 Domain Variation Study

4.4 领域变化研究

In order to investigate the influence of the changes in agent numbers, we perform additional studies where we manipulate agent numbers by selectively eliminating the most and least relevant domain experts based on domain relevance. Due to the manual evaluation involved in identifying the relevance of agent domains, our additional analysis was conducted on a limited set of 20 samples. Results in Table 6 depict minor variance for different sizes of data with random removing, reinforcing the notion that large-scale experiment performance remains largely robust against the effect of domain changes.

为了研究智能体数量变化的影响,我们进行了额外实验:基于领域相关性选择性剔除最相关和最不相关的领域专家来调整智能体数量。由于识别智能体领域相关性涉及人工评估,我们的附加分析仅在20个样本的有限集合上进行。表6结果显示,随机剔除不同规模数据时仅产生微小差异,这进一步证明大规模实验性能对领域变化的鲁棒性。

| Method | MedQA | MedMCQA |

| MEDAGENTS | ||

| w/6differentdomains | 64.1 | 59.3 |

| w/6samedomains | 59.2 | 58.1 |

| w/5samedomains | 57.5 | 57.3 |

| w/4samedomains | 55.9 | 57.0 |

Table 7: Agent quantity study. The results are based on GPT-3.5.

| 方法 | MedQA | MedMCQA |

|---|---|---|

| MEDAGENTS | ||

| w/6不同领域 | 64.1 | 59.3 |

| w/6相同领域 | 59.2 | 58.1 |

| w/5相同领域 | 57.5 | 57.3 |

| w/4相同领域 | 55.9 | 57.0 |

表 7: AI智能体数量研究。结果基于 GPT-3.5。

4.5 Agent Quantity Study

4.5 AI智能体数量研究

To further explore the effect of agent quantity without changes in domain representation, we conduct experiments with $k$ $(k:=:6)$ identicaldomain agents, then with $k-1$ and $k-2$ , to observe performance shifts. The process of selecting these domains is automated via prompting, and our manual inspection confirms the high relevance and quality of the selected domains. The experiment is conducted on a dataset of 300 samples.

为了进一步探究在不改变领域表征的情况下智能体数量的影响,我们进行了以下实验:首先使用 $k$ $(k:=:6)$ 个相同领域的智能体,然后依次减少至 $k-1$ 和 $k-2$ 个,以观察性能变化。领域选择过程通过提示自动完成,经人工检查确认所选领域具有高度相关性和质量。实验在包含300个样本的数据集上进行。

4.6 Error Analysis

4.6 错误分析

Based on our results, we conduct a human evaluation to pinpoint the limitations and issues prevalent in our model. We distill these errors into four major categories: (i) Lack of Domain Knowledge: the model demonstrates an inadequate understanding of the specific medical knowledge necessary to provide an accurate response; (ii) Mis-retrieval of Domain Knowledge: the model has the necessary domain knowledge but fails to retrieve or apply it correctly in the given context; (iii) Consistency Errors: the model provides differing responses to the same statement. The inconsistency suggests confusion in the model’s understanding or application of the underlying knowledge; (iv) CoT Errors: the model may form and follow inaccurate rationales, leading to incorrect conclusions.

根据我们的结果,我们进行了人工评估以确定模型中普遍存在的局限性和问题。我们将这些错误归纳为四大类:(i) 领域知识缺失:模型对提供准确回答所需的特定医学知识理解不足;(ii) 领域知识检索错误:模型具备必要的领域知识,但未能在给定上下文中正确检索或应用;(iii) 一致性错误:模型对相同陈述给出不同回应,这种不一致性表明模型对基础知识的理解或应用存在混淆;(iv) 思维链(CoT)错误:模型可能形成并遵循不准确的推理路径,导致错误结论。

To illustrate the error examples intuitively, we select four typical samples from the four error categories, which can be shown in Figure 5: (i) The first error is due to a lack of domain knowledge regarding cutaneous larva migrans, whose symptoms are not purely hypo pigmented rash, as well as the fact that skin biopsy is not an appropriate test method, which results in the hallucination phenomenon. (ii) The second error is caused by mis-retrieval of domain knowledge, wherein the fact in green is not relevant to Valsalva maneuver. (iii) The third error is attributed to consistency errors, where the model incorrectly regards 20 mmHg within 6 minutes and 20 mmHg within 3 minutes as the same meaning. (iv) The fourth error is provoked by incorrect inference about the relevance of a fact and option A in CoT.

为直观展示错误示例,我们从四类错误中各选取一个典型样本,如图5所示:(i) 第一类错误源于缺乏皮肤幼虫移行症( cutaneous larva migrans )的领域知识,其症状并非单纯色素减退性皮疹,且皮肤活检并非合适的检测方法,导致出现幻觉现象。(ii) 第二类错误由领域知识检索错误引发,绿色标注的事实与Valsalva动作并无关联。(iii) 第三类错误归因于一致性错误,模型错误地将"6分钟内20 mmHg"与"3分钟内20 mmHg"视为相同含义。(iv) 第四类错误由思维链( CoT )中对事实与选项A相关性的错误推断导致。

Figure 4: Ratio of different categories in error cases.

图 4: 错误案例中不同类别的占比。

Furthermore, we analyze the percentage of different categories by randomly selecting 40 error cases in MedQA and MedMCQA datasets. As is shown in Figure 4, the majority $(77%)$ of the error examples are due to confusion about the domain knowledge (including the lack and mis-retrieval of domain knowledge), which illustrates that there still exists a portion of domain knowledge that is explicitly beyond the intrinsic knowledge of LLMs, leading to a bottleneck of our proposed method. As a result, our analysis sheds light on future directions to mitigate the aforementioned drawbacks and further strengthen the model’s proficiency and reliability.

此外,我们通过随机选取MedQA和MedMCQA数据集中的40个错误案例来分析不同类别的占比。如图4所示,绝大多数错误样本(77%)源于领域知识混淆(包括领域知识缺失和检索错误),这表明仍存在部分领域知识明显超出大语言模型的内在知识范畴,从而成为我们提出方法的瓶颈。因此,我们的分析为缓解上述缺陷、进一步提升模型熟练度和可靠性指明了未来研究方向。

4.7 Correctional Capabilities and Interpret ability

4.7 校正能力与可解释性

In our extensive examination of the MEDAGENTS framework, we discover the decent correctional capabilities of our framework. Please refer to Appendix B and Table B for an in-depth overview of instances where our approach successfully amends previous inaccuracies, steering the discussion towards more accurate outcomes. These corrections showcase the MEDAGENTS framework’s strength in collaborative synthesis; it distills and integrates diverse expert opinions into a cohesive and accurate conclusion. By interweaving a variety of perspectives, the collaborative consultation actively refines and rectifies initial analyses, thereby aligning the decision-making process closer to clinical accuracy. This iterative refinement serves as a practical demonstration of our model’s proficiency in rectifying errors, substantiating its interpret ability and accuracy in complex medical reasoning tasks.

在我们对MEDAGENTS框架的广泛测试中,发现该框架具备出色的纠错能力。具体案例请参阅附录B和表B,其中详细展示了我们的方法如何成功修正先前错误,引导讨论得出更准确结论。这些修正案例凸显了MEDAGENTS框架在协同合成方面的优势:它能提炼整合多方专家意见,形成连贯准确的结论。通过交织不同观点,协同会诊机制能持续优化初始分析,使决策过程更贴近临床准确性。这种迭代优化过程实证了该模型在复杂医疗推理任务中纠正错误的能力,验证了其可解释性和准确性。

5 Related Work

5 相关工作

5.1 LLMs in Medical Domains

5.1 医疗领域的大语言模型

Recent years have seen remarkable progress in the application of LLMs (Wu et al., 2023b; Singhal et al., $2023\mathrm{a}$ ; Yang et al., 2023), with a particularly notable impact on the medical field (Bao et al., 2023; Nori et al., 2023; Rosoł et al., 2023). Although LLMs have demonstrated their potential in distinct medical applications encompassing diagnostics (Singhal et al., 2023a; Han et al., 2023), genetics (Duong and Solomon, 2023; Jin et al., 2023), pharmacist (Liu et al., 2023), and medical evidence sum mari z ation (Tang et al., 2023b,a; Shaib et al., 2023), concerns persist when LLMs encounter clinical inquiries that demand intricate medical expertise and decent reasoning abilities (Umapathi et al., 2023; Singhal et al., 2023a). Thus, it is of crucial importance to further arm LLMs with enhanced clinical reasoning capabilities. Currently, there are two major lines of research on LLMs in medical domains, tool-augmented methods and instruction-tuning methods.

近年来,大语言模型(LLM)的应用取得了显著进展(Wu et al., 2023b; Singhal et al., $2023\mathrm{a}$; Yang et al., 2023),尤其在医疗领域产生了重大影响(Bao et al., 2023; Nori et al., 2023; Rosoł et al., 2023)。尽管大语言模型已在诊断(Singhal et al., 2023a; Han et al., 2023)、遗传学(Duong and Solomon, 2023; Jin et al., 2023)、药剂师(Liu et al., 2023)和医学证据总结(Tang et al., 2023b,a; Shaib et al., 2023)等不同医疗应用中展现出潜力,但当面对需要复杂医学专业知识和良好推理能力的临床问题时,仍存在诸多担忧(Umapathi et al., 2023; Singhal et al., 2023a)。因此,进一步提升大语言模型的临床推理能力至关重要。目前医疗领域大语言模型研究主要有两个方向:工具增强方法和指令调优方法。

For tool-augmented approaches, recent studies rely on external tools to acquire additional information for clinical reasoning. For instance, GeneGPT (Jin et al., 2023) guided LLMs to leverage the Web APIs of the National Center for Biotechnology Information (NCBI) to meet various biomedical information needs. Zakka et al. (2023) proposed Almanac, a framework that is augmented with retrieval capabilities for medical guidelines and treatment recommendations. Kang et al. (2023) introduced a method named KARD to improve small LMs on specific domain knowledge by finetuning small LMs on the rationales generated from LLMs and augmenting small LMs with external knowledge from a non-parametric memory.

对于工具增强方法,近期研究依赖外部工具获取临床推理所需的额外信息。例如,GeneGPT (Jin et al., 2023) 引导大语言模型利用美国国家生物技术信息中心(NCBI)的Web API来满足各类生物医学信息需求。Zakka等人(2023)提出了Almanac框架,该框架通过增强医学指南和治疗建议的检索能力实现功能扩展。Kang等人(2023)提出名为KARD的方法,通过在大语言模型生成的原理上微调小型语言模型,并用非参数记忆中的外部知识增强小型语言模型,从而提升特定领域知识的表现。

Current instruction tuning research predominantly leverages external clinical knowledge bases and self-prompted data to obtain instruction datasets (Tu et al., 2023; Zhang et al., $2023\mathrm{a}$ ; Singhal et al., 2023b; Tang et al., 2023c). These datasets are then employed to fine-tune LLMs within the medical field (Singhal et al., 2023b). Some of these models utilize a wide array of datasets collected from medical and biomedical literature, fine-tuned with specialized or openended instruction data (Li et al., 2023a; Singhal et al., 2023b). Others focus on specific areas such as traditional Chinese medicine or largescale, diverse medical instruction data to enhance their medical proficiency (Tan et al., 2023; Zhang et al., 2023b). Unlike these methods, our work emphasizes harnessing latent medical knowledge intrinsic to LLMs and improving reasoning in a training-free setting.

当前指令微调研究主要利用外部临床知识库和自提示数据来获取指令数据集 (Tu et al., 2023; Zhang et al., $2023\mathrm{a}$; Singhal et al., 2023b; Tang et al., 2023c)。这些数据集随后被用于医疗领域内大语言模型的微调 (Singhal et al., 2023b)。其中部分模型采用了从医学和生物医学文献中收集的多样化数据集,并通过专业或开放式指令数据进行微调 (Li et al., 2023a; Singhal et al., 2023b)。另一些研究则专注于特定领域,如中医药或大规模多样化医疗指令数据,以提升其医学专业能力 (Tan et al., 2023; Zhang et al., 2023b)。与这些方法不同,我们的工作重点在于挖掘大语言模型内在的潜在医学知识,并在无需训练的情况下提升推理能力。

Figure 5: Examples of error cases from MedQA and MedMCQA datasets in four major categories including lack of domain knowledge, mis-retrieval of domain knowledge, consistency errors, and CoT errors.

| Category | Example | Interpretation |

| Lack of Domain Knowledge | ...The hypopigmented rashis a classic symptom of cutaneous larva migrans. To confirm the diagnosis, askinbiopsywouldbethemostappropriatetest. | Aboutcutaneouslarvamigrans: 1. symptoms: not simply hypopigmented rash 2.diagnosticmethod: skin biopsy is not preferred |

| Mis-retrievalofDomainKnowledge | ...The physician instructs the patient to stand from a supine position while still wearing the stethoscope. Itisknownasthe"Valsalvamaneuver" During the Valsalvamaneuver,... | Thepatient isasked tomerelystand from a supine position. It does not involve theValsalvamaneuver. |

| Consistency Errors | ...Option Astatesthat thereis a decreaseinsystolic bloodpressureof20mmHgwithin6minutes.Thisis a correct statement,as a drop in systolicblood pressure of at least 20 mmHg within 3minutes of standingupisadiagnosticcriterionforpostural hypotension... | Correctstatement: 20mmHg within 3 minutes Option A: 20mmHgwithin6minutes |

| CoT Errors | Q:Deciduousteethdonotshowfluorosisbecause: ...(A)Placentaactsasabarrier:While it'struethat placentacanactasabarrierforcertainsubstances, this option is not relevant? to the question... | placentacanasabarrierforcertain substancessuchasfluoride,whichis partofthereasonwhydeciduousteeth do not show fluorosis... |

图 5: MedQA和MedMCQA数据集中四类典型错误案例示例,包括领域知识缺失、领域知识检索错误、一致性错误和思维链(CoT)错误。

| 类别 | 示例 | 解释 |

|---|---|---|

| 领域知识缺失 | ...色素减退疹是皮肤幼虫移行症的典型症状。为确诊,皮肤活检是最合适的检测方式。 | 关于皮肤幼虫移行症:1. 症状:并非单纯色素减退疹 2. 诊断方法:皮肤活检并非首选 |

| 领域知识检索错误 | ...医生指示患者在仍佩戴听诊器的情况下从仰卧位起身。这被称为"Valsalva动作"。在Valsalva动作过程中... | 患者仅被要求从仰卧位起身,并不涉及Valsalva动作。 |

| 一致性错误 | ...选项A称6分钟内收缩压下降20mmHg。这是正确陈述,因为站立3分钟内收缩压下降至少20mmHg是体位性低血压的诊断标准... | 正确陈述:3分钟内下降20mmHg 选项A:6分钟内下降20mmHg |

| 思维链(CoT)错误 | 问:乳牙不会出现氟斑牙的原因是:...(A)胎盘作为屏障:虽然胎盘确实能阻挡某些物质,但这个选项与问题无关... | 胎盘能阻挡氟化物等物质,这正是乳牙不会出现氟斑牙的部分原因... |

5.2 LLM-based Multi-agent Collaboration

5.2 基于大语言模型(LLM)的多智能体协作

The development of LLM-based agents has made significant progress in the community by endowing LLMs with the ability to perceive surroundings and make decisions individually (Wang et al., 2023a; Yao et al., 2022; Nakajima, 2023; Xie et al., 2023; Zhou et al., 2023). Beyond the initial single- agent mode, the multi-agent pattern has garnered increasing attention recently (Xi et al., 2023; Li et al., 2023d; Hong et al., 2023) which further explores the potential of LLM-based agents by learning from multi-turn feedback and cooperation. In essence, the key to LLM-based multi-agent collaboration is the simulation of human activities such as role-playing (Wang et al., 2023d; Hong et al., 2023) and communication (Wu et al., 2023a; Qian et al., 2023; Li et al., 2023b,c).

基于大语言模型(LLM)的AI智能体发展在学界取得了显著进展,通过赋予大语言模型感知环境和自主决策的能力(Wang et al., 2023a; Yao et al., 2022; Nakajima, 2023; Xie et al., 2023; Zhou et al., 2023)。除了最初的单智能体模式,多智能体协作模式近期受到越来越多关注(Xi et al., 2023; Li et al., 2023d; Hong et al., 2023),通过多轮反馈与合作进一步探索了大语言模型的潜力。本质上,基于大语言模型的多智能体协作关键在于对人类活动的模拟,例如角色扮演(Wang et al., 2023d; Hong et al., 2023)和沟通交流(Wu et al., 2023a; Qian et al., 2023; Li et al., 2023b,c)。

For instance, Solo Performance Prompting (SPP) (Wang et al., 2023d) managed to combine the strengths of multiple minds to improve performance by dynamically identifying and engaging multiple personas throughout tasksolving. Camel (Li et al., 2023b) leveraged roleplaying to enable chat agents to communicate with each other for task completion.

例如,独奏式提示 (SPP) (Wang et al., 2023d) 通过动态识别和调动多个人格角色来结合多方优势以提升任务解决性能。Camel (Li et al., 2023b) 利用角色扮演使聊天智能体能够相互协作完成任务。

Several recent works attempt to incorporate adversarial collaboration including debates (Du et al., 2023; Xiong et al., 2023) and negotiation (Fu et al., 2023) among multiple agents to further boost performance. Liang et al. (2023) proposed a multi-agent debate framework in which various agents put forward their statements in a tit for tat pattern. Inspired by the multi-disciplinary consultation mechanism which is common and effective in hospitals, we are thus inspired to apply this mechanism to medical reasoning tasks through LLM-based multi-agent collaboration.

近期多项研究尝试通过多智能体间的对抗性协作来进一步提升性能,包括辩论(Du等人, 2023; Xiong等人, 2023)和协商(Fu等人, 2023)。Liang等人(2023)提出了一种多智能体辩论框架,各智能体以针锋相对的模式提出论点。受医院常见且有效的多学科会诊机制启发,我们尝试将这种机制通过基于大语言模型的多智能体协作应用于医学推理任务。

6 Conclusion

6 结论

We present a novel medical QA framework that uses role-playing agents for multi-round discussions, offering greater reliability and clarity without prior training. Our method surpasses zeroshot baselines and matches few-shot baselines across nine datasets. Despite successes, humanbased evaluations of errors have highlighted areas for refinement. Our approach differs from most existing methods by eliminating the dependency on knowledge bases, instead uniquely integrating medical knowledge through role-playing agents.

我们提出了一种新颖的医疗问答框架,该框架利用角色扮演AI智能体进行多轮讨论,无需预先训练即可提供更高的可靠性和清晰度。我们的方法在九个数据集上超越了零样本基线,并与少样本基线表现相当。尽管取得了成功,但基于人工的错误评估仍指出了需要改进的领域。与大多数现有方法不同,我们的方法消除了对知识库的依赖,转而通过角色扮演AI智能体独特地整合医学知识。

Limitation

局限性

The proposed MEDAGENTS framework has shown promising results, but there are still a few points that could be addressed in future studies. First, the parameterized knowledge within LLMs may need updating over time, and thus, continuous efforts are required to keep the framework up-to-date. Second, integrating diverse models at different stages of our framework might be an intriguing exploration. Third, the framework may have limited applicability in low-resource languages. Adapting this framework to a wider range of low-resource languages could meet their specific medical needs to some extent.

提出的MEDAGENTS框架已显示出良好的效果,但仍有一些问题值得在未来研究中探讨。首先,大语言模型(LLM)中的参数化知识可能需要随时间更新,因此需要持续努力以保持框架的时效性。其次,在框架的不同阶段整合多样化模型可能是个值得探索的方向。第三,该框架在低资源语言中的适用性可能有限。将该框架适配