VideoMAE V2: Scaling Video Masked Auto encoders with Dual Masking

VideoMAE V2: 采用双重掩码策略扩展视频掩码自编码器

Abstract

摘要

Scale is the primary factor for building a powerful foundation model that could well generalize to a variety of downstream tasks. However, it is still challenging to train video foundation models with billions of parameters. This paper shows that video masked auto encoder (VideoMAE) is a scalable and general self-supervised pre-trainer for building video foundation models. We scale the VideoMAE in both model and data with a core design. Specifically, we present a dual masking strategy for efficient pre-training, with an encoder operating on a subset of video tokens and a decoder processing another subset of video tokens. Although VideoMAE is very efficient due to high masking ratio in encoder, masking decoder can still further reduce the overall computational cost. This enables the efficient pre-training of billion-level models in video. We also use a progressive training paradigm that involves an initial pre-training on a diverse multi-sourced unlabeled dataset, followed by a post-pre-training on a mixed labeled dataset. Finally, we successfully train a video ViT model with a billion parameters, which achieves a new state-of-the-art performance on the datasets of Kinetics $90.0%$ on K400 and $89.9%$ on K600) and Something-Something $(68.7%$ on V1 and $77.0%$ on V2). In addition, we extensively verify the pre-trained video ViT models on a variety of downstream tasks, demonstrating its effectiveness as a general video representation learner.

规模是构建能够良好泛化到各种下游任务的强大基础模型 (foundation model) 的主要因素。然而,训练具有数十亿参数的视频基础模型仍然具有挑战性。本文表明,视频掩码自编码器 (VideoMAE) 是一种可扩展且通用的自监督预训练方法,可用于构建视频基础模型。我们通过核心设计在模型和数据两方面对 VideoMAE 进行了扩展。具体而言,我们提出了一种双掩码策略以实现高效预训练:编码器处理视频 token 的一个子集,解码器处理另一个子集。尽管 VideoMAE 由于编码器的高掩码率已经非常高效,但对解码器进行掩码仍能进一步降低整体计算成本。这使得在视频领域高效预训练十亿级模型成为可能。我们还采用了一种渐进式训练范式:首先在多样化的多源无标注数据集上进行初始预训练,然后在混合标注数据集上进行后预训练。最终,我们成功训练了一个具有十亿参数的视频 ViT 模型,在 Kinetics (K400 上 90.0%,K600 上 89.9%) 和 Something-Something (V1 上 68.7%,V2 上 77.0%) 数据集上取得了新的最先进性能。此外,我们在多种下游任务上广泛验证了预训练视频 ViT 模型的效果,证明了其作为通用视频表示学习器的有效性。

1. Introduction

1. 引言

Effectively pre-training large foundation models [11] on huge amounts of data is becoming a successful paradigm in learning generic representations for multiple data modalities (e.g., language [12, 24], audio [20, 71], image [6, 35, 107], video [28, 90, 104], vision-language [42, 80]). These foundation models could be easily adapted to a wide range of downstream tasks through zero-shot recognition, linear probe, prompt tuning, or fine tuning. Compared with the specialized model to a single task, they exhibit excellent generalization capabilities and have become the main driving force for advancing many areas in AI.

在大量数据上有效预训练大规模基础模型 [11] 正成为学习多模态数据 (如语言 [12, 24]、音频 [20, 71]、图像 [6, 35, 107]、视频 [28, 90, 104]、视觉-语言 [42, 80]) 通用表征的成功范式。这些基础模型可通过零样本识别、线性探测、提示调优或微调轻松适配多种下游任务。与针对单一任务的专用模型相比,它们展现出卓越的泛化能力,并成为推动人工智能多领域发展的主要驱动力。

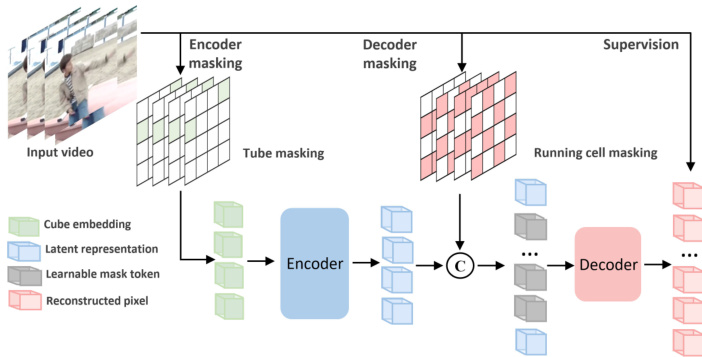

Figure 1. VideoMAE with dual masking. To improve the overall efficiency of computation and memory in video masked autoencoding, we propose to mask the decoder as well and devise the dual masking strategy. Like encoder, we also apply a masking map to the deocoder and simply reconstruct a subset of pixel cubes selected by the running cell masking. The final reconstruction loss only applies for the invisible tokens dropped by the encoder.

图 1: 采用双重掩码的VideoMAE。为提升视频掩码自编码在计算和内存方面的整体效率,我们提出对解码器也进行掩码处理,并设计了双重掩码策略。与编码器类似,我们同样对解码器施加掩码图,仅通过动态单元掩码选择部分像素块进行重建。最终的重建损失仅针对编码器丢弃的不可见Token计算。

For vision research, many efforts have been devoted to developing effective pre-trained models. Among them, Transformer [92] with masked auto encoding [24] is becoming a conceptually simple yet effective self-supervised visual learner (e.g., BEiT [6], SimMIM [107], MAE [35] for images, and MaskFeat [104], VideoMAE [90], MAEST [28] for videos). Meanwhile, based on the results in language models [12], scaling model capacity and data size is an important ingredients for its remarkable performance improvement. However, for pre-trained vision models, very few work [62] has tried to scale up this masked auto encoder pre-training to the billion-level models in image domain, partially due to the high data dimension and the high computational overhead. This issue is even more serious for scaling up video masked auto encoder pre-training owning to its extra time dimension and strong temporal variations.

在视觉研究领域,众多工作致力于开发高效的预训练模型。其中,结合掩码自动编码 (masked auto encoding) [24] 的Transformer [92] 逐渐成为一种概念简洁却高效的自监督视觉学习器(例如图像领域的BEiT [6]、SimMIM [107]、MAE [35],以及视频领域的MaskFeat [104]、VideoMAE [90]、MAEST [28])。同时,基于大语言模型的研究成果 [12],扩大模型容量与数据规模是实现显著性能提升的关键要素。然而,在视觉预训练模型中,仅有极少数工作 [62] 尝试将掩码自动编码预训练扩展到图像领域的十亿级规模,部分归因于高数据维度与高昂计算开销。这一问题在视频掩码自动编码预训练的扩展中更为严峻,因其额外的时间维度和强烈的时序变化特性。

Following the promising findings in languages and images, we aim to study the scaling property of video masked auto encoder (VideoMAE), and push its performance limit on a variety of video downstream tasks. We scale VideoMAE in both model and data. For model scaling, we try to instantiate the VideoMAE with vision transformer (ViT) [26] having billion-level parameters (e.g., ViTg [115]), and for data scaling, we hope to increase the pretraining dataset size to million-level to fully unleash the power of billion-level ViT model. However, to successfully train giant VideoMAE on such huge amounts of data and achieve impressive improvements on all considered downstream tasks, we still need to carefully address a few issues.

继语言和图像领域取得突破性进展后,我们致力于研究视频掩码自编码器(VideoMAE)的扩展特性,并探索其在各类视频下游任务中的性能极限。我们从模型和数据两个维度进行扩展:模型层面尝试采用参数规模达十亿级的视觉Transformer(ViT) [26] (如ViTg [115]) 实现VideoMAE;数据层面则将预训练数据集规模提升至百万级,以充分释放十亿级ViT模型的潜力。但要在海量数据上成功训练巨型VideoMAE,并在所有目标下游任务中取得显著提升,仍需审慎解决若干关键问题。

First, we find computational cost and memory consumption is the bottleneck of scaling VideoMAE on the current GPUs with limited memory. Although VideoMAE [90] has improved its pre-training efficiency and reduced its memory consumption by employing the efficient asymmetric encoder-decoder architecture [35] (i.e., dropping large numbers of tokens in encoder), it still fails to well support the billion-level video transformer pre-training. It takes more than two weeks to pre-train a ViT $\mathbf{g}$ model with VideoMAE on 64 A100 GPUs. To further improve its pre-training efficiency, we find video data redundancy can be used to not only mask a high portion of cubes in the encoder, but also drop some cubes in the decoder. This solution yields higher pre-training efficiency and creates a similarly challenging and meaningful self-supervised task. In practice, it will increase the pre-training batchsize and reduce the pre-training time by a third with almost no performance drop.

首先,我们发现计算成本和内存消耗是当前内存有限的GPU上扩展VideoMAE的瓶颈。尽管VideoMAE [90]通过采用高效的非对称编码器-解码器架构 [35](即在编码器中丢弃大量token)提升了预训练效率并降低了内存占用,但仍无法很好地支持十亿级视频Transformer的预训练。在64块A100 GPU上使用VideoMAE预训练一个ViT $\mathbf{g}$ 模型需要超过两周时间。为了进一步提升预训练效率,我们发现视频数据冗余不仅可以用于在编码器中掩码高比例的立方块,还能在解码器中丢弃部分立方块。该方案能实现更高的预训练效率,同时创建出具有类似挑战性和意义的自监督任务。实际应用中,这种方法可将预训练批次大小提升三分之一,且几乎不会造成性能下降。

Second, MAE is still demanding for large data [108] and billion-level video transformer tends to overfit on relatively small data. Unlike images, the existing public video dataset is much smaller. For example, there are only 0.24M videos in the Kinetics 400 dataset [43], while the ImageNet $22\mathrm{k}$ dataset [23] has $14.2\mathbf{M}$ images, let alone those publicly inaccessible image datasets such as JFT-3B [115]. Therefore, we need to come up with new ways to build a larger video pre-training dataset to well support the billion-level video transformer pre-training. We show that simply mixing the video datasets from multiple resources could produce an effective and diverse pre-training dataset for VideoMAE and improve its downstream performance of pre-trained models.

其次,MAE (Masked Autoencoder) 对数据量的要求仍然较高 [108],而十亿级参数的视频 Transformer 在相对较小的数据集上容易过拟合。与图像不同,现有的公开视频数据集规模要小得多。例如,Kinetics 400 数据集 [43] 仅包含 0.24M 视频,而 ImageNet $22\mathrm{k}$ 数据集 [23] 拥有 $14.2\mathbf{M}$ 图像,更不用说那些无法公开获取的图像数据集(如 JFT-3B [115])。因此,我们需要探索新方法来构建更大的视频预训练数据集,以更好地支持十亿级视频 Transformer 的预训练。实验表明,简单地混合来自多个来源的视频数据集可以为 VideoMAE 构建一个有效且多样化的预训练数据集,并提升预训练模型的下游性能。

Finally, it is still unknown how to adapt the billionlevel pre-trained model by VideoMAE. Masked autoencoding is expected to learn invariant features that provide a favored initialization for vision transformer fine-tuning [46]. However, directly fine-tuning billion-level pre-trained models on a relatively small video dataset (e.g., 0.24M videos) might be suboptimal, as the limited labeled samples might lead to over fitting issue in fine-tuning. In fact, in image domain, the intermediate fine-tuning technique [6, 62] has been employed to boost the performance of masked pretrained models. We show that collecting multiple labeled video datasets and building a supervised hybrid dataset can act as a bridge between the large-scale unsupervised dataset and the small-scale downstream target dataset. Progressive fine-tuning of the pre-trained models through this labeled hybrid dataset could contribute to higher performance in the downstream tasks.

最后,如何通过VideoMAE适应十亿级预训练模型仍属未知。掩码自编码方法有望学习到不变特征,为视觉Transformer (Vision Transformer) 微调提供有利的初始化条件 [46]。然而,直接在较小规模视频数据集(例如24万条视频)上微调十亿级预训练模型可能并非最优选择,因为有限的标注样本可能导致微调过程中的过拟合问题。事实上,在图像领域,已有研究采用中间微调技术 [6,62] 来提升掩码预训练模型的性能。我们证明:收集多个标注视频数据集并构建有监督混合数据集,能够作为大规模无监督数据集与小规模下游目标数据集之间的桥梁。通过该标注混合数据集对预训练模型进行渐进式微调,可提升下游任务性能。

Based on the above analysis, we present a simple and efficient way to scale VideoMAE to billion-level ViT models on a dataset containing million-level pre-training videos. Our technical improvement is to introduce the dual masking strategy for masked auto encoder pipeline as shown in Figure 1. In addition to the masking operation in encoder, we propose to mask decoder as well based on the data redundancy prior in video. With this dual-masked VideoMAE, we follow the intermediate fine-tuning in images [6, 62], and use a progressive training pipeline to perform the video masked pre-training on the million-level unlabeled video dataset and then post-pre-training on the labeled hybrid dataset. These core designs contribute to an efficient billion-level video auto encoding framework, termed as VideoMAE V2. Within this framework, we successfully train the first video transformer model with one billion parameters, which attains a new state-of-the-art performance on a variety of downstream tasks, including action recognition [31, 43, 48, 82], spatial action detection [32, 51], and temporal action detection [41, 61].

基于上述分析,我们提出了一种简单高效的方法,将VideoMAE扩展到包含百万级预训练视频数据集上的十亿级ViT模型。我们的技术改进是在掩码自编码器流程中引入双重掩码策略,如图1所示。除了编码器中的掩码操作外,我们还基于视频数据冗余先验,提出对解码器也进行掩码处理。通过这种双重掩码的VideoMAE,我们遵循图像领域的中间微调方法[6, 62],采用渐进式训练流程:先在百万级无标注视频数据集上进行视频掩码预训练,随后在标注混合数据集上进行后预训练。这些核心设计构成了高效的十亿级视频自编码框架VideoMAE V2。在该框架下,我们成功训练出首个具有十亿参数的视频Transformer模型,在动作识别[31, 43, 48, 82]、空间动作检测[32, 51]和时间动作检测[41, 61]等多种下游任务中取得了新的最优性能。

2. Related Work

2. 相关工作

Vision foundation models. The term of foundation model was invented in [11]. It refers to those powerful models that are pre-trained on broad data and can be adapted to a wide range of downstream tasks. Early research works in vision focused on pre-training CNNs [49] or Transformers [92] on large-scale labeled datasets such as ImageNet-1k [37, 47], ImageNet-22k [63,101], and JFT [115]. Some recent works tried to perform unsupervised pre-training using contrastive learning [17, 36, 105] or siamese learning [18]. Meanwhile, following the success in NLP [12, 24], masked autoencoding was also introduced to pre-train image foundation models in a self-supervised manner, such as BEiT [6], SimMIM [107], and MAE [35]. Some vision-language pretrained models, such as CLIP [80] and ALIGN [42], were proposed by learning from the alignment between images and text on noisy web-scale samples. They have shown excellent performance on zero-shot transfer.

视觉基础模型 (Vision foundation models)。基础模型这一术语源于[11],指的是那些经过广泛数据预训练、能够适应多种下游任务的强大模型。早期视觉领域的研究主要集中于在ImageNet-1k[37,47]、ImageNet-22k[63,101]和JFT[115]等大规模标注数据集上预训练CNN[49]或Transformer[92]。近期一些研究尝试通过对比学习[17,36,105]或孪生学习[18]进行无监督预训练。与此同时,受自然语言处理领域成功案例[12,24]的启发,掩码自编码技术也被引入到图像基础模型的自我监督预训练中,例如BEiT[6]、SimMIM[107]和MAE[35]。一些视觉语言预训练模型如CLIP[80]和ALIGN[42]通过从网络规模噪声数据中学习图像与文本的对齐关系,在零样本迁移任务中展现出卓越性能。

Concerning video foundation models, their progress lags behind images, partially due to the relatively smaller video datasets and higher complexity of video modeling. Since the introduction of Kinetics benchmarks [43], some supervised pre-trained models on it have been transferred to small-scale datasets for action recognition, such as 2D CNNs (TSN [99], TSM [58], TANet [66], TDN [98]), 3D CNNs (I3D [13], $\mathrm{R}(2+1)\mathrm{D}$ [91], ARTNet [97], SlowFast [29]), Transformer (TimeS former [8], Video Swin [65],

关于视频基础模型,其进展落后于图像,部分原因是视频数据集相对较小且视频建模复杂度更高。自Kinetics基准[43]推出以来,一些在其上监督预训练的模型已被迁移至小规模动作识别数据集,例如2D CNN(TSN[99]、TSM[58]、TANet[66]、TDN[98])、3D CNN(I3D[13]、$\mathrm{R}(2+1)\mathrm{D}$[91]、ARTNet[97]、SlowFast[29])、Transformer(TimeSformer[8]、Video Swin[65])。

UniFormer [52]). Recently, some self-supervised video models are developed based on masked auto encoding such as BEVT [100], MaskedFeat [104], VideoMAE [90], and MAE-ST [28] by directly extending these image masked modeling frameworks. However, these video foundation models often limit in their pre-training data size and model scale. More importantly, their downstream tasks have a narrow focus on action recognition, without consideration of other video tasks such as temporal action localization.

UniFormer [52])。最近,一些自监督视频模型基于掩码自动编码技术被开发出来,例如BEVT [100]、MaskedFeat [104]、VideoMAE [90]和MAE-ST [28],这些模型直接扩展了图像掩码建模框架。然而,这些视频基础模型通常受限于预训练数据规模和模型体量。更重要的是,它们的下游任务仅聚焦于动作识别,未考虑其他视频任务(如时序动作定位)。

Masked visual modeling. Early works treated masking in denoised auto encoders [93] or context inpainting [74]. Inspired by the great success in NLP [12, 24], iGPT [15] operated pixel sequences for prediction and ViT [26] investigated the masked token prediction for self-supervised pretraining. Recently, there has been a surge of research into Transformer-based architectures for masked visual modeling [6, 28, 35, 90, 100, 104, 107]. BEiT [6], BEVT [100], and VIMPAC [86] learned visual representations by predicting discrete tokens. MAE [35] and SimMIM [107] directly performed pixel masking and reconstruction for pre-training without discrete token representation. MaskFeat [104] reconstructed the HOG [22] features of masked tokens to perform self-supervised pre-training in videos. VideoMAE [90] and MAE-ST [28] extended MAE [35] to video domain for self-supervised video pre-training and achieved impressive performance on action recognition.

掩码视觉建模。早期研究将掩码处理应用于去噪自编码器 [93] 或上下文修复 [74]。受 NLP [12, 24] 领域重大成功的启发,iGPT [15] 对像素序列进行预测操作,ViT [26] 则探索了掩码 token 预测用于自监督预训练。近期,基于 Transformer 的掩码视觉建模架构研究激增 [6, 28, 35, 90, 100, 104, 107]。BEiT [6]、BEVT [100] 和 VIMPAC [86] 通过预测离散 token 来学习视觉表征。MAE [35] 和 SimMIM [107] 直接进行像素掩码与重建的预训练,无需离散 token 表征。MaskFeat [104] 通过重建掩码 token 的 HOG [22] 特征实现视频自监督预训练。VideoMAE [90] 和 MAE-ST [28] 将 MAE [35] 扩展至视频领域进行自监督视频预训练,在动作识别任务中取得显著性能。

Vision model scaling. Many works tried to scale up CNNs to improve recognition performance [37, 81, 84]. EfficientNet [88] presented a scaling strategy to balance depth, width, and resolution for CNN design. Several works [40, 45, 69] tried to train much larger CNNs to obtain excellent performance by enlarging model capacities and training data size. Recently, a few works [62, 115] tried to scale up the vision transformer to the billion-level models with large-scale supervised pre-training on JFT-3B [115] or selfsupervised pre-training on IN-22K-ext-70M [62]. VideoMAE [90] and MAE-ST [28] have trained the huge video transformer with millions of parameters. MAE-ST [28] also tried the MAE pre-training on 1M IG-uncurated clips but failed to obtain better performance on Kinetics than smallscale pre-training. We are the first work to train video transformer with billion-level parameters.

视觉模型扩展。许多研究尝试通过扩大卷积神经网络(CNN)的规模来提升识别性能[37,81,84]。EfficientNet[88]提出了一种平衡CNN深度、宽度和分辨率的扩展策略。部分工作[40,45,69]尝试通过增加模型容量和训练数据规模来训练更大的CNN以获得优异性能。近期,若干研究[62,115]尝试将视觉Transformer扩展到十亿参数级别,分别在JFT-3B[115]上进行大规模监督预训练或在IN-22K-ext-70M[62]上进行自监督预训练。VideoMAE[90]和MAE-ST[28]训练了具有数百万参数的巨型视频Transformer。MAE-ST[28]还尝试在100万条IG未剪辑视频片段上进行MAE预训练,但在Kinetics数据集上的表现未能超越小规模预训练。我们是首个成功训练十亿级参数视频Transformer的研究团队。

3. VideoMAE V2

3. VideoMAE V2

In this section, we first revisit VideoMAE and analyze its property. Then we present the dual masking strategy for the efficient training of VideoMAE. Finally, we present the scaling details of VideoMAE for large-scale pre-training.

在本节中,我们首先回顾VideoMAE并分析其特性,随后提出用于高效训练VideoMAE的双重掩码策略,最后阐述VideoMAE在大规模预训练中的扩展细节。

3.1. VideoMAE Revisited

3.1. VideoMAE 再探

We scale the video masked auto encoder (VideoMAE) due to its simplicity and high performance. VideoMAE processes the down sampled frames $\mathbf{I}\in\mathbb{R}^{C\times T\times H\times W}$ from a clip with stride $\tau$ , and uses the cube embedding $\Phi_{e m b}$ to transform the frames into a sequence of tokens. Then, it designs a customized tube masking strategy to drop tokens with an extremely high ratio $\rho$ (e.g., $90%$ ). Finally, the unmasked tokens are fed into a video auto encoder $\left(\Phi_{e n c},\Phi_{d e c}\right)$ for reconstructing the masked pixels. Specifically, VideoMAE is composed of three core components: cube embedding, encoder, and decoder. First, cube embedding encodes the local s patio temporal features and builds the token list: $\mathbf{T}=\Phi_{e m b}(\mathbf{I})$ , where $\mathbf{T}={T_{i}}_{i=1}^{N}$ is the token sequence, $T_{i}$ is the token produced by the embedding layer and then added with positional embedding, and $N$ is the total token number. Then the encoder simply operates on the unmasked tokens $\mathbf{T}^{u}$ with a vanilla ViT of joint space-time attention: ${\bf Z}=\Phi_{e n c}({\bf T}^{u})$ , where $\mathbf{T}^{u}$ represents the unmasked visible tokens Tu = {Ti}i (1 M(ρ)), $\mathbb{M}(\rho)$ is the masking map, and its token length $N^{e}$ is equal to $0.1N$ . Finally, the decoder takes the combined tokens $\mathbf{Z}^{c}$ as inputs and performs reconstruction with another ViT: $\hat{\mathbf{I}}=\Phi_{d e c}(\mathbf{Z}^{c})$ , where the combined tokens $\mathbf{Z}^{c}$ is the concatenated sequence of encoded token features $\mathbf{Z}$ and the learnable masked tokens [MASK] (with position embeddings), and its token length $N^{d}$ is equal to the original token number $N$ . The loss function is the mean squared error (MSE) loss between the normalized masked pixels and the reconstructed pixels: $\begin{array}{r}{\ell=\frac{1}{\rho N}\sum_{i\in\mathbb{M}(\rho)}|{\bf I}_{i}-\hat{\hat{\bf I}}_{i}|^{2}}\end{array}$ .

我们选择扩展视频掩码自编码器 (VideoMAE) 因其简洁高效。VideoMAE 以步长 $\tau$ 处理视频片段降采样后的帧 $\mathbf{I}\in\mathbb{R}^{C\times T\times H\times W}$,并通过立方体嵌入 $\Phi_{e m b}$ 将帧转换为token序列。随后采用定制化的管状掩码策略,以极高比例 $\rho$ (例如 $90%$) 丢弃token。最终,未掩码的token被输入视频自编码器 $\left(\Phi_{e n c},\Phi_{d e c}\right)$ 以重建掩码像素。具体而言,VideoMAE 包含三个核心组件:立方体嵌入、编码器和解码器。

首先,立方体嵌入编码局部时空特征并构建token列表:$\mathbf{T}=\Phi_{e m b}(\mathbf{I})$,其中 $\mathbf{T}={T_{i}}_{i=1}^{N}$ 是token序列,$T_{i}$ 由嵌入层生成后加入位置嵌入,$N$ 为总token数。编码器仅对未掩码token $\mathbf{T}^{u}$ 执行联合时空注意力的标准 ViT 操作:${\bf Z}=\Phi_{e n c}({\bf T}^{u})$,其中 $\mathbf{T}^{u}$ 表示未掩码可见token Tu = {Ti}i (1 M(ρ)),$\mathbb{M}(\rho)$ 为掩码映射图,其token长度 $N^{e}$ 等于 $0.1N$。

解码器以拼接token $\mathbf{Z}^{c}$ 为输入,通过另一 ViT 进行重建:$\hat{\mathbf{I}}=\Phi_{d e c}(\mathbf{Z}^{c})$。拼接token $\mathbf{Z}^{c}$ 由编码token特征 $\mathbf{Z}$ 与可学习的掩码token [MASK] (含位置嵌入) 连接而成,其token长度 $N^{d}$ 等于原始token数 $N$。损失函数为归一化掩码像素与重建像素间的均方误差 (MSE):$\begin{array}{r}{\ell=\frac{1}{\rho N}\sum_{i\in\mathbb{M}(\rho)}|{\bf I}_{i}-\hat{\hat{\bf I}}_{i}|^{2}}\end{array}$。

Computational cost analysis. High efficiency is an important characteristic of masked auto encoder. VideoMAE employs an asymmetric encoder-decoder architecture [35], where token sequence length of encoder is only one-tenth of decoder (i.e. $N^{e}=0.1N^{d} $ ). This smaller encoder input contributes to more efficient pre-training pipeline compared with other masked auto encoding frameworks [6,107]. However, when scaling VideoMAE in both depth and width (channels) to a billion-level model, the overall computation and memory consumption is still the bottleneck for the current available GPUs with limited memory. Therefore, the current asymmetric encoder-decoder architecture needs to be further improved for scaling VideoMAE.

计算成本分析。高效是掩码自编码器 (masked auto encoder) 的重要特性。VideoMAE 采用非对称编码器-解码器架构 [35],其编码器的 token 序列长度仅为解码器的十分之一 (即 $N^{e}=0.1N^{d}$)。与其他掩码自编码框架 [6,107] 相比,这种更小的编码器输入有助于实现更高效的预训练流程。然而,当将 VideoMAE 的深度和宽度 (通道数) 扩展到十亿级模型时,整体计算量和内存消耗仍是当前内存有限的 GPU 的瓶颈。因此,当前的非对称编码器-解码器架构需要进一步改进以实现 VideoMAE 的规模化。

3.2. Dual Masking for VideoMAE

3.2. 视频MAE的双重掩码机制

To better enable large-scale VideoMAE pre-training under a limited computational budget, we present a dual masking scheme to further improve its pre-training efficiency. As shown in Figure 1, our dual masking scheme generates two masking maps ${\mathbb M}{e}={\mathcal M}{e}(\rho^{e})$ and $\mathbb{M}{d}=\mathcal{M}{d}(\rho^{d})$ with two different masking generation strategies and masking ratios. These two masking maps $\mathbb{M}{e}$ and $\mathbb{M}{d}$ are for encoder and decoder, respectively. Like VideoMAE, our encoder operates on the partial and visible tokens under the encoder mask ${\mathbb M}{e}$ , and maps the observed tokens into latent feature representations. But unlike VideoMAE, our decoder takes inputs from the encoder visible tokens and part of the remaining tokens visible under the decoder mask $\mathbb{M}_{d}$ . In this sense, we use the decoder mask to reduce the decoder input length for high efficiency yet attain similar information to the full reconstruction. Our decoder maps the latent features and the remaining incomplete tokens into the pixel values at the corresponding locations. The supervision only applies to the decoder output tokens invisible to the encoder. We will detail the design next.

为了在有限计算预算下更好地实现大规模VideoMAE预训练,我们提出了一种双重掩码方案以进一步提升其预训练效率。如图1所示,我们的双重掩码方案通过两种不同的掩码生成策略和掩码比例,生成两个掩码图${\mathbb M}{e}={\mathcal M}{e}(\rho^{e})$和$\mathbb{M}{d}=\mathcal{M}{d}(\rho^{d})$。这两个掩码图$\mathbb{M}{e}$和$\mathbb{M}{d}$分别用于编码器和解码器。与VideoMAE类似,我们的编码器在编码器掩码${\mathbb M}{e}$下对部分可见的Token进行操作,并将观察到的Token映射为潜在特征表示。但与VideoMAE不同,我们的解码器输入来自编码器可见Token和解码器掩码$\mathbb{M}_{d}$下可见的部分剩余Token。通过这种方式,我们利用解码器掩码缩短解码器输入长度以提高效率,同时获得与完整重建相似的信息。解码器将潜在特征与剩余不完整Token映射至对应位置的像素值,监督信号仅作用于编码器不可见的解码器输出Token。具体设计将在下文详述。

Masking decoder. As analyzed in Section 3.1, the decoder of VideoMAE is still inefficient as it needs to process all the cubes in videos. Thus, we further explore the prior of data redundancy in the decoder and propose the strategy of masking decoder. Our idea is mainly inspired by the recent efficient action recognition transformer [79], which only uses a small portion of tokens to achieve similar performance. It implies data redundancy exists in inference, which applies for our reconstruction target as well.

掩码解码器 (Masking decoder)。如第3.1节所述,VideoMAE的解码器仍需处理视频中所有立方体,效率较低。因此我们进一步探索解码器的数据冗余先验,提出掩码解码器策略。该思路主要受近期高效动作识别Transformer [79] 的启发,该研究仅使用少量token即可达到相近性能。这表明推理过程中存在数据冗余,这一现象同样适用于我们的重建任务。

Our dual masking strategy is composed of encoder masking $\mathcal{M}{e}$ and decoder masking $\mathcal{M}{d}$ . The encoder masking is the random tube masking with an extremely high ratio, which is the same as the original VideoMAE. For decoder masking, our objective is opposite to encoder masking. The tube masking in encoder tries to relieve the issue of “information leakage” caused by temporal correlation. In contrast, in decoder masking, we need to encourage “information complement” to ensure minimal information loss in this partial reconstruction. In this sense, we need to select as diverse cubes as possible to cover the whole video information. In the implementation, we compare different mask- ing strategies and eventually choose the running cell masking [79]. With this decoder masking map $\mathbb{M}_{d}$ 1, we reduce the decoder input length to improve efficiency.

我们的双重掩码策略由编码器掩码 $\mathcal{M}{e}$ 和解码器掩码 $\mathcal{M}{d}$ 组成。编码器掩码采用与原始VideoMAE相同的极高比例随机管状掩码。对于解码器掩码,我们的目标与编码器掩码相反:编码器中的管状掩码旨在缓解时间相关性导致的"信息泄漏"问题,而解码器掩码则需要促进"信息互补"以确保部分重建时的信息损失最小化。为此,我们需要选择尽可能多样化的立方体来覆盖整个视频信息。在实现中,我们比较了不同掩码策略,最终选择了运行单元掩码[79]。通过这种解码器掩码映射 $\mathbb{M}_{d}$ 1,我们缩短了解码器输入长度以提高效率。

VideoMAE with dual masking. Our improved VideoMAE shares the same cube embedding and encoder with the original VideoMAE as described in Section 3.1. For decoder, it processes the combined tokens of encoder output and part the remaining visible tokens under the decoder mask $\mathbb{M}_{d}$ . Specifically, the combined sequence is defined as:

采用双重掩码的VideoMAE。我们改进的VideoMAE与原始VideoMAE共享相同的立方体嵌入和编码器结构(如第3.1节所述)。其解码器会处理编码器输出token与解码器掩码$\mathbb{M}_{d}$下部分剩余可见token的拼接序列,具体定义为:

$$

\mathbf{Z}^{c}=\mathbf{Z}\cup{\mathbf{M}{i}}{i\in\mathbb{M}_{d}},

$$

$$

\mathbf{Z}^{c}=\mathbf{Z}\cup{\mathbf{M}{i}}{i\in\mathbb{M}_{d}},

$$

where $\mathbf{Z}$ is the latent representation from encoder, $\mathbf{M}_{i}$ is the learnable masking token with corresponding positional embedding. With this combined token sequence $\mathbf{Z}^{c}$ , our decoder only reconstructs the visible tokens under the decoder mask. The final MSE loss is computed between the normalized masked pixels I and the reconstructed ones I over the decoder visible cubes:

其中 $\mathbf{Z}$ 是编码器的潜在表示,$\mathbf{M}_{i}$ 是可学习的掩码 token 及其对应的位置嵌入。通过这个组合 token 序列 $\mathbf{Z}^{c}$,我们的解码器仅重建解码器掩码下的可见 token。最终的均方误差 (MSE) 损失是在归一化掩码像素 I 和解码器可见立方体上的重建像素 I 之间计算的:

$$

\ell=\frac{1}{(1-\rho^{d})N}\sum_{i\in\mathbb{M}{d}\cap\mathbb{M}{e}}|{\bf I}{i}-\hat{{\bf I}}_{i}|^{2}.

$$

$$

\ell=\frac{1}{(1-\rho^{d})N}\sum_{i\in\mathbb{M}{d}\cap\mathbb{M}{e}}|{\bf I}{i}-\hat{{\bf I}}_{i}|^{2}.

$$

3.3. Scaling VideoMAE

3.3. 扩展 VideoMAE

Model scaling. Model scale is the primary force in obtaining excellent performance. Following the original VideoMAE, we use the vanilla ViT [26] as the backbone due to its simplicity. According to the scaling law of ViT [115], we build VideoMAE encoder with backbones of different capacities ranging from ViT-B, ViT-L, ViT-H, to ViT-g. Note that $W i T{-}g$ is a large model with billion-level parameters and has never been explored in video domain. Its performance with masked auto encoding for video representation learning is still unknown to the community. More details on these backbone designs could be referred to [115]. For decoder design, we use relatively shallow and lightweight backbones [35, 90] with fewer layers and channels. In addition, we apply our dual masking strategy to further reduce computational cost and memory consumption. More details on the decoder design could be found in the appendix.

模型缩放。模型规模是获得卓越性能的主要驱动力。遵循原始VideoMAE的设计,我们采用简洁的ViT [26]作为主干网络。根据ViT的缩放定律 [115],我们构建了具有不同容量主干的VideoMAE编码器,包括ViT-B、ViT-L、ViT-H和ViT-g。值得注意的是,$W i T{-}g$是一个拥有十亿级参数的大型模型,此前从未在视频领域进行过探索。其基于掩码自编码的视频表征学习性能对学术界仍是未知数。更多关于这些主干设计的细节可参考 [115]。在解码器设计方面,我们采用层数和通道数较少的轻量级浅层主干 [35, 90]。此外,我们应用双重掩码策略进一步降低计算成本和内存消耗。解码器设计的更多细节可在附录中找到。

Data scaling. Data scale is another important factor that influences the performance of VideoMAE pre-training. The original VideoMAE simply pre-train the ViT models on relatively small-scale datasets by emphasizing its data efficiency. In addition, they require to pre-train the individual models specific to each dataset (i.e., Something-Something and Kinetics datasets have different pre-trained models). In contrast, we aim to learn a universal pre-trained model that could be transferred to different downstream tasks. To this end, we try to increase the pre-training video samples to a million-level size and aim to understand the data scaling property for VideoMAE pre-training. Data diversity is important for learning general video representations. Therefore, we build an unlabeled hybrid video dataset covering videos from General Webs, Youtube, Instagram, Movies, and Manual Recordings. We collect videos from the public datasets of Kinetics, Something-Something, AVA, WebVid, and uncurated videos crawled from Instagram. In total, there are 1.35M clips in our unlabeled mixed dataset. Note that pre-training video transformer on a such large-scale and diverse dataset is rare in previous works and it still remains unknown the influence of data scale and diversity on VideoMAE pre-training. More details on our dataset could be found in the appendix.

数据规模。数据规模是影响VideoMAE预训练性能的另一关键因素。原始VideoMAE通过在相对小规模数据集上预训练ViT模型来强调其数据效率,且需为每个特定数据集(如Something-Something与Kinetics数据集)单独预训练不同模型。与之相反,我们的目标是学习一个可迁移至不同下游任务的通用预训练模型。为此,我们将预训练视频样本量提升至百万级别,旨在探究VideoMAE预训练中的数据规模特性。数据多样性对学习通用视频表征至关重要,因此我们构建了一个包含通用网页视频、YouTube、Instagram、电影及人工录制视频的无标注混合数据集,收集了来自Kinetics、Something-Something、AVA、WebVid等公开数据集及Instagram爬取的非精选视频,最终形成包含135万段视频的无标注混合数据集。需注意的是,此前工作中鲜有在此类大规模多样化数据集上预训练视频Transformer的研究,数据规模与多样性对VideoMAE预训练的影响仍属未知领域。更多数据集细节见附录。

Progressive training. Transferring scheme is an important step to adapt the pre-trained large video transformers to the downstream tasks. The masked auto encoder pretraining is expected to learn some invariant features and can provide a favored initialization for vision transformer finetuning [46]. The original VideoMAE directly fine-tunes the pre-trained models on the target dataset only with its corresponding supervision. This direct adapting strategy might fail to fully unleash the power of large pre-trained video transformer due to limited supervision. Instead, in order to relieve the over fitting risk, we argue that we should leverage the semantic supervision signals from multiple sources in multiple stages to gradually adapt the pre-trained video transformers to downstream tasks. Accordingly, following the intermediate fine-tuning in images [6, 62], we devise a progressive training pipeline for the whole training process of billion-level video transformers. First, we conduct unsupervised pre-training with masked auto encoding on the unlabeled hybrid video dataset. Then, we build a labeled hybrid dataset by collecting and aligning multiple existing supervised datasets with labels. We perform the supervised post-pre-training stage on this labeled hybrid dataset to incorporate the semantics from multiple sources into the previous pre-trained video transformers. Finally, we perform the specific fine-tuning stage on the target dataset to transfer the general semantics to the task-centric knowledge.

渐进式训练。迁移方案是将预训练的大型视频Transformer适配到下游任务的重要步骤。掩码自编码器预训练旨在学习某些不变特征,可为视觉Transformer微调提供有利的初始化条件 [46]。原始VideoMAE仅使用目标数据集对应的监督信号直接微调预训练模型。由于监督信号有限,这种直接适配策略可能无法充分释放大型预训练视频Transformer的潜力。相反,为降低过拟合风险,我们认为应分阶段利用多来源的语义监督信号,逐步将预训练视频Transformer适配到下游任务。据此,遵循图像领域的中间微调方法 [6, 62],我们为十亿级视频Transformer的完整训练流程设计了渐进式训练管线:首先在未标注的混合视频数据集上进行掩码自编码的无监督预训练;然后通过收集和对齐多个现有标注数据集构建带标注的混合数据集,在此数据集上进行监督式后预训练阶段,将多来源语义融入预训练视频Transformer;最后在目标数据集上进行特定微调阶段,将通用语义转化为任务核心知识。

Based on the above designs of dual masking, data scaling, and progressive training, we implement a simple and efficient masked auto encoding framework with a billionlevel ViT backbone, termed as VideoMAE V2. With this new framework, we successfully train the first billion-level video transformer and push the vanilla ViT performance limit on a variety of video downstream tasks, including video action recognition, action detection, and temporal action detection.

基于上述双重掩码、数据缩放和渐进式训练的设计,我们实现了一个简单高效的掩码自编码框架,采用十亿级ViT主干网络,称为VideoMAE V2。通过这一新框架,我们成功训练出首个十亿级视频Transformer,并在多种视频下游任务(包括视频动作识别、动作检测和时间动作检测)上突破了原始ViT的性能极限。

4. Experiments

4. 实验

4.1. Implementation and Downstream Tasks

4.1. 实现与下游任务

Model. We conduct investigations on the VideoMAE V2 by scaling its model capacity and pre-training data size. We scale the backbone network from the existing huge ViT model (ViT-H) to the giant ViT model (ViT-g) [115]. The ViT $\mathbf{g}$ has a smaller patch size (14), more encoder blocks (40), a higher dimension of cube embedding and self-attention (1408), and more attention heads (16). It has 1,011M parameters. More details could be referred to [115].

模型。我们对VideoMAE V2进行了模型容量和预训练数据规模的扩展研究。将主干网络从现有的巨型ViT模型(ViT-H)扩展到超巨型ViT模型(ViT-g) [115]。ViT $\mathbf{g}$采用更小的图像块尺寸(14)、更多的编码器块(40)、更高的立方体嵌入维度与自注意力维度(1408),以及更多的注意力头(16)。该模型参数量达到10.11亿。更多细节可参考[115]。

Data. To well support the billion-level ViT model pretraining, we build two large-scale video datasets for our proposed progressive training. For self-supervised pre-training of VideoMAE V2, we build a million-level unlabeled video dataset by collecting clips from multiple resources such as Movie, Youtube, Instagram, General Webs, and manual recordings from scripts, and the dataset is termed as Unlabeled Hybrid. Specifically, our dataset is built by simply selecting videos from the public available datasets of Kinetics [43], Something-Something [31], AVA [32], WebVid2M [5], and our own crawled Instagram dataset. In total, there are around 1.35M clips in our mixed dataset and this is the largest dataset ever used for video masked autoencoding. For supervised post-pre-training, we collect the larger video dataset with human annotations, termed as Labe led Hybrid. Following [53], we take the union of different versions of Kinetics datasets (K400, K600, K700) by aligning their label semantics and removing the duplicate videos with the validation sets. This labeled hybrid dataset has 710 categories and 0.66M clips. We pre-train our video transformer model on these two datasets and then transfer them to the downstream tasks as detailed next. More details on these pre-training datasets could be found in the appendix.

数据。为了充分支持十亿级ViT模型的预训练,我们为提出的渐进式训练构建了两个大规模视频数据集。针对VideoMAE V2的自监督预训练,我们通过从电影、YouTube、Instagram、通用网站及剧本手动录制等多渠道收集片段,构建了百万级无标注视频数据集Unlabeled Hybrid。具体而言,该数据集通过简单筛选公开可用的Kinetics [43]、Something-Something [31]、AVA [32]、WebVid2M [5]数据集及我们自行爬取的Instagram数据构建而成。混合数据集共包含约135万条视频片段,是当前视频掩码自编码领域使用的最大规模数据集。

对于监督式后预训练,我们收集了带人工标注的更大规模视频数据集Labe led Hybrid。参照[53]的方法,我们通过对齐标签语义并剔除验证集中的重复视频,整合了不同版本的Kinetics数据集(K400/K600/K700)。该标注混合数据集涵盖710个类别和66万条视频片段。我们在这两个数据集上预训练视频Transformer模型,随后迁移至下游任务(详见后续说明)。更多预训练数据集细节可参阅附录。

Tasks. To verify the generalization ability of VideoMAE V2 pre-trained ViTs as video foundation models, we transfer their representations to a variety of downstream tasks.

任务。为了验证VideoMAE V2预训练ViT作为视频基础模型的泛化能力,我们将其表征迁移至多种下游任务。

Video Action Classification. Action classification is the most common task in video understanding. Its objective is to classify each trimmed clip into a predefined action class and evaluated the average accuracy over action classes. According to the original VideoMAE [90], we perform detailed analysis on this task to investigate the property of scaling video masked auto encoding. In experiments, we choose four datasets to report its performance: Kinetics [43], Something-Something [31], UCF101 [82], and HMDB51 [48]. Kinetics and Something-Something are two large-scale action recognition datasets and have their own unique property for action recognition, where Kinetics contains appearance-centric action classes while SomethingSomething focuses on motion-centric action understanding. UCF101 and HMDB51 are two relatively small datasets and suitable to verify the transfer performance of large pretrained models as shown in the appendix.

视频动作分类。动作分类是视频理解中最常见的任务,其目标是将每个剪辑片段归类到预定义的动作类别中,并评估各类别的平均准确率。根据VideoMAE [90] 的原始设定,我们对该任务进行了详细分析,以探究视频掩码自编码 (video masked auto encoding) 的扩展特性。实验中选取了四个数据集进行性能评估:Kinetics [43]、Something-Something [31]、UCF101 [82] 和 HMDB51 [48]。Kinetics 与 Something-Something 是两个大规模动作识别数据集,各自具有独特的动作识别特性——Kinetics 包含以外观为核心的动作类别,而 Something-Something 侧重于以运动为核心的动作理解。UCF101 和 HMDB51 作为两个较小规模的数据集(如附录所示),适合验证大型预训练模型的迁移性能。

Spatial Action Detection. Action detection is an important task in video understanding, and it aims to recognize all action instances and localize them in space. This task is more challenging than action classification as it deals with more fine-grained action classes and needs to capture detailed structure information to discriminate co-occurring action classes. In experiments, we choose two action detec- tion benchmarks to illustrate the effectiveness of our pre- trained models by VideoMAE V2, namely AVA [32] and AVA-Kinetics [51]. AVA contains the box annotations and their corresponding action labels on keyframes (could be more than one label for each human box). The annotations are done at 1FPS over 80 atomic classes. AVA-Kinetics introduces the AVA style annotations to the Kinetics dataset and a single frame of selected video from Kinetics is annotated with AVA labels. The evaluation metric is frame-level Average Precision (mAP) under the IoU threshold of 0.5.

空间动作检测。动作检测是视频理解中的重要任务,旨在识别所有动作实例并在空间中进行定位。该任务比动作分类更具挑战性,因为它处理更细粒度的动作类别,并需要捕捉详细的结构信息以区分共现的动作类别。实验中,我们选择两个动作检测基准(AVA [32] 和 AVA-Kinetics [51])来展示 VideoMAE V2 预训练模型的有效性。AVA 包含关键帧上的边界框标注及其对应的动作标签(每个人体框可能有多个标签),标注以每秒1帧的频率覆盖80个原子类别。AVA-Kinetics 将 AVA 风格的标注引入 Kinetics 数据集,对 Kinetics 选定视频的单个帧进行 AVA 标签标注。评估指标为 IoU 阈值为0.5时的帧级平均精度 (mAP)。

Temporal Action Detection. Temporal action detection is an important task in long-form video understanding. Its goal is to recognize all action instances in an untrimmed video and localize their temporal extent (starting and ending timestamps). Unlike spatial action detection, temporal action localization aims to focus on precise temporal boundary localization. Intuitively, in addition to capturing semantic information for recognition, the pre-trained models should be able to effectively model the temporal evolu- tion of features to detect action boundaries. In experiments, we choose two temporal action detection benchmarks to evaluate the performance of our pre-trained video models: THUMOS14 [61] and FineAction [61]. THUMOS14 is a relatively small and well labeled temporal action detection dataset, that has been widely used by the previous methods. It only includes sports action classes on this dataset. FineAction is a new large-scale temporal action dataset with fine-grained action class definitions. The evaluation metric is the average mAP under different tIoU thresholds.

时序动作检测。时序动作检测是长视频理解中的重要任务,其目标是在未剪辑视频中识别所有动作实例并定位其时间范围(起始与结束时间戳)。与空间动作检测不同,时序动作定位侧重于精确的时间边界定位。直观而言,预训练模型除了需要捕捉语义信息进行识别外,还应能有效建模特征的时间演化以检测动作边界。实验中我们选择两个时序动作检测基准来评估视频预训练模型的性能:THUMOS14 [61] 和 FineAction [61]。THUMOS14 是一个规模较小但标注完善的时序动作检测数据集,已被先前方法广泛采用,该数据集仅包含体育动作类别。FineAction 是具备细粒度动作类别定义的新兴大规模时序动作数据集,评估指标为不同 tIoU 阈值下的平均 mAP。

Table 1. Ablation study on the decoder masking strategies. Experiments are conducted with ViT-B by pre-training on SSv2 with 800 epochs. “None” refers to the original VideoMAE without decoder masking. We use a better fine-tuning setting than the original VideoMAE. 1 Loss computed over all decoder output tokens. 2 Loss computed over only decoder output tokens invisible to encoder. The default setting for VideoMAE v2 is colored in gray .

表 1: 解码器掩码策略的消融研究。实验采用ViT-B架构,在SSv2数据集上进行800轮预训练。"None"表示原始VideoMAE未使用解码器掩码。我们采用了比原始VideoMAE更优的微调设置。1 损失计算基于所有解码器输出token。2 损失仅计算编码器不可见的解码器输出token。VideoMAE v2的默认设置以灰色标注。

| 解码器掩码方式 | pd | Top-1 | FLOPs |

|---|---|---|---|

| None | 0% | 70.28 | 35.48G |

| Frame | 50% | 69.76 | 25.87G |

| Random | 50% | 64.87 | 25.87G |

| Running cell | 50% | 66.74 | 25.87G |

| Running cell 2 | 25% | 70.22 | 31.63G |

| Running cell 2 | 50% | 70.15 | 25.87G |

| Running cell 12 | 75% | 70.01 | 21.06G |

4.2. Main Results

4.2. 主要结果

We first conduct the experimental study on the core designs of our VideoMAE V2 pre-training framework. We report the fine-tuning action recognition accuracy on the datasets of Kinetics-400 and Something-Something (SthSth) V2. Implementation details about the pre-training and fine-tuning could be found in the appendix.

我们首先对VideoMAE V2预训练框架的核心设计进行了实验研究。报告了在Kinetics-400和Something-Something (SthSth) V2数据集上的微调动作识别准确率。预训练和微调的具体实现细节可参阅附录。

Results on dual masking. We first perform an ablation study on the decoder masking strategy. In this study, we use the ViT-B as the backbone and the pre-training is performed on the Sth-Sth V2 dataset with 800 epochs. The results are evaluated with the fine-tuning accuracy on the SthSth V2 and reported in Table 1. We first re-implement the original VideoMAE without decoder masking and achieve slightly better performance $70.28%$ vs. $69.6%$ Top-1 acc.). Then, we try two masking alternatives in decoder: frame masking and random masking. For frame masking, we only reconstruct half of the frames in the decoder, and for random masking, we stochastic ally drop half of the cubes in the decoder for reconstruction. These two alternatives perform worse than the original VideoMAE. Finally, we apply the running cell masking to select a subset of representative cubes for reconstruction. In this setting, we apply loss functions computed over all decoder output tokens or only encoder-invisible tokens. Agreed with the results in MAE and VideoMAE, the loss on all tokens performs worse partially due to information leakage of these visible tokens from encoder. The running cell masking scheme performs on par with the original result $70.28%$ vs. $70.15%$ top-1 acc.). We also ablates the decoder masking ratio and $50%$ keeps a good trade-off between accuracy and efficiency.

双掩码策略实验结果。我们首先对解码器掩码策略进行消融研究。该研究采用ViT-B作为主干网络,在Sth-Sth V2数据集上进行800轮预训练,结果通过SthSth V2微调准确率评估(如表1所示)。我们首先复现了未使用解码器掩码的原始VideoMAE模型,获得了略优于原论文的性能(70.28% vs. 69.6% Top-1准确率)。随后在解码器中尝试两种掩码替代方案:帧掩码(仅重构半数帧)和随机掩码(随机丢弃半数立方体进行重构),这两种方案表现均逊于原始VideoMAE。最终我们采用动态单元掩码选择代表性立方体子集进行重构,此时对比了在所有解码器输出token上计算损失与仅在编码器不可见token上计算损失的方案。与MAE和VideoMAE的研究结论一致,全token损失函数会因编码器可见token的信息泄露而导致性能下降。动态单元掩码方案与原始结果表现相当(70.28% vs. 70.15% Top-1准确率)。我们还测试了不同解码器掩码比例,发现50%能在准确率与效率间取得最佳平衡。

In Table 2, we report the computational cost (FLOPs), memory consumption (Mems), and per-epoch running time (Time) of dual masking, and compare with the original encoder-only masking in VideoMAE. In this comparison, we use a lightweight decoder only with 4 transformer blocks (channel 384 for ViT-B and 512 for ViT-g). Our dual masking can further improve the computational efficiency of the original asymmetric encoder-decoder architecture in MAE [35]. For memory consumption, we can reduce almost half of the overall memory of feature maps, and this is particularly important for pre-training billion-level video transformer under the available GPUs of limited memory.

在表2中,我们报告了双重掩码的计算成本(FLOPs)、内存消耗(Mems)和每轮运行时间(Time),并与VideoMAE中原始的仅编码器掩码进行了对比。本次对比中,我们仅使用含4个Transformer块( ViT-B通道384/ViT-g通道512 )的轻量级解码器。我们的双重掩码能进一步提升MAE[35]原始非对称编码器-解码器架构的计算效率。在内存消耗方面,我们几乎能将特征图的总内存减少一半,这对于在显存有限的GPU上预训练十亿级视频Transformer尤为重要。

Results on data scaling. We study the influence of pretraining data size on the VideoMAE V2 pre-training. In this experiment, we pre-train the video models with backbones from ViT-B, ViT-L, ViT-H, to ViT $\mathbf{\nabla}^{\cdot}\mathbf{g}$ on our built UnlabeledHybrid dataset with around $1.35\mathbf{M}$ videos. The fine-tuning accuracy is shown in Table 3 on the Kinetics-400 and Table 4 on the Sth-Sth V2. We first compare our performance with the original VideoMAE pre-training. We find that for all backbones, our large-scale pre-training obtains better performance than the original VideoMAE pre-trained on the small-scale datasets of Kinetics-400 or Sth-Sth V2. Meanwhile, we see that the performance gap between two pretraining data datasets becomes more evident as the modal capacity scales up. In particular, on the Sth-Sth V2 dataset, our pre-trained ViT-H outperforms the original VideoMAE by $2.0%$ . It implies that data scale is also important for video masked auto encoding. Meanwhile, we compare with MAE-ST of IG-uncurated pre-training (1M clips). With the same ViT-L backbone, our pre-trained model outperforms it by $1%$ on the Kinetics-400 dataset. This result shows that data quality and diversity might be another important factor.

数据规模扩展的结果。我们研究了预训练数据规模对VideoMAE V2预训练的影响。本实验中,我们在自建的UnlabeledHybrid数据集(约135万视频)上,使用ViT-B、ViT-L、ViT-H至ViT $\mathbf{\nabla}^{\cdot}\mathbf{g}$ 骨干网络进行视频模型预训练。表3和表4分别展示了Kinetics-400和Sth-Sth V2的微调准确率。首先与原始VideoMAE小规模预训练(基于Kinetics-400或Sth-Sth V2数据集)对比发现:所有骨干网络在大规模预训练下均表现更优,且模型容量越大时两种预训练数据的性能差距越显著。特别在Sth-Sth V2数据集上,我们的ViT-H预训练模型比原始VideoMAE高出2.0%,表明数据规模对视频掩码自编码同样关键。此外,与基于IG-uncurated预训练的MAE-ST(100万片段)对比发现:相同ViT-L骨干网络下,我们的模型在Kinetics-400数据集上准确率高1%,说明数据质量与多样性可能是另一重要因素。

Results on model scaling. We study the performance trend with different model capacities. We compare the fine-tuning performance of pre-trained models with ViT-B, ViT-L, ViTH, and ViT $\mathrm{g}$ as shown in Table 3 and Table 4. Vit-g is the first billion-level model pre-trained in video domain. We obtain consistent performance improvement with increasing model capacity. For all compared pre-training methods, the performance improvement from ViT-B to ViT-L is more obvious, while the improvement from ViT-L to ViT-H is much smaller. We further scale up the model capacity to ViT $\mathbf{g}$ architecture. We can still boost the fine-tuning performance further on these two benchmarks with a smaller improvement. We also notice that the performance gap between huge and giant model is very small $(0.1%-0.2%)$ in images [108]. We analyze the performance seems to saturate around 87.0 on the Kinetics-400 and 77.0 on the SthSth V2 for methods without using any extra labeled data.

模型缩放结果。我们研究了不同模型容量下的性能趋势,比较了ViT-B、ViT-L、ViT-H和ViT $\mathrm{g}$预训练模型的微调性能,如表3和表4所示。ViT-g是视频领域首个十亿级预训练模型。随着模型容量增加,我们观察到性能持续提升:所有预训练方法从ViT-B扩展到ViT-L时改进更显著,而ViT-L到ViT-H的提升幅度较小。当继续扩展至ViT $\mathbf{g}$架构时,这两个基准上的微调性能仍能获得较小幅度提升。我们注意到图像领域[108]中巨型与超大型模型的性能差距极小$(0.1%-0.2%)$。分析表明,在不使用额外标注数据的方法中,Kinetics-400的性能饱和点约为87.0,SthSth V2约为77.0。

Table 2. Comparison between dual masking and encoder-only masking. We report the computational cost, memory consumption, and running time for comparison. We perform experiments with backbones (ViT-B and ViT-g) and pre-training on two scales of datasets (Sth-Sth V2 and Unlabeled Hybrid). Time is for 1200 epochs on 64 GPUs. 1 is estimated by training 5 epochs.

表 2: 双重掩码与仅编码器掩码的对比。我们报告了计算成本、内存消耗和运行时间以进行比较。实验采用不同骨干网络 (ViT-B 和 ViT-g) 并在两种规模数据集 (Sth-Sth V2 和 Unlabeled Hybrid) 上进行预训练。时间为 64 块 GPU 上训练 1200 个周期的结果。1 表示通过训练 5 个周期估算得出。

| 掩码方式 | 骨干网络 | 预训练数据集 | FLOPs | 内存占用 | 时间 | 加速比 | Top-1 准确率 |

|---|---|---|---|---|---|---|---|

| 编码器掩码 | ViT-B | Something-SomethingV2 | 35.48G | 631M | 28.4h | 70.28 | |

| 双重掩码 | ViT-B | Something-SomethingV2 | 25.87G | 328M | 15.9h | 1.79x | 70.15 |

| 编码器掩码 | ViT-g | UnlabeledHybrid | 263.93G | 1753M | 356h1 | ||

| 双重掩码 | ViT-g | UnlabeledHybrid | 241.61G | 1050M | 241h | 1.48x | 77.00 |

Table 3. Results on the Kinetics-400 dataset. We scale the pre-training of VideoMAE V2 to billion-level $\mathrm{ViT-g}$ model with millionlevel data size. We report the fine-tuning accuracy of multiple view fusion $(5\times3)$ and single view results in the bracket. All models are pre-trained and fine-tuned at the input of $16\times224\times224$ and sampling stride $\tau=4$ .

表 3: Kinetics-400 数据集上的结果。我们将 VideoMAE V2 的预训练扩展到十亿级 $\mathrm{ViT-g}$ 模型和百万级数据规模。我们报告了多视角融合 $(5\times3)$ 的微调准确率,括号内为单视角结果。所有模型均在 $16\times224\times224$ 输入和采样步长 $\tau=4$ 下进行预训练和微调。

| 方法 | 预训练数据 | 数据规模 | 训练周期 | ViT-B | ViT-L | ViT-H | ViT-g |

|---|---|---|---|---|---|---|---|

| MAE-ST[28] | Kinetics400 | 0.24M | 1600 | 81.3 | 84.8 | 85.1 | |

| MAE-ST[28] | IG-uncurated | 1M | 1600 | 84.4 | |||

| VideoMAE V1[90] | Kinetics400 | 0.24M | 1600 | 81.5 | 85.2 | 86.6 | |

| VideoMAE V2 | UnlabeledHybrid | 1.35M | 1200 | 81.5 (77.0) | 85.4 (81.3) | 86.9 (83.2) | 87.2 (83.9) |

| △Acc. with V1 | %0+ | +0.2% | +0.3% |

Table 4. Results on the Something-Something V2 dataset. We scale the pre-training of VideoMAE V2 to billion-level $\mathrm{ViT{-g}}$ model with million-level data size. We report the fine-tuning accuracy of multiple view fusion $(2\times3)$ and single view results in the brackets. All models are pre-trained at input of $16\times224\times224$ and sampling stride $\tau=2$ . Fine-tuning is on the same size as TSN [99] sampling.

表 4. Something-Something V2 数据集上的结果。我们将 VideoMAE V2 的预训练扩展到十亿级 $\mathrm{ViT{-g}}$ 模型,数据规模达百万级。我们报告了多视角融合 $(2\times3)$ 的微调准确率,括号内为单视角结果。所有模型均在 $16\times224\times224$ 输入和采样步长 $\tau=2$ 下进行预训练。微调采样方式与 TSN [99] 相同。

| method | pre-traindata | datasize | epoch | ViT-B | ViT-L | ViT-H | ViT-g |

|---|---|---|---|---|---|---|---|

| MAE-ST[28] | Kinetics400 | 0.24M | 1600 | 72.1 | 74.1 | ||

| MAE-ST[28] | Kinetics700 | 0.55M | 1600 | 73.6 | 75.5 | ||

| VideoMAEV1[90] | Something-SomethingV2 | 0.17M | 2400 | 70.8 | 74.3 | 74.8 | |

| VideoMAEV2 | UnlabeledHybrid | 1.35M | 1200 | 71.2 (69.5) | 75.7 (74.0) | 76.8 (75.5) | 77.0 (75.7) |

| △Acc.withV1 | — | +0.4% | +1.4% | +2.0% |

Table 5. Study on progressive pre-training. We report the finetuning accuracy of multiple view fusion $(5\times3)$ and single view results in the bracket on the Kinetics-400 dataset. The implementation detail is the same with Table 3.

表 5: 渐进式预训练研究。我们在 Kinetics-400 数据集上报告了多视角融合 $(5\times3)$ 的微调准确率,括号内为单视角结果。实现细节与表 3 相同。

| 方法 | 额外监督 | ViT-H | ViT-g |

|---|---|---|---|

| MAE-ST[28] | K600 | 86.8 | |

| VideoMAEV1[90] | K710 | 88.1 (84.6) | |

| VideoMAEV2 | 86.9 (83.2) | 87.2 (83.9) | |

| VideoMAEV2 | K710 | 88.6 (85.0) | 88.5 (85.6) |

| △Acc.withV1 | K710 | +0.5% |

Results on progressive training. We study the influence of the post-pre-training step in our progressive training scheme. To mitigate over-fitting risk and integrate more human supervision into our pre-trained video models, we merge different versions of Kinetics for post-pre-training (intermediate fine-tuning) and evaluate on the Kinetics-400 dataset. The results are reported in Table 5. We observe that the post-pre-training boosts the performance of largescale pre-trained models for both ViT-H and ViT $\mathrm{^{2}}$ . This result agrees with the findings in image domain [6, 62]. We also apply this technique to VideoMAE V1 pre-trained model and it (ViT-H) achieves worse performance $(88.1%$ vs. $88.6%,$ ), demonstrating the effectiveness of large-scale unsupervised pre-training. We also compare with the intermediate fine-tuning of MAE-ST and our performance is better by $1.8%$ with the same ViT-H backbone.