SST: Self-training with Self-adaptive Threshold ing for Semi-supervised Learning

SST: 基于自适应阈值的半监督学习自训练方法

A R T I C L E I N F O

文章信息

A B S T R A C T

摘要

Neural networks have demonstrated exceptional performance in supervised learning, benefiting from abundant high-quality annotated data. However, obtaining such data in real-world scenarios is costly and labor-intensive. Semi-supervised learning (SSL) offers a solution to this problem by utilizing a small amount of labeled data along with a large volume of unlabeled data. Recent studies, such as Semi-ViT and Noisy Student, which employ consistency regular iz ation or pseudo-labeling, have demonstrated significant achievements. However, they still face challenges, particularly in accurately selecting sufficient high-quality pseudo-labels due to their reliance on fixed thresholds. Recent methods such as FlexMatch and FreeMatch have introduced flexible or self-adaptive threshold ing techniques, greatly advancing SSL research. Nonetheless, their process of updating thresholds at each iteration is deemed time-consuming, computationally intensive, and potentially unnecessary. To address these issues, we propose Self-training with Self-adaptive Threshold ing (SST), a novel, effective, and efficient SSL framework. SST integrates with both supervised (Super-SST) and semi-supervised (Semi-SST) learning. SST introduces an innovative Self-Adaptive Threshold ing (SAT) mechanism that adaptively adjusts class-specific thresholds based on the model’s learning progress. SAT ensures the selection of high-quality pseudo-labeled data, mitigating the risks of inaccurate pseudo-labels and confirmation bias (where models reinforce their own mistakes during training). Specifically, SAT prevents the model from prematurely incorporating low-confidence pseudo-labels, reducing error reinforcement and enhancing model performance. Extensive experiments demonstrate that SST achieves state-ofthe-art performance with remarkable efficiency, generalization, and s cal ability across various architectures and datasets. Notably, Semi-SST-ViT-Huge achieves the best results on competitive ImageNet-1K SSL benchmarks (no external data), with $80.7%/84.9%$ Top-1 accuracy using only $1%%10%$ labeled data. Compared to the fully-supervised DeiT-III-ViT-Huge, which achieves $84.8%$ Top-1 accuracy using $100%$ labeled data, our method demonstrates superior performance using only $10%$ labeled data. This indicates a tenfold reduction in human annotation costs, significantly narrowing the performance disparity between semi-supervised and fullysupervised methods. These advancements pave the way for further innovations in SSL and practical applications where obtaining labeled data is either challenging or costly.

神经网络在监督学习中表现出色,这得益于大量高质量的标注数据。然而,在实际场景中获取此类数据成本高昂且费时费力。半监督学习(SSL)通过结合少量标注数据和大量未标注数据,为解决这一问题提供了方案。近期研究如Semi-ViT和Noisy Student采用一致性正则化或伪标签技术取得了显著成果,但仍面临挑战——尤其是依赖固定阈值导致难以准确选择足够高质量的伪标签。FlexMatch和FreeMatch等最新方法引入了灵活或自适应的阈值技术,极大推动了SSL研究,但其逐轮迭代更新阈值的过程被认为耗时、计算密集且可能非必要。

针对这些问题,我们提出了一种新颖、高效且有效的SSL框架——自适应阈值自训练(SST),该框架可同时集成监督学习(Super-SST)与半监督学习(Semi-SST)。SST创新性地设计了自适应阈值(SAT)机制,能够根据模型学习进度动态调整类别特定阈值,确保筛选高质量伪标注数据,从而降低错误伪标签和确认偏误(模型在训练中强化自身错误)的风险。具体而言,SAT能阻止模型过早引入低置信度伪标签,减少错误强化并提升性能。

大量实验表明,SST在不同架构和数据集上均以卓越的效率、泛化性和扩展性实现了最先进性能。值得注意的是,Semi-SST-ViT-Huge在竞争性ImageNet-1K SSL基准测试(无外部数据)中取得最佳结果:仅用$1%/10%$标注数据便达到$80.7%/84.9%$的Top-1准确率。相比使用$100%$标注数据达到$84.8%$准确率的全监督DeiT-III-ViT-Huge,我们的方法仅用$10%$标注数据即展现出更优性能,这意味着人类标注成本可降低十倍,显著缩小了半监督与全监督方法的性能差距。这些进展为SSL创新及标注数据获取困难或成本高昂的实际应用铺平了道路。

1. Introduction

1. 引言

Neural networks have demonstrated exceptional performance in various supervised learning tasks [1, 2, 3], benefiting from abundant high-quality annotated data. However, obtaining sufficient high-quality annotated data in real-world scenarios can be both costly and labor-intensive. Therefore, learning from a few labeled examples while effectively utilizing abundant unlabeled data has become a pressing challenge. Semi-supervised learning (SSL) [4, 5, 6, 7] offers a solution to this problem by utilizing a small amount of labeled data along with a large volume of unlabeled data.

神经网络在各种监督学习任务中表现卓越 [1, 2, 3],这得益于大量高质量的标注数据。然而,在现实场景中获取足够的高质量标注数据既昂贵又耗时。因此,如何从少量标注样本中学习,同时有效利用大量未标注数据,已成为亟待解决的挑战。半监督学习 (SSL) [4, 5, 6, 7] 通过结合少量标注数据和大量未标注数据,为解决这一问题提供了方案。

Consistency regular iz ation, pseudo-labeling, and self-training are prominent SSL methods. Consistency regularization [8, 9, 10, 11, 12, 13, 14, 15] promotes the model to yield consistent predictions for the same unlabeled sample under various perturbations, thereby enhancing model performance. Pseudo-labeling and self-training [16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 11, 6, 28, 29, 30, 31, 32, 33, 34] are closely related in SSL. Pseudo-labeling assigns labels to unlabeled data, while self-training iterative ly uses these pseudo-labels to enhance model performance.

一致性正则化 (Consistency regularization) 、伪标注 (pseudo-labeling) 和自训练 (self-training) 是半监督学习 (SSL) 中的主要方法。一致性正则化 [8, 9, 10, 11, 12, 13, 14, 15] 促使模型在不同扰动下对同一无标注样本产生一致的预测,从而提升模型性能。伪标注与自训练 [16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 11, 6, 28, 29, 30, 31, 32, 33, 34] 在半监督学习中紧密关联——伪标注为无标注数据分配标签,而自训练则迭代使用这些伪标签来优化模型性能。

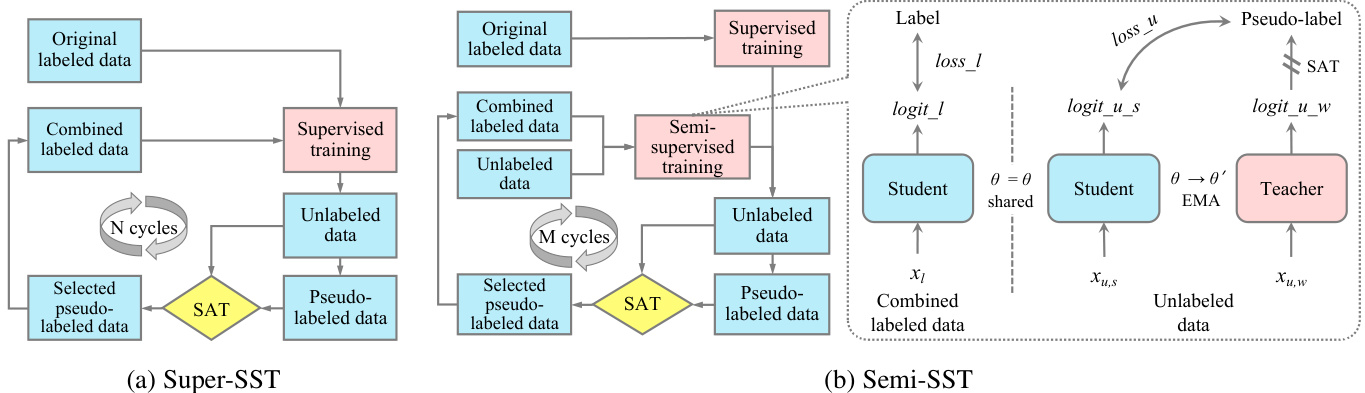

Figure 1: Illustration of the Self-training with Self-adaptive Threshold ing (SST) framework. Our SST can be integrated with either supervised or semi-supervised algorithms, referred to as Super-SST and Semi-SST, respectively. Self-Adaptive Threshold ing (SAT) is a core mechanism in our SST frameworks, accurately and adaptively adjusting class-specific thresholds according to the learning progress of the model. This ensures both high quality and sufficient quantity of selected pseudo-labeled data.

图 1: 自适应阈值自训练 (SST) 框架示意图。我们的 SST 可与监督或半监督算法结合使用,分别称为 Super-SST 和 Semi-SST。自适应阈值 (SAT) 是 SST 框架的核心机制,能根据模型学习进度精准动态调整各类别阈值,确保所选伪标签数据兼具高质量与足量性。

Recent studies, such as Semi-ViT [35] and Noisy Student [27], which employ consistency regular iz ation, pseudolabeling, or self-training, have demonstrated significant achievements. However, they still face challenges, particularly in accurately selecting sufficient high-quality pseudo-labels due to their reliance on fixed thresholds. Recent methods such as FlexMatch [31] and FreeMatch [36] have introduced flexible or self-adaptive threshold ing techniques, greatly advancing SSL research. Nonetheless, their process of updating thresholds at each iteration is deemed time-consuming, computationally intensive, and potentially unnecessary. Moreover, both FlexMatch and FreeMatch predict unlabeled data and update thresholds based on models that are still in training, rather than on converged models with high accuracy. During the early stages of training, using lower-performance models to predict unlabeled data inevitably introduces more inaccurate pseudo-labels, leading to a feedback loop of increasing inaccuracies. This amplifies and reinforces the confirmation bias [25, 37, 38] during training, ultimately resulting in suboptimal performance.

近期研究如Semi-ViT [35]和Noisy Student [27]通过采用一致性正则化、伪标签或自训练策略取得了显著成果。然而这些方法仍面临挑战,尤其是由于依赖固定阈值而难以准确筛选足够数量的高质量伪标签。FlexMatch [31]和FreeMatch [36]等最新技术引入了灵活或自适应的阈值调整机制,极大推动了半监督学习(SSL)研究进展。但它们在每次迭代时更新阈值的流程被认为存在耗时、计算密集且可能非必要的问题。此外,FlexMatch和FreeMatch都基于尚未收敛的训练中模型(而非高精度收敛模型)来预测未标注数据并更新阈值。在训练初期使用低性能模型预测未标注数据,必然会引入更多不准确的伪标签,形成误差不断累积的反馈循环。这种现象会放大并强化训练过程中的确认偏差(confirmation bias) [25,37,38],最终导致模型性能欠佳。

To address the aforementioned issues, we propose Self-training with Self-adaptive Threshold ing (SST), a novel, effective, and efficient SSL framework that significantly advances the field of SSL. As illustrated in Figure 1, our SST framework can be integrated with either supervised or semi-supervised algorithms. We refer to the combination of supervised training and SST as Super-SST, and the combination of semi-supervised training and SST as Semi-SST. Our SST introduces an innovative Self-Adaptive Threshold ing (SAT) mechanism, which accurately and adaptively adjusts class-specific thresholds according to the learning progress of the model. This approach ensures both high quality and sufficient quantity of selected pseudo-labeled data, mitigating the risks of inaccurate pseudo-labels and confirmation bias, thereby enhancing overall model performance.

为解决上述问题,我们提出了一种新颖、高效且有效的自监督学习框架——自适应阈值自训练(Self-training with Self-adaptive Thresholding,简称SST)。如图1所示,该框架可与监督或半监督算法结合使用,其中监督训练与SST的结合称为Super-SST,半监督训练与SST的结合称为Semi-SST。SST的核心创新在于自适应阈值机制(SAT),它能根据模型学习进度动态调整各类别阈值,确保所选伪标签数据兼具高质量与足量性,从而降低伪标签错误和确认偏差的风险,最终提升模型整体性能。

SAT differs from existing dynamic threshold ing methods like FlexMatch and FreeMatch in three key aspects:

SAT与现有动态阈值方法(如FlexMatch和FreeMatch)存在三个关键差异:

• Threshold Derivation: SAT adopts a confidence-based approach, applying a cutoff $C$ to filter out low-confidence probabilities per class, then averaging the remaining probabilities and scaling by a factor $S$ to derive class-specific thresholds. Unlike FlexMatch, which relies on curriculum learning based on predicted unlabeled sample counts and a global threshold, SAT avoids such dependencies. Unlike FreeMatch, which uses exponential moving averages (EMA) and a global threshold without low-confidence filtering, SAT introduces a cutoff mechanism while dispensing with both EMA and global threshold.

• 阈值推导:SAT采用基于置信度的方法,通过设定截断值$C$来过滤每类的低置信度概率,然后对剩余概率取平均值并用缩放因子$S$调整,从而得到类别特定阈值。不同于FlexMatch依赖基于预测未标记样本数量和全局阈值的课程学习机制,SAT避免了此类依赖。与FreeMatch使用指数移动平均(EMA)和全局阈值但未进行低置信度过滤不同,SAT在摒弃EMA和全局阈值的同时引入了截断机制。

• Threshold Updates: SAT updates thresholds once per training cycle using a well-trained model, limiting updates to single digits. This substantially reduces computational overhead and mitigates confirmation bias. In contrast, FlexMatch and FreeMatch update thresholds every iteration (e.g., over 1,000,000 updates) using a model still in training, incurring substantial computational cost and exacerbating confirmation bias during early training.

• 阈值更新: SAT 每个训练周期使用训练良好的模型更新一次阈值,将更新次数限制在个位数。这大幅降低了计算开销并缓解了确认偏差。相比之下,FlexMatch 和 FreeMatch 每次迭代(例如超过 1,000,000 次更新)都使用仍在训练中的模型更新阈值,导致巨大的计算成本并在训练早期加剧确认偏差。

• Empirical Advantages: Extensive empirical evaluations demonstrate SAT’s advantages across multiple benchmarks. For instance, on CIFAR-100 with 400, 2500, and 10000 labels, FreeMatch attains a mean error rate of $28.71%$ using about 240 GPU hours. In contrast, Super-SST and Semi-SST, powered by SAT’s mechanism, achieve lower mean error rates of $22.74%$ and $20.50%$ , respectively, with only 3 and 4 GPU hours, yielding speedups of $80\times$ and $60\times$ .

• 实证优势:大量实证评估表明,SAT在多个基准测试中均展现出优势。例如,在标注量为400、2500和10000的CIFAR-100数据集上,FreeMatch的平均错误率为$28.71%$,耗时约240 GPU小时;而基于SAT机制的Super-SST和Semi-SST仅用3/4 GPU小时就分别实现了$22.74%$和$20.50%$的更优平均错误率,计算效率提升达$80\times$和$60\times$。

Table 1 Comparison of Super-SST and Semi-SST.

表 1: Super-SST与Semi-SST对比

| 对比维度 | Super-SST | Semi-SST |

|---|---|---|

| 核心训练算法 | 监督训练算法 | 半监督训练算法 (EMA-Teacher) |

| 伪标签生成 | 仅离线 | 离线与在线 |

| SAT应用场景 | 离线伪标签筛选 | 离线与在线伪标签筛选 |

| 训练数据 | 离线混合标注数据 (原始数据+SAT筛选伪标签,视作人工标注) | 离线混合标注数据与在线伪标注数据 |

| 损失函数 | 交叉熵损失 (CE) 作用于离线混合标注数据 (L) | 总损失=离线混合标注数据交叉熵损失 + 在线伪标注数据加权交叉熵损失 (C = L + μLu) |

The Super-SST pipeline, illustrated in Figure 1a, consists of several steps: 1) Initialize the model with the original labeled data. 2) Utilize the well-trained, high-performance model to predict the unlabeled data. 3) Apply Self-Adaptive Threshold ing (SAT) to accurately generate or adjust class-specific thresholds. 4) Select high-confidence and reliable pseudo-labels using the class-specific thresholds, reducing inaccurate pseudo-labels and mitigating confirmation bias. 5) Expand the labeled training set by combining the original labeled data with the carefully selected pseudo-labeled data, treating them equally. 6) Optimize the model’s performance using the combined labeled data. 7) Iterative training by returning to step 2, continuously refining the model over multiple cycles until its performance converges.

Super-SST流程如图1a所示,包含以下步骤:1) 使用原始标注数据初始化模型;2) 调用训练完备的高性能模型预测未标注数据;3) 应用自适应阈值(SAT)精确生成或调整类特定阈值;4) 通过类特定阈值筛选高置信度可靠伪标签,减少错误标注并缓解确认偏差;5) 将原始标注数据与精选伪标签数据等权合并,扩展训练集;6) 基于混合标注数据优化模型性能;7) 返回步骤2进行迭代训练,通过多轮循环持续优化模型直至性能收敛。

As illustrated in Figure 1b, Semi-SST enhances Super-SST’s offline pseudo-labeling pipeline by integrating an EMA-Teacher model [35], introducing dynamic online pseudo-labeling to complement the offline process. Specifically, in EMA-Teacher, the teacher model dynamically generates pseudo-labels online, while the student model is trained on both offline combined labeled data and these online pseudo-labeled data. Semi-SST thus employs both offline and online pseudo-labeling mechanisms, with SAT applied in both cases to ensure the selection of high-confidence pseudo-labels. Table 1 outlines the primary distinctions between Super-SST and Semi-SST.

如图 1b 所示,Semi-SST 通过集成 EMA-Teacher 模型 [35] 增强了 Super-SST 的离线伪标注流程,引入动态在线伪标注作为离线流程的补充。具体而言,在 EMA-Teacher 中,教师模型动态生成在线伪标注,而学生模型则在离线组合标注数据和这些在线伪标注数据上进行训练。因此,Semi-SST 同时采用离线和在线伪标注机制,并在两种情况下应用 SAT (Selective Annotation Training) 以确保筛选高置信度的伪标注。表 1 概述了 Super-SST 与 Semi-SST 的主要区别。

Extensive experiments and results strongly confirm the effectiveness, efficiency, generalization, and s cal ability of our methods across both CNN-based and Transformer-based architectures and various datasets, including ImageNet-1K, CIFAR-100, Food-101, and i Naturalist. Compared to supervised and semi-supervised baselines, our methods achieve a relative improvement of $0.7%{-20.7%}$ $(0.2%{-}12.1%)$ in Top-1 accuracy with only $1%$ $(10%)$ labeled data. Notably, Semi-SST-ViT-Huge achieves the best results on competitive ImageNet-1K SSL benchmarks (no external data), with $80.7%/84.9%$ Top-1 accuracy using only $1%/10%$ labeled data. Compared to the fully-supervised DeiT-III-ViT-Huge, which achieves $84.8%$ Top-1 accuracy using $100%$ labeled data, our method demonstrates superior performance using only $10%$ labeled data. This indicates a tenfold reduction in human annotation costs, significantly reducing the performance disparity between semi-supervised and fully-supervised methods. Moreover, our SST methods not only achieve high performance but also demonstrate remarkable efficiency, resulting in an exceptional cost-performance ratio. Furthermore, on the ImageNet-1K benchmark, Super-SST’s accuracy outperforms FlexMatch and FreeMatch by $6.27%$ and $4.99%$ , respectively, requiring only 3 threshold updates, while FlexMatch and FreeMatch demand over 1,000,000 threshold updates.

大量实验和结果有力证实了我们的方法在CNN和Transformer架构以及多种数据集(包括ImageNet-1K、CIFAR-100、Food-101和iNaturalist)上的有效性、高效性、泛化性和可扩展性。与监督学习和半监督学习基线相比,我们的方法仅使用$1%$ ($10%$)标注数据时,Top-1准确率相对提升了$0.7%{-20.7%}$ ($0.2%{-}12.1%$)。值得注意的是,Semi-SST-ViT-Huge在竞争激烈的ImageNet-1K半监督学习基准测试(无外部数据)中取得了最佳结果,仅使用$1%/10%$标注数据就实现了$80.7%/84.9%$的Top-1准确率。相比之下,完全监督的DeiT-III-ViT-Huge使用$100%$标注数据才达到$84.8%$的Top-1准确率,而我们的方法仅需$10%$标注数据就展现出更优性能。这意味着人工标注成本降低了十倍,显著缩小了半监督与全监督方法之间的性能差距。此外,我们的SST方法不仅实现了高性能,还表现出卓越的效率,具有极佳的成本效益比。在ImageNet-1K基准测试中,Super-SST的准确率分别比FlexMatch和FreeMatch高出$6.27%$和$4.99%$,且仅需3次阈值更新,而FlexMatch和FreeMatch则需要超过1,000,000次阈值更新。

In summary, our contributions are three-fold:

总之,我们的贡献主要体现在三个方面:

• We propose an innovative Self-training with Self-adaptive Threshold ing (SST) framework, along with its two variations: Super-SST and Semi-SST, significantly advancing the SSL field. • SST introduces a novel Self-Adaptive Threshold ing (SAT) technique, which accurately and adaptively adjusts class-specific thresholds according to the model’s learning progress. This mechanism ensures both the high

• 我们提出了一种创新的自训练与自适应阈值(Self-training with Self-adaptive Thresholding,SST)框架及其两种变体:Super-SST和Semi-SST,显著推动了自监督学习(SSL)领域的发展。

• SST引入了一种新颖的自适应阈值(Self-Adaptive Thresholding,SAT)技术,能够根据模型的学习进度准确且自适应地调整类别特定阈值。这一机制既保证了高...

Algorithm 1 PyTorch-like Pseudo-code of SST

算法 1: SST 的类 PyTorch 伪代码

Step 1: Initialize the model with original labeled data model $=$ initialize model(labeled data)

步骤1:用原始标注数据初始化模型 model = initialize model(labeled data)

Set hyper parameters max_cycles = 6

设置超参数 max_cycles = 6

or cycle in range(max_cycles): # Step 2: Predict unlabeled data with the well-trained high-performance model outputs $=$ model.predict(unlabeled data) probabilities $=$ softmax(outputs)

或循环范围内(max_cycles): # 步骤2: 用训练好的高性能模型预测未标注数据

outputs $=$ model.predict(未标注数据)

probabilities $=$ softmax(outputs)

Step 3: Generate class-specific thresholds with Self-Adaptive Threshold ing (SAT) class specific thresholds $=$ self adaptive threshold ing(probabilities)

步骤3:通过自适应阈值法(SAT)生成类别特定阈值

类别特定阈值 = 自适应阈值法(概率)

Step 5: Combine labeled data with selected pseudo-labeled data combined labeled data $=$ labeled data $^+$ pseudo labeled data

步骤5:将标注数据与选定的伪标注数据结合

combined labeled data $=$ labeled data $^+$ pseudo labeled data

Step 6: Optimize the model’s performance with the combined labeled data model.train(combined labeled data) # In Semi-SST, unlabeled data is also required here # Step 7: Return to step 2 for iterative training until the model’s performance converges if model.has converged():

步骤6:使用合并的标注数据优化模型性能

model.train(combined labeled data)

在Semi-SST中,此处还需要未标注数据

步骤7:返回步骤2进行迭代训练,直到模型性能收敛

if model.has converged():

Self-trained model is ready for inference self trained model $=$ model

自训练模型已准备好进行推理 自训练模型 $=$ 模型

quality and sufficient quantity of selected pseudo-labeled data, mitigating the risks of inaccurate pseudo-labels and confirmation bias, thereby enhancing overall model performance.

所选伪标签数据的质量和足够数量,减轻了不准确伪标签和确认偏差的风险,从而提升了整体模型性能。

• SST achieves state-of-the-art (SOTA) performance across various architectures and datasets while demonstrating remarkable efficiency, resulting in an exceptional cost-performance ratio. Moreover, SST significantly reduces the reliance on human annotation, bridging the gap between semi-supervised and fully-supervised learning.

• SST 在各种架构和数据集上实现了最先进的 (SOTA) 性能,同时展现出卓越的效率,从而具备出色的性价比。此外,SST 显著降低了对人工标注的依赖,缩小了半监督学习与全监督学习之间的差距。

The organization of this paper is as follows: Section 2 outlines the study’s objectives. Section 3 details the methodology. Section 4 describes the experiments and reports the results. Section 5 reviews related works, highlighting the differences between our methods and previous approaches. Section 6 and 7 presents the limitation and conclusion, respectively.

本文结构如下:第2节概述研究目标。第3节详述方法论。第4节描述实验并报告结果。第5节回顾相关工作,重点说明本研究方法与先前方法的差异。第6节与第7节分别阐述局限性与结论。

2. Research objectives

2. 研究目标

The SSL methods previously discussed in Section 1 either rely on fixed threshold ing or use costly and performancelimited dynamic threshold ing techniques to select pseudo-labels. Both approaches tend to introduce more noisy labels during the early training stages, leading to a feedback loop of increasing inaccuracies and reinforcing confirmation bias, ultimately resulting in suboptimal performance. To overcome these challenges, we propose the SST framework and its core component, the SAT mechanism. These innovations aim to accurately select high-quality and sufficient pseudo-labeled data during training, minimizing the risks of inaccuracies and confirmation bias, thereby enhancing overall model performance. We hope this paper will inspire future research and provide cost-effective solutions for practical applications where acquiring labeled data is challenging or costly.

1.1节讨论的SSL方法要么依赖固定阈值,要么采用昂贵且性能受限的动态阈值技术来选择伪标签。这两种方法在训练初期都容易引入更多噪声标签,导致误差累积的恶性循环并强化确认偏误,最终影响模型性能。为应对这些挑战,我们提出SST框架及其核心组件SAT机制。这些创新旨在训练过程中精准筛选充足的高质量伪标签数据,最大限度降低误差和确认偏误风险,从而提升模型整体性能。我们希望本文能启发后续研究,并为标注数据获取困难或成本高昂的实际应用场景提供经济高效的解决方案。

3. Methodology

3. 方法论

Figure 1 provides a visual overview of SST, while Algorithm 1 presents its pseudo-code implementation. The algorithm is organized into several distinct steps:

图 1: SST的视觉概览,而算法 1 则展示了其伪代码实现。该算法分为以下几个不同步骤:

- Initialization. We initialize the model parameters $\theta$ using the original labeled samples . This initialization phase employs the standard Cross-Entropy (CE) loss Equation 1 to optimize the model’s initial parameters, ensuring a strong starting checkpoint for subsequent training phases.

- 初始化。我们使用原始标注样本 初始化模型参数 $\theta$。该初始化阶段采用标准交叉熵损失 (Cross-Entropy, CE) 公式1来优化模型的初始参数,为后续训练阶段提供稳健的起点。

$$

\mathcal{L}{l}=\frac{1}{N_{l}}\sum_{i=1}^{N_{l}}\mathbf{C}\mathbf{E}\left(f(x_{l i};\theta),y_{l i}\right)

$$

$$

\mathcal{L}{l}=\frac{1}{N_{l}}\sum_{i=1}^{N_{l}}\mathbf{C}\mathbf{E}\left(f(x_{l i};\theta),y_{l i}\right)

$$

- Prediction. We use the well-trained high-performance model $\theta$ to predict all unlabeled data , outputting the probabilities over $N_{c}$ classes.

- 预测。我们使用训练好的高性能模型 $\theta$ 预测所有未标注数据 ,输出 $N_{c}$ 个类别的概率分布。

$$

p_{u i}=\mathrm{softmax}(f(x_{u i};\theta))\quad\mathrm{for}\quad i=1,2,\ldots,N_{u}

$$

$$

p_{u i}=\mathrm{softmax}(f(x_{u i};\theta))\quad\mathrm{for}\quad i=1,2,\ldots,N_{u}

$$

This step produces an $N_{u}\times N_{c}$ probability matrix, where $N_{u}$ represents the number of unlabeled samples and $N_{c}$ denotes the total number of classes. Each element in this matrix corresponds to the predicted probability of a sample belonging to a particular class.

此步骤生成一个 $N_{u}\times N_{c}$ 的概率矩阵,其中 $N_{u}$ 表示未标记样本的数量,$N_{c}$ 代表类别总数。该矩阵中的每个元素对应样本属于特定类别的预测概率。

- Self-Adaptive Threshold ing (SAT). With the generated $N_{u}\times N_{c}$ probability matrix, we proceed to apply the SAT mechanism. The SAT mechanism is a pivotal component of the SST framework, designed to accurately and adaptively adjust class-specific thresholds according to the learning progress of the model. SAT mechanism involves several sub-steps.

- 自适应阈值调整 (SAT)。通过生成的 $N_{u}\times N_{c}$ 概率矩阵,我们开始应用SAT机制。该机制是SST框架的核心组件,旨在根据模型的学习进度精准且自适应地调整各类别特定阈值。SAT机制包含以下子步骤。

- Sorting: Given the $N_{u}\times N_{c}$ probability matrix $\mathbf{P}$ , where $\mathbf{P}{i,j}$ indicates the predicted probability of the $i\cdot$ -th unlabeled sample belonging to the $j$ -th class, we sort the probabilities for each class $j$ in descending order. Let $\mathbf{P}_{:,j}$ denote the vector of probabilities for class $j$ .

- 排序: 给定 $N_{u}\times N_{c}$ 概率矩阵 $\mathbf{P}$ ,其中 $\mathbf{P}{i,j}$ 表示第 $i$ 个未标记样本属于第 $j$ 个类别的预测概率。我们对每个类别 $j$ 的概率向量 $\mathbf{P}_{:,j}$ 进行降序排序。

$$

\mathbf{P}{:,j}^{\mathrm{sorted}}=\mathrm{sort}(\mathbf{P}_{:,j},\mathrm{descending})

$$

$$

\mathbf{P}{:,j}^{\mathrm{sorted}}=\mathrm{sort}(\mathbf{P}_{:,j},\mathrm{descending})

$$

$$

\mathbf{P}{:,j}^{\mathrm{filtered}}={p\in\mathbf{P}_{:,j}^{\mathrm{sorted}}\mid p>C}

$$

$$

\mathbf{P}{:,j}^{\mathrm{filtered}}={p\in\mathbf{P}_{:,j}^{\mathrm{sorted}}\mid p>C}

$$

- Average Calculation: We compute the average of the remaining probabilities for each class $j$ :

- 平均值计算:我们计算每个类别 $j$ 剩余概率的平均值:

$$

\bar{p}{j}=\frac{1}{|\mathbf{P}{:,j}^{\mathrm{filtered}}|}\sum_{p\in\mathbf{P}_{:,j}^{\mathrm{filtered}}}p

$$

$$

\bar{p}{j}=\frac{1}{|\mathbf{P}{:,j}^{\mathrm{filtered}}|}\sum_{p\in\mathbf{P}_{:,j}^{\mathrm{filtered}}}p

$$

where $\mathbf{\underline{{|}}P_{:,j}^{\mathrm{filtered}}|}$ is the number of filtered probabilities for class $j$ .

其中 $\mathbf{\underline{{|}}P_{:,j}^{\mathrm{filtered}}|}$ 表示类别 $j$ 的过滤后概率数量。

- |Thresho|ld Scaling: We then multiply the average value of each class $j$ by a scaling factor $S$ to obtain the class-specific thresholds 𝜏𝑗 :

- 阈值缩放 (Threshold Scaling): 我们将每个类别 $j$ 的平均值乘以缩放因子 $S$,得到类别特定阈值 𝜏𝑗:

$$

\tau_{j}=S\cdot\bar{p}{j}\quad\mathrm{for}\quad j=1,2,\dots,N_{c}

$$

$$

\tau_{j}=S\cdot\bar{p}{j}\quad\mathrm{for}\quad j=1,2,\dots,N_{c}

$$

The SAT mechanism ensures that lower, less reliable probabilities do not influence the threshold computation, thereby maintaining the reliability and quality of the pseudo-labeling process.

SAT机制确保较低且可靠性较差的概率不会影响阈值计算,从而维持伪标签过程的可靠性和质量。

- Selection. We use the class-specific thresholds to select high-confidence and reliable pseudo-labels, reducing the introduction of inaccurate pseudo-labels and mitigating confirmation bias. For each unlabeled sample ,p tlhe eisu dsoel-leacbteeld ias s par op d sue cued do -blya $\hat{y}{u i}=\arg\operatorname*{max}{j}p_{u i,j}$ .associated confidence $\hat{p}{u i}=\operatorname*{max}{j}p_{u i,j}$ . An $x_{u i}$ $\hat{p}{u i}>\tau_{\hat{y}_{u i}}$

- 选择。我们使用类别特定阈值 来筛选高置信度且可靠的伪标签,减少不准确伪标签的引入并缓解确认偏差。对于每个无标签样本 ,其伪标签被选择为 $\hat{y}{u i}=\arg\operatorname*{max}{j}p_{u i,j}$,关联置信度为 $\hat{p}{u i}=\operatorname*{max}{j}p_{u i,j}$。当 $\hat{p}{u i}>\tau_{\hat{y}{u i}}$ 时,样本 $x_{u i}$ 被选中。

$$

\hat{y}{u i}=\arg\operatorname*{max}{j}p_{u i,j}

$$

$$

\hat{y}{u i}=\arg\operatorname*{max}{j}p_{u i,j}

$$

$$

\hat{p}{u i}=\operatorname*{max}{j}p_{u i,j}

$$

$$

\hat{p}{u i}=\operatorname*{max}{j}p_{u i,j}

$$

- Combination. We combine the labeled data with the carefully selected high-confidence pseudo-labeled data, thereby expanding the labeled training set.

- 组合。我们将标注数据与精心筛选的高置信度伪标注数据相结合,从而扩展标注训练集。

$$

{(x_{i},y_{i})}{i=1}^{N_{l+u}}={(x_{l i},y_{l i})}{i=1}^{N_{l}}\cup{(x_{u i},\hat{y}{u i})\mid\hat{p}{u i}>\tau_{\hat{y}{u i}}}{i=1}^{N_{u}}

$$

$$

{(x_{i},y_{i})}{i=1}^{N_{l+u}}={(x_{l i},y_{l i})}{i=1}^{N_{l}}\cup{(x_{u i},\hat{y}{u i})\mid\hat{p}{u i}>\tau_{\hat{y}{u i}}}{i=1}^{N_{u}}

$$

- Optimization. In the Super-SST framework, the model is optimized on the offline combined labeled data using the standard CE loss, as defined in Equation 1. As discussed in Section 1, the high-confidence pseudo-labeled data included in the combined labeled data are selected offline via SAT and are treated as equally reliable as human-labeled data. In contrast, Semi-SST is built upon an EMA-Teacher framework, where the teacher model dynamically generates pseudo-labels during training, and the student model is trained on both offline combined labeled data and online pseudo-labeled data. We optimize the student model’s performance using the total loss function $\mathcal{L}$ , which consists of the CE loss for offline combined labeled data $\mathcal{L}{l}$ and the CE loss for online pseudo-labeled data $\mathcal{L}{u}$ , weighted by a factor $\mu$ to control the contribution of the $\mathcal{L}_{u}$ . This formulation ensures that the model benefits from both high-confidence offline pseudo-labels and dynamic online pseudo-labeling, with SAT filtering applied in both cases to maintain pseudo-label reliability.

- 优化。在Super-SST框架中,模型通过标准交叉熵损失(CE loss)在离线组合标注数据上进行优化,如公式1所定义。如第1节所述,组合标注数据中包含的高置信度伪标签数据是通过SAT离线筛选的,其可靠性被视为与人工标注数据等同。相比之下,Semi-SST基于EMA-Teacher框架构建,其中教师模型在训练期间动态生成伪标签,学生模型则在离线组合标注数据和在线伪标签数据上进行训练。我们使用总损失函数$\mathcal{L}$优化学生模型性能,该函数由离线组合标注数据的CE损失$\mathcal{L}{l}$和在线伪标签数据的CE损失$\mathcal{L}{u}$组成,并通过系数$\mu$控制$\mathcal{L}_{u}$的贡献权重。这种设计确保模型既能利用高置信度的离线伪标签,又能受益于动态在线伪标注技术,且两种情况下都应用SAT过滤机制以保证伪标签可靠性。

$$

\mathcal{L}=\mathcal{L}{l}+\mu\mathcal{L}_{u},

$$

$$

\mathcal{L}=\mathcal{L}{l}+\mu\mathcal{L}_{u},

$$

$$

\mathcal{L}{u}=\frac{1}{{{N}{u}}}\sum_{i=1}^{{N}{u}}\left[{\hat{p}{u i}}>{{\tau}{\hat{y}{u i}}}\right]\cdot\mathbb{C}\mathbb{E}\left({f({x}{u i};\theta),\hat{y}_{u i}}\right),

$$

$$

\mathcal{L}{u}=\frac{1}{{{N}{u}}}\sum_{i=1}^{{N}{u}}\left[{\hat{p}{u i}}>{{\tau}{\hat{y}{u i}}}\right]\cdot\mathbb{C}\mathbb{E}\left({f({x}{u i};\theta),\hat{y}_{u i}}\right),

$$

where $[\cdot]$ is the indicator function, and $\tau_{\hat{y}{u i}}$ is the threshold of class $\hat{y}_{u i}$ .

其中 $[\cdot]$ 是指示函数,$\tau_{\hat{y}{u i}}$ 是类别 $\hat{y}_{u i}$ 的阈值。

As illustrated in Figure 1b, for an unlabeled sample $x_{u}$ , both weak and strong augmentations are applied, generating $x_{u,w}$ and $x_{u,s}$ , respectively. The weakly-augmented $x_{u,w}$ is processed by the teacher network, outputting probabilities over classes. The pseudo-label is generated as described previously. The pseudo-labels selected by SAT can be used to guide the student model’s learning on the strongly-augmented $x_{u,s}$ , minimizing the CE loss. In Semi-SST’s semi-supervised training, we utilize the EMA-Teacher algorithm, updating the teacher model parameters $\theta^{\prime}$ through exponential moving average (EMA) from the student model parameters $\theta$ :

如图 1b 所示,对于未标记样本 $x_{u}$,同时应用弱增强和强增强,分别生成 $x_{u,w}$ 和 $x_{u,s}$。弱增强的 $x_{u,w}$ 通过教师网络处理,输出类别概率。伪标签的生成方式如前所述。SAT 筛选的伪标签可用于指导学生模型在强增强样本 $x_{u,s}$ 上的学习,从而最小化交叉熵损失。在 Semi-SST 的半监督训练中,我们采用 EMA-Teacher 算法,通过学生模型参数 $\theta$ 的指数移动平均 (EMA) 更新教师模型参数 $\theta^{\prime}$:

$$

\theta^{\prime}:=m\theta^{\prime}+(1-m)\theta,

$$

$$

\theta^{\prime}:=m\theta^{\prime}+(1-m)\theta,

$$

where the momentum decay $m$ is a number close to 1, such as 0.9999.

动量衰减 $m$ 是一个接近1的数,例如0.9999。

- Iterative training. Return to the prediction step for iterative training, continuously refining the model over multiple cycles until its performance converges. More details of the pipeline can be found in Figure 1 and Algorithm 1.

- 迭代训练。返回预测步骤进行迭代训练,通过多轮循环持续优化模型直至性能收敛。更多流程细节见图1和算法1。

4. Experiments and results

4. 实验与结果

4.1. Datasets and implementation details

4.1. 数据集与实现细节

Datasets. As shown in Table 2, we conduct experiments on eight publicly accessible benchmark datasets: ImageNet1K, Food-101, i Naturalist, CIFAR-100, CIFAR-10, SVHN, STL-10, and Clothing-1M. These datasets collectively provide a comprehensive benchmark for evaluating the performance of SSL algorithms across a wide variety of domains and complexities. All SSL experiments in this paper are performed on randomly sampled labeled data from the training set of each dataset.

数据集。如表 2 所示,我们在八个公开可用的基准数据集上进行了实验:ImageNet1K、Food-101、i Naturalist、CIFAR-100、CIFAR-10、SVHN、STL-10 和 Clothing-1M。这些数据集共同为评估自监督学习 (SSL) 算法在不同领域和复杂度下的性能提供了全面的基准。本文中所有 SSL 实验均在从各数据集训练集中随机采样的带标签数据上进行。

• ImageNet-1K [1] covers a broad spectrum of real-world objects, which is extensively used in computer vision tasks. It contains approximately 1.28M training images and 50K validation images, divided into 1,000 categories.

• ImageNet-1K [1] 涵盖了现实世界物体的广泛类别,被广泛应用于计算机视觉任务。它包含约128万张训练图像和5万张验证图像,划分为1000个类别。

Table 2 All experiments in this paper are performed on eight publicly accessible benchmark datasets.

表 2 本文所有实验均在八个公开可用的基准数据集上进行。

| 数据集 | 训练数据量 | 测试数据量 | 类别数 |

|---|---|---|---|

| ImageNet-1K[1] | 1,281,167 | 50,000 | 1,000 |

| iNaturalist [40] | 265,213 | 3,030 | 1,010 |

| Food-101[39] | 75,750 | 25,250 | 101 |

| CIFAR-100[41] | 50,000 | 10,000 | 100 |

| CIFAR-10[41] | 50,000 | 10,000 | 10 |

| SVHN [42] | 73,257 | 26,032 | 10 |

| STL-10 [43] | 5,000 | 8,000 | 10 |

| Clothing-1M [44] | 1,047,570 | 10,526 | 14 |

• Food-101 [39] consists of 101K food images across 101 categories. Each class contains 1,000 images, divided into 750 training images and 250 test images.

• Food-101 [39] 包含101个类别的10.1万张食物图像。每个类别包含1,000张图像,其中750张为训练图像,250张为测试图像。

• i Naturalist [40] is derived from the i Naturalist community, which documents biodiversity across the globe. It contains 256,213 training images and 3,030 test images, covering 1,010 classes of living organisms.

• i Naturalist [40] 源自记录全球生物多样性的 i Naturalist 社区,包含 256,213 张训练图像和 3,030 张测试图像,涵盖 1,010 类生物体。

• CIFAR-100 [41] covers a diverse range of real-world objects and animals. It contains 60K $32\times32$ images divided into 100 categories, with each class containing 500 training images and 100 testing images.

• CIFAR-100 [41] 涵盖了多样化的现实世界物体和动物。它包含 6 万张 $32\times32$ 图像,分为 100 个类别,每个类别包含 500 张训练图像和 100 张测试图像。

• CIFAR-10 [41] comprises 60K $32\times32$ color images divided into 10 distinct categories, with each class containing 5,000 training images and 1,000 testing images.

• CIFAR-10 [41] 包含 6 万张 $32\times32$ 的彩色图像,分为 10 个不同类别,每个类别包含 5,000 张训练图像和 1,000 张测试图像。

• SVHN [42] is a real-world dataset for digit recognition obtained from house numbers in Google Street View images. It contains 73,257 training samples and 26,032 testing samples across 10 digit classes, with an additional 531,131 supplementary images.

• SVHN [42] 是一个真实场景下的数字识别数据集,源自 Google 街景图像中的门牌号。它包含 10 个数字类别的 73,257 个训练样本和 26,032 个测试样本,另有 531,131 张补充图像。

• STL-10 [43] consists of 13K $.96\times96$ color images divided into 10 classes. Each category includes 500 labeled training images and 800 testing images, supplemented by 100K unlabeled images.

• STL-10 [43] 包含13,000张96×96像素的彩色图像,分为10个类别。每个类别包含500张带标注的训练图像和800张测试图像,并额外提供10万张未标注图像。

• Clothing-1M [44] is a large-scale dataset containing approximately 1 million images of clothing collected from online shopping websites. The images are annotated with noisy labels across 14 categories, and the dataset is split into 47,570 clean labeled training images, 1M noisy labeled training images, 14,313 validation images and 10,526 testing images, making it a challenging benchmark for learning with noisy labels.

• Clothing-1M [44] 是一个包含约100万张从购物网站收集的服装图像的大规模数据集。这些图像被标注了14个类别的噪声标签,数据集划分为47,570张干净标注的训练图像、100万张噪声标注的训练图像、14,313张验证图像和10,526张测试图像,使其成为带噪声标签学习的一个具有挑战性的基准。

Architectures. For transformer neural networks, we utilize the standard Vision Transformer (ViT) architectures [45], which consist of a stack of Transformer blocks. Each block includes a multi-head self-attention layer and a multi-layer perceptron (MLP) block. We use average pooling of the ViT output for classification. Following Semi-ViT, we directly use the pretrained ViT-Small from DINO [46], ViT-Base and ViT-Huge from MAE [47], which have learned useful visual representations for downstream tasks. For convolutional neural networks, we utilize the standard ConvNeXt architecture [48], which incorporates mixup strategy [49] for enhanced results, instead of the traditional ResNet [50]. Note that self-supervised pre training is optional in our methods. We train the ConvNeXt architecture from scratch without any pre training.

架构。对于Transformer神经网络,我们采用标准的Vision Transformer (ViT)架构[45],该架构由一系列Transformer模块堆叠而成。每个模块包含多头自注意力层和多层感知机(MLP)模块。我们使用ViT输出的平均池化进行分类。遵循Semi-ViT的做法,我们直接使用DINO[46]预训练的ViT-Small、MAE[47]提供的ViT-Base和ViT-Huge,这些模型已学习到对下游任务有用的视觉表征。对于卷积神经网络,我们采用标准ConvNeXt架构[48],该架构融合了mixup策略[49]以提升性能,而非传统的ResNet[50]。需注意自监督预训练在我们的方法中是可选项。ConvNeXt架构完全从头开始训练,未使用任何预训练权重。

Implementation details. Our training protocol largely follows the good practice in Semi-ViT, with minor differences. The optimization of model is performed using AdamW [51], with linear learning rate scaling rule [52] $l r=b a s e_l r\times b a t c h s i z e/256$ and cosine learning rate decay schedule [53]. Additionally, we incorporate label smoothing [54], drop path [55], and cutmix [56] in our experiments. For fair comparisons, we use a center crop of $224\times224$ during training and testing. The data augmentation strategy follows the methods described in [35, 57, 58]. Detailed hyper-parameter settings are provided in Table 10.

实现细节。我们的训练方案基本遵循 Semi-ViT 的良好实践,仅有细微差异。模型优化使用 AdamW [51] ,采用线性学习率缩放规则 [52] $l r=b a s e_l r\times b a t c h s i z e/256$ 和余弦学习率衰减策略 [53] 。此外,我们在实验中引入了标签平滑 [54] 、随机路径丢弃 [55] 和 CutMix [56] 。为确保公平比较,训练和测试阶段均采用 $224\times224$ 的中心裁剪。数据增强策略遵循 [35, 57, 58] 所述方法。详细超参数设置见 表 10 。

4.2. Comparison with baselines

4.2. 与基线对比

Table 3 presents a comparison of Super-SST against supervised baselines and Semi-SST against semi-supervised baselines. This comparison covers both CNN-based and Transformer-based architectures across various datasets, including ImageNet-1K, CIFAR-100, Food-101, and i Naturalist, with $1%$ and $10%$ labeled data. Due to computational resource constraints, we only performed experiments with ConvNeXt-T on $10%$ ImageNet-1K labels.

表 3: Super-SST与监督基线方法、Semi-SST与半监督基线方法的对比结果。该对比涵盖了基于CNN和Transformer的多种架构,涉及ImageNet-1K、CIFAR-100、Food-101及iNaturalist数据集,并测试了1%和10%标注数据比例。由于计算资源限制,我们仅在10%标注数据的ImageNet-1K上进行了ConvNeXt-T架构的实验。

Table 3 Comparison with both supervised and semi-supervised baselines across various datasets and architectures. The performance gaps to the baselines are indicated in brackets. The results of supervised baseline are implemented by us. Semi-ViT did not conduct experiments on CIFAR-100, resulting in the absence of corresponding results.

表 3 不同数据集和架构下有监督与半监督基线的对比。括号内表示与基线的性能差距。有监督基线结果由我们实现。Semi-ViT未在CIFAR-100上进行实验,故缺少相应结果。

| 数据集 | 架构 | 参数量 | 方法 | 1% | 10% |

|---|---|---|---|---|---|

| ImageNet-1K | ViT-Small | 22M | Supervised Super-SST (ours) | 60.3 70.4 (+10.1) | 74.3 78.3 (+4.0) |

| Semi-ViT Semi-SST (ours) | 68.0 71.4 (+3.4) | 77.1 78.6 (+1.5) | |||

| ViT-Huge | 632M | Supervised Super-SST (ours) | 73.7 80.3 (+6.6) | 81.8 84.8 (+3.0) | |

| Semi-ViT Semi-SST (ours) | 80.0 80.7 (+0.7) | 84.3 84.9 (+0.6) | |||

| ConvNeXt-T | 28M | Supervised Super-SST (ours) | 65.8 77.9 (+12.1) | ||

| Semi-ViT Semi-SST (ours) | 74.1 78.6 (+4.5) | ||||

| CIFAR-100 | ViT-Small | 22M | Supervised Super-SST (ours) | 53.7 68.1 (+14.4) | 79.8 84.1 (+4.3) |

| Semi-ViT Semi-SST (ours) | 72.3 | 84.7 | |||

| Food-101 | ViT-Base | 86M | Supervised Super-SST (ours) | 62.7 83.4 (+20.7) | 84.6 91.1 (+6.5) |

| Semi-ViT Semi-SST (ours) | 82.1 86.5 (+4.4) | 91.3 | |||

| iNaturalist | ViT-Base | 86M | Supervised Super-SST (ours) | 22.6 | 91.5 (+0.2) 57.5 |

| Semi-ViT Semi-SST (ours) | 25.6 (+3.0) 32.3 33.4 (+1.1) | 65.3 (+7.8) 67.7 68.1 (+0.4) |

Effectiveness and generalization. As shown in Table 3, both Super-SST and Semi-SST consistently outperform their respective supervised and semi-supervised baselines across various datasets, demonstrating strong generalization capabilities. Notably, both CNN and Transformer architectures benefit significantly from Super-SST and Semi-SST, underscoring their effectiveness and robustness across different architectures.

效果与泛化性。如表 3 所示,Super-SST 和 Semi-SST 在各类数据集上均稳定超越其对应的全监督与半监督基线方法,展现出强大的泛化能力。值得注意的是,CNN 和 Transformer 架构均显著受益于 Super-SST 和 Semi-SST,这印证了二者在不同架构间的有效性与鲁棒性。

Data Efficiency. Table 3 also demonstrates that both Super-SST and Semi-SST maintain robust performance improvements across different percentages of labeled data. They achieve a relative improvement of $0.7%-20.7%$ with $1%$ labeled data and $0.2%{-}12.1%$ with $10%$ labeled data in Top-1 accuracy. The improvements are more pronounced with a smaller percentage of labeled data $(e.g.,1%)$ , indicating that Super-SST and Semi-SST are particularly effective in low-data regimes, making them suitable for data-efficient learning. As the amount of labeled data increases from $1%$ to $10%$ , the relative improvements decrease, indicating diminishing returns with more labeled data.

数据效率。表 3 还表明,Super-SST 和 Semi-SST 在不同比例的标注数据下均保持了稳健的性能提升。在 Top-1 准确率上,它们使用 1% 标注数据时实现了 $0.7%-20.7%$ 的相对提升,使用 10% 标注数据时实现了 $0.2%{-}12.1%$ 的相对提升。标注数据比例较小时 (例如 1%),改进更为显著,这表明 Super-SST 和 Semi-SST 在低数据场景下特别有效,适用于高效数据学习。随着标注数据量从 $1%$ 增加到 $10%$,相对改进逐渐减小,表明更多标注数据带来的收益递减。

S cal ability. As presented in Table 3, both Super-SST and Semi-SST show significant improvements with larger architectures like ViT-Huge, indicating better s cal ability with increased model capacity. For example, with $1%/10%$ ImageNet-1K labeled data and ViT-Huge, Super-SST shows a $6.6%/3.0%$ relative improvement over the supervised baseline, while Semi-SST shows a $0.7%/0.6%$ relative improvement over the semi-supervised baseline.

可扩展性。如表 3 所示,Super-SST 和 Semi-SST 在使用 ViT-Huge 等更大架构时均显示出显著提升,表明模型容量增加时具有更好的可扩展性。例如,在 $1%/10%$ ImageNet-1K 标注数据和 ViT-Huge 条件下,Super-SST 相比监督基线实现了 $6.6%/3.0%$ 的相对提升,而 Semi-SST 在半监督基线上实现了 $0.7%/0.6%$ 的相对提升。

Super-SST vs. Semi-SST. Both Super-SST and Semi-SST demonstrate competitive performance across various datasets and architectures, with Semi-SST generally having a slight edge. As shown in Table 3, Semi-SST consistently achieves higher Top-1 accuracy compared to Super-SST. However, Super-SST typically delivers a larger relative improvement over its baselines. For example, using $1%$ ImageNet-1K labeled data and ViT-Small, Super-SST / Semi-SST achieves $70.4%/71.4%$ Top-1 accuracy, while the relative improvement over supervised / semi-supervised baseline is $10.1%/3.4%$ .

Super-SST vs. Semi-SST。Super-SST和Semi-SST在各类数据集与架构中均展现出竞争力,其中Semi-SST通常略占优势。如表3所示,Semi-SST的Top-1准确率始终高于Super-SST。但Super-SST相比其基线通常能带来更大的相对提升。例如,在使用$1%$ ImageNet-1K标注数据和ViT-Small架构时,Super-SST/Semi-SST分别达到$70.4%/71.4%$的Top-1准确率,而相较于监督/半监督基线的相对提升幅度为$10.1%/3.4%$。

Table 4 Comparison with previous SOTA SSL methods across both CNN-based and Transformer-based architectures on $1%$ and $10%$ ImageNet-1K labeled data. Our Super-SST and Semi-SST methods significantly outperform previous SOTA SSL counterparts.

表 4: 在基于 CNN 和 Transformer 架构的 $1%$ 和 $10%$ ImageNet-1K 标注数据上,与之前 SOTA (State-of-the-Art) SSL (自监督学习) 方法的对比。我们的 Super-SST 和 Semi-SST 方法显著优于之前的 SOTA SSL 方法。

| 方法 | 架构 | 参数量 | ImageNet-1K |

|---|---|---|---|

| 1% | |||

| NNO | |||

| UDA [11] | ResNet-50 | 26M | - |

| SimCLRv2 [59] | ResNet-50 | 26M | 60.0 |

| FixMatch [6] | ResNet-50 | 26M | - |

| MPL [28] | ResNet-50 | 26M | - |

| EMAN [29] | ResNet-50 | 26M | 63.0 |

| CoMatch [60] | ResNet-50 | 26M | 66.0 |

| PAWS [61] | ResNet-50 | 26M | 66.5 |

| SimMatch [62] | ResNet-50 | 26M | 67.2 |

| SimMatchV2 [63] | ResNet-50 | 26M | 71.9 |

| Semi-ViT [35] | ConvNeXt-T | 28M | 71.3 |

| Super-SST (ours) | ConvNeXt-T | 28M | 74.1 |

| Semi-SST (ours) | ConvNeXt-T | 28M | - |

| Transformer | |||

| SemiFormer [64] | ViT-S+Conv | 42M | - |

| SimMatchV2 [63] | ViT-Small | 22M | - |

| Semi-ViT [35] | ViT-Small | 22M | 63.7 |

| Semi-ViT [35] | ViT-Base | 86M | 68.0 |

| Semi-ViT [35] | ViT-Large | 307M | 71.0 |

| Semi-ViT [35] | ViT-Huge | 632M | 77.3 |

| - | ViT-Huge | 632M | 80.0 |

| Super-SST (ours) | ViT-Small | 22M | 70.4 |

| Super-SST (ours, distilled) | ViT-Small | 22M | 76.9 |

| Semi-SST (ours) | ViT-Small | 22M | 71.4 |

| Super-SST (ours) | ViT-Huge | 632M | 80.3 |

| Semi-SST (ours) | ViT-Huge | 632M | 80.7 |

In summary, Table 3 demonstrates that Super-SST and Semi-SST offer significant performance improvements across various datasets and architectures, particularly excelling in low-data scenarios and scaling well with model capacity. While Semi-SST often achieves higher Top-1 accuracy compared to Super-SST, Super-SST generally delivers larger improvement over its baselines. Our methods are effective, scalable, and broadly applicable, making them valuable for practical applications where labeled data is limited.

表 3 表明,Super-SST 和 Semi-SST 在各种数据集和架构上均实现了显著的性能提升,尤其在低数据场景下表现优异,并能随着模型容量扩展保持良好效果。虽然 Semi-SST 的 Top-1 准确率通常高于 Super-SST,但 Super-SST 相比其基线模型的改进幅度更大。我们的方法高效、可扩展且普适性强,对于标注数据有限的实际应用场景具有重要价值。

4.3. Comparison with SOTA SSL models

4.3. 与SOTA SSL模型的对比

Table 4 presents a comprehensive comparison between our methods and previous SOTA SSL methods, including UDA [11], SimCLRv2 [59], FixMatch [6], MPL [28], EMAN [29], CoMatch [60], PAWS [61], SimMatch [62], SimMatchV2 [63], and Semi-ViT [35], focusing on their performance with $1%$ and $10%$ ImageNet-1K labeled data. Our methods are evaluated across both CNN-based and Transformer-based architectures. Following Semi-ViT, we utilize the recently proposed ConvNeXt instead of the traditional ResNet.

表 4: 全面对比了我们的方法与先前 SOTA (state-of-the-art) SSL (半监督学习) 方法在 $1%$ 和 $10%$ ImageNet-1K 标注数据下的性能表现,包括 UDA [11]、SimCLRv2 [59]、FixMatch [6]、MPL [28]、EMAN [29]、CoMatch [60]、PAWS [61]、SimMatch [62]、SimMatchV2 [63] 和 Semi-ViT [35]。我们的方法在基于 CNN 和 Transformer 的架构上均进行了评估。遵循 Semi-ViT 的做法,我们采用最新提出的 ConvNeXt 替代传统 ResNet。

Superiority and robustness. For CNN-based models, Super-SST and Semi-SST achieve $77.9%$ and $78.6%$ Top-1 accuracy, respectively, with ConvNeXt-T on $10%$ ImageNet-1K labeled data, surpassing all previous counterparts. For Transformer-based models, Super-SST / Semi-SST achieves $70.4%\textit{/}71.4%$ Top-1 accuracy with ViT-Small on $1%$ labeled data, and $78.3%/78.6%$ on $10%$ labeled data, significantly outperforming previous counterparts. Both Super-SST and Semi-SST consistently outperform their counterparts across CNN-based and Transformer-based architectures, highlighting the superiority and robustness of our methods.

卓越性与鲁棒性。基于CNN的模型中,Super-SST和Semi-SST在ConvNeXt-T架构下仅使用10%的ImageNet-1K标注数据,分别取得77.9%和78.6%的Top-1准确率,超越所有同类方法。基于Transformer的模型中,Super-SST/Semi-SST在ViT-Small架构下仅用1%标注数据达到70.4%/71.4%的Top-1准确率,使用10%标注数据时提升至78.3%/78.6%,显著优于先前方法。无论是CNN还是Transformer架构,Super-SST与Semi-SST均展现出稳定的性能优势,证明了我们方法的卓越性和鲁棒性。

S cal ability and data-efficiency. Scalable and data-efficient algorithms are crucial to semi-supervised learning. As demonstrated in Table 4, both Super-SST and Semi-SST can easily scale up to larger architectures like ViT-Huge. Notably, Semi-SST-ViT-Huge achieves the best $80.7%/84.9%$ Top-1 accuracy on $1%/10%$ labeled data, setting new SOTA performance on the highly competitive ImageNet-1K SSL benchmarks without external data. Moreover, larger architectures like ViT-Huge generally achieve higher accuracy compared to smaller architectures, demonstrating that larger architectures are strong data-efficient learners. This trend is consistent in both $1%$ and $10%$ labeled data scenarios, underscoring the potential for larger models to better utilize scarce labeled data.

可扩展性和数据效率。可扩展且数据高效的算法对半监督学习至关重要。如表4所示,Super-SST和Semi-SST都能轻松扩展到ViT-Huge等更大架构。值得注意的是,Semi-SST-ViT-Huge在1%/10%标注数据上实现了80.7%/84.9%的Top-1准确率,在没有外部数据的情况下,在竞争激烈的ImageNet-1K SSL基准测试中创造了新的SOTA性能。此外,与较小架构相比,ViT-Huge等较大架构通常能获得更高准确率,表明较大架构是强大的数据高效学习器。这一趋势在1%和10%标注数据场景中保持一致,凸显了较大模型更好利用稀缺标注数据的潜力。

Table 5 Error Rates $(%)$ and Rankings on Classical SSL Benchmarks. The experimental settings in this table are consistent with those of SimMatchV2 [63] and USB [72], with all non-ours results directly sourced from these studies. Error rates and standard deviations are reported as the average over three runs. For each experimental setting, the best result is highlighted in bold, while the second-best is underlined.

表 5: 经典 SSL 基准测试的错误率 $(%)$ 和排名。本表中的实验设置与 SimMatchV2 [63] 和 USB [72] 保持一致,所有非本研究的实验结果均直接引自这些文献。错误率和标准差报告为三次运行的平均值。每种实验设置下的最佳结果以粗体标出,次优结果以下划线标出。

| Dataset | CIFAR-10 | CIFAR-100 | SVHN | STL-10 | Friedman Final | Mean | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| #Label | 40 | 250 | 4000 | 400 | 2500 | 10000 | 40 | 250 | 1000 | 40 | 250 | 1000 | rank | rank | errorrate |

| Fully-Supervised | 4.57±0.06 | 18.96±0.06 | 2.14±0.01 | = | |||||||||||

| Supervised | 77.18±1.32 | 56.24±3.41 | 16.10±0.32 | 89.60±0.43 | 58.33±1.41 | 36.83±0.21 | 82.68±1.91 | 24.17±1.65 | 12.19±0.23 | 75.4±0.66 | 55.07±1.83 | 35.42±0.48 | |||

| 13.63±0.07 | 87.67±0.79 | 36.73±0.05 | 80.07±1.22 | 13.46±0.61 | 6.90±0.22 | 74.89±0.57 | 52.20±2.11 | 31.34±0.64 | 17 | ||||||

| II-Model Pseudo-Labeling | 76.35±1.69 | 48.73±1.07 | 15.32±0.35 | 88.18±0.89 | 56.40±0.69 | 9.37±0.42 | 51.90±1.87 | 16.17 | 48.20 | ||||||

| Mean Teacher | 75.95±1.86 72.42±2.10 | 51.12±2.91 | 8.29±0.10 | 79.96±0.53 | 55.37±0.48 44.37±0.60 | 36.58±0.12 | 75.98±5.36 | 16.47±0.59 3.44±0.02 | 3.28±0.07 | 74.02±0.47 72.90±0.83 | 49.30±2.09 | 30.77±0.04 | 16.08 13.67 | 16 14 | 48.42 40.01 |

| VAT | 78.58±2.78 | 37.56±4.90 28.87±3.62 | 10.90±0.16 | 83.60±4.21 | 46.20±0.80 | 31.39±0.11 32.14±0.31 | 49.34±7.90 84.12±3.18 | 3.38±0.12 | 2.87±0.18 | 73.33±0.47 | 57.78±1.47 | 27.92±1.65 40.98±0.96 | 14.67 | 15 | 45.23 |

| MixMatch | 35.18±3.87 | 13.00±0.80 | 6.55±0.05 | 64.91±3.34 | 39.29±0.13 | 27.74±0.27 | 27.77±5.43 | 4.69±0.46 | 3.85±0.28 | 49.84±0.58 | 32.05±1.16 | 20.17±0.67 | 12.92 | 13 | 27.09 |

| ReMixMatch | 8.13±0.58 | 6.34±0.22 | 4.65±0.09 | 41.60±1.48 | 25.72±0.07 | 20.04±0.13 | 16.43±3.77 | 5.65±0.35 | 5.36±0.58 | 27.87±3.85 | 11.14±0.52 | 6.44±0.15 | 9.50 | 12 | 14.95 |

| UDA | 10.01±3.34 | 5.23±0.08 | 4.36±0.09 | 45.48±0.37 | 27.51±0.28 | 23.12±0.45 | 5.28±4.02 | 1.93±0.03 | 1.94±0.02 | 40.094.03 | 10.11±1.15 | 6.23±0.28 | 8.00 | 10 | 15.11 |

| FixMatch | 12.66±4.49 | 4.95±0.10 | 4.26±0.01 | 45.38±2.07 | 27.71 ±0.42 | 22.06±0.10 | 3.37±1.01 | 1.97±0.01 | 2.02±0.03 | 38.19±4.76 | 8.64±0.84 | 5.82±0.06 | 6.83 | 7 | 14.75 |

| Dash | 9.29±3.28 | 5.16±0.28 | 4.36±0.10 | 47.49±1.05 | 27.47 ±0.38 | 21.89±0.16 | 5.26±2.02 | 2.01±0.01 | 2.08±0.09 | 42.00±4.94 | 10.50±1.37 | 6.30±0.49 | 8.25 | 11 | 15.32 |

| CoMatch | 6.51±1.18 | 5.35±0.14 | 4.27±0.12 | 53.41±2.36 | 29.78±0.11 | 22.11±0.22 | 8.20±5.32 | 2.16±0.04 | 2.01±0.04 | 13.74±4.20 | 7.63±0.94 | 5.71 ±0.08 | 7.17 | 8 | 13.41 |

| CRMatch | 13.62±2.62 | 4.61±0.17 | 3.65±0.04 | 37.76±1.45 | 24.13±0.16 | 19.89±0.23 | 2.60±0.77 | 1.98±0.04 | 1.95±0.03 | 33.73±1.17 | 14.87 ±5.09 | 6.53±0.36 | 5.67 | 4 | 13.78 |

| FlexMatch | 5.29±0.29 | 4.97 ±0.07 | 4.24±0.06 | 40.73±1.44 | 26.17±0.18 | 21.75±0.15 | 5.422.83 | 8.74±3.32 | 7.90±0.30 | 29.12±5.04 | 9.85±1.35 | 6.08±0.34 | 7.58 | 9 | 14.19 |

| AdaMatch | 5.09±0.21 | 5.13±0.05 | 4.36±0.05 | 37.08±1.35 | 26.66±0.33 | 21.99±0.15 | 6.14±5.35 | 2.13±0.04 | 2.02±0.05 | 19.95±5.17 | 8.59±0.43 | 6.01±0.02 | 6.00 | 5 | 12.10 |

| SimMatch | 5.38±0.01 | 5.36±0.08 | 4.41±0.07 | 39.32±0.72 | 26.21±0.37 | 21.50±0.11 | 7.60±2.11 | 2.48±0.61 | 2.05±0.05 | 16.98±4.24 | 8.27±0.40 | 5.74±0.31 | 6.58 | 6 | 12.11 |

| SimMatchV2 | 4.90±0.16 | 5.04±0.09 | 4.33±0.16 | 36.68±0.86 | 26.66±0.38 | 21.37±0.20 | 7.92±2.80 | 2.92±0.81 | 2.85±0.91 | 15.85±2.62 | 7.54±0.81 | 5.65±0.26 | 5.25 | 3 | 11.81 |

| Super-SST (ours) | 9.59±0.32 | 3.37±0.22 | 1.61±0.18 | 35.50±0.58 | 14.20±0.17 | 29.41±1.55 | 4.17±0.49 | 3.10±0.32 | |||||||

| Semi-SST (ours) | 6.35±0.28 | 2.42±0.13 | 1.41±0.10 | 31.39±0.47 | 18.51±0.36 16.62±0.28 | 13.50±0.14 | 23.18±1.38 | 3.36±0.17 | 2.97±0.11 | 7.33±0.31 3.920.20 | 1.59±0.12 1.40±0.10 | 1.45±0.10 1.36±0.08 | 5.08 3.58 | 2-1 | 10.82 8.99 |

Knowledge distillation. We have shown that larger architectures like ViT-Huge generally achieve higher accuracy than smaller ones, particularly in low-data scenarios. However, large models with high capacity require significant computational resources during deployment, making them impractical and uneconomical for real-world applications. Conversely, small models are easier to deploy but tend to perform worse compared to large models. This raises an important question: how can we train a small, high-performance model suitable for practical deployment in low-data scenarios? To address this, we conduct huge-to-small knowledge distillation [65, 66, 67, 68, 69, 70, 71] experiments. Initially, we train a teacher model (i.e., ViT-Huge) using Super-SST on $1%/10%$ original labeled data, achieving $80.3%/84.8%$ Top-1 accuracy. We then use the well-trained teacher model and SAT technique to obtain selected pseudo-labeled data. Finally, we distill the knowledge from the teacher model into the student model (i.e., ViT-Small). The student model was supervised trained on a combination of few original labeled data and selected pseudo-labeled data, achieving $76.9%$ and $80.3%$ Top-1 accuracy in $1%$ and $10%$ low-data scenarios, respectively. This represents a substantial $6.5%$ and $2.0%$ relative improvement over the Super-SST-ViT-Small. Thus, we successfully develop a compact, lightweight and high-performance model that is well-suited for practical deployment.

知识蒸馏。我们已经证明,像ViT-Huge这样的大型架构通常比小型架构获得更高的准确率,尤其是在低数据场景下。然而,高容量的大型模型在部署时需要大量计算资源,这使得它们在实际应用中不切实际且不经济。相反,小型模型更容易部署,但性能往往不如大型模型。这就引出了一个重要问题:如何在低数据场景下训练出适合实际部署的小型高性能模型?为了解决这个问题,我们进行了大模型到小模型的知识蒸馏[65, 66, 67, 68, 69, 70, 71]实验。首先,我们使用Super-SST在$1%/10%$的原始标记数据上训练教师模型(即ViT-Huge),达到了$80.3%/84.8%$的Top-1准确率。然后,我们使用训练好的教师模型和SAT技术获取选定的伪标记数据。最后,我们将教师模型的知识蒸馏到学生模型(即ViT-Small)中。学生模型在少量原始标记数据和选定伪标记数据的组合上进行监督训练,在$1%$和$10%$的低数据场景下分别达到了$76.9%$和$80.3%$的Top-1准确率。这相对于Super-SST-ViT-Small分别实现了显著的$6.5%$和$2.0%$的相对提升。因此,我们成功开发出了一个紧凑、轻量且高性能的模型,非常适合实际部署。

The results presented in Table 5 clearly highlight the superior performance of Semi-SST and Super-SST compared to existing SSL methods across multiple benchmarks. Notably, Semi-SST achieves the lowest mean error rate of $8.99%$ , ranking first overall, while Super-SST follows closely with a mean error rate of $10.82%$ , securing the second position. Notably, despite the availability of 531,131 additional training images in SVHN and 100,000 unlabeled images in STL-10, our method achieves competitive performance without leveraging these extra resources, underscoring its data-efficient learning capability. To further assess the statistical significance of our results, we conducted a Friedman test, which yielded a p-value of $3.11\times10^{-25}$ , indicating a statistically significant difference among the evaluated methods. The Friedman rankings for Semi-SST and Super-SST are 1st and 2nd, respectively, suggesting their consistent advantage across multiple benchmarks. These results provide strong statistical support for the effectiveness of our approach while reducing the likelihood that the observed improvements are due to random variation. Despite Semi-SST and Super-SST demonstrate strong overall performance, they exhibit relatively higher error rates on the SVHN dataset with only 40 labeled samples. This suggests that our methods, like other SSL techniques, may face challenges in scenarios with extremely limited labeled data. Future work could explore enhancements to further improve performance in such cases. To ensure consistency with the experimental configurations in this paper, we utilize a self-supervised pretrained ViT-Small from DINO, which differs from the architectures used in prior works such as SimMatchV2 [63] and USB [72]. While this setup may result in a less direct comparison, it contributes valuable data points to the research community, offering insights into the performance of transformer-based architectures in SSL.

表5所示结果清晰表明,Semi-SST和Super-SST在多项基准测试中均优于现有SSL方法。其中Semi-SST以$8.99%$的平均错误率位居榜首,Super-SST以$10.82%$紧随其后。值得注意的是,尽管SVHN提供额外531,131张训练图像且STL-10包含10万张未标注图像,我们的方法在不使用这些额外资源的情况下仍保持竞争力,凸显其高效的数据学习能力。Friedman检验得出$3.11\times10^{-25}$的p值,证实各方法间存在统计显著性差异——Semi-SST与Super-SST分列Friedman排名第一、二位,表明其在多基准测试中的稳定优势。这些结果为方法有效性提供了强统计支撑,同时降低观测改进源于随机变异的可能性。尽管整体表现优异,但Semi-SST和Super-SST在仅含40个标注样本的SVHN数据集上错误率相对较高,这说明与其他SSL技术类似,我们的方法在标注数据极度稀缺的场景仍存在挑战。未来工作可探索针对性优化方案。为确保实验配置一致性,我们采用DINO自监督预训练的ViT-Small架构,这与SimMatchV2 [63]、USB [72]等先前研究的架构存在差异。虽然这种设置可能导致对比不够直接,但为研究社区提供了Transformer架构在SSL中性能的宝贵数据参考。

Table 6 Comparison with fully-supervised methods on $100%$ , $10%$ , and $1%$ ImageNet-1K labeled data. † indicates result at a resolution of $224\times224$ for fair comparisons. Our methods significantly reduce the reliance on human annotation.

表 6: 在 $100%$、$10%$ 和 $1%$ ImageNet-1K 标注数据上与全监督方法的对比。† 表示分辨率为 $224\times224$ 的公平比较结果。我们的方法显著降低了对人工标注的依赖。

| 方法 | 架构 | 参数量 | ImageNet-1K |

|---|---|---|---|

| 100% | |||

| DeiT [73] | ViT-Small | 22M | 79.8 |

| Super-SST (ours) | ViT-Small | 22M | |

| Super-SST (ours, distilled) | ViT-Small | 22M | |

| Semi-SST (ours) | ViT-Small | 22M | - |

| DeiT-1llI [74] | ViT-Huge | 632M | 84.8 |

| Super-SST (ours) | ViT- |