ChatCAD+: Towards a Universal and Reliable Interactive CAD using LLMs

ChatCAD+: 基于大语言模型的通用可靠交互式CAD系统

Zihao Zhao, Sheng Wang, Jinchen Gu, Yitao Zhu, Lanzhuju Mei, Zixu Zhuang, Zhiming Cui, Qian Wang, Dinggang Shen, Fellow, IEEE

Zihao Zhao, Sheng Wang, Jinchen Gu, Yitao Zhu, Lanzhuju Mei, Zixu Zhuang, Zhiming Cui, Qian Wang, Dinggang Shen, Fellow, IEEE

Abstract— The integration of Computer-Aided Diagnosis (CAD) with Large Language Models (LLMs) presents a promising frontier in clinical applications, notably in automating diagnostic processes akin to those performed by radiologists and providing consultations similar to a virtual family doctor. Despite the promising potential of this integration, current works face at least two limitations: (1) From the perspective of a radiologist, existing studies typically have a restricted scope of applicable imaging domains, failing to meet the diagnostic needs of different patients. Also, the insufficient diagnostic capability of LLMs further undermine the quality and reliability of the generated medical reports. (2) Current LLMs lack the requisite depth in medical expertise, rendering them less effective as virtual family doctors due to the potential unreliability of the advice provided during patient consultations. To address these limitations, we introduce ChatCAD+, to be universal and reliable. Specifically, it is featured by two main modules: (1) Reliable Report Generation and (2) Reliable Interaction. The Reliable Report Generation module is capable of interpreting medical images from diverse domains and generate high-quality medical reports via our proposed hierarchical in-context learning. Concurrently, the interaction module leverages up-to-date information from reputable medical websites to provide reliable medical advice. Together, these designed modules synergize to closely align with the expertise of human medical professionals, offering enhanced consistency and reliability for interpretation and advice. The source code is available at GitHub.

摘要—计算机辅助诊断(CAD)与大语言模型的结合为临床应用开辟了前景广阔的领域,尤其在实现类似放射科医师的自动化诊断流程和提供虚拟家庭医生般的咨询服务方面。尽管这种结合潜力巨大,现有研究仍面临至少两个局限:(1) 从放射科医师视角看,现有研究通常局限于特定影像领域,难以满足不同患者的诊断需求。同时,大语言模型诊断能力的不足进一步影响了生成医疗报告的质量与可靠性。(2) 当前大语言模型缺乏足够的医学专业深度,导致其在作为虚拟家庭医生时可能提供不可靠的诊疗建议。为解决这些局限,我们推出具备普适性与可靠性的ChatCAD+系统,其核心包含两大模块:(1) 可靠报告生成模块通过提出的分层上下文学习技术,能解读多领域医学影像并生成高质量报告;(2) 可靠交互模块利用权威医疗网站的最新信息提供可信诊疗建议。这些模块协同工作,使系统表现更接近人类医疗专家的专业水平,显著提升诊断解读与建议的一致性和可靠性。源代码已发布于GitHub平台。

Index Terms— Large Language Models, Multi-modality System, Medical Dialogue, Computer-Assisted Diagnosis

索引术语 - 大语言模型 (Large Language Models)、多模态系统 (Multi-modality System)、医疗对话、计算机辅助诊断

Zihao Zhao, Sheng Wang, Jinchen Gu and Yitao Zhu contributed equally and are listed as first authors.

赵子豪、王升、顾金辰和朱一涛贡献均等,并列为第一作者。

Zihao Zhao, Jinchen Gu, Yitao Zhu, Lanzhuju Mei, and Zhiming Cui are with the School of Biomedical Engineering, Shanghai Tech University, Shanghai 201210, China (email: zi hao zhao 10@gmail.com, gujch12022,zhuyt, meilzhj, cuizm @shanghai tech.edu.cn)

赵子豪、顾金辰、朱逸韬、梅兰竹菊和崔志明就职于上海科技大学生物医学工程学院,上海 201210,中国 (email: zihao.zhao10@gmail.com, gujch12022, zhuyt, meilzhj, cuizm@shanghaitech.edu.cn)

Fig. 1. Overview of our proposed ChatCAD $^+$ system. (a) For patients seeking a diagnosis, ChatCAD $^+$ generates reliable medical reports based on the input medical image(s) by referring to local report database. (b) Additionally, for any inquiry from patients, ChatCAD $^+$ retrieves related knowledge from online database and lets large language model generate reliable response.

图 1: 我们提出的 ChatCAD$^+$系统概述。(a) 对于寻求诊断的患者,ChatCAD$^+$通过参考本地报告数据库,基于输入的医学图像生成可靠的医疗报告。(b) 此外,针对患者的任何查询,ChatCAD$^+$会从在线数据库中检索相关知识,并让大语言模型生成可靠回答。

Corresponding authors: Qian Wang and Dinggang Shen.

通讯作者:Qian Wang 和 Dinggang Shen。

I. INTRODUCTION

I. 引言

Sheng Wang and Zixu Zhuang are with the School of Biomedical Engineering, Shanghai Jiao Tong University, Shanghai 200030, China, Shanghai United Imaging Intelligence Co., Ltd., Shanghai 200230, China, and also Shanghai Tech University, Shanghai 201210, China. (email: {wsheng, zixuzhuang}@sjtu.edu.cn)

王盛和庄子旭任职于上海交通大学生物医学工程学院(上海 200030)、上海联影智能医疗科技有限公司(上海 200230),同时隶属于上海科技大学(上海 201210)。(email: {wsheng, zixuzhuang}@sjtu.edu.cn)

Qian Wang and Dinggang Shen are with the School of Biomedical Engineering & State Key Laboratory of Advanced Medical Materials and Devices, Shanghai Tech University, Shanghai 201210, China, and Shanghai Clinical Research and Trial Center, Shanghai 201210, China. Dinggang Shen is also with Shanghai United Imaging Intelligence Co. Ltd., Shanghai 200230, China. (e-mail: {qianwang, dgshen}@shanghai tech.edu.cn)

王倩和沈定刚就职于上海科技大学生物医学工程学院及国家先进医疗材料与器械重点实验室(上海 201210),同时隶属于上海临床研究中心(上海 201210)。沈定刚还任职于上海联影智能医疗科技有限公司(上海 200230)(邮箱: {qianwang, dgshen}@shanghaitech.edu.cn)

ARGE Language Models (LLMs) have emerged as artificial intelligence that has been extensively trained on text data. Drawing on the combined power of sophisticated deep learning techniques, large-scale datasets, and increased model sizes, LLMs demonstrate extraordinary capabilities in understanding and generating human-like text. This is substantiated by significant projects like ChatGPT [1] and LLaMA [2]. These techniques are highly suitable for a wide range of scenarios, such as customer service, marketing, education, and healthcare consultation. Notably, ChatGPT has passed part of the US medical licensing exams, highlighting the potential of LLMs in the medical domain [3], [4].

大语言模型 (LLM) 已成为一种经过海量文本数据训练的人工智能技术。凭借复杂的深度学习技术、大规模数据集和不断增长的模型规模,大语言模型在理解和生成类人文本方面展现出非凡能力。ChatGPT [1] 和LLaMA [2] 等重大项目证实了这一点。这些技术非常适合客服、营销、教育和医疗咨询等广泛场景。值得注意的是,ChatGPT 已通过美国医师执照考试部分科目 [3] [4],彰显了大语言模型在医疗领域的潜力。

So far, several studies have been conducted to integrate LLMs into Computer-Assisted Diagnosis (CAD) systems of medical images. Conventional CAD typically operates following pure computer vision [5], [6] or vision-language paradigms [7], [8]. LLMs have shown ability to effectively interpret findings from medical images, thus mitigating limitations of only visual interpretation. For example, in our pilot study, we leverage the intermediate results obtained from image CAD networks and then utilize LLMs to generate final diagnostic reports [9].

目前已有若干研究致力于将大语言模型(LLM)整合到医学影像的计算机辅助诊断(CAD)系统中。传统CAD系统通常遵循纯计算机视觉[5][6]或视觉-语言范式[7][8]运行。研究表明,大语言模型能有效解析医学影像的检查结果,从而弥补纯视觉解读的局限性。例如在我们的试点研究中,我们利用图像CAD网络生成的中间结果,通过大语言模型最终生成诊断报告[9]。

Although some efforts have been made to combine CAD networks and LLMs [9]–[11], it should be noted that these studies are limited in their scopes, which often focus on specific image domains. That is, such a system may only support a single image modality, organ, or application (such as chest X-ray), which greatly limits general iz ability in the real clinical workflow. The primary reason for this limitation comes from notable topologic and semantic variations observed among medical image data, which present distinct challenges when attempting to encode various images with a unified model. Furthermore, although LLMs have demonstrated the capability to articulate medical concepts and clinical interpretations [12], [13], there still exists a significant gap between LLMs and radiologists. This gap undoubtedly undermines patient confidence in a LLM-generated report. Consequently, these issues hinder practical utilization of LLMs in automated generation of medical reports. In addition, it is also art i cul able that the integration of LLMs and CADs may lead to medical dialogue systems [14]–[16]. In this way, patients will be able to interact through LLMs and acquire more medical advice and explanation, while this functionality is often missing in conventional CAD systems. However, existing studies show that the general LLMs typically produce medical advice based solely on their encoded knowledge, without considering specific knowledge in the medical domain [15]. As a result, patients may receive unreliable responses, dwarfing trust in CADs and thereby hindering the use of such systems in real medical scenarios.

尽管已有研究尝试将CAD网络与大语言模型结合[9]–[11],但需注意这些研究往往局限于特定影像领域。此类系统通常仅支持单一影像模态、器官或应用场景(如胸部X光),极大限制了实际临床工作流程中的泛化能力。这种局限性的主要根源在于医学影像数据存在显著的拓扑结构和语义差异,使得统一模型编码多样化图像面临独特挑战。此外,尽管大语言模型已展现出阐述医学概念和临床解读的能力[12][13],但其与放射科医师之间仍存在显著差距,这种差距无疑会削弱患者对大语言模型生成报告的信任度,从而阻碍其在医学报告自动化生成中的实际应用。值得注意的是,大语言模型与CAD系统的结合可能催生医疗对话系统[14]–[16],使患者能通过大语言模型交互获取更多医疗建议与解释——这一功能在传统CAD系统中往往缺失。然而现有研究表明,通用大语言模型通常仅基于编码知识生成医疗建议,未充分考虑医学领域的专业知识[15],可能导致患者获得不可靠的反馈,进而削弱对CAD系统的信任,阻碍此类系统在真实医疗场景中的应用。

In order to tackle the challenges mentioned above, we propose ChatCAD $^+$ in this paper. And our contributions are made in the following aspects. (1) Universal image interpretation. Due to the difficulty in obtaining a unified CAD network tackling various images currently, ChatCAD $^+$ incorporates a domain identification module to work with a variety of CAD models (c.f. Fig. 2(a)). ChatCAD $^+$ can adaptively select a corresponding model given the input medical image. The tentative output of the CAD network is converted into text description to reflect image features, making it applicable for diagnostic reporting subsequently. (2) Hierarchical incontext learning for enhanced report generation. Top $k$ reports that are semantically similar to the LLM-generated report are retrieved from a clinic database via the proposed retrieval module (c.f. Fig. 3. The retrieved $k$ reports then serve as in-context examples to refine the LLM-generated report. (3) Knowledge-based reliable interaction. As illustrated in Fig. 1(b), ChatCAD $^+$ does not directly provide medical advice. Instead, it first seeks help via our proposed knowledge retrieval module for obtaining relevant knowledge from professional sources, e.g. Merck Manuals, Mayo Clinic, and Cleveland Clinic. Then, the LLM considers the retrieved knowledge as a reference to provide reliable medical advice.

为应对上述挑战,本文提出ChatCAD$^+$,主要贡献体现在以下方面:(1) 通用影像解读。针对当前难以构建统一处理各类影像的CAD网络,ChatCAD$^+$引入领域识别模块协同多CAD模型工作(见图2(a))。系统能根据输入医学影像自适应选择对应模型,并将CAD网络的初步输出转化为文本描述以反映影像特征,为后续诊断报告生成提供支持。(2) 分层上下文学习增强报告生成。通过提出的检索模块从临床数据库中获取与大语言模型生成报告语义相似的前$k$篇报告(见图3),这些报告作为上下文示例用于优化生成结果。(3) 基于知识的可靠交互。如图1(b)所示,ChatCAD$^+$不直接提供医疗建议,而是先通过知识检索模块从专业资源(如默克手册、梅奥诊所、克利夫兰诊所)获取相关知识,再由大语言模型参考检索结果提供可靠医疗建议。

In summary, our ChatCAD $^+$ for the first time builds a universal and reliable medical dialogue system. The improved quality of answers and diagnostic reports of the chatbot reveals potential of LLMs in interactive medical consultation.

总之,我们的ChatCAD$^+$首次构建了一个通用且可靠的医疗对话系统。该聊天机器人回答质量和诊断报告的提升,揭示了大语言模型在交互式医疗咨询中的潜力。

II. RELATED WORKS

II. 相关工作

A. Large Language Models in Healthcare

A. 医疗领域的大语言模型

Following the impressive potential demonstrated by ChatGPT with its 100B-scale parameters, researchers have further expanded the application of language models in healthcare unprecedentedly, yielding highly promising results. MedPaLM [17] was developed using meticulously curated biomedical corpora and human feedback, showcasing its potential with notable achievements, including a remarkable $67.6%$ accuracy on the MedQA exam. Notably, ChatGPT, which did not receive specific medical training, successfully passed all three parts of the USMLE and achieved an overall accuracy of over $50%$ across all exams, with several exams surpassing $60%$ accuracy [18]. ChatDoctor [14], fine-tuned on the LLaMA model using synthetic clinical QA data generated by ChatGPT, also incorporated medical domain knowledge to provide reliable responses. However, our ChatCAD+ distinguishes its reliable interaction from ChatDoctor in several aspects. First, ChatDoctor relies on Wikipedia, which is editable for all users and may compromise the rigor and reliability required in the medical domain. Second, ChatDoctor simply divides the knowledge of each medical topic into equal sections and relies on rule-based ranking to select relevant information. In contrast, our reliable interaction leverages the Merck Manual [19], a comprehensive and trusted medical knowledge resource. It can organize medical knowledge into a hierarchical structure, thus enabling us to fully exploit the reasoning capabilities of the LLM in recursively retrieving the most pertinent and valuable knowledge.

继ChatGPT凭借其千亿级参数展现出惊人潜力后,研究人员进一步前所未有地扩展了语言模型在医疗领域的应用,并取得了极具前景的成果。MedPaLM[17]通过精心整理的生物医学语料库和人类反馈开发而成,在MedQA考试中取得67.6%的惊人准确率等显著成就,展现了其潜力。值得注意的是,未接受专门医学训练的ChatGPT成功通过美国医师执照考试(USMLE)全部三个部分,所有考试总体准确率超过50%,其中多项考试突破60%[18]。基于LLaMA模型微调的ChatDoctor[14]利用ChatGPT生成的合成临床问答数据,同时结合医学领域知识来提供可靠回答。但我们的ChatCAD+在可靠交互方面与ChatDoctor存在多项差异:首先,ChatDoctor依赖可公开编辑的维基百科,可能影响医学领域所需的严谨性与可靠性;其次,ChatDoctor仅将每个医学主题知识简单均分,并依赖基于规则的排序来选取相关信息。相比之下,我们的可靠交互机制依托权威医学知识库《默克诊疗手册》[19],能够将医学知识组织为层级结构,从而充分发挥大语言模型在递归检索最相关、最有价值知识时的推理能力。

B. Multi-modality Large Models

B. 多模态大模型

In present times, multi-modality large models are typically constructed based on pre-trained models rather than training them from scratch. Frozen [20] performed fine-tuning on an image encoder, utilizing its outputs as soft prompts for the language model. Flamingo [21] introduced cross-attention layers into the LLM to incorporate visual features, exclusively pre-training these new layers. BLIP-2 [22] leveraged both frozen image encoders and frozen LLMs, connecting them through their proposed Q-Former. Following the architecture of BLIP-2, XrayGPT [23] was specifically developed on chest $\boldsymbol{\mathrm X}$ -ray. While the training cost for XrayGPT is demanding, our proposed ChatCAD $^+$ can seamlessly integrate existing pretrained CAD models without requiring further training. This highlights the inherent advantage of ChatCAD $^+$ in continual learning scenarios, where additional CAD models can be effortlessly incorporated.

当前,多模态大模型通常基于预训练模型构建,而非从头训练。Frozen [20] 对图像编码器进行微调,将其输出作为语言模型的软提示。Flamingo [21] 在大语言模型中引入交叉注意力层以融合视觉特征,仅对这些新层进行预训练。BLIP-2 [22] 同时采用冻结图像编码器和冻结大语言模型,通过其提出的 Q-Former 实现两者连接。基于 BLIP-2 架构,XrayGPT [23] 专门针对胸部 $\boldsymbol{\mathrm X}$ 光影像开发。虽然 XrayGPT 的训练成本较高,但我们提出的 ChatCAD $^+$ 能无缝集成现有预训练 CAD 模型而无需额外训练。这凸显了 ChatCAD $^+$ 在持续学习场景中的固有优势——可轻松整合新增的 CAD 模型。

In more recent studies, researchers have taken a different approach, i.e., designing prompts to allow LLMs to utilize vision models for avoiding training altogether. For instance, VisualChatGPT [24] established a connection between ChatGPT and a series of visual foundation models, enabling exchange of images during conversations. ChatCAD [9] linked existing CAD models with LLMs to enhance diagnostic accuracy and improve patient care. Following a similar paradigm as ChatCAD [9], Visual Med-Alpaca [25] incorporated multiple vision models to generate text descriptions for medical images.

在最近的研究中,研究者们采取了不同的方法,即设计提示词让大语言模型(LLM)直接调用视觉模型,从而完全避免训练过程。例如,VisualChatGPT [24] 在ChatGPT与一系列视觉基础模型之间建立了连接,实现了对话过程中的图像交互。ChatCAD [9] 将现有CAD模型与大语言模型结合,以提高诊断精度并改善患者护理。Visual Med-Alpaca [25] 沿用了与ChatCAD [9] 相似的范式,通过整合多个视觉模型来生成医学图像的文本描述。

Fig. 2. Overview of the reliable report generation. (a) Universal interpretation: To enhance the vision capability of LLMs, multiple CAD models are incorporated. The numerical results obtained from these models are transformed into visual descriptions following the rule of prob2text. (b) Hierarchical in-context learning: The LLM initially generates a preliminary report based on the visual description, which is then enhanced through in-context learning using retrieved semantically similar reports. LLM and CLIP models are kept frozen, while CAD models are denoted as trainable as they can be continually trained and updated without interfering with other components.

图 2: 可靠报告生成流程概览。(a) 通用解释: 为增强大语言模型的视觉能力,系统整合了多个CAD模型。这些模型生成的数值结果通过prob2text规则转化为视觉描述。(b) 分层上下文学习: 大语言模型首先生成基于视觉描述的初步报告,随后通过检索语义相似报告进行上下文学习来优化结果。大语言模型和CLIP模型保持冻结状态,CAD模型标注为可训练状态,因其可持续训练更新而不影响其他组件。

However, when compared to our study, it is limited to a single medical imaging domain and also lacks reliability in both report generation and interactive question answering.

然而,与我们的研究相比,它仅限于单一医学影像领域,且在报告生成和交互式问答方面都缺乏可靠性。

III. METHOD

III. 方法

The proposed ${\mathrm{ChatCAD+}}$ is a multi-modality system capable of processing both image and text inputs (as illustrated in Fig. 1) through reliable report generation and reliable interaction, respectively. It is important to note that, in this paper, the term “modality” refers to language and image, which differs from the commonly used concept of medical image modality. The reliable report generation treats medical image as input, identifies its domain, and provides enhanced medical reports by referencing a local report database. Simultaneously, for the user’s text query, the reliable interaction will retrieve clinically-sound knowledge from a professional knowledge database, thereby serving as a reliable reference for the LLM (e.g. ChatGPT) to enhance the reliability its response.

提出的 ${\mathrm{ChatCAD+}}$ 是一个多模态系统,能够分别通过可靠报告生成和可靠交互处理图像与文本输入(如图 1 所示)。需要注意的是,本文中的"模态"指语言和图像,这与常用的医学影像模态概念不同。可靠报告生成以医学影像为输入,识别其领域,并通过参考本地报告数据库生成增强版医学报告。同时,针对用户的文本查询,可靠交互会从专业知识库中检索临床可靠知识,从而为大语言模型(如 ChatGPT)提供可靠参考,以提升其回答的可靠性。

A. Reliable Report Generation

A. 可靠的报告生成

This section outlines our proposed reliable report generation, as depicted in Fig. 2, which encompasses two primary stages: universal interpretation and hierarchical in-context generation. Section III-A.1 illustrates the paradigm to interpret medical images from diverse domains into descriptions that can be understood by LLMs. The resulting visual description then serves as the input for subsequent hierarchical in-context learning. Following this, Section III-A.2 elucidates hierarchical in-context learning, within which the retrieval module (in Fig. 3) acts as key component.

本节概述了我们提出的可靠报告生成方法,如图 2 所示,该方法包含两个主要阶段:通用解释和分层上下文生成。第 III-A.1 节阐述了将来自不同领域的医学图像解释为大语言模型可理解的描述范式。生成的视觉描述随后作为后续分层上下文学习的输入。接着,第 III-A.2 节阐明了分层上下文学习,其中检索模块 (如图 3 所示) 作为关键组件。

TABLE I THE ILLUSTRATION OF PROB2TEXT. P3 IS THE DEFAULT SETTING. CAD Model $\rightarrow$ (disease: prob)

| P1 (direct) | "{disease} score: {prob}" |

| P2 (simplistic) | probE[0, 0.5): “No Finding' prob∈[0.5,1]:“Theprediction is{disease}” |

| P3( (illustrative) | probE[0, 0.2): “No sign of {disease}” probE[0.2, 0.5): “Small possibility of {disease}” prob∈[0.5,0.9):“Patient is likely tohave {disease} prob∈[0.9,1]:“Definitelyhave {disease} |

表 1: PROB2TEXT 的说明。P3 为默认设置。CAD 模型 $\rightarrow$ (疾病: 概率)

| P1 (直接) | "{疾病} 分数: {概率}" |

| P2 (简化) | 概率∈[0, 0.5): "无发现" 概率∈[0.5,1]: "预测为{疾病}" |

| P3 (示例) | 概率∈[0, 0.2): "无{疾病}迹象" 概率∈[0.2, 0.5): "{疾病}可能性较小" 概率∈[0.5,0.9): "患者可能有{疾病}" 概率∈[0.9,1]: "肯定患有{疾病}" |

- Universal Interpretation: The process of universal interpretation can be divided into three steps. Initially, the proposed domain identification module is employed to determine the specific domain of the medical image. Subsequently, the corresponding domain-specific model is activated to interpret the image. Finally, the interpretation result is converted into a text prompt for describing visual information via the rulebased prob2text module for further processing.

- 通用解读:通用解读过程可分为三个步骤。首先,采用提出的领域识别模块确定医学图像的具体领域。随后,激活对应的领域专用模型对图像进行解读。最后,通过基于规则的prob2text模块将解读结果转换为描述视觉信息的文本提示以供后续处理。

Domain identification chooses CAD models to interpret the input medical image, which is fundamental for subsequent operations of ChatCAD+. To achieve this, we employ a method of visual-language contrast that computes the cosine similarity between the input image and textual representations of various potential domains. This approach takes advantage of language’s ability in densely describing the features of a particular type of image, as well as its ease of extensibility. In particular, we employ the pre-trained BiomedCLIP model [26] to encode both the medical image and the text associated with domains of interest. In this study, we demonstrate upon three domains: chest X-ray, dental X-ray, and knee MRI. The workflow is depicted in the upper right portion of Fig. 2. Assuming there are three domains $\mathrm{D}_ {1},\mathrm{D}_ {2},\mathrm{D}_ {3}$ , along with their textual representations $\mathbf{M}_ {1},\mathbf{M}_ {2},\mathbf{M}_ {3}$ , and also a visual representation denoted as I for the input image, we define

领域识别通过选择CAD模型来解读输入的医学图像,这是ChatCAD+后续操作的基础。为此,我们采用了一种视觉-语言对比方法,计算输入图像与各潜在领域文本表征之间的余弦相似度。该方法利用了语言在密集描述特定类型图像特征方面的优势,以及其易于扩展的特性。具体而言,我们使用预训练的BiomedCLIP模型[26]对医学图像和关注领域的相关文本进行编码。本研究展示了在胸部X光、牙科X光和膝关节MRI三个领域的应用效果,工作流程如图2右上部分所示。假设存在三个领域$\mathrm{D}_ {1},\mathrm{D}_ {2},\mathrm{D}_ {3}$及其文本表征$\mathbf{M}_ {1},\mathbf{M}_ {2},\mathbf{M}_ {3}$,以及表示输入图像的视觉表征I,我们定义

Fig. 3. The illustration of the retrieval module within reliable report generation. It adopts the TF-IDF algorithm to preserve the semantics of each report and converts it into a latent embedding during offline modeling and online inference. To facilitate highly efficient retrieval, we perform spherical projection on all TF-IDF embeddings, whether during building or querying. In this manner, we can utilize the KD-Tree structure to store these data and implement retrieval with a low time complexity.

图 3: 可靠报告生成中的检索模块示意图。该模块采用TF-IDF算法保留每份报告的语义,并在离线建模和在线推理阶段将其转换为潜在嵌入向量。为实现高效检索,无论构建还是查询阶段,我们都对所有TF-IDF嵌入向量进行球面投影。通过这种方式,可利用KD-Tree结构存储数据,并以较低时间复杂度实现检索。

$$

\mathbf{D}_ {\mathrm{pred}}=\operatorname* {argmax}_ {i\in{1,2,3}}\frac{{I M}_ {i}}{\Vert\mathbf{I}\Vert:\left\Vert\mathbf{M}_ {i}\right\Vert},

$$

$$

\mathbf{D}_ {\mathrm{pred}}=\operatorname* {argmax}_ {i\in{1,2,3}}\frac{{I M}_ {i}}{\Vert\mathbf{I}\Vert:\left\Vert\mathbf{M}_ {i}\right\Vert},

$$

where $\mathrm{D_ {pred}}$ denotes the prediction of the medical image domain. The module thereby can call the domain-specific CAD model to analyze visual information given $\mathrm{D_ {pred}}$ .

其中 $\mathrm{D_ {pred}}$ 表示医学图像领域的预测。该模块因此可以调用特定领域的 CAD (Computer-Aided Design) 模型来分析给定 $\mathrm{D_ {pred}}$ 的视觉信息。

Since most CAD models generate outputs that can hardly be understood by language models, further processing is needed to bridge this gap. For example, an image diagnosis model typically outputs tensors representing the likelihood of certain clinical findings. To establish a link between image and text, these tensors are transformed into textual descriptions according to diagnostic-related rules, which is denoted as prob2text in Fig. 2(a). The prob2text module has been designed to present clinically relevant information in a manner that is more easily interpret able by LLMs. The details of the prompt design are illustrated in Table I. Using chest X-ray as an example, we follow the three types (P1-P3) of prompt designs in [9] and adopt P3 (illustrative) as the recommended setting in this study. Concretely, it employs a grading system that maps the numerical scores into clinically illustrative description of disease severity. The scores are divided into four levels based on their magnitude: “No sign”, “Small possibility”, “Likely”, and “Definitely”. The corresponding texts are then used to describe the likelihood of different observations in chest X-ray, providing a concise and informative summary of the patient’s condition. The prompt design for dental X-ray and knee MRI are in similar ways. And other prompt designs, such as P1 and P2 [9], will be discussed in experiments below.

由于大多数CAD模型的输出结果难以被语言模型理解,因此需要进一步处理以弥合这一差距。例如,影像诊断模型通常输出表示特定临床发现可能性的张量。为了建立影像与文本之间的联系,这些张量会根据诊断相关规则转换为文本描述(如图2(a)中的prob2text模块所示)。prob2text模块的设计旨在以更易于大语言模型理解的方式呈现临床相关信息。提示设计的详细信息如表1所示。以胸部X光为例,我们遵循文献[9]中提出的三种提示设计类型(P1-P3),并采用P3(说明性)作为本研究的推荐设置。具体而言,该系统采用分级机制将数值评分映射为疾病严重程度的临床描述性说明。根据数值大小将评分划分为四个等级:"无迹象"、"可能性小"、"很可能"和"确定"。生成的对应文本用于描述胸部X光中不同观察结果的可能性,从而为患者状况提供简明且信息丰富的总结。牙科X光和膝关节MRI的提示设计采用类似方法。其他提示设计(如文献[9]中的P1和P2)将在后续实验部分讨论。

- Hierarchical In-context Learning: The proposed strategy for hierarchical in-context learning, as shown in Fig. 2(b), consists of (1) preliminary report generation and (2) retrievalbased in-context enhancement.

- 分层上下文学习:如图 2(b) 所示,提出的分层上下文学习策略包括 (1) 初步报告生成和 (2) 基于检索的上下文增强。

In the first step, the LLM utilizes the visual description to generate a preliminary diagnostic report. This process is facilitated through prompts such as “Write a report based on results from Network(s).” It is important to note that the generation of this preliminary report relies solely on the capability of the LLM and is still far from matching capability of human specialists. As a result, even powerful LLMs like ChatGPT still struggle to achieve satisfactory results.

第一步,大语言模型(LLM)利用视觉描述生成初步诊断报告。该过程通过"根据Network(s)结果撰写报告"等提示词实现。需要注意的是,此初步报告的生成仅依赖大语言模型的能力,仍远未达到人类专家的水平。因此,即便是ChatGPT等强大模型,其输出结果仍难以令人满意。

To address this concern, the next step involves retrieving the top $\cdot k$ reports that share similar semantics with the preliminary report as in-context examples. The retrieval module is designed to preserve semantic information and ensure efficient implementation. Specifically, we employ TF-IDF [27] to model the semantics of each report, and the KD-Tree [28] data structure is utilized to facilitate high-speed retrieval. Fig. 3 illustrates the detailed pipeline of the proposed retrieval module. For simplification, we use chest $\mathrm{X}$ -ray in MIMIC-CXR as an example in this section. To better preserve disease-related information in the report, we focus on 17 medical terms related to thoracic diseases during the modeling of TF-IDF embeddings, which form a term set $\tau$ . Assuming the training set of MIMIC-CXR as $\mathcal{D}$ , the TF-IDF score for each term $t\in\mathcal T$ in each document $d\in\mathcal{D}$ is computed as:

为解决这一问题,下一步将检索与初步报告语义相似的前$\cdot k$份报告作为上下文示例。该检索模块旨在保留语义信息并确保高效实现。具体而言,我们采用TF-IDF [27]对每份报告的语义进行建模,并利用KD-Tree [28]数据结构实现高速检索。图3展示了该检索模块的详细流程。为简化说明,本节以MIMIC-CXR中的胸部$\mathrm{X}$光为例。为了更好地保留报告中与疾病相关的信息,我们在构建TF-IDF嵌入时聚焦于17个与胸部疾病相关的医学术语,这些术语构成集合$\tau$。假设MIMIC-CXR的训练集为$\mathcal{D}$,则每个文档$d\in\mathcal{D}$中每个术语$t\in\mathcal T$的TF-IDF得分计算如下:

$$

\begin{array}{r l}&{\mathrm{TF-IDF}(t,d)=\mathrm{TF}(t,d)\cdot\mathrm{IDF}(t)}\ &{=\frac{|{w\in d:w=t}|}{|d|}\cdot\log\frac{|\mathcal{D}|}{|{d\in\mathcal{D}:t\in d}|},}\end{array}

$$

$$

\begin{array}{r l}&{\mathrm{TF-IDF}(t,d)=\mathrm{TF}(t,d)\cdot\mathrm{IDF}(t)}\ &{=\frac{|{w\in d:w=t}|}{|d|}\cdot\log\frac{|\mathcal{D}|}{|{d\in\mathcal{D}:t\in d}|},}\end{array}

$$

where $w$ indicates a word within the document $d$ . In brief, $\mathrm{TF}(t,d)$ represents the frequency of term $t$ occurring in $d$ , and $\mathrm{IDF}(t)$ denotes the inverse of the frequency that document $d$ occurs in the training set $\mathcal{D}$ . Following this, we define the TF-IDF embedding (TIE) of a document $d$ as:

其中 $w$ 表示文档 $d$ 中的一个词。简而言之,$\mathrm{TF}(t,d)$ 表示术语 $t$ 在 $d$ 中出现的频率,$\mathrm{IDF}(t)$ 表示文档 $d$ 在训练集 $\mathcal{D}$ 中出现频率的倒数。接着,我们将文档 $d$ 的 TF-IDF 嵌入 (TIE) 定义为:

$$

\mathrm{TIE}(d)={\mathrm{TF}\mathrm{-}\mathrm{IDF}(t,d)|t\in\mathcal{T}}.

$$

$$

\mathrm{TIE}(d)={\mathrm{TF}\mathrm{-}\mathrm{IDF}(t,d)|t\in\mathcal{T}}.

$$

After calculating the TIEs for all $\textit{d}\in\textit{D}$ , we organize them using the KD-Tree [28] data structure, which enables fast online querying. The KD-Tree is a space-partitioning data structure that can implement $k$ -nearest-neighbor querying in $O(\log(n))$ time on average, making it suitable for information searching. However, since the implementation of KD-Tree relies on $L_ {2}$ distance instead of cosine similarity, it cannot be directly applied to our TIEs. To address this issue, we project all TIEs onto the surface of a unit hyper sphere, as shown in the right panel of Fig. 3. After spherical projection, we have:

在计算完所有 $\textit{d}\in\textit{D}$ 的 TIEs 后,我们使用 KD-Tree [28] 数据结构对其进行组织,以实现快速在线查询。KD-Tree 是一种空间划分数据结构,平均能在 $O(\log(n))$ 时间内实现 $k$ 近邻查询,适合信息检索。但由于 KD-Tree 的实现依赖于 $L_ {2}$ 距离而非余弦相似度,无法直接应用于我们的 TIEs。为解决该问题,如图 3 右面板所示,我们将所有 TIEs 投影至单位超球面。经球面投影后可得:

$$

L_ {2}(\frac{\vec{q}}{|\vec{q}|},\frac{\vec{v}}{|\vec{v}|})=2r\cdot\mathrm{sin}(\frac{\theta}{2}),

$$

$$

L_ {2}(\frac{\vec{q}}{|\vec{q}|},\frac{\vec{v}}{|\vec{v}|})=2r\cdot\mathrm{sin}(\frac{\theta}{2}),

$$

where $\vec{q}$ and $\vec{v}$ represent the TIE of the query report and a selected report, respectively. $r$ indicates the radius of the hyper sphere (radius $_ {\mathrm{{}}=1}$ in this study), and $\theta\in[0,\pi]$ stands for the angle between $\frac{\vec{q}}{\left|\vec{q}\right|}$ and 前· . The $L_ {2}$ distance between two TIEs will monotonically increase as a function of the angle between them, which favors the application of the KD-Tree data structure for efficient retrieval.

其中 $\vec{q}$ 和 $\vec{v}$ 分别表示查询报告和选定报告的 TIE (Tissue Identity Embedding)。$r$ 表示超球面的半径 (本研究中 radius$_ {\mathrm{{}}=1}$),$\theta\in[0,\pi]$ 表示 $\frac{\vec{q}}{\left|\vec{q}\right|}$ 与前· 之间的夹角。两个 TIE 之间的 $L_ {2}$ 距离会随它们夹角单调递增,这一特性有利于应用 KD-Tree 数据结构实现高效检索。

During online querying, the KD-Tree engine retrieves the top $\cdot k$ (i.e., $k{=}3$ ) reports that share the most similar semantics with the query report (i.e., preliminary report illustrated in Fig. 2). It then asks the LLM to refine its preliminary report with the retrieved $k$ reports as references, and generate the enhanced report as the final output.

在线查询时,KD-Tree引擎会检索与查询报告(即图2所示的初步报告)语义最相似的前$\cdot k$ (即$k{=}3$)份报告。随后,它会让大语言模型以检索到的$k$份报告为参考来完善其初步报告,并生成增强版报告作为最终输出。

Fig. 4. Overview of the reliable interaction. (a) The illustration of structured medical knowledge database, organized as a tree-like dictionary, where each medical topic has multiple sections while sections can be further divided into subsections. (b) A LLM-based knowledge retrieval method is proposed to search relevant knowledge in a backtrack manner. (c) The LLM is prompted to answer the question based on the retrieved knowledge.

图 4: 可靠交互概述。(a) 结构化医学知识库示意图,以树状字典形式组织,每个医学主题包含多个章节,章节可进一步细分为子章节。(b) 提出基于大语言模型的回溯式知识检索方法。(c) 引导大语言模型根据检索到的知识回答问题。

B. Reliable Interaction

B. 可靠交互

This section outlines the reliable interaction, which is responsible for answering the user’s text query. It serves a dual role within our proposed ChatCAD+. First, reliable interaction can function as a sequential module of reliable report generation to enhance interpret ability and understand ability of medical reports for patients. In this capacity, it takes the generated report from previous module as the input, and provides patients with explanations of involved clinical terms1. Second, it can also operate independently to answer clinicalrelated questions and provide reliable medical advice.

本节概述了可靠交互功能,该功能负责响应用户的文本查询。在我们提出的ChatCAD+框架中,可靠交互具有双重作用:首先,它可作为可靠报告生成流程的后续模块,通过解释临床术语1来增强患者对医疗报告的可理解性;其次,该功能也可独立运行,用于解答临床相关问题并提供可靠的医疗建议。

The proposed ChatCAD $^+$ offers reliable interaction via the construction of a professional knowledge database and LLM-based knowledge retrieval. In this study, we demonstrate using the Merck Manuals. The Merck Manuals are a series of healthcare reference books that provide evidence-based information on the diagnosis and treatment of diseases and medical conditions.

提出的ChatCAD$^+$通过构建专业知识数据库和基于大语言模型的知识检索,提供可靠的交互。在本研究中,我们以《默克诊疗手册》为例进行演示。《默克诊疗手册》是一系列医疗参考书籍,提供基于证据的疾病诊断和治疗信息。

The implementation of LLM-based knowledge retrieval leverages the Chain-of-Thought (CoT) technique, which is widely known for its ability to enhance the performance of LLM in problem-solving. CoT breaks down a problem into a series of sub-problems and then synthesizes the answers to these sub-problems progressively for solving the original problem. Humans typically do not read the entire knowledge base but rather skim through topic titles to find what they are looking for. Inspired by this, we have designed a prompt system that automatically guides the LLM to execute such searches. Correspondingly, we have structured the Merck Manuals database as a hierarchical dictionary, with topic titles of different levels serving as keys, as shown in Fig 4(a). Fig. 4(b) demonstrates the proposed knowledge retrieval methods. Initially, we provide the LLM with only titles of five related medical topics in the database, and then ask the LLM to select the most relevant topic to begin the retrieval. Once the LLM has made its choice, we provide it with the content of “abstract” section and present names of all other sections subject to this topic. Given that medical topics often exhibit hierarchical organization across three or four tiers, we repeat this process iterative ly, empowering the LLM to efficiently navigate through all tiers of a given medical topic. Upon identifying relevant medical knowledge, the LLM is prompted to return the retrieved knowledge, terminating the process. Otherwise, in the case that the LLM finds none of the provided information as relevant, our algorithm would backtrack to the parent tier, which makes it a kind of depth-first search [30]. Finally, the LLM is prompted to provide reliable response based on the retrieved knowledge (in Fig. 4(c)).

基于大语言模型(LLM)的知识检索实现采用了思维链(Chain-of-Thought, CoT)技术,该技术以提升大语言模型解决问题能力而广为人知。CoT将问题分解为一系列子问题,然后逐步综合这些子问题的答案来解决原始问题。人类通常不会阅读整个知识库,而是通过浏览主题标题来寻找所需内容。受此启发,我们设计了一个提示系统,自动引导大语言模型执行此类搜索。相应地,我们将默克手册数据库构建为分层字典结构,以不同层级的主题标题作为键值,如图4(a)所示。图4(b)展示了提出的知识检索方法。首先,我们仅向大语言模型提供数据库中五个相关医学主题的标题,然后要求其选择最相关的主题开始检索。当大语言模型做出选择后,我们会提供"摘要"部分的内容,并展示该主题下所有其他章节的名称。鉴于医学主题通常具有三到四层的层级结构,我们迭代重复这一过程,使大语言模型能够有效遍历给定医学主题的所有层级。当识别到相关医学知识时,系统会提示大语言模型返回检索到的知识并终止流程。若大语言模型认为提供的所有信息都不相关,我们的算法会回溯到父级层级,这使其成为一种深度优先搜索[30]。最终,系统会提示大语言模型基于检索到的知识提供可靠响应(如图4(c)所示)。

IV. EXPERIMENTAL RESULTS

IV. 实验结果

To comprehensively assess our proposed ${\mathrm{ChatCAD+}}$ , we conducted evaluations across three crucial aspects that influence its effectiveness in real-world scenarios: (1) the capability to handle medical images from different domains, (2) report generation quality, and (3) the efficacy in clinical question answering. This section commences with an introduction to the datasets used and implementation details of ${\mathrm{ChatCAD+}}$ . Then, we delve into the evaluation of these aspects in turn.

为了全面评估我们提出的 ${\mathrm{ChatCAD+}}$,我们从三个关键维度进行了评测,这些维度会影响其在实际医疗场景中的有效性:(1) 处理多领域医学影像的能力,(2) 报告生成质量,(3) 临床问答效能。本节首先介绍所用数据集及 ${\mathrm{ChatCAD+}}$ 的实现细节,随后依次展开这三个维度的评估分析。

A. Dataset and implementations

A. 数据集与实现

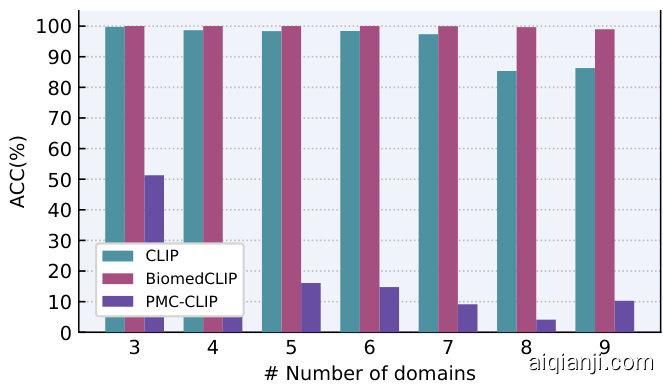

Domain identification: To investigate the robustness of domain identification, which is the fundamental component of our proposed universal CAD system, we crafted a dataset covering nine medical imaging domains by randomly selecting samples from different datasets. The statistics of this dataset are presented in Table II. During the evaluation, we progressively increase the number of domains following the left-toright order in Table II and report the accuracy (ACC) in each step. Since the pre-trained CLIP model is utilized to perform domain identification (as shown in Fig. 2), we also investigated the impact of different CLIPs in Sec. IV-B.

领域识别:为了探究领域识别的鲁棒性(这是我们提出的通用CAD系统的核心组件),我们通过从不同数据集中随机选取样本,构建了一个涵盖九个医学影像领域的数据集。该数据集的统计信息如表II所示。评估过程中,我们按照表II从左到右的顺序逐步增加领域数量,并记录每一步的准确率(ACC)。由于采用了预训练的CLIP模型进行领域识别(如图2所示),我们在第IV-B节还研究了不同CLIP模型的影响。

TABLE II STATISTICS OF THE COMPOSED DATASET FOR THE EVALUATION OF DOMAIN IDENTIFICATION.

| chestX-ray | dentalX-ray | knee X-ray | mammography | chest CT | fundus | endoscopy | dermoscopy | blood cell | |

| number | 400 | 360 | 800 | 360 | 320 | 120 | 200 | 360 | 200 |

| source | MedFMC[31] | Tufts Dental [32] | OAI [33] | INbreast [34] | COVID-CT[35] | DIARETDB1[36] | MedFMC[31] | ISIC2016[37] | PBC [38] |

表 II: 用于领域识别评估的合成数据集统计

| chestX-ray | dentalX-ray | knee X-ray | mammography | chest CT | fundus | endoscopy | dermoscopy | blood cell | |

|---|---|---|---|---|---|---|---|---|---|

| number | 400 | 360 | 800 | 360 | 320 | 120 | 200 | 360 | 200 |

| source | MedFMC[31] | Tufts Dental[32] | OAI[33] | INbreast[34] | COVID-CT[35] | DIARETDB1[36] | MedFMC[31] | ISIC2016[37] | PBC[38] |

Fig. 5. Evaluation of domain identification using different CLIPs.

图 5: 使用不同CLIP模型进行领域识别的评估。

Report generation: For a fair comparison, the report generation performance of ${\mathrm{ChatCAD+}}$ is assessed on the public MIMIC-CXR dataset [39]. The MIMIC-CXR is a large public dataset of chest X-ray images, associated radiology reports, and other clinical data. The dataset consists of 377,110 deidentified chest X-ray images and associated 227,827 radiology reports. The MIMIC-CXR dataset allows us to measure the quality of diagnostic accuracy and report generation, as most report generation methods are designed specifically for chest X-ray. The quality of report generation was tested on the official test set of the MIMIC-CXR dataset, focusing on five findings (card iomega ly, edema, consolidation, at elect as is, and pleural effusion). Since ChatGPT was adopted as the default LLM in report generation experiments, we randomly selected 200 samples for each finding of interest due to limitations on the per-hour accesses of OpenAI API-Key, resulting in a total of 1,000 test samples. To evaluate the performance of report generation, we used several widelyused Natural Language Generation (NLG) metrics, including BLEU [40], METEOR [41], and ROUGE-L [42]. Specifically, BLEU measures the similarity between generated and groundtruth reports by counting word overlap and we denote Corpus BLEU as C-BLEU by default. METEOR considers synonym substitution and evaluates performance on both sentence level and report level. Meanwhile, ROUGE-L evaluates the length of the longest common sub sequence. We also measured clinical efficacy (CE) of reports using the open-source CheXbert library [43]. CheXbert takes text reports as input and generates multi-label classification labels for each report, with each label corresponding to one of the pre-defined thoracic diseases.

报告生成:为确保公平比较,${\mathrm{ChatCAD+}}$的报告生成性能在公开的MIMIC-CXR数据集[39]上进行评估。MIMIC-CXR是一个包含胸部X光图像、相关放射学报告和其他临床数据的大型公开数据集,包含377,110张去标识化胸部X光图像和227,827份相关放射学报告。由于大多数报告生成方法专为胸部X光设计,该数据集可用于评估诊断准确性和报告生成质量。报告生成质量测试在MIMIC-CXR官方测试集上进行,重点关注五种症状(心脏增大、肺水肿、肺实变、肺不张和胸腔积液)。由于采用ChatGPT作为报告生成实验的默认大语言模型,受限于OpenAI API-Key的每小时调用次数,我们为每种目标症状随机选取200个样本,共计1,000个测试样本。评估报告生成性能时,我们采用多种广泛使用的自然语言生成(NLG)指标,包括BLEU[40]、METEOR[41]和ROUGE-L[42]。具体而言,BLEU通过统计词汇重叠度衡量生成报告与标准报告的相似性(默认使用语料库BLEU即C-BLEU),METEOR考虑同义词替换并在句子级和报告级评估性能,ROUGE-L则评估最长公共子序列长度。我们还使用开源CheXbert库[43]测量报告的临床效能(CE),该库可将文本报告转化为多标签分类结果,每个标签对应预定义的胸部疾病类型。

Based on these extracted multi-hot labels, we compute precision (PR), recall (RC), and F1-score (F1) on 1,000 test samples. These metrics provide additional insight into the performance of report generation. If not specified, we choose P3 as a default prompt design in universal interpretation and $k{=}3$ for hierarchical in-context learning.

基于这些提取的多热标签,我们在1000个测试样本上计算了精确率(PR)、召回率(RC)和F1分数(F1)。这些指标为报告生成性能提供了更多洞察。如无特别说明,在通用解释中我们默认选择P3作为提示设计,在分层上下文学习中使用$k{=}3$。

Clinical question answering: The evaluation of reliable interaction was performed using a subset of the CMExam dataset [44]. CMExam is a collection of multiple-choice questions with official explanations, sourced from the Chinese National Medical Licensing Examination. Since CMExam covers a diversity of areas, including many clinical-unrelated questions, we focused on a subset of the CMExam test set that specifically addressed “Disease Diagnosis” and “Disease Treatment,” while excluding questions belonging to the department of “Traditional Chinese Medicine”. This resulted in a subset of 2190 question-answer pairs. Since the CMExam dataset is completely in Chinese, we selected ChatGLM2 [45] and ChatGLM3 [45], two 6B scale models, as the LLMs for validation. These models have demonstrated superior Chinese language capabilities compared to other open-source LLMs. We employed the official prompt design of CMExam, which required the LLM to make a choice and provide the accompanying explanation simultaneously. We acknowledge that our proposed LLM-based knowledge retrieval involves recursive requests to the LLM and will consume a large amount of tokens, making experiments using ChatGPT and GPT-4 un affordable for us. In experimental results, we report ACC and F1 for multi-choice selection, and NLG metrics for reason explanation.

临床问答:使用CMExam数据集[44]的子集进行了可靠交互的评估。CMExam是中国国家医学资格考试中收集的带有官方解释的多选题合集。由于CMExam涵盖多个领域,包含许多与临床无关的问题,我们专注于CMExam测试集中专门涉及"疾病诊断"和"疾病治疗"的子集,同时排除了属于"中医"科室的问题。最终得到2190个问答对的子集。由于CMExam数据集完全为中文,我们选择了ChatGLM2[45]和ChatGLM3[45]这两个60亿参数规模的大语言模型作为验证对象。与其他开源大语言模型相比,这些模型已展现出卓越的中文能力。我们采用CMExam官方设计的提示模板,要求大语言模型同时做出选择并提供相应解释。我们注意到,基于大语言模型的知识检索涉及递归请求,将消耗大量token,因此无法承担使用ChatGPT和GPT-4进行实验的成本。在实验结果中,我们报告多选题选择的准确率(ACC)和F1值,以及原因解释的自然语言生成(NLG)指标。

Implementation of domain-specific CADs: In Section IIIA.1, we proposed to incorporate domain-specific CAD models to interpret images from different medical imaging domain. In this study, we adopted Chest CADs, Tooth CADs, and Knee CADs. Chest CADs is composed of a thoracic disease classifier [46] and a chest X -ray report generation network [7]. The former [46] was trained on the official training split of the CheXpert [47] dataset, using a learning rate $(l r)$ of $1e^{-4}$ for 10 epochs. The report generation network [7] was trained on the training split of MIMIC-CXR [39] using a single NVIDIA A100 GPU. We used the Adam optimizer with a learning rate of $5e^{-5}$ . For Tooth CAD, we utilized the periodontal diagnosis model proposed in [48], which was trained on 300 panoramic dental X-ray images collected from real-world clinics with a size of $2903\mathrm{x}1536$ . The training was conducted on a NVIDIA A100 GPU with 80GB memory for a total of 200 epochs. We set the learning rate as $2e^{-5}$ using the Adam optimizer. For Knee CAD, we adopted the model proposed in [49], which was trained on 964 knee MRIs captured using a Philips Achieva 3.0T TX MRI scanner from Shanghai Sixth People’s Hospital. The Adam optimizer was used with a weight decay of $1e^{-4}$ .

特定领域CAD系统的实现:在第三节A.1部分,我们提出整合特定领域CAD模型来解析不同医学影像领域的图像。本研究采用了胸部CAD、牙齿CAD和膝关节CAD系统。胸部CAD系统由胸科疾病分类器[46]和胸部X光报告生成网络[7]构成。前者[46]基于CheXpert数据集[47]的官方训练集进行训练,学习率(lr)设为1e−4,训练10个周期;报告生成网络[7]使用单块NVIDIA A100 GPU在MIMIC-CXR[39]训练集上完成训练,采用学习率为5e−5的Adam优化器。牙齿CAD系统采用文献[48]提出的牙周诊断模型,该模型基于临床收集的300张尺寸为2903×1536的全景牙科X光片,在80GB显存的NVIDIA A100 GPU上训练200个周期,设置2e−5学习率并使用Adam优化器。膝关节CAD系统采用文献[49]提出的模型,其训练数据来自上海市第六人民医院Philips Achieva 3.0T TX磁共振扫描仪采集的964例膝关节MRI图像,训练时使用Adam优化器并设置1e−4的权重衰减系数。

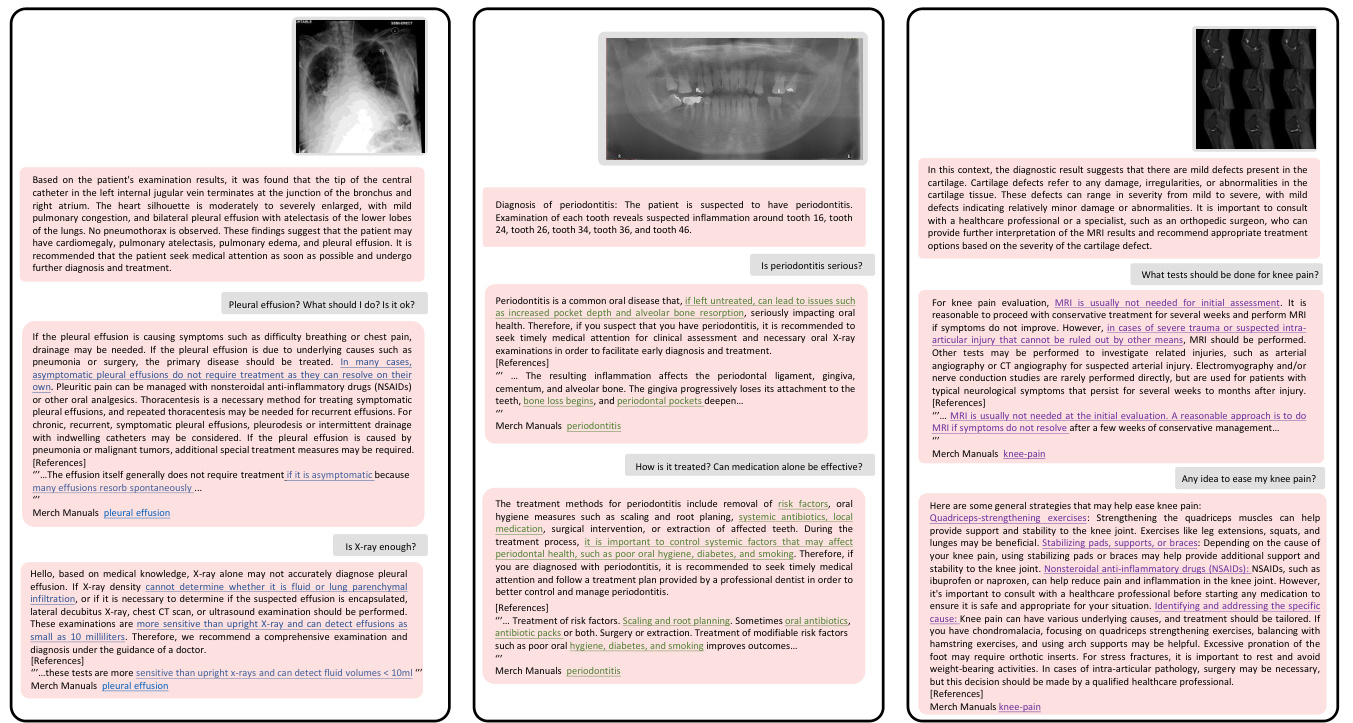

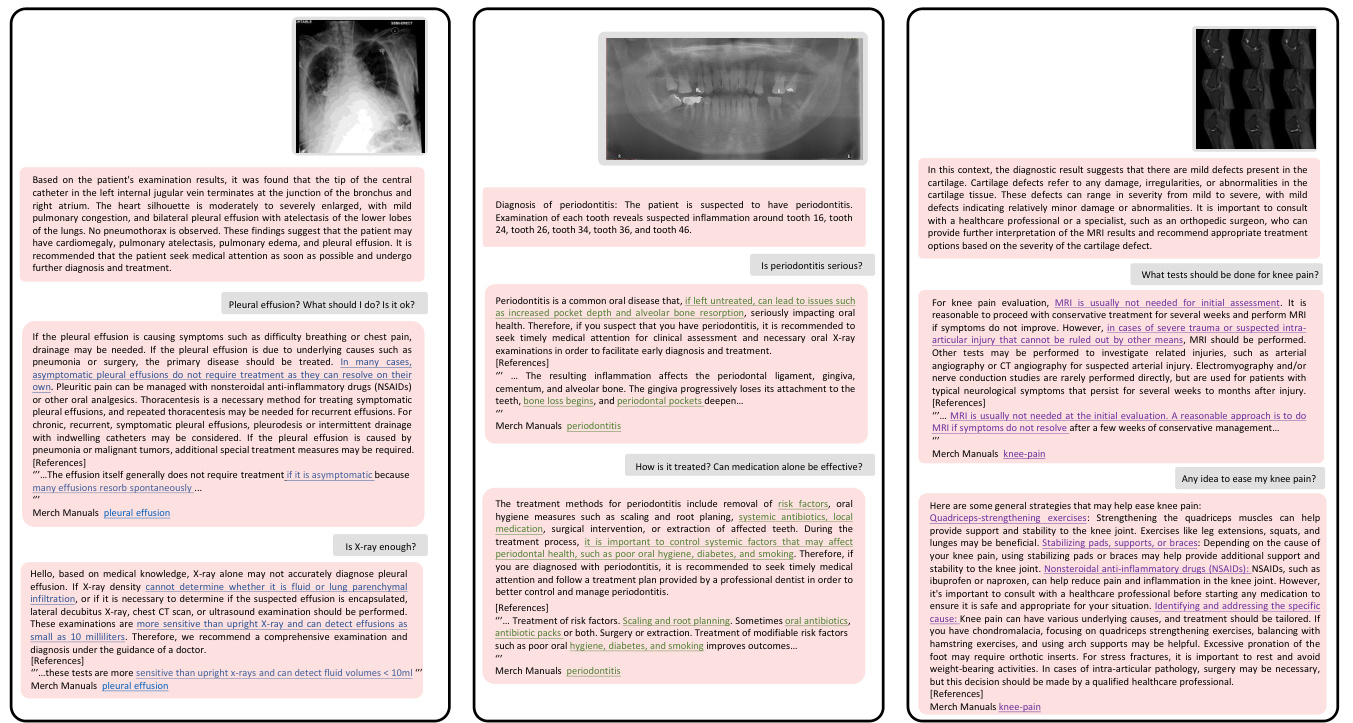

Fig. 6. Examples of universal and interactive medical consultation using ${\mathsf{C h a t C A D+}}$ , with ChatGPT as the default LLM. The underlined text signifies information obtained from reputable medical websites, which is not comprehensively incorporated in ChatGPT.

图 6: 使用 ${\mathsf{C h a t C A D+}}$ (默认大语言模型为ChatGPT)进行通用交互式医疗咨询的案例。下划线文本表示来自权威医疗网站的信息,这些信息未全面整合到ChatGPT中。

The learning rate was set as $3e^{-5}$ for Transformer and graph attention network components, and $3e^{-4}$ for others.

Transformer 和 graph attention network 组件的学习率设置为 $3e^{-5}$,其他部分设置为 $3e^{-4}$。

B. Evaluation of a Universal System

B. 通用系统评估

To investigate the effectiveness of ${\mathrm{ChatCAD+}}$ as a universal CAD system, we evaluated it by (1) quantitatively investigating its domain identification capability when encountering images from various domains, and (2) qualitatively investigating its ability to provide reliable response in different scenarios.

为了验证 ${\mathrm{ChatCAD+}}$ 作为通用CAD系统的有效性,我们从两方面进行评估:(1) 定量分析其遇到不同领域图像时的领域识别能力,(2) 定性研究其在多种场景下提供可靠反馈的能力。

- Robustness of domain identification: In Fig. 5, we visualize the domain identification performance of different CLIP models. Compared with the baseline CLIP model, BiomedCLIP [26] achieves an impressive performance, showing $100%$ accuracy on 2560 images from 7 different domains. It can even maintain an accuracy of $98.9%$ when all 9 domains are involved, while the performance of CLIP is degraded to $86.3%$ . These observations demonstrate that BiomedCLIP has an more in-depth understanding of professional medical knowledge compared with CLIP, and thus is suitable for domain identification in our proposed ChatCAD+.

- 领域识别的鲁棒性:在图5中,我们可视化了不同CLIP模型的领域识别性能。与基线CLIP模型相比,BiomedCLIP [26] 表现出色,在来自7个不同领域的2560张图像上实现了100%的准确率。即使涉及全部9个领域时,它仍能保持98.9%的准确率,而CLIP的性能则降至86.3%。这些结果表明,相比CLIP,BiomedCLIP对专业医学知识有更深入的理解,因此适合用于我们提出的ChatCAD+中的领域识别任务。

However, to our surprise, PMC-CLIP [50] performs rather poorly on this task. As shown in Fig. 5, it only achieves approximate $50%$ accuracy when three domains are involved. We hypothesize that this can be attributed to its lack of zeroshot capability. While PMC-CLIP has demonstrated promising quality fof fine-tuning on donwstream tasks, its lack of zeroshot inference capability may hinder its wide-speard application. In conclusion, BiomedCLIP can be a better choice than both CLIP and PMC-CLIP for domain identification.

然而出乎意料的是,PMC-CLIP [50] 在该任务中表现相当糟糕。如图 5 所示,当涉及三个领域时,其准确率仅达到约 $50%$ 。我们推测这可能归因于其缺乏零样本 (zero-shot) 能力。尽管 PMC-CLIP 在下游任务微调中展现出良好性能,但其零样本推理能力的缺失可能限制其广泛应用。综上所述,BiomedCLIP 在领域识别任务上是比 CLIP 和 PMC-CLIP 更优的选择。

- Reliability of consultations: Fig. 6 demonstrates the universal application and reliability of our proposed method. The ChatCAD $^+$ can process different medical images and provide proper diagnostic reports. Users can interact with ChatCAD $^+$ and ask additional questions for further clarification. By leveraging external medical knowledge, the enhanced ${\mathrm{ChatCAD+}}$ is capable of delivering medical advice in a more professional manner. In Fig. 6, each reply from ChatCAD $^+$ includes a reference, and the pertinent knowledge obtained from the external database is underlined for emphasis. For instance, in the scenario where the question is raised about whether a chest X-ray is sufficient for diagnosing pleural effusion, ChatGPT would simply recommend a CT scan without providing a convincing explanation. In contrast, ChatCAD $^+$ is capable of informing the patient that CT scans have the capability to detect effusions as small as 10 milliliters, thereby providing a more detailed and informative response.

- 咨询可靠性:图6展示了我们提出方法的普适性和可靠性。ChatCAD$^+$能够处理不同的医学图像并提供准确的诊断报告。用户可与ChatCAD$^+$交互并提出额外问题以获得进一步说明。通过利用外部医学知识,增强版${\mathrm{ChatCAD+}}$能够以更专业的方式提供医疗建议。在图6中,ChatCAD$^+$的每个回复都包含参考文献,且从外部数据库获取的相关知识会以下划线强调。例如,当被问及胸部X光是否足以诊断胸腔积液时,ChatGPT仅会建议进行CT扫描而不提供令人信服的解释。相比之下,ChatCAD$^+$能够告知患者CT扫描可检测小至10毫升的积液,从而提供更详细且信息丰富的答复。

C. Evaluation of Report Generation

C. 报告生成评估

We begin by comparing our approach to state-of-the-art (SOTA) report generation methods, while also examining the impact of various prompt designs. Subsequently, we assess the general iz ability of our reliable report generation model by utilizing diverse LLMs. Following this, we explore the impact of hierarchical in-context learning by changing the number of retrieved reports. We then proceed to qualitatively showcase the advanced capabilities of ${\mathrm{ChatCAD+}}$ in report generation. Finally, we compare our specialist-based system against others’ generalist medical AI systems.

我们首先将我们的方法与最先进的 (SOTA) 报告生成方法进行比较,同时考察不同提示设计的影响。随后,我们通过使用多样化的大语言模型来评估可靠报告生成模型的泛化能力。接着,我们通过改变检索报告的数量来探索分层上下文学习的影响。然后,我们定性展示了 ${\mathrm{ChatCAD+}}$ 在报告生成中的先进能力。最后,我们将基于专科医生的系统与其他通用医疗 AI 系统进行对比。

- Comparison with SOTA methods: The numerical results of different methods was compared and presented in Table III. The advantage of ${\mathrm{ChatCAD+}}$ lies in its superior performance across multiple evaluation metrics compared to both previous models and different prompt settings. Particularly, ${\mathrm{ChatCAD+}}$ (P3) stands out with the highest scores in terms of C-BLEU, METEOR, REC, and F1. Despite the inferiority in specific metrics, ChatCAD $^+$ (P3) still holds a significant advantage across other metrics, which validates its advanced overall performance. Note that ${\mathrm{ChatCAD+}}$ (P3) shows a significant advantage over ChatCAD (P3) on all NLG and CE metrics, which underscores the rationality of hierarchical in-context learning. These results suggest that ${\mathrm{ChatCAD+}}$ (P3) is capable of generating high-quality responses that are not only fluent and grammatical but also clinically accurate, making it a promising model for conversational tasks.

- 与SOTA方法的比较:不同方法的数值结果对比见表III。${\mathrm{ChatCAD+}}$的优势在于,与先前模型及不同提示设置相比,其在多项评估指标上均表现出更优性能。特别是${\mathrm{ChatCAD+}}$ (P3)在C-BLEU、METEOR、REC和F1指标上均取得最高分。尽管在某些特定指标上略逊,ChatCAD$^+$ (P3)在其他指标上仍保持显著优势,这验证了其整体性能的先进性。值得注意的是,${\mathrm{ChatCAD+}}$ (P3)在全部NLG和CE指标上均显著优于ChatCAD (P3),这印证了分层上下文学习策略的合理性。结果表明,${\mathrm{ChatCAD+}}$ (P3)能够生成既流畅合规又符合临床准确性的高质量响应,使其成为对话任务中极具前景的模型。

TABLE III COMPARATIVE RESULTS WITH PREVIOUS STUDIES AND DIFFERENT PROMPT SETTINGS. P3 IS THE DEFAULT PROMPT SETTING IN THIS STUDY.

| Model | Size | C-BLEU | ROUGE-L | METEOR | PRE | REC | F1 |

| R2GenCMN [7] | 244M | 3.525 | 18.351 | 21.045 | 0.578 | 0.411 | 0.458 |

| VLCI [8] | 357M | 3.834 | 16.217 | 20.806 | 0.617 | 0.299 | 0.377 |

| ChatCAD (P3) | 256M+175B | 3.594 | 16.791 | 23.146 | 0.526 | 0.603 | 0.553 |

| ChatCAD+ (P1) | 256M+175B | 4.073 | 16.820 | 22.700 | 0.548 | 0.484 | 0.502 |

| ChatCAD+ (P2) | 256M+175B | 4.407 | 17.266 | 23.302 | 0.538 | 0.597 | 0.557 |

| ChatCAD+ (P3) | 256M+175B | 4.409 | 17.344 | 24.337 | 0.531 | 0.615 | 0.564 |

表 III 与先前研究和不同提示设置的对比结果。P3 是本研究的默认提示设置。

| 模型 | 大小 | C-BLEU | ROUGE-L | METEOR | PRE | REC | F1 |

|---|---|---|---|---|---|---|---|

| R2GenCMN [7] | 244M | 3.525 | 18.351 | 21.045 | 0.578 | 0.411 | 0.458 |

| VLCI [8] | 357M | 3.834 | 16.217 | 20.806 | 0.617 | 0.299 | 0.377 |

| ChatCAD (P3) | 256M+175B | 3.594 | 16.791 | 23.146 | 0.526 | 0.603 | 0.553 |

| ChatCAD+ (P1) | 256M+175B | 4.073 | 16.820 | 22.700 | 0.548 | 0.484 | 0.502 |

| ChatCAD+ (P2) | 256M+175B | 4.407 | 17.266 | 23.302 | 0.538 | 0.597 | 0.557 |

| ChatCAD+ (P3) | 256M+175B | 4.409 | 17.344 | 24.337 | 0.531 | 0.615 | 0.564 |

TABLE IV EVALUATION OF RELIABLE REPORT GENERATION USING DIFFERENT LLMS.

| Model | Size | Reliable | C-BLEU | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L | METEOR | PRE | REC | F1 |

| ChatGLM2 [45] | 6B | × | 2.073 | 16.999 | 3.426 | 0.926 | 0.313 | 14.119 | 23.008 | 0.518 | 0.632 | 0.553 |

| ← | 3.236 | 25.172 | 5.268 | 1.461 | 0.566 | 15.559 | 23.397 | 0.501 | 0.639 | 0.555 | ||

| PMC-LLaMA [51] | 7B | × | 1.709 | 15.003 | 2.432 | 0.748 | 0.313 | 13.983 | 20.590 | 0.492 | 0.702 | 0.532 |

| 1.059 | 6.5059 | 1.591 | 0.504 | 0.241 | 8.140 | 18.551 | 0.477 | 0.664 | 0.525 | |||

| MedAlpaca [52] | 7B | × | 1.554 | 30.710 | 6.143 | 1.906 | 0.819 | 12.729 | 11.865 | 0.526 | 0.464 | 0.484 |

| 3.031 | 30.795 | 7.107 | 2.408 | 1.107 | 14.761 | 15.711 | 0.507 | 0.518 | 0.505 | |||

| Mistral [53] | 7B | × | 3.261 | 27.806 | 6.068 | 1.463 | 0.458 | 16.132 | 21.999 | 0.558 | 0.444 | 0.485 |

| ← | 3.547 | 30.851 | 7.178 | 1.698 | 0.493 | 17.493 | 22.347 | 0.543 | 0.597 | 0.558 | ||

| LLaMA [2] | 7B | 0.807 | 7.575 | 1.265 | 0.337 | 0.128 | 10.016 | 17.194 | 0.495 | 0.589 | 0.519 | |

| 0.966 | 7.790 | 1.525 | 0.423 | 0.178 | 10.237 | 18.344 | 0.478 | 0.625 | 0.535 | |||

| LLaMA2 [54] | 7B | × | 1.298 | 14.929 | 2.717 | 0.531 | 0.132 | 12.016 | 19.958 | 0.481 | 0.588 | 0.519 |

| ← | 3.579 | 24.798 | 5.826 | 1.638 | 0.693 | 16.850 | 23.950 | 0.512 | 0.561 | 0.528 | ||

| LLaMA [2] | 13B | × | 0.810 | 6.824 | 1.393 | 0.331 | 0.113 | 8.959 | 16.647 | 0.486 | 0.452 | 0.459 |

| 0.973 | 8.295 | 1.521 | 0.439 | 0.197 | 9.813 | 18.073 | 0.498 | 0.465 | 0.463 | |||

| LLaMA2 [54] | 13B | × | 2.940 | 23.536 | 5.173 | 1.390 | 0.441 | 15.053 | 21.938 | 0.531 | 0.564 | 0.538 |

| 3.650 | 25.080 | 6.148 | 1.728 | 0.666 | 16.646 | 24.015 | 0.533 | 0.571 | 0.543 | |||

| ChatGPT [1] | 175B | × | 3.594 | 28.453 | 6.371 | 1.656 | 0.556 | 16.791 | 23.146 | 0.526 | 0.603 | |

| 4.409 | 31.625 | 7.570 | 2.107 | 0.760 | 17.344 | 24.337 | 0.531 | 0.615 | 0.553 0.564 |

表 IV 使用不同大语言模型生成可靠报告的评估结果

| 模型 | 规模 | 可靠 | C-BLEU | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L | METEOR | PRE | REC | F1 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ChatGLM2 [45] | 6B | × | 2.073 | 16.999 | 3.426 | 0.926 | 0.313 | 14.119 | 23.008 | 0.518 | 0.632 | 0.553 |

| ← | 3.236 | 25.172 | 5.268 | 1.461 | 0.566 | 15.559 | 23.397 | 0.501 | 0.639 | 0.555 | ||

| PMC-LLaMA [51] | 7B | × | 1.709 | 15.003 | 2.432 | 0.748 | 0.313 | 13.983 | 20.590 | 0.492 | 0.702 | 0.532 |

| 1.059 | 6.5059 | 1.591 | 0.504 | 0.241 | 8.140 | 18.551 | 0.477 | 0.664 | 0.525 | |||

| MedAlpaca [52] | 7B | × | 1.554 | 30.710 | 6.143 | 1.906 | 0.819 | 12.729 | 11.865 | 0.526 | 0.464 | 0.484 |

| 3.031 | 30.795 | 7.107 | 2.408 | 1.107 | 14.761 | 15.711 | 0.507 | 0.518 | 0.505 | |||

| Mistral [53] | 7B | × | 3.261 | 27.806 | 6.068 | 1.463 | 0.458 | 16.132 | 21.999 | 0.558 | 0.444 | 0.485 |

| ← | 3.547 | 30.851 | 7.178 | 1.698 | 0.493 | 17.493 | 22.347 | 0.543 | 0.597 | 0.558 | ||

| LLaM |