Towards Unified Conversational Recommender Systems via Knowledge-Enhanced Prompt Learning

基于知识增强提示学习的统一对话推荐系统研究

ABSTRACT

摘要

Conversational recommender systems (CRS) aim to proactively elicit user preferences and recommend high-quality items through natural language conversations. Typically, a CRS consists of a recommendation module that predicts preferred items for users and a conversation module that generates appropriate responses. To develop an effective CRS, it is essential to seamlessly integrate the two modules. Existing works either design semantic alignment strategies or share knowledge resources and representations between the two modules. However, these approaches still rely on different architectures or techniques to develop the two modules, which makes effective module integration difficult.

对话推荐系统 (Conversational Recommender System, CRS) 旨在通过自然语言对话主动获取用户偏好并推荐高质量物品。通常,CRS由推荐模块和对话模块组成:推荐模块预测用户偏好的物品,对话模块生成合适的回复。为了构建高效的CRS,必须无缝整合这两个模块。现有研究要么设计语义对齐策略,要么在两个模块间共享知识资源和表征。然而,这些方法仍依赖不同架构或技术来开发两个模块,导致难以实现有效的模块集成。

To address this problem, we propose a unified CRS model named UniCRS based on knowledge-enhanced prompt learning. Our approach unifies the recommendation and conversation subtasks into the prompt learning paradigm, and utilizes knowledge-enhanced prompts based on a fixed pre-trained language model (PLM) to fulfill both subtasks in a unified approach. In the prompt design, we include fused knowledge representations, task-specific soft tokens, and the dialogue context, which can provide sufficient contextual information to adapt the PLM for the CRS task. Besides, for the recommendation subtask, we also incorporate the generated response template as an important part of the prompt, to enhance the information interaction between the two subtasks. Extensive experiments on two public CRS datasets have demonstrated the effectiveness of our approach. Our code is publicly available at the link: https://github.com/RUCAIBox/UniCRS.

为解决这一问题,我们提出了一种基于知识增强提示学习的统一CRS模型UniCRS。该方法将推荐和对话子任务统一到提示学习范式中,并利用基于固定预训练语言模型(PLM)的知识增强提示,以统一方式完成这两个子任务。在提示设计中,我们融合了知识表示、任务特定的软token以及对话上下文,这些都能为PLM适应CRS任务提供充分的上下文信息。此外,针对推荐子任务,我们还将生成的响应模板作为提示的重要组成部分,以增强两个子任务间的信息交互。在两个公开CRS数据集上的大量实验证明了我们方法的有效性。代码已开源:https://github.com/RUCAIBox/UniCRS。

CCS CONCEPTS

CCS概念

• Information systems $\rightarrow$ Recommender systems.

• 信息系统 $\rightarrow$ 推荐系统

KEYWORDS

关键词

Conversational Recommender System; Pre-trained Language Model; Prompt Learning

会话推荐系统;预训练语言模型;提示学习

ACM Reference Format:

ACM 参考文献格式:

Xiaolei Wang, Kun Zhou, Ji-Rong Wen, and Wayne Xin Zhao. 2022. Towards Unified Conversational Recommender Systems via Knowledge-Enhanced Prompt Learning. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD ’22), August 14–18, 2022, Washington, DC, USA. ACM, New York, NY, USA, 9 pages. https://doi.org/10.1145/3534678.3539382

Xiaolei Wang、Kun Zhou、Ji-Rong Wen和Wayne Xin ZhaoB. 2022. 基于知识增强提示学习的统一对话推荐系统研究. 见: 第28届ACM SIGKDD知识发现与数据挖掘会议论文集 (KDD '22), 2022年8月14–18日, 美国华盛顿特区. ACM, 美国纽约, 9页. https://doi.org/10.1145/3534678.3539382

1 INTRODUCTION

1 引言

With the widespread adoption of intelligent assistants, conversational recommender systems (CRSs) have become an emerging research topic; they provide recommendation services to users through natural language conversations [5, 15]. From a functional perspective, a CRS should fulfill two major subtasks: a recommendation subtask that predicts items from a candidate set for users, and a conversation subtask that generates appropriate questions or responses.

随着智能助手的普及,对话式推荐系统(CRS)已成为新兴研究课题,它通过自然语言对话为用户提供推荐服务[5, 15]。从功能角度看,CRS需要完成两个核心子任务:推荐子任务(从候选集中预测用户可能喜欢的物品)和对话子任务(生成恰当的问题或回复)。

To fulfill these two subtasks, existing methods [4, 16, 35] usually set up a separate module for each subtask, namely the recommendation module and the conversation module. Since the two subtasks are highly coupled, it has been widely recognized that a capable CRS should seamlessly integrate these two modules [4, 16, 30, 35] in order to share useful features or knowledge between them. One line of work incorporates shared knowledge resources (e.g., knowledge graphs [4] and reviews [22]) and their representations to enhance the semantic interaction. Another line of work designs special representation alignment strategies, such as pre-training tasks and regularization terms (e.g., mutual information maximization [35] and contrastive learning [38]), to guarantee the semantic consistency of the two modules.

为了实现这两个子任务,现有方法[4, 16, 35]通常为每个子任务设置两个独立模块,即推荐模块和对话模块。由于这两个子任务高度耦合,学界普遍认为一个合格的对话推荐系统(CRS)应当能够无缝整合这两个模块[4, 16, 30, 35],以实现特征或知识的共享。一类研究通过引入共享知识资源(如知识图谱[4]和用户评论[22])及其表征来增强语义交互;另一类研究则设计特殊的表征对齐策略(如预训练任务和正则化项),例如互信息最大化[35]和对比学习[38],来确保两个模块的语义一致性。

Table 1: An illustrative case of the semantic inconsistency between the recommendation and conversation modules in existing CRS methods. The mentioned movies and entities are marked in italic blue and red, respectively. Compared with the baseline, the generated response of our model is more consistent with the predicted recommendation.

| Speaker | Utterance |
| --- | --- |
| USER | Hello! I am looking for some movies. |
| HUMAN | What kinds of movie do you like? I like animated movies such as Frozen (2013). |
| USER | I do not like animated films. I would love to see a movie like Pretty Woman (1990) starring Julia Roberts. Know any that are similar? |
| KGSF | Recommendation: Frozen 2 (2019). Response: Pretty Woman (1990) is a great movie. |
| OURS | Recommendation: My Best Friend's Wedding (1997). Response: Have you seen My Best Friend's Wedding (1997)? Julia Roberts also stars in it. |
| HUMAN | Pretty Woman (1990) was a good one. If you are in it for Julia Roberts you can try Runaway Bride (1999). |

表 1: 现有对话推荐系统(CRS)方法中推荐模块与会话模块语义不一致的典型案例。提及的电影和实体分别用斜体蓝色和红色标注。相比基线模型,我们的模型生成回复与预测推荐结果具有更高一致性。

| USER: HUMAN: | 你好!我想找些电影看。你喜欢什么类型的电影?我喜欢《冰雪奇缘》(2013)这类动画电影。 |

| USER: | 我不喜欢动画电影。我想看朱莉娅·罗伯茨主演的《风月俏佳人》(1990)这类电影。有类似的推荐吗? |

| KGSF: | 推荐:《冰雪奇缘2》(2019) 回复:《风月俏佳人》(1990)是部好电影。 |

| OURS: | 推荐:《我最好朋友的婚礼》(1997) 回复:你看过《我最好朋友的婚礼》(1997)吗?也是朱莉娅·罗伯茨主演的。 |

| HUMAN: | 《风月俏佳人》(1990)确实不错。如果喜欢朱莉娅·罗伯茨,你可以试试《落跑新娘》(1999)。 |

Despite the progress of existing CRS methods, the fundamental issue of semantic inconsistency between the recommendation and conversation modules has not been well addressed. Table 1 shows an inconsistent prediction from a representative CRS model, KGSF [35], which utilizes mutual information maximization to align the semantic representations. Although the recommendation module predicts the movie “Frozen 2 (2019)”, the conversation module seems to be unaware of this recommendation result and generates a mismatched response that mentions another movie, “Pretty Woman (1990)”. Even if we utilize heuristic constraints to enforce the generation of the recommended movie, this cannot fundamentally resolve the semantic inconsistency of the two modules. In essence, the problem is caused by two major issues in existing methods. First, most of these methods develop the two modules with different architectures or techniques. Even with some shared knowledge or components, it is still difficult to associate the two modules seamlessly. Second, results from one module cannot be perceived and utilized by the other. For example, there is no way to leverage the generated response when predicting the recommendation results in KGSF [35]. To summarize, the root of the semantic inconsistency lies in the different architecture designs and working mechanisms of the two modules.

尽管现有CRS方法取得了进展,但推荐模块与会话模块之间的语义不一致这一根本问题仍未得到很好解决。图1展示了代表性CRS模型KGSF[35]的预测不一致案例,该模型利用互信息最大化来对齐语义表征。虽然推荐模块预测了电影《冰雪奇缘2(2019)》,但会话模块似乎未感知该推荐结果,生成了包含另一部电影《风月俏佳人(1990)》的不匹配回复。即便采用启发式约束强制生成推荐电影,也无法从根本上解决两个模块的语义不一致问题。本质上,该问题源于现有方法的两个主要缺陷:首先,多数方法采用不同架构或技术开发两个模块,即使存在共享知识或组件,仍难以有效无缝关联;其次,模块间无法感知和利用彼此的输出结果,例如KGSF[35]在预测推荐结果时无法利用已生成的对话响应。究其根源,语义不一致源于两个模块架构设计和工作机制的差异性。

To address the above issues, we aim to develop a more effective CRS that implements both the recommendation and conversation modules in a unified manner. Our approach is inspired by the great success of pre-trained language models (PLMs) [2, 8, 12], which have been shown to be effective as a general solution to a variety of tasks, even in very different settings. In particular, the recently proposed paradigm of prompt learning [2, 8, 29] further unifies the use of PLMs on different tasks in a simple yet flexible manner. Generally speaking, prompt learning augments or extends the original input of PLMs by prepending explicit or latent tokens, which might contain demonstrations, instructions, or learnable embeddings. Such a paradigm can unify different task formats or data forms to a large extent. For CRSs, since the two subtasks aim to fulfill specific goals based on the same conversational semantics, it is feasible to develop a unified CRS approach based on prompt learning.

为解决上述问题,我们致力于开发一种更高效的CRS,以统一方式实现推荐与会话模块。该方法受预训练语言模型(PLMs) [2,8,12] 巨大成功的启发,这些模型已被证明可作为通用解决方案适用于多种任务,即使在差异显著的情境中。特别是最新提出的提示学习(prompt learning)范式 [2,8,29],以简洁灵活的方式进一步统一了PLMs在不同任务中的应用。简言之,提示学习通过扩充或延伸原始输入...

通过在显式或潜在token前添加可能包含演示、指令或可学习嵌入的内容,PLMs能够很大程度上统一不同的任务格式或数据形式。对于CRS而言,由于两个子任务都基于相同的对话语义来实现特定目标,因此基于提示学习开发统一的CRS方法是可行的。

To this end, in this paper, we propose a novel unified CRS model based on knowledge-enhanced prompt learning, namely UniCRS. For the base PLM, we utilize DialoGPT [33] since it has been pre-trained on a large-scale dialogue corpus. In our approach, the base PLM is fixed in solving the two subtasks, without fine-tuning or continual pre-training. To better inject the task knowledge into the base PLM, we first design a semantic fusion module that can capture the semantic association between words from dialogue texts and entities from knowledge graphs (KGs). The major technical contribution of our approach lies in that we formulate the two subtasks in the form of prompt learning, and design specific prompts for each subtask. In our prompt design, we include the dialogue context (specific tokens), task-specific soft tokens (latent vectors), and fused knowledge representations (latent vectors), which can provide sufficient semantic information about the dialogue context, task instructions, and background knowledge. Moreover, for recommendation, we incorporate the generated response templates from the conversation module into the prompt, which can further enhance the information interaction between the two subtasks.

为此,本文提出了一种基于知识增强提示学习的新型统一CRS模型UniCRS。我们选用DialoGPT [33] 作为基础PLM (预训练语言模型),因其已在大规模对话语料上进行预训练。该方法中,基础PLM在解决两个子任务时保持固定参数,不进行微调或持续预训练。为更好地将任务知识注入基础PLM,我们首先设计了语义融合模块,用于捕捉对话文本中的词语与知识图谱(KGs)实体间的语义关联。本方法的主要技术贡献在于:以提示学习形式构建两个子任务,并为每个子任务设计特定提示模板。在提示设计中,我们整合了对话上下文(特定token)、任务相关软token(潜在向量)和融合知识表征(潜在向量),这些要素能充分提供对话上下文、任务指令和背景知识的语义信息。此外,在推荐任务中,我们将对话模块生成的响应模板融入提示,进一步加强两个子任务间的信息交互。

To validate the effectiveness of our approach, we conduct experiments on two public CRS datasets. Experimental results show that our UniCRS outperforms several competitive methods on both the recommendation and conversation subtasks, especially when training data is limited. Our main contributions are summarized as follows: (1) To the best of our knowledge, this is the first time that a unified CRS has been developed in a general prompt learning way. (2) Our approach formulates the subtasks of CRS into a unified form of prompt learning, and designs task-specific prompts with corresponding optimization methods. (3) Extensive experiments on two public CRS datasets have demonstrated the effectiveness of our approach in both the recommendation and conversation tasks.

为验证我们方法的有效性,我们在两个公开的CRS数据集上进行了实验。实验结果表明,UniCRS在推荐和对话子任务上均优于多种竞争方法,尤其在训练数据有限时表现更优。我们的主要贡献可总结为:(1) 据我们所知,这是首次以通用提示学习 (prompt learning) 方式开发统一CRS的尝试。(2) 本方法将CRS子任务统一为提示学习形式,并设计了任务特定的提示及对应优化方法。(3) 在两个公开CRS数据集上的大量实验证明了该方法在推荐和对话任务中的有效性。

2 RELATED WORK

2 相关工作

Our work is related to the following two research directions, namely conversational recommendation and prompt learning.

我们的工作涉及以下两个研究方向,即对话式推荐和提示学习。

2.1 Conversational Recommendation

2.1 对话式推荐

With the rapid development of dialogue systems [3, 33], conversational recommender systems (CRSs) have emerged as a research topic, which aim to provide accurate recommendations through conversational interactions with users [5, 7, 28]. A major category of CRS studies relies on pre-defined actions (e.g., intent slots or item attributes) to interact with users [5, 28, 36]. These studies focus on accomplishing the recommendation task within as few turns as possible, and adopt the multi-armed bandit model [5, 31] or reinforcement learning [28] to find the optimal interaction strategy. However, methods in this category mostly rely on pre-defined actions and templates to generate responses, which largely limits their usage in various scenarios. Another category of CRS studies aims to generate both accurate recommendations and human-like responses [10, 15, 37]. To achieve this, these works usually devise a recommendation module and a conversation module to implement the two functions, respectively. However, such a design raises the issue of semantic inconsistency, and it is essential to seamlessly integrate the two modules as a system. Existing works mostly either share the knowledge resources and their representations [4, 22], or design semantic alignment pre-training tasks [35] and regularization terms [38]. However, effective integration of the two modules remains difficult due to their different architectures or techniques. For example, it has been pointed out that the generated responses from the conversation module do not always match the predicted items from the recommendation module [18]. Our work follows the latter category and adopts prompt learning based on pre-trained language models (PLMs) to unify the recommendation and conversation subtasks. In this way, the two subtasks can be formulated in a unified manner with elaborately designed prompts.

随着对话系统 [3, 33] 的快速发展,会话推荐系统 (Conversational Recommender Systems, CRSs) 已成为一个研究热点,其目标是通过与用户的对话交互提供精准推荐 [5, 7, 28]。一类主要的 CRS 研究依赖于预定义动作 (如意图槽或物品属性) 与用户交互 [5, 28, 36],重点关注以尽可能少的对话轮次完成推荐任务,采用多臂老虎机模型 [5, 31] 或强化学习 [28] 来寻找最优交互策略。但这类方法大多依赖预定义动作和模板生成回复,极大限制了其应用场景的多样性。另一类 CRS 研究则致力于同时生成精准推荐和拟人化回复 [10, 15, 37],通常设计推荐模块和对话模块分别实现这两个功能。然而这种设计会引发语义不一致问题,关键在于如何将两个模块无缝整合为统一系统。现有工作主要通过共享知识资源及其表征 [4, 22],或设计语义对齐预训练任务 [35] 与正则化项 [38] 来实现整合。但由于模块架构或技术差异,两者仍难以有效融合,例如对话模块生成的回复与推荐模块预测的物品常出现不匹配现象 [18]。本研究属于第二类方向,采用基于预训练语言模型 (Pre-trained Language Model, PLM) 的提示学习 (prompt learning) 来统一推荐和对话子任务,通过精心设计的提示 (prompt) 实现两个子任务的统一建模。

2.2 Prompt Learning

2.2 提示学习 (Prompt Learning)

Recent years have witnessed the remarkable performance of PLMs on a variety of tasks [6, 14]. Most PLMs are pre-trained with the objective of language modeling but are fine-tuned on downstream tasks with quite different objectives. To overcome the gap between pre-training and fine-tuning, prompt learning (a.k.a. prompt tuning) has been proposed [9, 19], which relies on carefully designed prompts to reformulate the downstream tasks as the pre-training task. Early works mostly incorporate manually crafted discrete prompts to guide the PLM [2, 24]. Recently, a surge of works has focused on automatically optimizing discrete prompts for specific tasks [8, 12], achieving performance comparable to manual prompts. However, these methods still rely on generative models or complex rules to control the quality of prompts. In contrast, some works propose to use learnable continuous prompts that can be directly optimized [13, 17]. On top of this, several works devise prompt pre-training tasks [9] or knowledgeable prompts [11] to improve the quality of the continuous prompts. In this work, we reformulate both the recommendation and conversation subtasks as the pre-training task of a PLM by prompt learning. In addition, to provide the PLM with task-related knowledge of CRS, we enhance the prompts with the information from an external KG and perform semantic fusion for prompt learning.

近年来,预训练语言模型(PLM)在各种任务中展现出卓越性能[6,14]。大多数PLM以语言建模为目标进行预训练,但在目标任务微调时却采用差异显著的优化目标。为弥合预训练与微调之间的鸿沟,研究者提出了提示学习(prompt learning,亦称prompt tuning)[9,19],该方法通过精心设计的提示(prompt)将下游任务重构为预训练任务形式。早期研究主要采用人工设计的离散提示来引导PLM[2,24]。近期大量工作聚焦于针对特定任务自动优化离散提示[8,12],其性能已可比拟人工提示。然而这些方法仍依赖生成式模型或复杂规则来控制提示质量。相比之下,部分研究提出直接优化可学习的连续提示[13,17]。在此基础上,一些工作设计了提示预训练任务[9]或知识增强提示[11]来提升连续提示质量。本文通过提示学习将推荐与会话子任务统一重构为PLM的预训练任务。此外,为向PLM注入对话推荐系统(CRS)相关领域知识,我们利用外部知识图谱(KG)信息增强提示表示,并通过语义融合优化提示学习。

3 PROBLEM STATEMENT

3 问题陈述

Conversational recommender systems (CRSs) aim to conduct item recommendation through multi-turn natural language conversations. At each turn, the system either makes recommendations or asks clarification questions, based on the user preferences learned so far. The process continues until the user accepts the recommended items or leaves. Typically, a CRS consists of two modules, i.e., the recommender module and the conversation module, which are responsible for the recommendation and the response generation tasks, respectively. These two modules should be seamlessly integrated to generate consistent results in order to fulfill the conversational recommendation task.

对话推荐系统 (CRS) 旨在通过多轮自然语言对话进行物品推荐。在每一轮交互中,系统会根据当前学习的用户偏好,要么进行推荐,要么提出澄清问题。该过程将持续到用户接受推荐物品或离开为止。典型的CRS包含两个模块:推荐模块和对话模块,分别负责推荐任务和回复生成任务。这两个模块需要无缝集成以生成一致的结果,从而完成对话式推荐任务。

Formally, let $u$ denote a user, $i$ denote an item from the item set $\mathcal{I}$, and $w$ denote a word from the vocabulary $\mathcal{V}$. A conversation is denoted as $C=\{s_{t}\}_{t=1}^{n}$, where $s_{t}$ denotes the utterance at the $t$-th turn, and each utterance $s_{t}=\{w_{j}\}_{j=1}^{m}$ consists of a sequence of words from the vocabulary $\mathcal{V}$.

形式上,设 $u$ 表示用户,$i$ 表示物品集 $\boldsymbol{\underbar{\boldsymbol{\jmath}}}$ 中的物品,$\boldsymbol{w}$ 表示词汇表 $\mathcal{V}$ 中的单词。对话表示为 $C={s_{t}}{t=1}^{n}$,其中 $s_{t}$ 表示第 $t$ 轮的语句,每个语句 $s_{t}={w_{j}}{j=1}^{m}$ 由词汇表 $_\mathcal{V}$ 中的单词序列构成。

With the above definitions, the task of conversational recommendation is defined as follows. At the $t$-th turn, given the dialogue history $C=\{s_{j}\}_{j=1}^{t-1}$ and the item set $\mathcal{I}$, the system should (1) select a set of candidate items $\mathcal{I}_{t}$ from the entire item set to recommend, and (2) generate the response $R=s_{t}$ that includes the items in $\mathcal{I}_{t}$. Note that $\mathcal{I}_{t}$ might be empty when there is no need for recommendation.

基于上述定义,对话式推荐任务定义如下:在第$t$轮对话时,给定对话历史$C={s_{j}}{j=1}^{t-1}$和物品集合$\boldsymbol{\mathit{I}}$,系统需要:(1) 从整个物品集合中筛选候选推荐项$\mathcal{T}{t}$;(2) 生成包含$\mathcal{T}{t}$中物品的响应$R=s_{t}$。需注意当无需推荐时,$\mathcal{T}_{t}$可能为空集。
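The input and output of one system turn can be sketched as a small data structure. This is only an illustration of the task definition: the names `Turn` and `is_consistent` are hypothetical, and the substring check is a crude stand-in for requirement (2), namely that the response mentions the recommended items. The example turns reuse the utterances from Table 1.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Turn:
    """One system turn: dialogue history in, candidate items and response out."""
    history: List[str]                                   # C = {s_j}, j = 1..t-1
    candidates: List[str] = field(default_factory=list)  # I_t, may be empty
    response: str = ""                                   # R = s_t


def is_consistent(turn: Turn) -> bool:
    """Crude check (an assumption): every recommended item appears in the response."""
    return all(item in turn.response for item in turn.candidates)


history = [
    "Hello! I am looking for some movies.",
    "What kinds of movie do you like?",
    "I would love to see a movie like Pretty Woman (1990) starring Julia Roberts.",
]
kgsf = Turn(history, ["Frozen 2 (2019)"], "Pretty Woman (1990) is a great movie.")
ours = Turn(
    history,
    ["My Best Friend's Wedding (1997)"],
    "Have you seen My Best Friend's Wedding (1997)? Julia Roberts also stars in it.",
)
no_recommendation = Turn(history, [], "What kinds of movie do you like?")

for name, turn in [("KGSF", kgsf), ("OURS", ours), ("no rec", no_recommendation)]:
    print(name, is_consistent(turn))
```

A turn with an empty candidate set counts as consistent here, which matches the note that $\mathcal{I}_{t}$ may be empty.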

4 APPROACH

4 方法

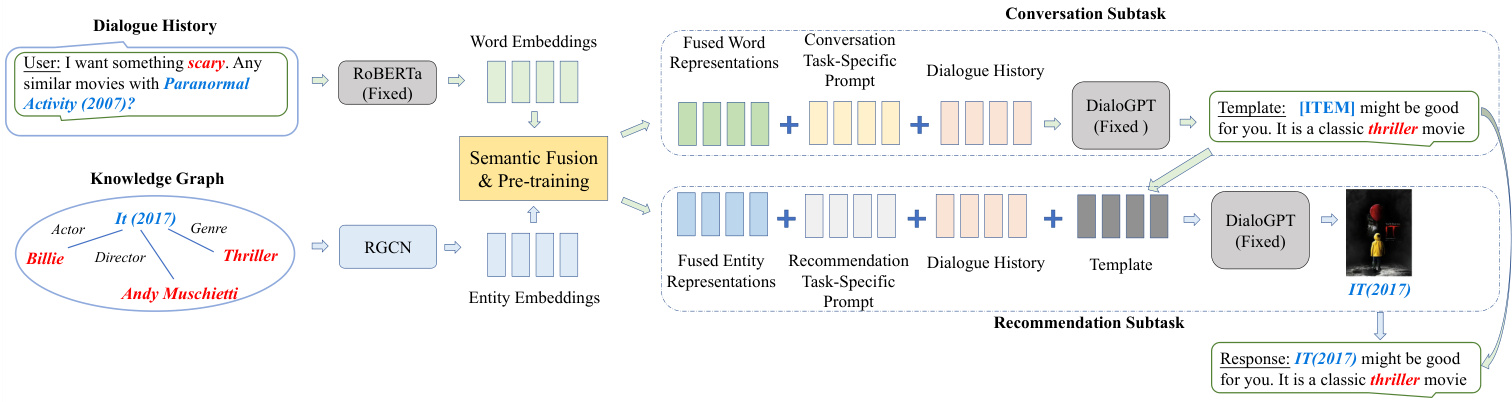

In this section, we present a unified CRS approach with knowledge-enhanced prompt learning based on a PLM, namely UniCRS. We first give an overview of our approach, then discuss how to fuse semantics from words and entities as part of the prompts, and finally present the knowledge-enhanced prompting approach to the CRS task. The overall architecture of our proposed model is presented in Figure 1.

在本节中,我们提出了一种基于预训练语言模型(PLM)、融合知识增强提示学习的统一对话推荐系统(CRS)方法——UniCRS。首先概述方法框架,接着阐述如何融合词语与实体语义作为提示模板组成部分,最后介绍面向CRS任务的知识增强提示学习方法。我们提出的模型整体架构如图1所示。

4.1 Overview of the Approach

4.1 方法概述

Previous studies on CRS [4, 15, 35] usually develop specific modules for the recommendation and conversation subtasks respectively, and they need to connect the two modules in order to fulfill the task goal of CRS. Different from existing CRS methods, we aim to develop a unified approach with prompt learning based on PLM.

以往关于CRS的研究[4,15,35]通常分别为推荐和对话子任务开发特定模块,并需要连接这两个模块以实现CRS的任务目标。与现有CRS方法不同,我们旨在基于PLM开发一种采用提示学习(prompt learning)的统一方法。

The Base PLM. In our approach, we take DialoGPT [33] as our base PLM. DialoGPT adopts a Transformer-based autoregressive architecture and is pre-trained on a large-scale dialogue corpus extracted from Reddit. It has been shown that DialoGPT can generate coherent and informative responses, making it a suitable base model for the CRS task [18, 29]. Let $f(\cdot\mid\Theta_{plm})$ denote the base PLM parameterized by $\Theta_{plm}$, taking a token sequence as input and producing contextualized representations for each token. Unless otherwise specified, we will use the representation of the last token from DialoGPT for subsequent prediction or generation tasks.

基础PLM。在我们的方法中,采用DialoGPT [33]作为基础PLM。DialoGPT采用基于Transformer的自回归架构,并在从Reddit提取的大规模对话语料库上进行预训练。研究表明,DialoGPT能够生成连贯且信息丰富的响应,使其成为CRS任务的合适基础模型 [18, 29]。设 $f(\cdot\mid\Theta_{p l m})$ 表示由 $\Theta_{p l m}$ 参数化的基础PLM,它以token序列作为输入,并为每个token生成上下文表示。除非另有说明,我们将使用DialoGPT最后一个token的表示进行后续预测或生成任务。

A Unified Prompt-Based Approach to CRS. Given the dialogue history $\{s_{j}\}_{j=1}^{t-1}$ at the $t$-th turn, we concatenate the utterances into a text sequence $C=\{w_{k}\}_{k=1}^{n_{W}}$. The basic idea is to encode the dialogue history $C$, obtain its contextualized representations, and solve the recommendation and conversation subtasks via generation (i.e., generating either the recommended items or the response utterance) with the base PLM. In this way, the two subtasks can be fulfilled in a unified approach. However, since the base PLM is fixed, it is difficult to match the performance of fine-tuning because the model lacks task adaptation. Therefore, we adopt the prompting approach, which augments the input with elaborately designed or learned prompt tokens, denoted by $\{p_{k}\}_{k=1}^{n_{P}}$ ($n_{P}$ is the number of prompt tokens). In practice, prompt tokens can be either explicit tokens or latent vectors. It has been shown that prompting is an effective paradigm to leverage the knowledge of PLMs to solve various tasks without fine-tuning [2, 8].

基于提示的统一CRS方法。给定第$t$轮对话历史${s_{j}}{j=1}^{t-1}$,我们将每个话语连接成文本序列𝐶 = {𝑤𝑘 }𝑘=𝑊1。基本思路是编码对话历史$C$,获取其上下文表征,并通过生成(即生成推荐项或响应话语)利用基础PLM (预训练语言模型) 解决推荐和对话子任务。通过这种方式,两个子任务能以统一方法完成。但由于基础PLM固定,缺乏任务适配性,其性能往往难以达到微调效果。因此我们采用提示方法,通过精心设计或学习的提示token(记为${p_{k}}{k=1}^{n_{P}}$,其中$\overset{\cdot}{n}\overset{\cdot}{P}$为提示token数量)来增强模型。实际应用中,提示token既可以是显式token也可以是潜在向量。研究表明,提示是一种无需微调即可利用PLM知识解决各类任务的有效范式[2, 8]。

Prompt-augmented Dialogue Context. By incorporating the prompts, the original dialogue history $C$ can be extended to a longer sequence (called the context sequence), denoted as $\widetilde{C}$:

提示增强的对话上下文。通过整合提示词,原始对话历史 $C$ 可扩展为更长的序列(称为上下文序列),记作 𝐶:

Figure 1: The overview of the proposed framework UniCRS. Blocks in grey indicate that their parameters are frozen, while other parameters are tunable. We first perform pre-training to fuse semantics from both words and entities, then prompt the PLM to generate the response template and use the template as part of the prompt for recommendation. Finally, the recommended items are filled into the template as a complete response.

图 1: 提出的UniCRS框架概述。灰色模块表示其参数被冻结,其余参数可调。我们首先进行预训练以融合单词和实体的语义,然后提示PLM生成响应模板,并将该模板作为推荐提示的一部分。最后,将推荐项填入模板形成完整响应。

$$
\widetilde{C}\rightarrow p_{1},\dots,p_{n_{P}},w_{1},\dots,w_{n_{W}}. \tag{1}
$$

As before, we utilize the base PLM to obtain contextualized representations of the context sequence for solving the recommendation and conversation subtasks. To better adapt to the task characteristics, we can construct and learn different prompts, and obtain the corresponding context sequences, denoted as $\widetilde{C}_{rec}$ for recommendation and $\widetilde{C}_{con}$ for conversation (the latter is written $\widetilde{C}_{gen}$ in Section 4.3.1).

与之前一样,我们利用基础PLM获取上下文序列的语境化表征,以解决推荐和对话子任务。为了更好地适应任务特性,我们可以构建并学习不同的提示,得到对应的上下文序列,分别表示为$\widetilde{C}{rec}$(推荐)和$\widetilde{C}_{con}$(对话)。
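The construction of the context sequence can be illustrated with a minimal sketch. It assumes that prompts are latent vectors prepended to frozen token embeddings; the toy embedding table, the dimension, and the helper names `embed_tokens` and `build_context` are hypothetical and are not taken from the released code.

```python
from typing import Dict, List

Vector = List[float]


def embed_tokens(tokens: List[str], table: Dict[str, Vector], dim: int) -> List[Vector]:
    """Look up a frozen embedding for each explicit token (zeros if unknown)."""
    return [table.get(token, [0.0] * dim) for token in tokens]


def build_context(prompts: List[Vector], token_vectors: List[Vector]) -> List[Vector]:
    """Prepend the n_P prompt vectors to the n_W token vectors."""
    return prompts + token_vectors


dim = 4
table = {"i": [0.1] * dim, "like": [0.2] * dim, "comedies": [0.3] * dim}  # toy values
history = ["i", "like", "comedies"]       # w_1 ... w_{n_W}

p_rec = [[0.5] * dim, [0.6] * dim]        # soft prompts for recommendation (learnable)
p_con = [[-0.5] * dim]                    # soft prompts for conversation (learnable)

c_rec = build_context(p_rec, embed_tokens(history, table, dim))
c_con = build_context(p_con, embed_tokens(history, table, dim))
print(len(c_rec), len(c_con))
```

Both sequences share the same dialogue tokens and differ only in the prepended prompts, which is what lets a single frozen PLM serve both subtasks.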

To implement such a unified approach, we identify two major problems to solve: (1) how to fuse conversational semantics and related knowledge semantics in order to adapt the base PLM for CRS (Section 4.2), and (2) how to design and learn suitable prompts for the recommendation and conversation subtasks (Section 4.3). In what follows, we will introduce the two parts in detail.

为实现这一统一方法,我们明确了两个待解决的核心问题:(1) 如何融合对话语义与相关知识语义,使基础预训练语言模型(PLM)适配对话推荐系统(CRS)(第4.2节);(2) 如何为推荐和对话子任务设计并学习合适的提示(第4.3节)。下文将详细阐述这两个部分。

4.2 Semantic Fusion for Prompt Learning

4.2 提示学习的语义融合

Since DialoGPT is pre-trained on a general dialogue corpus, it lacks the specific capacity for the CRS task and cannot be directly used. Following previous studies [4, 35], we incorporate KGs as the task-specific knowledge resources, since they contain useful knowledge about entities and items mentioned in the dialogue. However, it has been found that there is a large semantic gap between the semantic spaces of dialogues and KGs [35, 38]. We need to first fuse the two semantic spaces for effective knowledge alignment and enrichment. Specifically, the purpose of this step is to fuse the token and entity embeddings from different encoders.

由于DialoGPT是在通用对话语料库上预训练的,它缺乏针对CRS任务的特有能力,无法直接使用。遵循先前研究[4, 35],我们将知识图谱(KG)作为任务特定的知识资源,因为它包含对话中提到的实体和项目的有用知识。然而,研究发现对话与知识图谱的语义空间之间存在较大语义鸿沟[35, 38]。我们需要先融合这两个语义空间以实现有效的知识对齐和丰富。具体而言,此步骤的目的是融合来自不同编码器的token和实体嵌入。

Encoding Word Tokens and KG Entities. Given a dialogue history $C$, we first separately encode the dialogue words and the KG entities that appear in $C$ into word embeddings and entity embeddings. To complement our base PLM DialoGPT (a unidirectional decoder), we employ another fixed PLM, RoBERTa [20] (a bidirectional encoder), to derive the word embeddings. The contextualized token representations derived from the fixed encoder RoBERTa are concatenated into a word embedding matrix, i.e., $\mathbf{T}=[h_{1}^{T};\dots;h_{n_{W}}^{T}]$. For entity embeddings, following previous works [4, 35], we first perform entity linking based on an external KG, DBpedia [1], and then obtain the corresponding entity embeddings via a relational graph neural network (RGCN) [25], which can model the relational semantics through information propagation and aggregation over the KG. Similarly, the derived entity embedding matrix is denoted as $\mathbf{E}=[h_{1}^{E};\dots;h_{n_{E}}^{E}]$, where $n_{E}$ is the number of entities mentioned in the dialogue history.

编码词Token与知识图谱实体。给定对话历史$C$,我们首先分别将$C$中出现的对话词汇和知识图谱实体编码为词嵌入和实体嵌入。为补充基础单向解码器PLM DialoGPT,我们采用另一个固定参数的双向编码器PLM RoBERTa [20]生成词嵌入。从固定编码器RoBERTa获取的上下文Token表征被拼接为词嵌入矩阵$\mathbf{T}=[h_{1}^{T};\dots;h_{n_{W}}^{T}]$。对于实体嵌入,遵循先前研究[4,35],我们首先基于外部知识图谱DBpedia [1]进行实体链接,随后通过关系图神经网络(RGCN) [25]获取对应实体嵌入,该网络能通过知识图谱上的信息传播与聚合建模关系语义。类似地,生成的实体嵌入矩阵表示为$\mathbf{E}=[h_{1}^{E};\dots;{\dot{h}}{n_{E}}^{E}]$,其中$n_{E}$为对话历史中提及的实体数量。

Word-Entity Semantic Fusion. In order to bridge the semantic gap between words and entities, we use a cross interaction mechanism to associate the two kinds of semantic representations via a bilinear transformation:

词-实体语义融合。为了弥合词语与实体之间的语义鸿沟,我们采用交叉交互机制,通过双线性变换将两种语义表征进行关联:

$$
\mathbf{A}=\mathbf{T}^{\top}\mathbf{W}\mathbf{E}, \tag{2}
$$

$$
\widetilde{\mathbf{T}}=\mathbf{T}+\mathbf{E}\mathbf{A}, \tag{3}
$$

$$
\widetilde{\mathbf{E}}=\mathbf{E}+\mathbf{T}\mathbf{A}^{\top}, \tag{4}
$$

where $\mathbf{A}$ is the affinity matrix between the two representations, $\mathbf{W}$ is the transformation matrix, $\widetilde{\mathbf{T}}$ denotes the fused word representations, and $\widetilde{\mathbf{E}}$ denotes the fused entity representations. Here we use the bilinear transformation between $\mathbf{T}$ and $\mathbf{E}$ for simplicity, and leave the exploration of more complex interaction mechanisms for future work.

其中A表示两种表征间的亲和矩阵,W是变换矩阵,$\widetilde{\sf T}$为融合后的词表征,$\widetilde{\mathbf E}$为融合后的实体表征。此处为简化计算,我们采用$\boldsymbol{\mathrm{T~}}$与E之间的双线性变换,更复杂的交互机制留待未来研究。
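The cross-interaction can be sketched in plain Python on toy matrices. One assumption needs stating loudly: the sketch stores one embedding per row ($\mathbf{T}$ is $n_{W}\times d$, $\mathbf{E}$ is $n_{E}\times d$, $\mathbf{W}$ is $d\times d$), so the products are arranged as $\mathbf{T}\mathbf{W}\mathbf{E}^{\top}$, $\mathbf{A}\mathbf{E}$, and $\mathbf{A}^{\top}\mathbf{T}$ to make the shapes conform. This layout is a choice made for the illustration and may differ from the authors' implementation; the helper names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def fuse(T: Matrix, E: Matrix, W: Matrix) -> Tuple[Matrix, Matrix, Matrix]:
    """Bilinear word-entity fusion with row-wise embeddings (assumed layout)."""
    A = matmul(matmul(T, W), transpose(E))     # affinity, n_W x n_E
    T_fused = add(T, matmul(A, E))             # words enriched with entities, n_W x d
    E_fused = add(E, matmul(transpose(A), T))  # entities enriched with words, n_E x d
    return A, T_fused, E_fused


T = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 word embeddings, d = 2
E = [[1.0, 2.0], [0.0, 1.0]]              # 2 entity embeddings
W = [[1.0, 0.0], [0.0, 1.0]]              # identity, so A[i][j] is a dot product

A, T_fused, E_fused = fuse(T, E, W)
print(A)        # [[1.0, 0.0], [2.0, 1.0], [3.0, 1.0]]
print(T_fused)  # [[2.0, 2.0], [2.0, 6.0], [4.0, 8.0]]
print(E_fused)  # [[5.0, 7.0], [1.0, 3.0]]
```

Each fused matrix keeps the shape of its input, so the residual additions are well defined and the fused vectors can be used wherever the originals were.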

Pre-training the Fusion Module. After semantic fusion, we can establish the semantic association between words and entities. However, such a module involves additional learnable parameters, denoted as $\Theta_{fuse}$. To better optimize the parameters of the fusion module, we propose a prompt-based pre-training approach that leverages the self-supervision signals from the dialogues. Specifically, we prepend the fused entity representations $\widetilde{\mathbf{E}}$ (Eq. 4) and append the response to the dialogue context, namely $\widetilde{C}_{pre}=[\widetilde{\mathbf{E}};C;R]$, where we use the bold font to denote the latent vectors ($\widetilde{\mathbf{E}}$) and the plain font to denote the explicit tokens ($C$, $R$). For this pre-training task, we simply utilize the prompt-augmented context sequence $\widetilde{C}_{pre}$ to predict the entities appearing in the response. The prediction probability of the entity $e$ is formulated as:

预训练融合模块。经过语义融合后,我们可以建立词语与实体间的语义关联。但该模块涉及额外可学习参数 $\Theta_{f u s e}$ 。为更好地优化融合模块参数,我们提出基于提示词(prompt)的预训练方法,利用对话中的自监督信号。具体而言,我们将融合后的实体表示E(式4)前置,并将响应追加至对话上下文,即 $\widetilde{C}{p r e}=[\widetilde{\mathbf{E}};C;\bar{R]}$ ,其中粗体表示潜在向量(E),普通字体表示显式token $(C,R)$ 。在此预训练任务中,我们直接使用提示词增强的上下文序列 $\widetilde{C}_{p r e}$ 来预测响应中出现的实体。实体 $e$ 的预测概率公式为:

$$
\Pr(e\mid\widetilde{C}_{pre})=\operatorname{Softmax}(h_{u}\cdot h_{e}), \tag{5}
$$

where $h_{u}=\mathrm{Pooling}[f(\widetilde{C}_{pre}\mid\Theta_{plm};\Theta_{fuse})]$ is the learned representation of the context, obtained by pooling the contextualized representations of all the tokens in $\widetilde{C}_{pre}$, and $h_{e}$ is the fused entity representation for the entity $e$. Note that only the parameters of the fusion module $\Theta_{fuse}$ need to be optimized, while the parameters of the base PLM $\Theta_{plm}$ are fixed. We adopt the cross-entropy loss for the pre-training task.

其中 $h_{u}=\mathrm{Pooling}[f(\widetilde{C}{p r e}\mid\Theta_{p l m};\Theta_{f u s e})]$ 是通过对 $\bar{C}{p r e}$ 中所有token的上下文表征进行池化学习到的上下文表示,$\pmb{h}{e}$ 是实体 $e$ 的融合实体表征。注意仅需优化融合模块参数 $\Theta_{f u s e}$,而基础PLM的参数 $\Theta_{p l m}$ 保持固定。我们采用交叉熵损失作为预训练任务目标。
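The entity prediction objective can be sketched as follows. The pooling operator is not specified in the text, so mean pooling is assumed here; the hidden states and entity vectors are toy values, and the function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def mean_pool(hidden: List[Vector]) -> Vector:
    """Average the token representations (mean pooling is an assumption)."""
    n = len(hidden)
    return [sum(column) / n for column in zip(*hidden)]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(score - top) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def entity_probs(hidden: List[Vector], entity_embs: List[Vector]) -> List[float]:
    """Pr(e | context) = Softmax(h_u . h_e) over all candidate entities."""
    h_u = mean_pool(hidden)
    scores = [sum(a * b for a, b in zip(h_u, h_e)) for h_e in entity_embs]
    return softmax(scores)


def cross_entropy(probs: List[float], target: int) -> float:
    """Negative log-probability of the entity that appears in the response."""
    return -math.log(probs[target])


hidden = [[1.0, 0.0], [0.0, 1.0]]                   # toy PLM outputs for the context
entities = [[2.0, 2.0], [0.0, 0.0], [-2.0, -2.0]]   # toy fused entity embeddings

probs = entity_probs(hidden, entities)
loss = cross_entropy(probs, target=0)
print(probs, loss)
```

In training, only the fusion parameters that produce the entity vectors would receive gradients; the PLM that produces `hidden` stays frozen.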

After semantic fusion, we obtain the fused knowledge representations for words and entities from the dialogue history, namely $\widetilde{\mathbf{T}}$ (Eq. 3) and $\widetilde{\mathbf{E}}$ (Eq. 4), respectively. These representations are subsequently used as part of the prompts, as shown in Section 4.3.

经过语义融合后,我们分别从对话历史中获得了词语和实体的融合知识表示,即 T (式 3) 和 $\widetilde{\mathbf E}$ (式 4)。这些表示随后被用作提示(prompt)的一部分,如第4.3节所示。

4.3 Subtask-specific Prompt Design

4.3 子任务特定提示设计

Though the base PLM is fixed without fine-tuning, we can design specific prompts to adapt it to different subtasks of CRS. For each subtask (either recommendation or conversation), the major design of prompting consists of three parts, namely the dialogue history, subtask-specific soft tokens, and fused knowledge representations. For recommendation, we further incorporate the generated response templates as additional prompt tokens. Next, we describe the specific prompting designs for the two subtasks in detail.

虽然基础预训练语言模型(PLM)未经微调是固定的,但我们可以设计特定提示(prompt)使其适配对话推荐系统(CRS)的各个子任务。针对每个子任务(推荐或对话),提示设计主要包含三部分:对话历史、子任务专属软token(soft tokens)以及融合知识表征(fused knowledge representations)。对于推荐任务,我们还会将生成的响应模板作为额外提示token加入。接下来详细阐述两个子任务的提示设计方案。
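The last step of the pipeline in Figure 1, filling the recommended items into the generated response template, can be sketched as below. The slot marker `[ITEM]` and the function `fill_template` are hypothetical; the text does not state which placeholder the template uses.

```python
from typing import List

ITEM_SLOT = "[ITEM]"  # hypothetical placeholder for a recommended item


def fill_template(template: str, items: List[str]) -> str:
    """Replace slots from left to right with the recommended items."""
    response = template
    for item in items:
        if ITEM_SLOT not in response:
            break  # more items than slots: ignore the rest
        response = response.replace(ITEM_SLOT, item, 1)
    return response


template = "Have you seen [ITEM]? Julia Roberts also stars in it."
recommended = ["My Best Friend's Wedding (1997)"]
response = fill_template(template, recommended)
print(response)
```

Because the template is produced before the recommendation is made, it can also be fed to the recommender as part of its prompt, which is the interaction between subtasks that the section describes.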

4.3.1 Prompt for Response Generation. The subtask of response generation aims to generate informative utterances in order to clarify user preferences or reply to users’ utterances. The prompting design mainly enhances the textual semantics for better dialogue understanding and response generation.

4.3.1 回复生成的提示。回复生成子任务旨在生成信息性话语,以澄清用户偏好或回应用户话语。提示设计主要通过增强文本语义来优化对话理解和回复生成。

The Prompt Design. The prompt for response generation consists of the original dialogue history (in the form of word tokens $C$), generation-specific soft tokens (in the form of latent vectors $\mathbf{P}_{gen}$), and fused textual context (in the form of latent vectors $\widetilde{\mathbf{T}}$), which is formally denoted as:

提示设计。用于生成回复的提示由原始对话历史(以词元 $C$ 形式表示)、生成专用软标记(以潜在向量 $\mathbf{P}_{g e n}$ 形式表示)和融合文本上下文(以潜在向量 $\widetilde{\mathbf{T}}$ 形式表示)组成,其正式表达式为:

$$
\widetilde{C}_{gen}\rightarrow[\widetilde{\mathbf{T}};\mathbf{P}_{gen};C], \tag{6}
$$

where bold and plain fonts denote soft and hard token sequences, respectively. In this design, the subtask-specific prompts $\mathbf{P}_{gen}$ instruct the PLM with the signal from the generation task, alongside the KG-enhanced textual representations $\widetilde{\mathbf{T}}$ (Eq. 3) and the original dialogue history $C$.

Prompt Learning. In the above prompting design, the only tunable parameters are the fused textual representations $\widetilde{\mathbf{T}}$, which have been pre-trained, and the generation-specific soft tokens $\mathbf{P}_{gen}$. They are jointly denoted as $\Theta_{gen}$. We use the prompt-augmented context $\widetilde{C}_{gen}$ to derive the prediction loss for learning $\Theta_{gen}$, which is formally given as:

$$

\begin{aligned}
\mathcal{L}_{gen}(\Theta_{gen}) &= -\frac{1}{N}\sum_{i=1}^{N}\log\operatorname{Pr}\big(R_{i}\mid\widetilde{C}_{gen}^{(i)};\Theta_{gen}\big) \\
&= -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{l_{i}}\log\operatorname{Pr}\big(w_{i,j}\mid\widetilde{C}_{gen}^{(i)};\Theta_{gen};w_{<j}\big),
\end{aligned}

$$

where $N$ is the number of training instances (each a pair of a context and a target utterance), $l_{i}$ is the length of the $i$-th target utterance, and $w_{<j}$ denotes the words preceding the $j$-th position.
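
As a small numeric illustration of this loss, the sketch below averages the token-level negative log-likelihood over $N$ target utterances. The function name `generation_loss` and the toy probabilities are assumptions made for the example; in practice the per-token probabilities would come from the frozen PLM conditioned on the prompt-augmented context.

```python
import math


def generation_loss(token_probs):
    """Average negative log-likelihood over N target utterances.

    token_probs[i][j] is a (hypothetical) probability that the model assigns
    to the j-th gold token of the i-th target utterance, given the
    prompt-augmented context and the preceding gold tokens.
    """
    n = len(token_probs)
    total_log_prob = 0.0
    for utterance in token_probs:      # outer sum over instances i = 1..N
        for p in utterance:            # inner sum over positions j = 1..l_i
            total_log_prob += math.log(p)
    return -total_log_prob / n


# Two toy target utterances of lengths 2 and 1 (made-up probabilities).
probs = [[0.5, 0.25], [0.125]]
loss = generation_loss(probs)
print(round(loss, 4))
```

Note that the sum of token log-probabilities is divided by the number of instances $N$, not by the total number of tokens, which matches the normalization in the formula above.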

Response Template Generation. Besides sharing the base PLM, we find that it is also important to share intermediate results of different subtasks to achieve more consistent final results. For example, given the generated response of the conversation task, the PLM might be able to predict more relevant recommendations according to such extra contextual information. Based on this intuition, we propose to include response templates as part of the prompt for the recommendation subtask. Specifically, we add a special token [ITEM] into the vocabulary $\mathcal{V}$ of the base PLM and replace all the items that appear in the response with the [ITEM] token. At each time step, the PLM generates either the special token [ITEM] or a general token from the original vocabulary. All the slots are filled after the recommended items are generated.
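
The masking and slot-filling steps described above can be sketched at the string level as follows. This is only an illustrative sketch: the helper names `make_template` and `fill_template` and the example titles are hypothetical, and it operates on raw strings, whereas the model itself emits [ITEM] as a vocabulary token during generation.

```python
ITEM_TOKEN = "[ITEM]"


def make_template(response, item_names):
    """Replace every item mention in a response with the [ITEM] slot token."""
    # Replace longer names first so that a name containing a shorter one
    # (e.g. "Aliens" vs. "Alien") is masked as a whole.
    for name in sorted(item_names, key=len, reverse=True):
        response = response.replace(name, ITEM_TOKEN)
    return response


def fill_template(template, recommended_items):
    """Fill the [ITEM] slots from left to right with recommended items."""
    for item in recommended_items:
        template = template.replace(ITEM_TOKEN, item, 1)
    return template


# Toy example with made-up item names.
response = "If you liked Alien, you should try Aliens."
template = make_template(response, ["Alien", "Aliens"])
filled = fill_template(template, ["Blade Runner", "The Thing"])
print(template)
print(filled)
```

Applying `make_template` to gold responses yields training targets that contain slots instead of item names, while `fill_template` corresponds to the final step in which the slots are filled once the recommendation subtask has produced its items.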

4.3.2 Prompt for Item Recommendation. The subtask of recommendation aims to predict items that a user might be interested in. The prompting design mainly enhances the user preference semantics, in order to predict more satisfactory recommendations.

The Prompt Design. The item recommendation prompts consist of the original dialogue history $C$ (in the form of word tokens), recommendation-specific soft tokens $\mathbf{P}_{r e c}$ (in the form of latent vectors), fused entity context $\widetilde{\mathbf E}$ (in the form of latent vectors), and the response template $S$ (in the form of word tokens), formally described as:

$$

\widetilde{C}_{rec}\rightarrow[\widetilde{\mathbf{E}};\mathbf{P}_{rec};C;S],

$$

where the subtask-specific prompts $\mathbf{P}_{rec}$ instruct the PLM with the signal from the recommendation task, alongside the KG-enhanced entity representations $\widetilde{\mathbf E}$ (Eq. 4), the original dialogue history $C$, and the response template $S$.

A key difference between the prompts of the two subtasks is that we utilize entity representations for recommendation, and word representations for generation. This is because their prediction targets are items and sentences, respectively. Besides, we have a special design for recommendation, where we include the response template as part of the prompts. This can enhance the subtask connections and alleviate the risk of semantic inconsistency.
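
To make the contrast between the two prompts concrete, the sketch below assembles both as sequences of vectors: soft parts are given directly as vectors, while hard tokens are first looked up in an embedding table. All names (`embed`, `build_prompt`), the two-dimensional toy vectors, and the tiny vocabulary are assumptions for illustration and do not reflect the actual dimensions or tokenizer of the base PLM.

```python
def embed(tokens, table):
    """Look up hard (word) tokens in a toy embedding table."""
    return [table[t] for t in tokens]


def build_prompt(fused, soft, *hard_sequences):
    """Concatenate fused knowledge vectors, soft tokens, and embedded hard tokens."""
    prompt = list(fused) + list(soft)
    for seq in hard_sequences:
        prompt.extend(seq)
    return prompt


# Toy 2-dimensional embeddings for a tiny, made-up vocabulary.
table = {
    "i": [0.1, 0.0], "like": [0.0, 0.1], "comedies": [0.2, 0.2],
    "try": [0.3, 0.1], "[ITEM]": [0.9, 0.9],
}

T_fused = [[1.0, 0.0], [0.0, 1.0]]   # fused word representations (soft)
E_fused = [[0.5, 0.5]]               # fused entity representations (soft)
P_gen = [[0.3, 0.3], [0.4, 0.4]]     # generation-specific soft tokens
P_rec = [[0.6, 0.6], [0.7, 0.7]]     # recommendation-specific soft tokens

C = embed(["i", "like", "comedies"], table)   # dialogue history (hard tokens)
S = embed(["try", "[ITEM]"], table)           # response template (hard tokens)

gen_prompt = build_prompt(T_fused, P_gen, C)      # [T~; P_gen; C]
rec_prompt = build_prompt(E_fused, P_rec, C, S)   # [E~; P_rec; C; S]
print(len(gen_prompt), len(rec_prompt))
```

The only structural differences are the knowledge source placed first (word-level for generation, entity-level for recommendation) and the extra template $S$ appended to the recommendation prompt.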

Prompt Learning. In the above prompting design, the only tunable parameters are the fused entity representations $\widetilde{\mathbf E}$, which have been pre-trained, and the recommendation-specific soft tokens $\mathbf{P}_{rec}$. They are jointly denoted as $\Theta_{rec}$. We utilize the prompt-augmented context $\widetilde{C}_{rec}$ to derive the prediction loss for learning $\Theta_{rec}$, which is formally given as:

$$

L_{rec}(\Theta_{rec})=-\sum_{j=1}^{N}\sum_{i=1}^{M}\big[y_{j,i}\cdot\log\operatorname{Pr}_{j}(i)+(1-y_{j,i})\cdot\log(1-\operatorname{Pr}_{j}(i))\big],

$$

where $N$ is the number of training instances (each a pair of a context and a target item), $M$ is the total number of items, $y_{j,i}$ denotes a binary ground-truth label which is equal to 1 when item $i$ is the correct label for the $j$-th training instance, and $\mathrm{Pr}_{j}(i)$ is an abbreviation of $\mathrm{Pr}(i\mid\widetilde{C}_{rec}^{(j)};\Theta_{rec})$, which is computed in a way similar to Eq. 5 by