Style-Based Global Appearance Flow for Virtual Try-On

Figure 1. Our global appearance flow based try-on model has a clear advantage over existing local flow based SOTA methods such as Cloth-flow [13] and PF-AFN [10], especially when there is a large mis-alignment between the reference and garment images (top row) or when poses/occlusions are difficult (bottom row).

Abstract

Image-based virtual try-on aims to fit an in-shop garment into a clothed person image. To achieve this, a key step is garment warping, which spatially aligns the target garment with the corresponding body parts in the person image. Prior methods typically adopt a local appearance flow estimation model. They are thus intrinsically susceptible to difficult body poses/occlusions and large mis-alignments between person and garment images (see Fig. 1). To overcome this limitation, a novel global appearance flow estimation model is proposed in this work. For the first time, a StyleGAN based architecture is adopted for appearance flow estimation. This enables us to take advantage of a global style vector to encode a whole-image context to cope with the aforementioned challenges. To guide the StyleGAN flow generator to pay more attention to local garment deformation, a flow refinement module is introduced to add local context. Experimental results on a popular virtual try-on benchmark show that our method achieves new state-of-the-art performance. It is particularly effective in an ‘in-the-wild’ application scenario where the reference image is full-body, resulting in a large mis-alignment with the garment image (Fig. 1 Top). Code is available at: https://github.com/SenHe/Flow-Style-VTON.

1. Introduction

The transition from offline in-shop retail to e-commerce has been accelerated by the recent pandemic-induced lockdowns. In 2020, retail e-commerce sales worldwide amounted to 4.28 trillion US dollars, and e-retail revenues are projected to grow to 5.4 trillion US dollars in 2022. However, when it comes to fashion, one of the key offline experiences missed by online shoppers is the changing room, where a garment item can be tried on. To reduce the return cost for online retailers and give shoppers the same offline experience online, image-based virtual try-on (VTON) has been studied intensively recently [9, 10, 13, 14, 19, 24, 38, 39, 42, 43].

A VTON model aims to fit an in-shop garment into a person image. A key objective of a VTON model is to align the in-shop garment with the corresponding body parts in the person image, because the in-shop garment is usually not spatially aligned with the person image (see Fig. 1). Without this spatial alignment, directly applying advanced detail-preserving image-to-image translation models [18, 30] to fuse the textures of the person image and the garment image results in unrealistic effects in the generated try-on image, especially in the occluded and mis-aligned regions.

Previous methods address this alignment problem through garment warping, i.e., they first warp the in-shop garment, which is then concatenated with the person image and fed into an image-to-image translation model for the final try-on image generation. Many of them [9, 14, 19, 38, 42, 43] adopt a Thin Plate Spline (TPS) [7] based warping method, exploiting the correlation between features extracted from the person and garment images. However, as analyzed in previous works [5, 13, 42], TPS has limitations in handling complex warping, e.g., when different regions in the garment require different deformations. As a result, recent SOTA methods [10, 13] estimate dense appearance flow [45] to warp the garment. This involves training a network to predict the dense appearance flow field representing the deformation required to align the garment with the corresponding body parts.

However, existing appearance flow estimation methods are limited in accurate garment warping due to the lack of global context. More specifically, all existing methods are based on local feature correspondence, e.g., local feature concatenation or correlation$^{1}$, developed for optical flow estimation [6, 17]. To estimate the appearance flow, they make the unrealistic assumption that the corresponding regions from the person image and the in-shop garment are located in the same local receptive field of the feature extractor. When there is a large mis-alignment between the garment and corresponding body parts (Fig. 1 Top), current appearance flow based methods deteriorate drastically and generate unsatisfactory results. Lacking a global context also makes existing flow-based VTON methods vulnerable to difficult poses/occlusions (Fig. 1 Bottom), where correspondences have to be searched beyond a local neighborhood. This severely limits the use of these methods ‘in-the-wild’, where a user may have a full-body picture of herself/himself as the person image to try on multiple garment items (e.g., top, bottom, and shoes).

To overcome this limitation, a novel global appearance flow estimation model is proposed in this work. Specifically, for the first time, a StyleGAN [21, 22] architecture is adopted for dense appearance flow estimation. This differs fundamentally from existing methods [6, 10, 13, 17], which employ a U-Net [30] architecture to preserve local spatial context. Using a global style vector extracted from the whole reference and garment images makes it easy for our model to capture global context. However, it also raises an important question: can it capture the local spatial context crucial for local alignments? After all, a single style vector seemingly has lost local spatial context. To answer this question, we first note that StyleGAN has been successfully applied to local face image manipulation tasks, where different style vectors can generate the same face at different viewpoints [34] and with different shapes [15, 28]. This suggests that a global style vector does have local spatial context encoded. However, we also note that the vanilla StyleGAN architecture [21, 22], though much more robust against large mis-alignments and difficult poses/occlusions than U-Net, is weaker when it comes to local deformation modeling. We therefore introduce a local flow refinement module into the existing StyleGAN generator to get the best of both worlds.

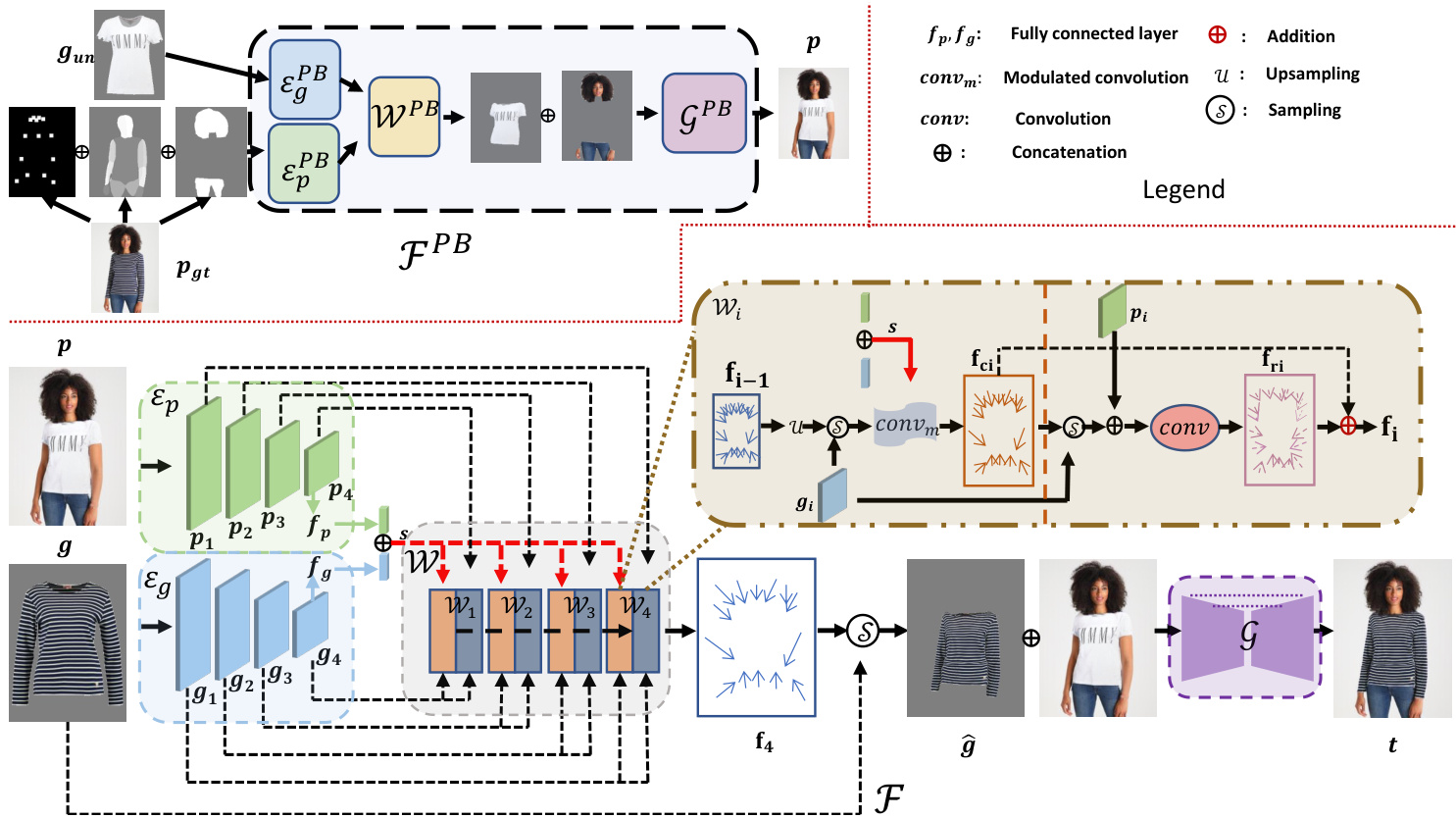

Concretely, our StyleGAN-based warping module ($\mathcal{W}$ in Fig. 2) consists of stacked warping blocks that take as inputs a global style vector, garment features and person features. The global style vector is computed from the lowest resolution feature maps of the person image and the in-shop garment for global context modeling. In each warping block in the generator, the global style vector is used to modulate the feature channels, which take in the corresponding garment feature map to estimate the appearance flow. To enable our flow estimator to model the fine-grained local appearance flow, e.g., in the arm and hand regions in Fig. 5, we introduce a refinement layer in each warping block on top of the style based appearance flow estimation part. This refinement layer first warps the garment feature map, which is subsequently concatenated with the person feature map at the same resolution and then used to predict the local detailed appearance flow.

The contributions of this work are as follows: (1) We propose a novel style-based appearance flow method to warp the garment in virtual try-on. This global flow estimation approach makes our VTON model much more robust against large mis-alignments between person and garment images. This makes our method more applicable to ‘in-the-wild’ applications where a full-body person image with natural poses is used (see Fig. 1). (2) We conduct extensive experiments to validate our method, demonstrating clearly that it is superior to existing state-of-the-art alternatives.

2. Related Work

Image based virtual try-on. Image based (2D) VTON can be categorized into parser-based methods and parser-free methods. Their main difference is whether an off-the-shelf human parser$^{2}$ is required in the inference stage.

Parser-based methods apply a human segmentation map to mask the garment region in the input person image for warping parameter estimation. The masked person image is concatenated with the warped garment and then fed into a generator for target try-on image generation. Most methods [9, 13, 14, 38, 42, 43] apply a pre-trained human parser [11] to parse the person image into several pre-defined semantic regions, e.g., head, top, and pants. For better try-on image generation, [42] also transforms the segmentation map to match the target garment. The transformed parsing result, together with the warped garment and the masked person image, is used for final try-on image generation. The reliance on a parser makes these methods sensitive to bad human parsing results [10, 19], which inevitably lead to inaccurate warping and try-on results.

In contrast, parser-free methods [10, 19] take as inputs only the person image and the garment image in the inference stage. They are designed specifically to eliminate the negative effects induced by bad parsing results. These methods usually first train a parser-based teacher model and then distill a parser-free student model. [19] proposed a pipeline which distills the garment warping module and try-on generation network using paired triplets. [10] further improved [19] by introducing cycle-consistency for better distillation.

Our method is also a parser-free method. However, it focuses on the design of the garment warping part, for which we propose a novel global appearance flow based garment warping module.

3D virtual try-on. Compared to image based VTON, 3D VTON provides a better try-on experience (e.g., allowing the result to be viewed with arbitrary views and poses), yet is also more challenging. Most 3D VTON works [2, 27] rely on 3D parametric human body models [25] and need scanned 3D datasets for training. Collecting large scale 3D datasets is expensive and laborious, thus posing a constraint on the scalability of a 3D VTON model. To overcome this problem, [44] recently applied a non-parametric dual human depth model [8] for monocular-to-3D VTON. However, existing 3D VTON methods still generate inferior texture details compared to the 2D methods.

StyleGAN for image manipulation. StyleGAN [21, 22] has recently revolutionized research on image manipulation [28, 33, 41]. Its successful application to image manipulation tasks is often attributed to its suitability for learning a highly disentangled latent space. Recent efforts have focused on unsupervised latent semantics discovery [4, 34, 37]. [24] applied a pose-conditioned StyleGAN to virtual try-on. However, their model cannot preserve garment details and is slow during inference.

The design of our garment warping network is inspired by StyleGAN in image manipulation, especially its superior performance in shape deformation [28, 34]. Instead of using style modulation to generate the warped garment, we use style modulation to predict the implicit appearance flow, which is then used to warp the garment via sampling. This design is much better suited to preserving garment details than that of [24].

Appearance flow. In the context of VTON, appearance flow was first introduced by [13]. Since then, it has gained more attention and been adopted by recent state-of-the-art VTON models [5, 10]. Fundamentally, appearance flow is used as a sampling grid for garment warping; it is thus information lossless and superior in detail preservation. Beyond VTON, appearance flow is also popular in other tasks. [45] applied it to novel view synthesis. [1, 29] also applied the idea of appearance flow to warp the feature map for person pose transfer. Different from all these existing appearance flow estimation methods, our method, via style modulation, applies a global style vector to estimate the appearance flow. Our method is thus intrinsically superior in its ability to cope with large mis-alignments.
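
To make the "sampling grid" view concrete, the following is a minimal NumPy sketch of warping a garment (or feature) map with a dense flow field by bilinear sampling. It is an illustration under stated assumptions, not the released implementation: the function name `warp`, the use of absolute pixel coordinates in the flow, and the border clamping are all choices made here for the example.

```python
import numpy as np

def warp(feature, flow):
    """Bilinearly sample `feature` (C, H, W) at the positions stored in `flow` (2, H, W).

    Assumption: flow[0] holds x (column) and flow[1] holds y (row) source
    coordinates in pixels; out-of-range positions are clamped to the border.
    """
    _, h, w = feature.shape
    x = np.clip(flow[0], 0, w - 1)
    y = np.clip(flow[1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = x - x0  # horizontal interpolation weight
    wy = y - y0  # vertical interpolation weight
    top = feature[:, y0, x0] * (1 - wx) + feature[:, y0, x1] * wx
    bottom = feature[:, y1, x0] * (1 - wx) + feature[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy

# Toy single-channel "garment" of size 3 x 4.
garment = np.arange(12, dtype=float).reshape(1, 3, 4)
ys, xs = np.meshgrid(np.arange(3), np.arange(4), indexing="ij")
identity = np.stack([xs, ys]).astype(float)  # flow that leaves the garment unchanged

shifted = identity.copy()
shifted[0] += 1.0   # every output pixel reads from one pixel to its right
half = identity.copy()
half[0] += 0.5      # fractional offsets blend two neighbouring pixels

same = warp(garment, identity)
moved = warp(garment, shifted)
blended = warp(garment, half)
```

Every output value is read (or interpolated) from the garment itself, which is why flow-based warping tends to keep texture detail better than regenerating the garment with a decoder.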

3. Methodology

3.1. Problem definition

Given a person image ($p\in\mathbb{R}^{3\times H\times W}$) and an in-shop garment image ($g\in\mathbb{R}^{3\times H\times W}$), the goal of virtual try-on is to generate a try-on image ($t\in\mathbb{R}^{3\times H\times W}$) where the garment in $g$ fits the corresponding parts in $p$. In addition, in the generated $t$, both the details from $g$ and the non-garment regions in $p$ should be preserved. In other words, the same person in $p$ should appear unchanged in $t$ except now wearing $g$.

To eliminate the negative effect of inaccurate human parsing, our proposed model ($\mathcal{F}$ in Fig. 2) is designed to be a parser-free model. Following the strategy adopted by existing parser-free models [10, 19], we first pre-train a parser-based model ($\mathcal{F}^{PB}$). It is then used as a teacher for knowledge distillation to help train the final parser-free model $\mathcal{F}$. Both $\mathcal{F}$ and $\mathcal{F}^{PB}$ consist of three parts: two feature extractors ($\mathcal{E}_{p}^{PB}$, $\mathcal{E}_{g}^{PB}$ in $\mathcal{F}^{PB}$ and $\mathcal{E}_{p}$, $\mathcal{E}_{g}$ in $\mathcal{F}$), a warping module ($\mathcal{W}^{PB}$ in $\mathcal{F}^{PB}$ and $\mathcal{W}$ in $\mathcal{F}$), and a generator ($\mathcal{G}^{PB}$ in $\mathcal{F}^{PB}$ and $\mathcal{G}$ in $\mathcal{F}$). Each of them is detailed in the following sections.

3.2. Pre-training a parser-based model

As is standard in existing parser-free models [10, 19], a parser-based model $\mathcal{F}^{PB}$ is first trained. It is used in two ways in the subsequent training of the proposed parser-free model $\mathcal{F}$: (a) to generate a person image $p$ to be used by $\mathcal{F}$ as input and (b) to supervise the training of $\mathcal{F}$ via knowledge distillation.

Concretely, $\mathcal{F}^{PB}$ takes as inputs the semantic representation (segmentation map$^{3}$, keypoint pose and dense pose) of a real person image ($p_{gt}\in\mathbb{R}^{3\times H\times W}$) in the training set and an unpaired garment ($g_{un}\in\mathbb{R}^{3\times H\times W}$). The output of $\mathcal{F}^{PB}$ is the image $p$, in which the original person is wearing $g_{un}$. $p$ serves as the input for $\mathcal{F}$ during training. This design, according to [10], benefits from the fact that we now have a paired person image $p_{gt}$ and the garment image $g$ worn in $p_{gt}$ to train the parser-free model $\mathcal{F}$, that is:

Figure 2. A schematic of our framework. The pre-trained parser-based model $\mathcal{F}^{PB}$ generates an output image that serves as the input of the parser-free model $\mathcal{F}$. The two feature extractors in $\mathcal{F}$ extract the features of the person image and the garment image, respectively. A style vector is extracted from the lowest resolution feature maps of the person image and the garment image. The warping module takes in the style vector and the feature maps from the person image and garment image, and outputs an appearance flow map. The appearance flow is then used to warp the garment. Finally, the warped garment is concatenated with the person image and fed into the generator to generate the target try-on image. Note that $\mathcal{F}^{PB}$ is only used during training.

$$
\mathcal{F}^{*}=\underset{\mathcal{F}}{\arg\min}\,\lVert t-p_{gt}\rVert, \tag{1}
$$

3.3. Feature extraction

We apply two convolutional encoders ($\mathcal{E}_{p}$ and $\mathcal{E}_{g}$) to extract the features of $p$ and $g$. $\mathcal{E}_{p}$ and $\mathcal{E}_{g}$ share the same architecture, composed of stacked residual blocks. The extracted features from $\mathcal{E}_{p}$ and $\mathcal{E}_{g}$ can be represented as $\{p_{i}\}_{1}^{N}$ and $\{g_{i}\}_{1}^{N}$ ($N=4$ in Fig. 2 for simplicity), where $p_{i}\in\mathbb{R}^{c_{i}\times h_{i}\times w_{i}}$ and $g_{i}\in\mathbb{R}^{c_{i}\times h_{i}\times w_{i}}$ are the feature maps extracted from the corresponding residual block in $\mathcal{E}_{p}$ and $\mathcal{E}_{g}$, respectively. The extracted feature maps will be used in $\mathcal{W}$ to predict the appearance flow.

3.4. Style based appearance flow estimation

The main novel component of the proposed model is a style-based global appearance flow estimation module. Different from previous methods that estimate appearance flow based on local feature correspondence [10, 13], an approach originally proposed for optical flow estimation [6, 17], our method, based on a global style vector, first estimates a coarse appearance flow via style modulation and then refines the predicted coarse appearance flow based on local feature correspondence.

As illustrated in Fig. 2, our warping module ($\mathcal{W}$) consists of $N$ stacked warping blocks ($\{\mathcal{W}_{i}\}_{1}^{N}$). Each block is composed of a style-based appearance flow prediction layer (orange rectangle) and a local correspondence based appearance flow refinement layer (blue rectangle). Concretely, we first extract a global style vector ($s\in\mathbb{R}^{c}$) using the features output from the $N^{th}$ (final) blocks of $\mathcal{E}_{p}$ and $\mathcal{E}_{g}$, denoted as $p_{N}$ and $g_{N}$, as:

$$
s=[f_{p}(p_{N}),f_{g}(g_{N})], \tag{2}
$$

where $f_{p}$ and $f_{g}$ are fully connected layers, and $\left[\cdot,\cdot\right]$ denotes concatenation. Intrinsically, the extracted global style vector $s$ contains the global information of the person and garment, e.g., position, structure, etc. Similar to style based image manipulation [15, 28, 33, 34], we expect the global style vector $s$ to capture the deformation required for warping $g$ into $p$. It is thus used for style modulation in a StyleGAN-style generator for estimating an appearance flow field.
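
The style vector computation can be sketched in a few lines of NumPy. This is only an illustration: the text states that $f_{p}$ and $f_{g}$ are fully connected layers, while flattening the feature maps before those layers, the toy sizes, the random weights, and the name `style_vector` are assumptions made here.

```python
import numpy as np

def style_vector(p_N, g_N, W_p, b_p, W_g, b_g):
    """s = [f_p(p_N), f_g(g_N)] with f_p and f_g as single linear layers.

    Assumption: each lowest-resolution feature map is flattened before its
    fully connected layer, so every spatial position contributes to s.
    """
    s_p = W_p @ p_N.reshape(-1) + b_p
    s_g = W_g @ g_N.reshape(-1) + b_g
    return np.concatenate([s_p, s_g])

rng = np.random.default_rng(0)
c_n, h_n, w_n = 8, 4, 3   # hypothetical shape of the lowest-resolution maps
half = 16                 # hypothetical output width of each branch

p_N = rng.standard_normal((c_n, h_n, w_n))
g_N = rng.standard_normal((c_n, h_n, w_n))
W_p = rng.standard_normal((half, c_n * h_n * w_n)) * 0.01
W_g = rng.standard_normal((half, c_n * h_n * w_n)) * 0.01
b_p = np.zeros(half)
b_g = np.zeros(half)

s = style_vector(p_N, g_N, W_p, b_p, W_g, b_g)

# Perturb a single corner position of the person feature map.
p_changed = p_N.copy()
p_changed[0, -1, -1] += 1.0
s_changed = style_vector(p_changed, g_N, W_p, b_p, W_g, b_g)
```

In this sketch, changing one corner of the person feature map changes the person half of `s` while the garment half stays fixed, which illustrates the sense in which the vector carries whole-image context rather than a local neighborhood.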

More specifically, in the style-based appearance flow prediction layer of each block $\mathcal{W}_{i}$ , we apply style modulation to predict a coarse flow:

$$
\mathbf{f_{ci}}=\mathrm{conv}_{m}(S(g_{N+1-i},\mathcal{U}(\mathbf{f_{i-1}})),s), \tag{3}
$$

where $\mathrm{conv}_{m}$ denotes modulated convolution [21], $S(\cdot,\cdot)$ is the sampling operator, $\mathcal{U}$ is the upsampling operator, and $\mathbf{f_{i-1}}\in\mathbb{R}^{2\times h_{i-1}\times w_{i-1}}$ is the predicted flow from the previous warping block. Note that the first block $\mathcal{W}_{1}$ in $\mathcal{W}$ only takes in the lowest resolution garment feature map and the style vector, i.e., $\mathbf{f_{c1}}=\mathrm{conv}_{m}(g_{N},s)$. As can be seen from Equation 3, the predicted $\mathbf{f_{ci}}$ depends on the garment feature map and the global style vector. It thus has a global receptive field and is capable of coping with large mis-alignments between the garment and person images. However, as the style vector $s$ is a global representation, as a trade-off, it has a limited ability to accurately estimate the local fine-grained appearance flow (as shown in Fig. 5). The coarse flow is thus in need of a local refinement.
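
As a rough illustration of the first-block case $\mathbf{f_{c1}}=\mathrm{conv}_{m}(g_{N},s)$, the sketch below implements a 1x1 modulated convolution in NumPy, using weight modulation followed by demodulation as in the StyleGAN family. Several details are assumptions made for the example and are not taken from the text: the 1x1 kernel, the affine map (`affine_w`, `affine_b`) from $s$ to per-channel scales, the toy sizes, and the random weights.

```python
import numpy as np

def modulated_conv1x1(x, style, weight, affine_w, affine_b, eps=1e-8):
    """Style-modulated 1x1 convolution.

    x: (C_in, H, W) feature map, style: (D,) global style vector,
    weight: (C_out, C_in) convolution weights.
    Assumption: an affine layer maps the style to one scale per input channel.
    """
    scale = affine_w @ style + affine_b                 # (C_in,)
    w = weight * scale[None, :]                         # modulate the weights
    w = w / np.sqrt((w ** 2).sum(axis=1, keepdims=True) + eps)  # demodulate
    return np.einsum("oc,chw->ohw", w, x)               # apply at every position

rng = np.random.default_rng(0)
c_in, height, width, d = 8, 4, 3, 16    # hypothetical sizes
g_N = rng.standard_normal((c_in, height, width))
s = rng.standard_normal(d)
weight = rng.standard_normal((2, c_in))  # two output channels: x and y flow
affine_w = rng.standard_normal((c_in, d))
affine_b = np.ones(c_in)

# Coarse flow of the first block from the garment features and the style.
f_c1 = modulated_conv1x1(g_N, s, weight, affine_w, affine_b)

# The same garment features with a different style give a different flow.
f_c1_other = modulated_conv1x1(g_N, -s, weight, affine_w, affine_b)
```

The point of the sketch is that the convolution weights themselves are rescaled by the style vector, so information about the whole person and garment images reaches every spatial position of the predicted flow even though the kernel is local.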

To refine $\mathbf{f_{ci}}$ , we introduce a local correspondence based appearance flow refinement layer in each block $\mathcal{W}_{i}$ . It aims to estimate a local fine-grained appearance flow:

$$
\mathbf{f_{ri}}=\mathrm{conv}([S(g_{N+1-i},\mathbf{f_{ci}}),p_{N+1-i}]), \tag{4}
$$

where $\mathbf{f_{ri}}$ is the predicted refinement flow, and conv denotes convolution. Fundamentally, the refinement layer es