MCTrack: A Unified 3D Multi-Object Tracking Framework for Autonomous Driving

Abstract

This paper introduces MCTrack, a new 3D multi-object tracking method that achieves state-of-the-art (SOTA) performance across the KITTI, nuScenes, and Waymo datasets. Existing tracking paradigms often perform well on specific datasets but lack generalizability; MCTrack addresses this gap with a unified solution. Additionally, we have standardized the format of perceptual results across various datasets, termed Base Version, allowing researchers in the field of multi-object tracking (MOT) to concentrate on core algorithm development without the burden of data preprocessing. Finally, recognizing the limitations of current evaluation metrics, we propose a novel set of metrics that assesses motion information output, such as velocity and acceleration, which is crucial for downstream tasks. The source code of the proposed method is available at https://github.com/megvii-research/MCTrack

1. Introduction

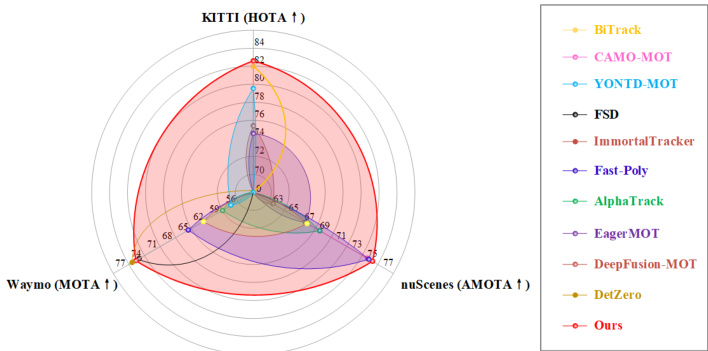

3D multi-object tracking plays an essential role in autonomous driving, as it serves as a bridge between perception and planning tasks. The tracking results directly affect the performance of trajectory prediction, which in turn influences the planning and control of the ego vehicle. Currently, common tracking paradigms include tracking-by-detection (TBD) [58, 61, 62], tracking-by-attention (TBA) [14, 48, 69], and joint detection and tracking (JDT) [3, 59]. Generally, TBD methods tend to outperform TBA and JDT methods in both performance and computational resource efficiency. Commonly used datasets include KITTI [22], Waymo [49], and nuScenes [5], which differ significantly in collection scenarios, regions, weather, and time. Furthermore, the difficulty and format of different datasets vary considerably, so researchers often need to write multiple preprocessing programs to adapt to them. This variability typically results in methods attaining SOTA performance only on a particular dataset, with less impressive results on other datasets [26, 31, 32, 54], as shown in Fig.1. For instance, DetZero [36] achieved SOTA performance on the Waymo dataset but was not tested on other datasets. Fast-Poly [32] achieved SOTA performance on the nuScenes dataset but had mediocre performance on the Waymo dataset. Similarly, DeepFusion [58] performed well on the KITTI dataset but exhibited average performance on the nuScenes dataset. Furthermore, in terms of performance evaluation, existing metrics such as CLEAR [4], AMOTA [61], HOTA [35], and IDF1 [43] mainly judge whether the trajectory is correctly connected. They fall short, however, in evaluating the precision of the subsequent motion information, such as velocity, acceleration, and angular velocity, which is crucial for fulfilling the requirements of downstream prediction and planning tasks [31, 54, 58].

Figure 1. The comparison of the proposed method with SOTA methods across different datasets. For the first time, we have achieved SOTA performance on all three datasets.

To address these challenges, we first introduce the Base Version format to standardize perception results (i.e., detections) across different datasets. This unified format allows researchers to focus on advancing MOT algorithms, unencumbered by dataset-specific discrepancies.

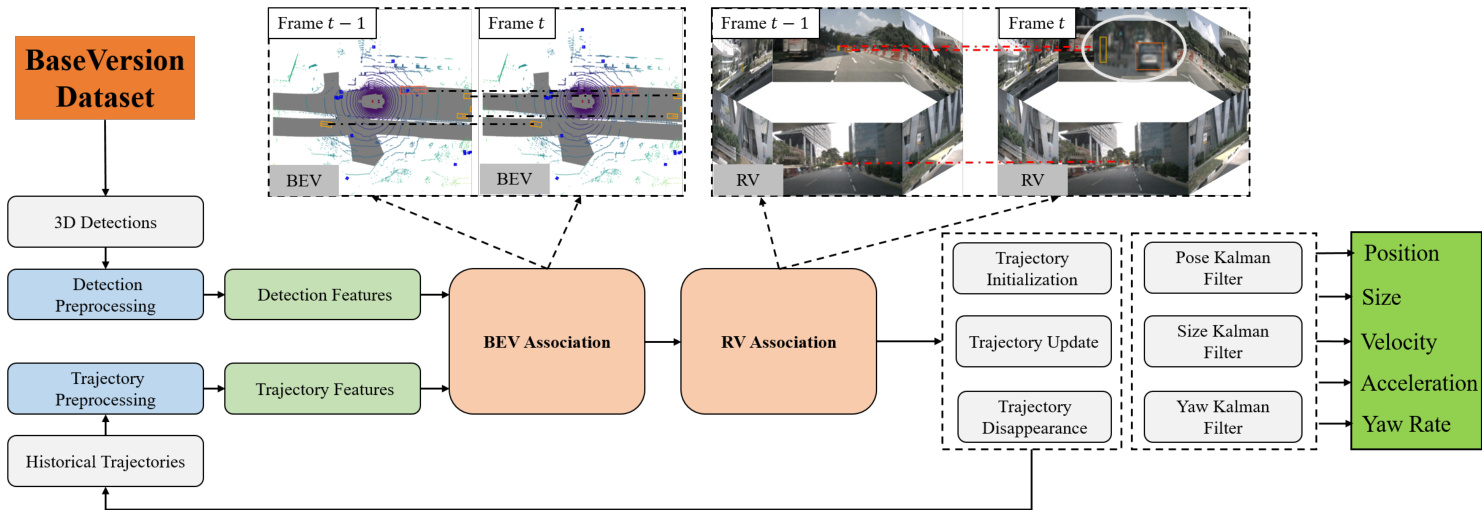

Secondly, this paper proposes a unified multi-object tracking framework called MCTrack. To the best of our knowledge, our method is the first to achieve SOTA performance across the three most popular tracking datasets: KITTI, nuScenes, and Waymo. Specifically, it ranks first on both the KITTI and nuScenes datasets, and second on the Waymo dataset. It is worth noting that the detector used by the first-place method on the Waymo dataset is significantly superior to the detector we employed. Moreover, this method is designed from the perspective of practical engineering applications, with the proposed modules addressing real-world issues. For example, in our two-stage matching strategy, the first stage performs most of the trajectory matching on the bird’s-eye view (BEV) plane. However, for camera-based perception results, matching on the BEV plane can encounter challenges due to the instability of depth information, which can be off by as much as 10 meters in practical engineering scenarios. To address this, trajectories that fail to match in the BEV plane are projected onto the image plane for secondary matching. This process effectively avoids issues such as ID-Switch (IDSW) and Fragmentation (Frag) caused by inaccurate depth information, further improving the accuracy and reliability of tracking.

Finally, this paper introduces a set of metrics for evaluating the motion information output by MOT systems, including speed, acceleration, and angular velocity. We hope that researchers will not only focus on the correct linking of trajectories but also consider how to accurately provide the motion information needed for downstream prediction and planning after correct matching, such as speed and acceleration.

2. Related Work

2.1. Datasets

Multi-object tracking can be categorized based on spatial dimensions into 2D tracking on the image plane and 3D tracking in the real world. Common datasets for 2D tracking methods include MOT17 [39], MOT20 [15], and DanceTrack [50]; these methods typically calculate 2D IoU or appearance feature similarity on the image plane for matching [1, 7, 37]. However, because they lack the three-dimensional information of objects in the real world, these methods are not suitable for applications like autonomous driving. 3D tracking methods often use datasets such as KITTI [22], nuScenes [5], and Waymo [49], which provide abundant sensor information to capture the three-dimensional information of objects in the real world. Regrettably, the formats of these three datasets differ significantly, and researchers often need to perform various preprocessing steps to adapt their pipeline. This is especially true for TBD methods, where different detection formats pose a considerable challenge. To address this issue, this paper standardizes the format of perceptual results (detections) from the three datasets, allowing researchers to focus on the study of tracking algorithms.

2.2. MOT Paradigm

Common paradigms in multi-object tracking currently include Tracking-by-Detection (TBD) [58, 70], Joint Detection and Tracking (JDT) [3, 59], Tracking-by-Attention (TBA) [14, 48], and Referring Multi-Object Tracking (RMOT) [19, 63]. JDT, TBA, and RMOT paradigms typically rely on image feature information, requiring GPU resources for processing. However, for the computing power available in current autonomous vehicles, supporting the GPU resources needed for MOT tasks is impractical. Moreover, the performance of these paradigms is often not as effective as the TBD approach. Therefore, this study focuses on TBD-based tracking methods, aiming to design a unified 3D multi-object tracking framework that accommodates the computational constraints of autonomous vehicles.

2.3. Data Association

In current 2D and 3D multi-object tracking methods, cost functions such as IoU, GIoU [42], DIoU [72], Euclidean distance, and appearance similarity are commonly used [1, 7]. Some of these cost functions only consider the similarity between two bounding boxes, while others focus solely on the distance between the centers of the boxes. None of them can ensure good performance for each category in every dataset. The Ro_GDIoU proposed in this paper, which takes into account both shape similarity and center distance, effectively addresses these issues. Moreover, in terms of matching strategy, most methods adopt a two-stage approach: the first stage uses a set of thresholds for matching, and the second stage relaxes these thresholds for another round of matching. Although this method offers certain improvements, it can still fail when there are significant fluctuations in the perceived depth. Therefore, this paper introduces a secondary matching strategy based on the BEV plane and the Range View (RV) plane, which solves this problem effectively by matching from different perspectives.

2.4. MOT Evaluation Metrics

The earliest multi-object tracking evaluation metric, CLEAR, was proposed in reference [4] and includes metrics such as MOTA and MOTP. Subsequent improvements based on CLEAR led to the development of IDF1 [43], HOTA [35], AMOTA [61], and others. These metrics primarily assess the correctness of trajectory connections, that is, whether trajectories are continuous and consistent, and whether there are breaks or ID switches. However, they do not take into account the motion information that must be output after a trajectory is correctly connected in a multi-object tracking task, such as velocity, acceleration, and angular velocity. This motion information is crucial for downstream tasks like trajectory prediction and planning. In light of this, this paper introduces a new set of evaluation metrics that focus on the motion information output by MOT tasks, which we refer to as motion metrics. We encourage researchers in the MOT field to focus not only on the accurate association of trajectories but also on the quality and suitability of the trajectory outputs to meet the requirements of downstream tasks.

3. MCTrack

We present MCTrack, a streamlined, efficient, and unified 3D multi-object tracking method designed for autonomous driving. The overall framework is illustrated in Fig.2, and detailed descriptions of each component are provided below.

3.1. Data Preprocessing

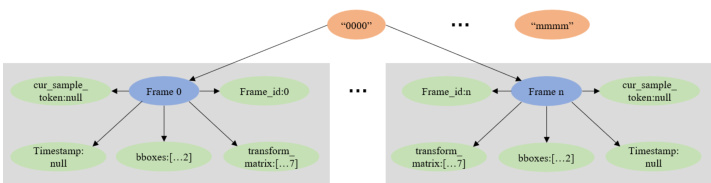

To validate the performance of a unified pipeline (PPL) across different datasets and to facilitate its use by researchers, we standardized the format of detection data from various datasets, referring to it as the Base Version format. This format encapsulates the position of obstacles within the global coordinate system, organized by scene ID, frame sequence, and other pertinent parameters. As depicted in Figure 3, the structure includes a comprehensive scene index with all associated frames. Each frame is detailed with frame number, timestamp, unique token, detection boxes, transformation matrix, and additional relevant data.

For each detection box, we archive details such as “detection score,” “category,” “global xyz,” “lwh,” “global orientation” (expressed as a quaternion), “global yaw” in radians, “global velocity,” “global acceleration.” For more detailed explanations, please refer to our code repository.
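As a rough illustration of how such a record might be organized in memory, the sketch below models one scene as a list of frames, each holding its detections. The class and field names (`Detection`, `Frame`, `global_xyz`, and so on) are hypothetical choices that mirror the quantities listed above; they are not the key names used in the MCTrack repository, and the quaternion component order is likewise an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Detection:
    """One detection box in the global frame (field names are illustrative)."""
    detection_score: float
    category: str
    global_xyz: List[float]           # box center, meters
    lwh: List[float]                  # length, width, height, meters
    global_orientation: List[float]   # quaternion; component order is an assumption
    global_yaw: float                 # radians
    global_velocity: List[float]
    global_acceleration: List[float]


@dataclass
class Frame:
    """One frame of a scene (field names are illustrative)."""
    frame_id: int
    timestamp: float
    token: str
    transform_matrix: List[List[float]]
    detections: List[Detection] = field(default_factory=list)


# A scene index maps a scene ID to its ordered frames.
identity = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
car = Detection(0.91, "car", [12.0, -3.5, 0.8], [4.5, 1.9, 1.6],
                [1.0, 0.0, 0.0, 0.0], 0.0, [8.0, 0.0], [0.0, 0.0])
scenes: Dict[str, List[Frame]] = {
    "scene-0001": [Frame(0, 0.0, "frame-token-0", identity, [car])]
}
```

Keeping every quantity in the global frame, as the text describes, means a tracker can consume frames from any dataset without per-dataset coordinate handling.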

Figure 3. Base Version data format overview.

3.2. MCTrack Pipeline

3.2.1 Kalman Filter

Currently, most 3D MOT methods [31, 64, 70] incorporate position, size, heading, and score into the Kalman filter modeling, resulting in a state vector $S=\{x,y,z,l,w,h,\theta,\mathrm{score},v_{x},v_{y},v_{z}\}$ that can have up to 11 dimensions, represented using a unified motion equation, such as a constant velocity or constant acceleration model. Note that in this paper, $\theta$ specifically denotes the heading angle. However, this modeling approach has the following issues. Firstly, different state variables may have varying units (e.g., meters, degrees) and magnitudes (e.g., position might be in the meter range, while scores range from 0 to 1), which can lead to numerical stability problems. Secondly, some state variables exhibit nonlinear relationships (such as the periodic nature of angles), while others are linear (such as dimensions), making it challenging to represent them with a unified motion equation. Furthermore, combining all state variables into a single model increases the dimensionality of the state vector, thereby increasing computational complexity. This may reduce the efficiency of the filter, particularly in real-time applications. Therefore, we decouple the position, size, and heading angle, applying a different Kalman filter to each component.

For position, we only need to model the center point $x,y$ in the BEV plane using a constant acceleration motion model. The state and observation vectors are defined as follows:

$$

S_{\mathsf{p}}=\{x,y,v_{x},v_{y},a_{x},a_{y}\},\quad M_{\mathsf{p}}=\{x,y,v_{x},v_{y}\}

$$
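To make the position model concrete, the sketch below writes out the standard constant-acceleration transition for the state ordering $\{x,y,v_x,v_y,a_x,a_y\}$ and an observation matrix that picks out $\{x,y,v_x,v_y\}$. It only propagates the state mean; covariance propagation and noise settings are omitted, and the function names are illustrative rather than taken from the MCTrack code.

```python
def ca_transition(dt):
    """6x6 constant-acceleration transition for [x, y, vx, vy, ax, ay]."""
    h = 0.5 * dt * dt
    return [
        [1, 0, dt, 0, h, 0],
        [0, 1, 0, dt, 0, h],
        [0, 0, 1, 0, dt, 0],
        [0, 0, 0, 1, 0, dt],
        [0, 0, 0, 0, 1, 0],
        [0, 0, 0, 0, 0, 1],
    ]


# Observation matrix: the measurement is [x, y, vx, vy].
OBSERVATION = [
    [1, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0, 0],
]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def predict(state, dt):
    """Propagate the state mean by dt seconds."""
    return matvec(ca_transition(dt), state)


def observe(state):
    """Project a state onto the observation space."""
    return matvec(OBSERVATION, state)


# 10 m/s along x with 2 m/s^2 acceleration, propagated by 0.5 s.
next_state = predict([0.0, 0.0, 10.0, 0.0, 2.0, 0.0], 0.5)
```

Because the observation includes velocity as well as position, detectors that report velocity can correct $v_x, v_y$ directly instead of leaving them to be inferred from position changes alone.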

It should be noted that, theoretically, the size of the same object should remain constant. However, due to potential errors in the perception process, we rely on filters to ensure the stability and continuity of the size.

Here, $\theta_{p}$ denotes the heading angle provided by perception, while $\theta_{v}$ represents the heading angle calculated from velocity, that is $\theta_{v}=\arctan(v_{y}/v_{x})$ .

3.2.2 Cost Function

As indicated in reference [73], GIoU fails to distinguish the relative positional relationship when two boxes are con

如文献[73]所述,当两个边界框相...

Figure 2. Overview of our unified 3D MOT framework MCTrack. Our input involves converting datasets such as KITTI, nuScenes, and Waymo into a unified format known as Base Version. The entire pipeline operates within the world coordinate system. Initially, we project 3D point coordinates from the world coordinate system onto the BEV plane for the primary matching phase. Subsequently, unmatched trajectory boxes and detection boxes are projected onto the image plane for secondary matching. Finally, the state of the trajectories is updated, along with the Kalman filter. Our output includes motion information such as position, velocity, and acceleration, which are essential for downstream tasks like prediction and planning.

图 2: 我们的统一3D MOT框架MCTrack概述。输入涉及将KITTI、nuScenes和Waymo等数据集转换为统一格式Base Version。整个流程在世界坐标系中运行。首先,我们将世界坐标系中的3D点坐标投影到BEV平面进行主匹配阶段。随后,将未匹配的轨迹框和检测框投影到图像平面进行次匹配。最后更新轨迹状态及卡尔曼滤波器。输出包含位置、速度、加速度等运动信息,这些对预测和规划等下游任务至关重要。

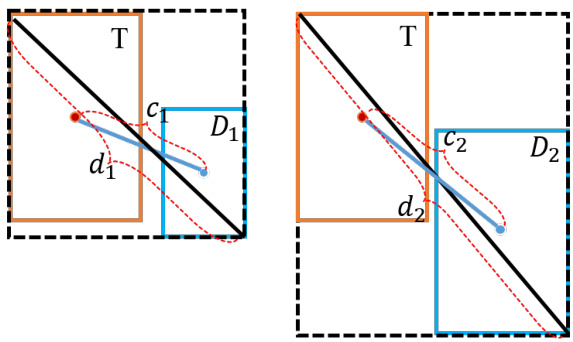

Figure 4. The problem existing in the tracking field with $D I o U$ .

图 4: 跟踪领域存在的 $D I o U$ 问题

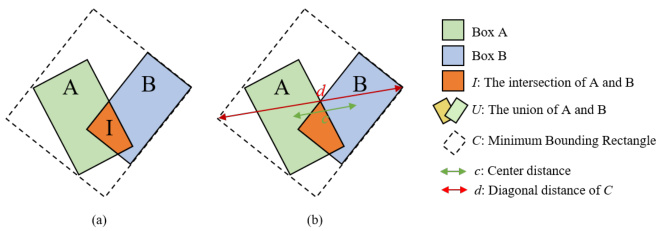

When $\frac{c_{1}}{d_{1}}=\frac{c_{2}}{d_{2}}$ is w s tained within one another, effectively reducing to IoU. Similarly, for DIoU, problems also exist, as shown in Fig.4. When the IoU of two boxes is 0 and the center distances are equal, it is also difficult to determine the similarity between the two boxes. Our extensive experiments reveal that using only Euclidean distance or IoU and its variants as the cost metric is inadequate for capturing similarity across all categories. However, combining distance and IoU yields better results. To address these limitations, we propose $R o_G D I o U$ , an IoU variant based on the BEV plane that incorporates the heading angle of the detection box by integrating $G I o U$ and $D I o U$ . Fig.5 shows a schematic of the $R o_G D I o U$ calculation, and the corresponding pseudocode is provided in Algorithm 1.

当 $\frac{c_{1}}{d_{1}}=\frac{c_{2}}{d_{2}}$ 时,两者相互包含,实际上退化为 IoU。类似地,DIoU 也存在问题,如图 4 所示。当两个框的 IoU 为 0 且中心距离相等时,同样难以确定两个框之间的相似性。我们的大量实验表明,仅使用欧氏距离或 IoU 及其变体作为成本度量不足以捕捉所有类别的相似性。然而,结合距离和 IoU 能取得更好的效果。为了解决这些局限性,我们提出了 $R o_G D I o U$,这是一种基于 BEV 平面的 IoU 变体,通过整合 $G I o U$ 和 $D I o U$ 来融入检测框的航向角。图 5 展示了 $R o_G D I o U$ 的计算示意图,相应的伪代码见算法 1。

Figure 5. Schematic of Ro_GDIoU calculation.

Algorithm 1: Pseudo-code of Ro_GDIoU

Input: Detection bounding box $B_{d}=(x_{d},y_{d},z_{d},l_{d},w_{d},h_{d},\theta_{d})$ and trajectory bounding box $B_{t}=(x_{t},y_{t},z_{t},l_{t},w_{t},h_{t},\theta_{t})$

Output: $Ro\_GDIoU$

$B_{d}^{bev},B_{t}^{bev}=F_{global\to bev}(B_{d},B_{t})$;

Calculate the area of intersection: $I=F_{inter}(B_{d}^{bev},B_{t}^{bev})$;

Calculate the area of union $U$;

Calculate the minimum enclosing rectangle: $C=F_{rect}(B_{d}^{bev},B_{t}^{bev})$;

Calculate the Euclidean distance $c$ between the centers;

Calculate the diagonal distance of the minimum enclosing rectangle: $d=F_{dist}(B_{d}^{bev},B_{t}^{bev})$;

$Ro\_IoU=\frac{I}{U}$;

$Ro\_GDIoU=Ro\_IoU-\omega_{1}\cdot\frac{C-U}{C}-\omega_{2}\cdot\frac{c^{2}}{d^{2}}$

Here $\omega_{1}$ and $\omega_{2}$ represent the weights for $IoU$ and Euclidean distance respectively, and $\omega_{1}+\omega_{2}=2$. When two bounding boxes perfectly match, $Ro\_IoU=1$ and $\frac{C-U}{C}=\frac{c^{2}}{d^{2}}=0$, which means $Ro\_GDIoU=1$. When two boxes are far away, $Ro\_IoU=0$ and $\frac{C-U}{C}=\frac{c^{2}}{d^{2}}\to1$, which means $Ro\_GDIoU=-2$.
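The following is a minimal pure-Python sketch of this cost for two rotated BEV rectangles. Several choices here are assumptions rather than the authors' implementation: the enclosing region $C$ is approximated by the area of the convex hull of the eight corners, and $d$ by the largest corner-to-corner distance, so that two identical boxes give exactly 1 as the text requires. The intersection uses Sutherland-Hodgman clipping, and all function names are illustrative.

```python
import math


def bev_corners(x, y, l, w, yaw):
    """Counter-clockwise corners of a rotated BEV rectangle."""
    c, s = math.cos(yaw), math.sin(yaw)
    local = [(l / 2, w / 2), (-l / 2, w / 2), (-l / 2, -w / 2), (l / 2, -w / 2)]
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in local]


def cross(o, a, b):
    """z-component of (a - o) x (b - o); positive when b is left of o->a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def polygon_area(poly):
    """Shoelace area; 0 for degenerate polygons."""
    if len(poly) < 3:
        return 0.0
    pairs = zip(poly, poly[1:] + poly[:1])
    return 0.5 * abs(sum(p[0] * q[1] - q[0] * p[1] for p, q in pairs))


def clip_convex(subject, clipper):
    """Sutherland-Hodgman intersection of two convex CCW polygons."""
    out = subject
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        inp, out = out, []
        for p, q in zip(inp, inp[1:] + inp[:1]):
            sp, sq = cross(a, b, p), cross(a, b, q)
            if sp >= 0:                      # p is on the inner side of edge a->b
                out.append(p)
            if sp * sq < 0:                  # edge p->q crosses the clip line
                t = sp / (sp - sq)
                out.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return out


def convex_hull(points):
    """Andrew's monotone chain; returns the hull in CCW order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def ro_gdiou(box_d, box_t, w1=1.0, w2=1.0):
    """box = (x, y, l, w, yaw) on the BEV plane; w1 + w2 should equal 2."""
    pd, pt = bev_corners(*box_d), bev_corners(*box_t)
    inter = polygon_area(clip_convex(pd, pt))
    union = polygon_area(pd) + polygon_area(pt) - inter
    pts = pd + pt
    enclosing = polygon_area(convex_hull(pts))            # assumption: hull as C
    c2 = (box_d[0] - box_t[0]) ** 2 + (box_d[1] - box_t[1]) ** 2
    d2 = max((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 for p in pts for q in pts)
    return inter / union - w1 * (enclosing - union) / enclosing - w2 * c2 / d2
```

With the default weights, the value lies between the two limits stated above: 1 for a perfect match and approaching -2 as the boxes move far apart.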

where, $\mathcal{F}(\cdot)$ represents the motion equation, and in this case, we adopt the constant velocity model. The variable $\Delta\tau$ represents the time difference between the current frame and the previous frame.

The backward prediction can be computed as follows:

$$

D_{\tau-1}^{i}=\mathscr{F}^{-1}(x_{\tau}^{\mathrm{d}},y_{\tau}^{\mathrm{d}},v_{{\mathrm{x}},\tau}^{\mathrm{d}},v_{{\mathrm{y}},\tau}^{\mathrm{d}},-\Delta\tau).

$$

Ultimately, the cost function between the detection box and the trajectory box is computed by the following formula:

$$

\mathcal{L}_{\mathrm{cost}}=\alpha\cdot\mathcal{C}(D_{\tau},T_{\tau})+(1-\alpha)\cdot\mathcal{C}(D_{\tau-1},T_{\tau-1}),

$$

where $\alpha\in[0,1]$ and $\mathcal{C}$ denotes $Ro\_GDIoU$.
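The blend of a current-frame term and a previous-frame term can be sketched as follows. This is an illustration under stated assumptions: the trajectory at $\tau$ is taken to be a constant-velocity forward prediction of the trajectory at $\tau-1$, the detection at $\tau-1$ is the backward prediction of the detection at $\tau$, and the similarity `sim` is passed in as a callable. In the paper $\mathcal{C}$ is $Ro\_GDIoU$ on boxes; the usage below substitutes a negative center distance purely to keep the example short.

```python
import math


def forward_predict(x, y, vx, vy, dt):
    """Constant-velocity prediction dt seconds ahead."""
    return (x + vx * dt, y + vy * dt)


def backward_predict(x, y, vx, vy, dt):
    """Constant-velocity prediction dt seconds back (the motion model with -dt)."""
    return (x - vx * dt, y - vy * dt)


def blended_cost(sim, det_now, trk_prev, dt, alpha=0.5):
    """alpha * C(D_tau, T_tau) + (1 - alpha) * C(D_{tau-1}, T_{tau-1}).

    det_now:  (x, y, vx, vy) of the detection at time tau
    trk_prev: (x, y, vx, vy) of the trajectory at time tau - 1
    sim:      similarity between two (x, y) positions (placeholder for Ro_GDIoU)
    """
    trk_now = forward_predict(*trk_prev, dt)
    det_prev = backward_predict(*det_now, dt)
    return alpha * sim(det_now[:2], trk_now) + (1 - alpha) * sim(det_prev, trk_prev[:2])


def neg_distance(p, q):
    """Toy similarity: larger (closer to 0) means more similar."""
    return -math.hypot(p[0] - q[0], p[1] - q[1])


# A detection that is exactly where the trajectory should be after 0.5 s.
consistent = blended_cost(neg_distance, (10.0, 0.0, 10.0, 0.0), (5.0, 0.0, 10.0, 0.0), 0.5)
# Same positions, but the detection reports the opposite velocity.
reversed_velocity = blended_cost(neg_distance, (10.0, 0.0, -10.0, 0.0), (5.0, 0.0, 10.0, 0.0), 0.5)
```

The second case shows why the backward term helps: the current-frame positions agree, yet the backward-predicted detection lands far from the previous trajectory position, so the blended similarity drops.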

3.2.3 Two-Stage Matching

Similar to most methods [31, 70], our pipeline also uses a two-stage matching process, with the specific flow shown in Algorithm 2. However, the key difference is that our two-stage matching is performed from different perspectives, rather than by adjusting thresholds within the same perspective.

The calculations for $T_{\mathrm{bev}}$ and $D_{\mathrm{bev}}$ are illustrated in equation 7. For the calculation of SDIoU, please consult the approach detailed in [57]. We define the coordinate information of the detection or trajectory box as $X=[x,y,z,l,w,h,\theta]$. According to equation 7, we can determine the corresponding 8 corners, denoted as $C=[P_{0},P_{1},P_{2},P_{3},P_{4},P_{5},P_{6},P_{7}]$. Among these corners, we select the points with indices [2, 3, 7, 6] to represent the 4 points on the BEV plane.

$$

C=R\cdot P+T,

$$

$$

P=\left[\begin{array}{rrrrrrrr}{\frac{l}{2}}&{\frac{l}{2}}&{\frac{l}{2}}&{\frac{l}{2}}&{-\frac{l}{2}}&{-\frac{l}{2}}&{-\frac{l}{2}}&{-\frac{l}{2}}\\ {\frac{w}{2}}&{-\frac{w}{2}}&{-\frac{w}{2}}&{\frac{w}{2}}&{\frac{w}{2}}&{-\frac{w}{2}}&{-\frac{w}{2}}&{\frac{w}{2}}\\ {\frac{h}{2}}&{\frac{h}{2}}&{-\frac{h}{2}}&{-\frac{h}{2}}&{\frac{h}{2}}&{\frac{h}{2}}&{-\frac{h}{2}}&{-\frac{h}{2}}\end{array}\right],

$$

$$

R=\left[\begin{array}{c c c}{\cos(\theta)}&{-\sin(\theta)}&{0}\\ {\sin(\theta)}&{\cos(\theta)}&{0}\\ {0}&{0}&{1}\end{array}\right],\quad T=\left[\begin{array}{l}{x}\\ {y}\\ {z}\end{array}\right].

$$
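The corner computation $C=R\cdot P+T$ and the selection of the BEV points can be sketched as below. The sign pattern of the corner offsets is an assumption: it follows the common nuScenes-style ordering, under which indices [2, 3, 7, 6] are the four bottom corners in consecutive order around the rectangle. The function names are illustrative.

```python
import math

# Assumed corner ordering (nuScenes-style): unit offsets for the 8 corners.
X_SIGNS = (1, 1, 1, 1, -1, -1, -1, -1)
Y_SIGNS = (1, -1, -1, 1, 1, -1, -1, 1)
Z_SIGNS = (1, 1, -1, -1, 1, 1, -1, -1)


def box_corners(x, y, z, l, w, h, theta):
    """Return the 8 corners C = R * P + T of a box with heading theta."""
    c, s = math.cos(theta), math.sin(theta)
    corners = []
    for sx, sy, sz in zip(X_SIGNS, Y_SIGNS, Z_SIGNS):
        px, py, pz = sx * l / 2, sy * w / 2, sz * h / 2
        # Rotation about the z-axis, then translation by the box center.
        corners.append((x + c * px - s * py, y + s * px + c * py, z + pz))
    return corners


def bev_points(box):
    """Select corners [2, 3, 7, 6] and drop z to get the BEV rectangle."""
    corners = box_corners(*box)
    return [corners[i][:2] for i in (2, 3, 7, 6)]


footprint = bev_points((0.0, 0.0, 0.0, 4.0, 2.0, 1.5, 0.0))
```

For an axis-aligned box at the origin, `footprint` traces the rectangle with half-length 2 and half-width 1, which is the input expected by a rotated-IoU routine on the BEV plane.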

Algorithm 2: Pseudo-code of Two-stage Matching

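Input: Trajectory boxes $T$ at time $t-1$ and detection boxes $D$ at time $t$

Output: Matching indices $M$

/* First-stage matching: BEV plane */

$T_{bev},D_{bev}=F_{3d\to bev}(T,D)$

Compute the cost $L_{bev}=Ro\_GDIoU(D_{bev},T_{bev})$

Matched pairs $M_{bev}=\mathrm{Hungarian}(L_{bev},threshold_{bev})$

/* Second-stage matching: RV plane */

for $d_{bev}$ in $D_{bev}$ do

if $d_{bev}$ not in $M_{bev}[:,0]$ then

$d_{bev}\to D_{res}$

end

end

for $t_{bev}$ in $T_{bev}$ do

if $t_{bev}$ not in $M_{bev}[:,1]$ then

$t_{bev}\to T_{res}$

end

end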
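The control flow of the two stages can be sketched as follows. This is a simplified illustration, not the authors' code: a greedy best-first assignment stands in for the Hungarian algorithm, the BEV and image-plane similarities are passed in as callables over indices, and the second stage is assumed to match the residual detections and trajectories in the same way as the first, using its own threshold.

```python
def greedy_match(sim, rows, cols, threshold):
    """Greedy stand-in for Hungarian matching: accept the best pairs first."""
    scored = sorted(((sim(r, c), r, c) for r in rows for c in cols),
                    key=lambda item: item[0], reverse=True)
    used_rows, used_cols, matches = set(), set(), []
    for score, r, c in scored:
        if score < threshold:
            break                      # remaining pairs are all below threshold
        if r in used_rows or c in used_cols:
            continue
        used_rows.add(r)
        used_cols.add(c)
        matches.append((r, c))
    return matches


def two_stage_match(num_det, num_trk, sim_bev, sim_rv, thr_bev, thr_rv):
    """Return (detection index, trajectory index) pairs from both stages."""
    # Stage 1: match everything on the BEV plane.
    m_bev = greedy_match(sim_bev, range(num_det), range(num_trk), thr_bev)
    matched_det = {d for d, _ in m_bev}
    matched_trk = {t for _, t in m_bev}
    # Collect the residual detections and trajectories.
    d_res = [d for d in range(num_det) if d not in matched_det]
    t_res = [t for t in range(num_trk) if t not in matched_trk]
    # Stage 2: match the residuals on the image (RV) plane.
    m_rv = greedy_match(sim_rv, d_res, t_res, thr_rv)
    return m_bev + m_rv


# Toy similarities: detection 1 has a poor BEV score (e.g. bad depth)
# but overlaps trajectory 1 well on the image plane.
BEV = [[0.8, -1.0], [-1.5, -0.9]]
RV = [[0.9, 0.0], [0.0, 0.6]]
pairs = two_stage_match(2, 2, lambda d, t: BEV[d][t], lambda d, t: RV[d][t], 0.0, 0.5)
```

In the toy data the second pair is recovered only by the image-plane stage, which is the situation the text describes for camera detections with unstable depth.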

4. New MOT Evaluation Metrics

4.1. Static Metrics

Traditional MOT evaluation primarily relies on metrics such as CLEAR [4], AMOTA [61], HOTA [35], and IDF1 [43]. These metrics focus on assessing the correctness and consistency of trajectory connections. In this paper, we refer to these metrics as static metrics. However, static metrics do not consider the motion information of trajectories after they are connected, such as speed, acceleration, and angular velocity. In fields like autonomous driving and robotics, accurate motion information is crucial for downstream prediction, planning, and control tasks. Therefore, relying solely on static metrics may not fully reflect the actual performance and application value of a tracking system.

Introducing motion metrics into MOT evaluation to assess the motion characteristics and accuracy of trajectories becomes particularly important. This not only provides a more comprehensive evaluation of the tracking system’s performance but also enhances its practical application in autonomous driving and robotics, ensuring that the system meets real-world requirements and performs effectively in complex environments.

4.2. Motion Metrics

To address the issue that current MOT evaluation metrics do not adequately consider motion attributes, we propose a series of new motion metrics, including Velocity Angle Error (VAE), Velocity Norm Error (VNE), Velocity Angle Inverse Error (VAIE), Velocity Inversion Ratio (VIR), Velocity Smoothness Error (VSE) and Velocity Delay Error (VDE). These motion metrics aim to comprehensively assess the performance of tracking systems in handling motion features, covering the accuracy and stability of motion information such as speed, angle, and velocity smoothness.

VAE represents the error between the velocity angle obtained from the tracking system and the ground truth angle, calculated as:

$$

\mathrm{VAE}=(\theta_{\mathrm{gt}}-\theta_{\mathrm{d}}+\pi)\bmod2\pi-\pi,

$$

where $\theta_{\mathrm{gt}}$ denotes the angle calculated from the ground truth velocity, and $\theta_{\mathrm{d}}$ denotes the angle calculated from the tracked velocity, with both angles ranging from $0^{\circ}$ to $360^{\circ}$. Because angles wrap around, an angle of $1^{\circ}$ and an angle of $359^{\circ}$ are effectively separated by only $2^{\circ}$.

VAIE quantifies the angle error when the velocity angle error surpasses a predefined threshold of $\textstyle{\frac{1}{2}}\pi$. Breaching this threshold typically indicates that the tracking system’s estimate of the target’s velocity direction is opposite to the actual direction.

$$

\mathrm{VAIE}=\left|\theta_{\mathrm{gt}}-\theta_{\mathrm{d}}\right|,\quad\mathrm{if}\left|\theta_{\mathrm{gt}}-\theta_{\mathrm{d}}\right|>\frac{1}{2}\pi.

$$

The corresponding VIR, the velocity inversion ratio, is the proportion of the $N$ velocity angle errors in a trajectory that exceed the threshold, where $N$ represents the sequence length of the trajectory.

VNE represents the error between the magnitude of velocity obtained by the tracking system and the true magnitude of velocity, calculated as:

$$

\mathrm{VNE}=\left|V_{\mathrm{gt}}-V_{\mathrm{d}}\right|,

$$

where $V_{\mathrm{gt}}$ and $V_{\mathrm{d}}$ represent the actual and predicted velocity magnitudes, respectively.

VSE represents the smoothness error of the velocity obtained from the filter. The smoothed velocity is calculated using the Savitzky-Golay (SG) [45] filter:

$$

V_{\mathrm{d}}^{SG}=SG\left(V_{\mathrm{d}},w,p\right),

$$

$$

\mathrm{VSE}=\left|V_{\mathrm{d}}-V_{\mathrm{d}}^{SG}\right|,

$$

where $w$ and $p$ refer to the window size and polynomial order of the filter, respectively. $V_{\mathrm{d}}^{SG}$ represents the velocity value after being smoothed by the $SG$ filter. A smaller VSE value indicates that the original velocity curve is smoother.

VDE represents the time delay of the velocity signal obtained by the tracking system relative to the true velocity signal. It is calculated by finding the offset, within a given time window, that minimizes the sum of the mean and standard deviation of the difference between the true velocity and the velocity obtained by the tracking system.

First, we use a peak detection algorithm to identify the set $v_{\mathrm{gt}}^{p}$ of local maxima in the velocity ground truth sequence.

$$

v_{\mathrm{gt}}^{p}={\mathcal{F}}(v_{\mathrm{gt}}),

$$

Here, ${\mathcal{F}}(\cdot)$ denotes the peak detection function, where the peak points must satisfy the condition $v_{\mathrm{gt}}[t-1]<v_{\mathrm{gt}}[t]>v_{\mathrm{gt}}[t+1]$. Subsequently, we calculate the difference between the ground truth velocity and the tracking velocity within a given time window.

$$

V_{\mathrm{gt}}^{w}=V_{\mathrm{gt}}[t-w/2:t+w/2],

$$

$$

V_{\mathrm{d},\tau}^{w}=V_{\mathrm{d}}[t-w/2+\tau:t+w/2+\tau],\quad\tau\in[0,n],

$$

$$

\Delta V_{\tau}^{w}=\left\{\left\vert v_{\mathrm{gt}}^{i}-v_{\mathrm{d},\tau}^{i}\right\vert\;\middle|\;v_{\mathrm{gt}}^{i}\in V_{\mathrm{gt}}^{w},\ v_{\mathrm{d},\tau}^{i}\in V_{\mathrm{d},\tau}^{w}\right\},

$$

where $t$ represents the time corresponding to the peak point, $w$ represents the window length, $\tau$ indicates the shift length applied to the velocity window from the tracking system, and $\Delta V_{\tau}^{w}$ represents the set of differences between the true velocity and the tracking velocity. Next, we calculate the mean and standard deviation of the set $\Delta V_{\tau}^{w}$, whose elements are denoted $\Delta v_{\tau}^{j}$.

其中 $t$ 表示峰值点对应的时间,$w$ 表示窗口长度,$\tau$ 表示跟踪系统中速度窗口应用的偏移长度,$\Delta V_{\tau}^{w}$ 表示真实速度与跟踪速度之间差异的集合。接着,我们计算集合 $\Delta V_{\tau}^{w}$ 的均值和标准差。

$$

M=\left\{\mu_{0},\mu_{1},\ldots,\mu_{n}\;\middle|\;\mu_{\tau}=\frac{1}{w}\sum_{j=1}^{w}\Delta v_{\tau}^{j}\right\},

$$

$$

\Sigma=\left\{\sigma_{0},\sigma_{1},\ldots,\sigma_{n}\;\middle|\;\sigma_{\tau}=\sqrt{\frac{1}{w}\sum_{j=1}^{w}\Big(\Delta v_{\tau}^{j}-\mu_{\tau}\Big)^{2}}\right\},

$$

Finally, the time offset $\tau$ corresponding to the minimum sum of the mean and standard deviation is the VDE.

最后,对应于均值和标准差之和最小的时间偏移 $\tau$ 即为VDE。

$$

\mathrm{VDE}=\tau^{*}=\arg\operatorname*{min}_{\tau\in[0,n]}\left(\mu_{\tau}+\sigma_{\tau}\right),\qquad \mu_{\tau}\in M,\ \sigma_{\tau}\in\Sigma.

$$

where $\tau$ is the time shift applied to the tracked velocity window and $n$ is the largest shift considered. It is important to note that the ground-truth velocity of a trajectory can contain multiple peak points in the time series. The calculation above only addresses the lag at a single peak point. If there are multiple peak points, the per-peak lags are averaged to represent the lag of the entire trajectory.

其中 $\tau$ 是对应速度向量的时间戳,$n$ 是考虑的时间窗口。需要注意的是,对于轨迹的真实值,时间序列中可能存在多个峰值点。上述计算方法仅针对单个峰值点的滞后情况。若存在多个峰值点,则将取平均值以代表整条轨迹的滞后量。
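The VDE procedure described above (peak detection, windowing, shifting, and minimizing the sum of mean and standard deviation) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the skipping of windows that run past the sequence boundaries, and the choice of the smallest shift in case of ties are all assumptions introduced here. Velocities are scalar speeds sampled per frame, and the returned lag is expressed in frames.

```python
from statistics import mean, pstdev


def find_peaks(v_gt):
    """Indices t with v_gt[t-1] < v_gt[t] > v_gt[t+1]."""
    return [t for t in range(1, len(v_gt) - 1)
            if v_gt[t - 1] < v_gt[t] > v_gt[t + 1]]


def lag_at_peak(v_gt, v_d, t, w, n):
    """Shift tau in [0, n] minimizing mean + std of |v_gt - v_d| around peak t."""
    half = w // 2
    lo, hi = t - half, t + half
    if lo < 0 or hi > len(v_gt):
        return None  # assumption: skip peaks whose window leaves the sequence
    gt_win = v_gt[lo:hi]
    best_tau, best_cost = None, float("inf")
    for tau in range(n + 1):
        if hi + tau > len(v_d):
            break  # shifted tracker window would run past the data
        d_win = v_d[lo + tau:hi + tau]
        diffs = [abs(g - d) for g, d in zip(gt_win, d_win)]
        cost = mean(diffs) + pstdev(diffs)  # mu_tau + sigma_tau (population std)
        if cost < best_cost:  # strict '<' keeps the smallest tau on ties
            best_tau, best_cost = tau, cost
    return best_tau


def velocity_delay_error(v_gt, v_d, w, n):
    """Average per-peak lag over all usable peaks, or None if there are none."""
    lags = [lag_at_peak(v_gt, v_d, t, w, n) for t in find_peaks(v_gt)]
    lags = [lag for lag in lags if lag is not None]
    return mean(lags) if lags else None


# Toy trajectory: the tracked speed is the ground truth delayed by two frames.
v_gt = [0, 1, 2, 3, 4, 5, 4, 3, 2, 1, 0, 0, 0]
v_d = [0, 0, 0, 1, 2, 3, 4, 5, 4, 3, 2, 1, 0]
vde = velocity_delay_error(v_gt, v_d, w=4, n=3)
print(vde)  # lag in frames
```

Multiplying the result by the frame interval (for example, 0.1 s at 10 Hz) would convert the lag from frames to seconds.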

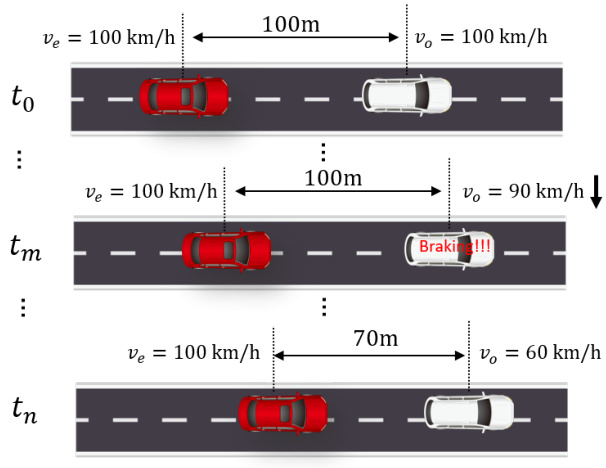

To better illustrate the significance of the VDE metric, we provide a schematic diagram in Fig.6. The diagram shows two vehicles traveling at a speed of 100 kilometers per hour: the red one represents the autonomous vehicle, and the white one represents the obstacle ahead. The initial safe distance between the two vehicles is set at 100 meters. Suppose that at time point $t_{m}$ the leading vehicle begins to decelerate urgently and reduces its speed to 60 kilometers per hour by time point $t_{n}$. If there is a delay in the autonomous vehicle’s perception of the leading vehicle’s speed, it might mistakenly believe that the leading vehicle is still traveling at 100 kilometers per hour. The safe distance between the two vehicles then shrinks without the autonomous vehicle noticing. It is not until time point $t_{n}$ that the autonomous vehicle finally perceives the deceleration of the leading vehicle, by which time the safe distance may be very close to the limit. Therefore, optimizing the motion information output by the multi-object tracking module is also crucial in autonomous driving.

为了更好地说明VDE指标的重要性,我们在图6中提供了示意图。图中展示了两辆以每小时100公里速度行驶的车辆:红色代表自动驾驶车辆,白色代表前方障碍物。两车初始安全距离设定为100米。假设在时间点$t_{m}$,前车开始紧急减速,并在时间点$t_{n}$将速度降至每小时60公里。若自动驾驶车辆对前车速度的感知存在延迟,可能会误判前车仍保持每小时100公里行驶。这将导致两车安全距离出现不易察觉的缩减。直到时间点$t_{n}$,自动驾驶车辆才最终感知到前车减速,此时安全距离可能已接近极限值。因此,优化多目标跟踪模块输出的运动信息对自动驾驶也至关重要。

Figure 6. A schematic diagram illustrating the impact of motion information lag on practical applications.

图 6: 运动信息滞后对实际应用影响的示意图。
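To give a rough sense of scale for the scenario in Fig.6, the sketch below estimates how much of the gap is lost while the autonomous vehicle is unaware of the deceleration. The one-second delay is a hypothetical value chosen for illustration and does not come from the paper. The calculation also assumes that the ego vehicle holds its speed and that the leading vehicle is already at its reduced speed for the whole delay, so the result is an upper bound for a gradual deceleration.

```python
KMH_TO_MS = 1000.0 / 3600.0  # kilometers per hour -> meters per second


def gap_lost_during_delay(ego_kmh, lead_kmh, delay_s):
    """Distance in meters by which the gap shrinks during a perception delay.

    Assumes constant ego speed and a lead vehicle already at lead_kmh.
    """
    closing_speed = (ego_kmh - lead_kmh) * KMH_TO_MS
    return closing_speed * delay_s


# Speeds from the Fig.6 scenario; the 1.0 s delay is a hypothetical example value.
lost = gap_lost_during_delay(ego_kmh=100.0, lead_kmh=60.0, delay_s=1.0)
remaining = 100.0 - lost  # initial safe distance of 100 m from the scenario
print(round(lost, 1), round(remaining, 1))
```

Under these assumptions the closing speed is 40 km/h, or about 11.1 m/s, so each second of velocity lag consumes roughly 11 m of the 100 m safe distance.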

5. Experiment

5. 实验

In this section, we first outline our experimental setup, including the datasets and implementation details. We then conduct a comprehensive comparison between our method and SOTA approaches on the 3D MOT benchmarks of the KITTI, nuScenes, and Waymo datasets. Following this, we evaluate our newly proposed dynamic metrics using various methods. Finally, we provide a series of ablation studies and related analyses to investigate the various design choices in our approach.

在本节中,我们首先概述实验设置,包括数据集和实现细节。随后在KITTI、nuScenes和Waymo数据集的3D MOT基准测试上,对我们的方法与SOTA方案进行全面比较。接着使用多种方法评估新提出的动态指标。最后通过一系列消融实验和相关分析,探讨方法中的各项设计选择。

5.1. Dataset and Implementation Details

5.1. 数据集与实现细节

A. Datasets

A. 数据集

KITTI: The KITTI tracking benchmark [22] consists of 21 training sequences and 29 testing sequences. The training dataset comprises a total of 8,008 frames, with an average of 3.8 detections per frame, while the testing dataset contains 11,095 frames, with an average of 3.5 detections per frame. The point cloud data in KITTI is captured using a Velodyne HDL-64E LiDAR sensor, with a scan frequency of $10~\mathrm{Hz}$. The time interval $\delta$ between scans, used to infer actual velocity and acceleration, is 0.1 seconds. We compare our results on the vehicle category in the test dataset with those of other methods.

KITTI: KITTI跟踪基准[22]包含21个训练序列和29个测试序列。训练数据集共计8,008帧,平均每帧3.8个检测结果;测试数据集包含11,095帧,平均每帧3.5个检测结果。KITTI中的点云数据由Velodyne HDL-64E激光雷达传感器采集,扫描频率为$10\mathrm{Hz}$。用于推断实际速度和加速度的扫描时间间隔$\delta$为0.1秒。我们在测试数据集的车辆类别上与其他方法进行了结果对比。
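The text states that the scan interval $\delta$ is used to infer velocity and acceleration but does not spell out the procedure. One simple possibility, shown here purely as an assumption, is first-order finite differencing of per-frame positions along one axis. The function name and the sample positions are hypothetical.

```python
DELTA = 0.1  # seconds between consecutive KITTI LiDAR scans (10 Hz)


def finite_difference(values, dt=DELTA):
    """First-order differences of a sequence sampled every dt seconds."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]


# Hypothetical object positions (meters) along one axis in four consecutive frames.
positions = [0.0, 1.0, 2.5, 4.5]
velocities = finite_difference(positions)       # m/s, one fewer entry than positions
accelerations = finite_difference(velocities)   # m/s^2, one fewer entry again
print(velocities, accelerations)
```

Each differencing step shortens the sequence by one sample and amplifies annotation noise, which is one reason smoothed estimates are often preferred in practice.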

nuScenes: The nuScenes dataset [5] is a large-scale dataset that contains 1,000 driving sequences, each spanning 20 seconds. LiDAR data in nuScenes is provided at $20~\mathrm{Hz}$, but the 3D labels are only available at $2~\mathrm{Hz}$. The nuScenes dataset includes seven categories of data, and we evaluate all