Linguistically-Informed Self-Attention for Semantic Role Labeling

基于语言学的自注意力机制在语义角色标注中的应用

Abstract

摘要

Current state-of-the-art semantic role labeling (SRL) uses a deep neural network with no explicit linguistic features. However, prior work has shown that gold syntax trees can dramatically improve SRL decoding, suggesting the possibility of increased accuracy from explicit modeling of syntax. In this work, we present linguistically-informed self-attention (LISA): a neural network model that combines multi-head self-attention with multi-task learning across dependency parsing, part-of-speech tagging, predicate detection and SRL. Unlike previous models which require significant pre-processing to prepare linguistic features, LISA can incorporate syntax using merely raw tokens as input, encoding the sequence only once to simultaneously perform parsing, predicate detection and role labeling for all predicates. Syntax is incorporated by training one attention head to attend to syntactic parents for each token. Moreover, if a high-quality syntactic parse is already available, it can be beneficially injected at test time without re-training our SRL model. In experiments on CoNLL-2005 SRL, LISA achieves new state-of-the-art performance for a model using predicted predicates and standard word embeddings, attaining 2.5 F1 absolute higher than the previous state-of-the-art on newswire and more than 3.5 F1 on out-of-domain data, a nearly 10% reduction in error. On CoNLL-2012 English SRL we also show an improvement of more than 2.5 F1. LISA also out-performs the state-of-the-art with contextually-encoded (ELMo) word representations, by nearly 1.0 F1 on news and more than 2.0 F1 on out-of-domain text.

当前最先进的语义角色标注(SRL)采用不带显式语言特征的深度神经网络。然而先前研究表明,黄金标准句法树能显著提升SRL解码效果,这暗示通过显式句法建模可能提高准确率。本文提出语言信息自注意力机制(LISA):该神经网络模型将多头自注意力与依存句法分析、词性标注、谓词检测及SRL的多任务学习相结合。不同于需要大量预处理来准备语言特征的先前模型,LISA仅需原始token作为输入即可融入句法信息,仅需单次编码就能同步执行所有谓词的句法分析、谓词检测和角色标注。通过训练一个注意力头来关注每个token的句法父节点实现句法整合。此外,若已存在高质量句法分析结果,无需重新训练SRL模型即可在测试阶段有效注入该信息。在CoNLL-2005 SRL实验中,LISA在使用预测谓词和标准词嵌入的模型中达到新state-of-the-art性能,新闻报道数据F1值绝对提升2.5,域外数据提升超过3.5 F1,错误率降低近10%。在CoNLL-2012英语SRL任务上同样实现超过2.5 F1的提升。使用上下文编码(ELMo)词表征时,LISA在新闻文本上以近1.0 F1优势、域外文本以超过2.0 F1优势超越现有最佳模型。

1 Introduction

1 引言

Semantic role labeling (SRL) extracts a high-level representation of meaning from a sentence, labeling e.g. who did what to whom. Explicit representations of such semantic information have been shown to improve results in challenging downstream tasks such as dialog systems (Tur et al., 2005; Chen et al., 2013), machine reading (Berant et al., 2014; Wang et al., 2015) and translation (Liu and Gildea, 2010; Bazrafshan and Gildea, 2013).

语义角色标注 (SRL) 从句子中提取出高层次的意义表示,例如标注谁对谁做了什么。这种语义信息的显式表示已被证明能提升对话系统 (Tur et al., 2005; Chen et al., 2013)、机器阅读理解 (Berant et al., 2014; Wang et al., 2015) 和机器翻译 (Liu and Gildea, 2010; Bazrafshan and Gildea, 2013) 等具有挑战性的下游任务的效果。

Though syntax was long considered an obvious prerequisite for SRL systems (Levin, 1993; Punyakanok et al., 2008), recently deep neural network architectures have surpassed syntactically-informed models (Zhou and Xu, 2015; Marcheggiani et al., 2017; He et al., 2017; Tan et al., 2018; He et al., 2018), achieving state-of-the-art SRL performance with no explicit modeling of syntax. An additional benefit of these end-to-end models is that they require just raw tokens and (usually) detected predicates as input, whereas richer linguistic features typically require extraction by an auxiliary pipeline of models.

尽管句法长期以来被视为语义角色标注(SRL)系统的先决条件(Levin, 1993; Punyakanok et al., 2008),但近年来深度神经网络架构已超越基于句法的模型(Zhou and Xu, 2015; Marcheggiani et al., 2017; He et al., 2017; Tan et al., 2018; He et al., 2018),在不显式建模句法的情况下实现了最先进的SRL性能。这些端到端模型的另一个优势是仅需原始token和(通常)检测到的谓词作为输入,而更丰富的语言特征通常需要通过辅助模型流水线来提取。

Still, recent work (Roth and Lapata, 2016; He et al., 2017; Marcheggiani and Titov, 2017) indicates that neural network models could see even higher accuracy gains by leveraging syntactic information rather than ignoring it. He et al. (2017) indicate that many of the errors made by a syntax-free neural network on SRL are tied to certain syntactic confusions such as prepositional phrase attachment, and show that while constrained inference using a relatively low-accuracy predicted parse can provide small improvements in SRL accuracy, providing a gold-quality parse leads to substantial gains. Marcheggiani and Titov (2017) incorporate syntax from a high-quality parser (Kiperwasser and Goldberg, 2016) using graph convolutional neural networks (Kipf and Welling, 2017), but like He et al. (2017) they attain only small increases over a model with no syntactic parse, and even perform worse than a syntax-free model on out-of-domain data. These works suggest that though syntax has the potential to improve neural network SRL models, we have not yet designed an architecture which maximizes the benefits of auxiliary syntactic information.

然而,近期研究 (Roth and Lapata, 2016; He et al., 2017; Marcheggiani and Titov, 2017) 表明,神经网络模型通过利用句法信息而非忽略它,可能获得更高的准确率提升。He et al. (2017) 指出,无句法神经网络在语义角色标注 (SRL) 中的许多错误与特定句法混淆(如介词短语依附)相关,并证明虽然使用相对低准确率的预测句法进行约束推理只能小幅提升 SRL 准确率,但提供高质量标注句法可带来显著增益。Marcheggiani and Titov (2017) 通过图卷积神经网络 (Kipf and Welling, 2017) 整合了来自高质量句法分析器 (Kiperwasser and Goldberg, 2016) 的句法信息,但如同 He et al. (2017) 一样,他们仅比无句法分析模型获得微小提升,在域外数据上甚至表现更差。这些研究表明,尽管句法有潜力改进神经网络 SRL 模型,但我们尚未设计出能最大化辅助句法信息效益的架构。

In response, we propose linguistically-informed self-attention (LISA): a model that combines multi-task learning (Caruana, 1993) with stacked layers of multi-head self-attention (Vaswani et al., 2017); the model is trained to: (1) jointly predict parts of speech and predicates; (2) perform parsing; and (3) attend to syntactic parse parents, while (4) assigning semantic role labels. Whereas prior work typically requires separate models to provide linguistic analysis, including most syntax-free neural models which still rely on external predicate detection, our model is truly end-to-end: earlier layers are trained to predict prerequisite parts-of-speech and predicates, the latter of which are supplied to later layers for scoring. Though prior work re-encodes each sentence to predict each desired task and again with respect to each predicate to perform SRL, we more efficiently encode each sentence only once, predict its predicates, part-of-speech tags and labeled syntactic parse, then predict the semantic roles for all predicates in the sentence in parallel. The model is trained such that, as syntactic parsing models improve, providing high-quality parses at test time will improve its performance, allowing the model to leverage updated parsing models without requiring re-training.

为此,我们提出语言信息自注意力机制 (linguistically-informed self-attention, LISA) :该模型结合了多任务学习 (Caruana, 1993) 与多层多头自注意力机制 (Vaswani et al., 2017) ,训练目标包括: (1) 联合预测词性和谓词; (2) 执行句法分析; (3) 关注句法分析父节点;同时 (4) 分配语义角色标签。现有研究通常需要多个独立模型提供语言学分析(包括大多数不依赖句法的神经模型仍需外部谓词检测),而我们的模型实现了真正的端到端:浅层网络负责预测词性和谓词(后者将作为深层网络的输入进行评分)。传统方法需对每个句子重复编码以预测不同任务,并针对每个谓词重新编码以实现语义角色标注 (SRL) ,而本模型仅需单次编码即可并行完成句子级谓词预测、词性标注、句法分析树构建,以及所有谓词的语义角色标注。模型设计使得测试阶段句法分析模型的性能提升(提供更高质量的分析树)可直接提升本模型表现,无需重新训练即可兼容更新的句法分析模型。

In experiments on the CoNLL-2005 and CoNLL-2012 datasets we show that our linguistically-informed models out-perform the syntax-free state-of-the-art. On CoNLL-2005 with predicted predicates and standard word embeddings, our single model out-performs the previous state-of-the-art model on the WSJ test set by 2.5 F1 points absolute. On the challenging out-of-domain Brown test set, our model improves substantially over the previous state-of-the-art by more than 3.5 F1, a nearly 10% reduction in error. On CoNLL-2012, our model gains more than 2.5 F1 absolute over the previous state-of-the-art. Our models also show improvements when using contextually-encoded word representations (Peters et al., 2018), obtaining nearly 1.0 F1 higher than the state-of-the-art on CoNLL-2005 news and more than 2.0 F1 improvement on out-of-domain text.$^{1}$

在CoNLL-2005和CoNLL-2012数据集上的实验表明,我们的语言学信息模型优于当前最先进的无句法模型。在CoNLL-2005数据集上,使用预测谓词和标准词嵌入时,我们的单一模型在WSJ测试集上比之前的最先进模型绝对提升了2.5 F1。在具有挑战性的域外Brown测试集上,我们的模型比之前的最先进水平显著提高了超过3.5 F1,错误率降低了近10%。在CoNLL-2012数据集上,我们的模型比之前的最先进水平绝对提升了超过2.5 F1。当使用上下文编码的词表示(Peters et al., 2018)时,我们的模型也显示出改进,在CoNLL-2005新闻数据上比最先进水平高出近1.0 F1,在域外文本上提升了超过2.0 F1。$^{1}$

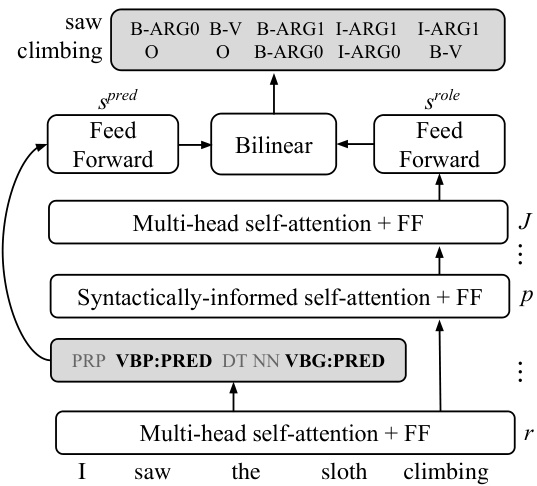

Figure 1: Word embeddings are input to $J$ layers of multi-head self-attention. In layer $p$ one attention head is trained to attend to parse parents (Figure 2). Layer $r$ is input for a joint predicate/POS classifier. Representations from layer $r$ corresponding to predicted predicates are passed to a bilinear operation scoring distinct predicate and role representations to produce per-token SRL predictions with respect to each predicted predicate.

图 1: 词嵌入 (word embeddings) 输入到 $J$ 层多头自注意力 (multi-head self-attention) 机制。在第 $p$ 层中,一个注意力头被训练用于关注解析父节点 (parse parents) (图 2)。第 $r$ 层作为联合谓词/词性分类器 (joint predicate/POS classifier) 的输入。来自第 $r$ 层的表示对应于预测谓词,这些表示被传递到双线性操作 (bilinear operation) 中,对不同的谓词和角色表示进行评分,从而针对每个预测谓词生成每个 Token 的语义角色标注 (SRL) 预测。

2 Model

2 模型

Our goal is to design an efficient neural network model which makes use of linguistic information as effectively as possible in order to perform end-to-end SRL. LISA achieves this by combining: (1) a new technique of supervising neural attention to predict syntactic dependencies with (2) multi-task learning across four related tasks.

我们的目标是设计一个高效的神经网络模型,尽可能有效地利用语言信息来实现端到端语义角色标注 (SRL)。LISA通过以下两项技术的结合实现这一目标:(1) 监督神经注意力预测句法依存关系的新技术,以及 (2) 跨四个相关任务的多任务学习。

Figure 1 depicts the overall architecture of our model. The basis for our model is the Transformer encoder introduced by Vaswani et al. (2017): we transform word embeddings into contextually-encoded token representations using stacked multi-head self-attention and feed-forward layers (§2.1).

图 1 展示了我们模型的整体架构。我们的模型基于 Vaswani 等人 (2017) 提出的 Transformer 编码器:通过堆叠的多头自注意力 (multi-head self-attention) 和前馈层 (§2.1),我们将词嵌入 (word embeddings) 转换为上下文编码的 Token 表征。

To incorporate syntax, one self-attention head is trained to attend to each token’s syntactic parent, allowing the model to use this attention head as an oracle for syntactic dependencies. We introduce this syntactically-informed self-attention (Figure 2) in more detail in $\S2.2$ .

为了融入句法信息,我们训练了一个自注意力头(self-attention head)来关注每个token的句法父节点,使模型能够将该注意力头作为句法依赖关系的预测器。我们将在$\S2.2$节详细阐述这种句法感知的自注意力机制(图2)。

Our model is designed for the more realistic setting in which gold predicates are not provided at test-time. Our model predicts predicates and integrates part-of-speech (POS) information into earlier layers by re-purposing representations closer to the input to predict predicate and POS tags using hard parameter sharing (§2.3). We simplify optimization and benefit from shared statistical strength derived from highly correlated POS and predicates by treating tagging and predicate detection as a single task, performing multi-class classification into the joint Cartesian product space of POS and predicate labels.

我们的模型针对测试时不提供黄金谓词这一更现实的场景而设计。该模型通过重新利用靠近输入端的表征来预测谓词和词性 (POS) 标签,采用硬参数共享机制将POS信息整合到更早的层级 (§2.3)。我们将标注任务和谓词检测视为单一任务,通过多类别分类进入POS与谓词标签的笛卡尔积联合空间,从而简化优化过程,并受益于高度相关的POS与谓词之间共享的统计强度。

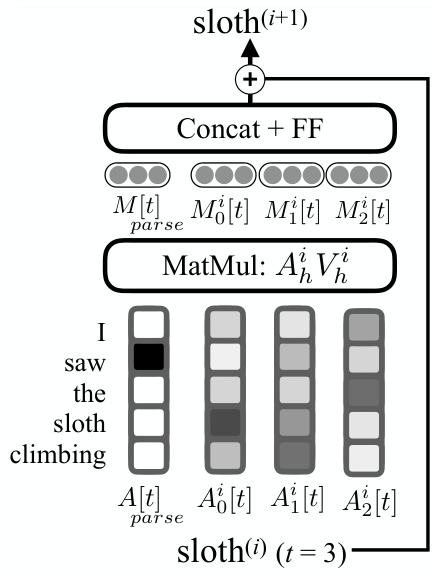

Figure 2: Syntactically-informed self-attention for the query word sloth. Attention weights $A_{parse}$ heavily weight the token’s syntactic governor, saw, in a weighted average over the token values $V_{parse}$. The other attention heads act as usual, and the attended representations from all heads are concatenated and projected through a feed-forward layer to produce the syntactically-informed representation for sloth.

图 2: 针对查询词"sloth"的语法感知自注意力机制。注意力权重 $A_{parse}$ 在token值 $V_{parse}$ 的加权平均中显著偏向该token的语法支配词"saw"。其他注意力头保持常规运作,所有注意力头的关注表示经过拼接后通过前馈层投影,最终生成"sloth"的语法感知表示。

Though typical models, which re-encode the sentence for each predicate, can simplify SRL to token-wise tagging, our joint model requires a different approach to classify roles with respect to each predicate. Contextually encoded tokens are projected to distinct predicate and role embeddings (§2.4), and each predicted predicate is scored with the sequence’s role representations using a bilinear model (Eqn. 6), producing per-label scores for BIO-encoded semantic role labels for each token and each semantic frame.

虽然典型模型(为每个谓词重新编码句子)可以将语义角色标注(SRL)简化为基于token的标注任务,但我们的联合模型需要采用不同方法对每个谓词相关的角色进行分类。经过上下文编码的token会被映射到不同的谓词和角色嵌入空间(§2.4),每个预测谓词通过双线性模型(公式6)与序列的角色表示进行评分,从而为每个token和每个语义框架的BIO编码语义角色标签生成逐标签分数。

The model is trained end-to-end by maximum likelihood using stochastic gradient descent (§2.5).

该模型通过随机梯度下降(见第2.5节)以端到端方式进行最大似然训练。

2.1 Self-attention token encoder

2.1 自注意力Token编码器

The basis for our model is a multi-head self-attention token encoder, recently shown to achieve state-of-the-art performance on SRL (Tan et al., 2018), and which provides a natural mechanism for incorporating syntax, as described in §2.2. Our implementation replicates Vaswani et al. (2017).

我们模型的基础是一个多头自注意力(multi-head self-attention) token编码器,该架构近期在语义角色标注(SRL)任务中实现了最先进性能 (Tan et al., 2018),并通过 §2.2 节描述的机制天然支持句法信息融合。我们的实现复现了 Vaswani 等人 (2017) 的工作。

The input to the network is a sequence $\mathcal{X}$ of $T$ token representations $x_{t}$ . In the standard setting these token representations are initialized to pretrained word embeddings, but we also experiment with supplying pre-trained ELMo representations combined with task-specific learned parameters, which have been shown to substantially improve performance of other SRL models (Peters et al., 2018). For experiments with gold predicates, we concatenate a predicate indicator embedding $p_{t}$ following previous work (He et al., 2017).

网络的输入是一个由 $T$ 个token表示 $x_{t}$ 组成的序列 $\mathcal{X}$。在标准设置中,这些token表示初始化为预训练的词嵌入,但我们也尝试提供预训练的ELMo表示与任务特定学习参数相结合的方法,这已被证明能显著提升其他语义角色标注 (SRL) 模型的性能 (Peters et al., 2018)。在使用黄金谓词的实验中,我们按照先前工作 (He et al., 2017) 的方法拼接了一个谓词指示器嵌入 $p_{t}$。

We project$^{2}$ these input embeddings to a representation that is the same size as the output of the self-attention layers. We then add a positional encoding vector computed as a deterministic sinusoidal function of $t$, since the self-attention has no innate notion of token position.

我们将这些输入嵌入投影到一个与自注意力层输出相同大小的表示。然后加上一个由$t$的确定性正弦函数计算得到的位置编码向量,因为自注意力机制本身不具备token位置的概念。
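The positional encoding step can be illustrated with a small sketch. The text only states that the vector is a deterministic sinusoidal function of $t$; the specific sine/cosine formula below is the one from Vaswani et al. (2017), assumed here because the implementation is said to replicate that work. The function names `sinusoidal_position` and `add_positions` are hypothetical.

```python
import math

def sinusoidal_position(t, d_model):
    """Encoding for position t (0-indexed), assuming the Vaswani et al. (2017) formula."""
    enc = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share one frequency: 1 / 10000^(2k / d_model).
        freq = 1.0 / (10000 ** ((i - i % 2) / d_model))
        enc.append(math.sin(t * freq) if i % 2 == 0 else math.cos(t * freq))
    return enc

def add_positions(tokens):
    """Add the positional vector to each (already projected) token embedding."""
    d_model = len(tokens[0])
    return [
        [x + p for x, p in zip(tok, sinusoidal_position(t, d_model))]
        for t, tok in enumerate(tokens)
    ]

# Two zero-valued 4-dimensional "embeddings", so the output is the encoding itself.
encoded = add_positions([[0.0] * 4, [0.0] * 4])
```

Because the function is deterministic, it adds no trainable parameters and applies to any sequence length.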

We feed this token representation as input to a series of $J$ residual multi-head self-attention layers with feed-forward connections. Denoting the $j$ th self-attention layer as $T^{(j)}(\cdot)$ , the output of that layer $s_{t}^{(j)}$ , and $L N(\cdot)$ layer normalization, the following recurrence applied to initial input $c_{t}^{(p)}$

我们将此token表示作为输入,送入一系列包含前馈连接的$J$个残差多头自注意力层。记第$j$个自注意力层为$T^{(j)}(\cdot)$,该层输出为$s_{t}^{(j)}$,$L N(\cdot)$表示层归一化,以下递推关系作用于初始输入$c_{t}^{(p)}$

$$

s_{t}^{(j)}=L N(s_{t}^{(j-1)}+T^{(j)}(s_{t}^{(j-1)}))

$$

gives our final token representations $s_{t}^{(j)}$. Each $T^{(j)}(\cdot)$ consists of: (a) multi-head self-attention and (b) a feed-forward projection.

给出最终的token表示 $s_{t}^{(j)}$。每个 $T^{(j)}(\cdot)$ 包含:(a) 多头自注意力机制 (multi-head self-attention) 和 (b) 前馈投影层 (feed-forward projection)。

The multi-head self attention consists of $H$ attention heads, each of which learns a distinct attention function to attend to all of the tokens in the sequence. This self-attention is performed for each token for each head, and the results of the $H$ self-attentions are concatenated to form the final self-attended representation for each token.

多头自注意力 (multi-head self attention) 由 $H$ 个注意力头组成,每个头学习不同的注意力函数来处理序列中的所有 token。每个 token 在每个头上都会进行自注意力计算,最终将 $H$ 个自注意力的结果拼接起来,形成每个 token 的最终自注意力表示。

Specifically, consider the matrix $S^{(j-1)}$ of $T$ token representations at layer $j-1$. For each attention head $h$, we project this matrix into distinct key, query and value representations $K_{h}^{(j)}$, $Q_{h}^{(j)}$ and $V_{h}^{(j)}$ of dimensions $T\times d_{k}$, $T\times d_{q}$ and $T\times d_{v}$ respectively. We can then multiply $Q_{h}^{(j)}$ by $K_{h}^{(j)^{T}}$ to obtain a $T\times T$ matrix of attention weights $A_{h}^{(j)}$ between each pair of tokens in the sentence. Following Vaswani et al. (2017) we perform scaled dot-product attention: we scale the weights by the inverse square root of their embedding dimension and normalize with the softmax function to produce a distinct distribution for each token over all the tokens in the sentence:

具体来说,考虑层 $j-1$ 的 $T$ 个 token 表示矩阵 $S^{(j-1)}$。对于每个注意力头 $h$,我们将该矩阵投影为维度分别为 $T\times d_{k}$、$T\times d_{q}$ 和 $T\times d_{v}$ 的键 (key)、查询 (query) 和值 (value) 表示 $K_{h}^{(j)}$、$Q_{h}^{(j)}$ 和 $V_{h}^{(j)}$。接着将 $Q_{h}^{(j)}$ 与 $K_{h}^{(j)^{T}}$ 相乘,得到句子中每对 token 之间的 $T\times T$ 注意力权重矩阵 $A_{h}^{(j)}$。根据 Vaswani 等人 (2017) 的方法,我们执行缩放点积注意力 (scaled dot-product attention):通过嵌入维度平方根的倒数缩放权重,并用 softmax 函数归一化,为每个 token 生成一个关于句子中所有 token 的独立分布:

$$

A_{h}^{(j)}=\operatorname{softmax}(d_{k}^{-0.5}Q_{h}^{(j)}K_{h}^{(j)^{T}})

$$

These attention weights are then multiplied by $V_{h}^{(j)}$ for each token to obtain the self-attended token representations $M_{h}^{(j)}$:

然后将这些注意力权重与每个Token的 $V_{h}^{(j)}$ 相乘,得到自注意力Token表示 $M_{h}^{(j)}$:

$$

M_{h}^{(j)}=A_{h}^{(j)}V_{h}^{(j)}

$$

Row $t$ of $M_{h}^{(j)}$, the self-attended representation for token $t$ at layer $j$, is thus the weighted sum with respect to $t$ (with weights given by $A_{h}^{(j)}$) over the token representations in $V_{h}^{(j)}$.

因此,层 $j$ 中 token $t$ 的自注意力表示 $M_{h}^{\left(j\right)}$ 的第 $t$ 行,是 $V_{h}^{(j)}$ 中 token 表示相对于 $t$ 的加权和(权重由 $A_{h}^{(j)}$ 给出)。

The outputs of all attention heads for each token are concatenated, and this representation is passed to the feed-forward layer, which consists of two linear projections each followed by leaky ReLU activations (Maas et al., 2013). We add the output of the feed-forward to the initial representation and apply layer normalization to give the final output of self-attention layer $j$, as in Eqn. 1.

每个token的所有注意力头输出被拼接后,该表征会传入前馈层。前馈层包含两个线性投影,每个投影后接Leaky ReLU激活函数 (Maas et al., 2013)。我们将前馈层输出与初始表征相加,并应用层归一化得到自注意力层$j$的最终输出,如公式1所示。
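As a rough illustration of one encoder layer as described in this subsection, the NumPy sketch below chains the per-head scaled dot-product attention, concatenation, a two-projection leaky-ReLU feed-forward block, and the residual connection with layer normalization. It is a minimal sketch under stated assumptions: all weights are random, the layer normalization omits learned gain and bias, the leaky-ReLU slope of 0.1 is arbitrary, and the function names are hypothetical.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)   # subtract the row max for stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-6):
    # Simplified: no learned gain/bias (an assumption of this sketch).
    mu = x.mean(axis=-1, keepdims=True)
    sd = x.std(axis=-1, keepdims=True)
    return (x - mu) / (sd + eps)

def leaky_relu(x, alpha=0.1):
    return np.where(x > 0, x, alpha * x)

def attention_head(S, Wq, Wk, Wv):
    """One head: returns (M_h, A_h) for the token matrix S of shape (T, d)."""
    Q, K, V = S @ Wq, S @ Wk, S @ Wv
    A = softmax(Q @ K.T / np.sqrt(K.shape[-1]))   # scaled dot-product weights
    return A @ V, A                                # weighted sum over values

def self_attention_layer(S, heads, W1, W2):
    """Concatenate all heads, apply the feed-forward block, add residual, normalize."""
    M = np.concatenate([attention_head(S, *h)[0] for h in heads], axis=-1)
    ff = leaky_relu(leaky_relu(M @ W1) @ W2)
    return layer_norm(S + ff)

rng = np.random.default_rng(0)
T, d, H, dk = 5, 8, 2, 4
heads = [tuple(rng.normal(size=(d, dk)) for _ in range(3)) for _ in range(H)]
W1 = rng.normal(size=(H * dk, 16))
W2 = rng.normal(size=(16, d))
S = rng.normal(size=(T, d))
out = self_attention_layer(S, heads, W1, W2)
_, A = attention_head(S, *heads[0])
```

Each row of `A` is a distribution over the tokens of the sentence, and `out` has the same shape as `S`, so layers of this kind can be stacked $J$ times.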

2.2 Syntactically-informed self-attention

2.2 语法感知自注意力 (Syntactically-informed self-attention)

Typically, neural attention mechanisms are left on their own to learn to attend to relevant inputs. Instead, we propose training the self-attention to attend to specific tokens corresponding to the syntactic structure of the sentence as a mechanism for passing linguistic knowledge to later layers.

通常,神经注意力机制需要自行学习关注相关输入。相反,我们提出训练自注意力机制,使其关注与句子句法结构对应的特定token,以此作为将语言学知识传递至后续层的机制。

Specifically, we replace one attention head with the deep bi-affine model of Dozat and Manning (2017), trained to predict syntactic dependencies. Let $A_{parse}$ be the parse attention weights, at layer $i$. Its input is the matrix of token representations $S^{(i-1)}$. As with the other attention heads, we project $S^{(i-1)}$ into key, value and query representations, denoted $K_{parse}$, $V_{parse}$, $Q_{parse}$. Here the key and query projections correspond to parent and dependent representations of the tokens, and we allow their dimensions to differ from the rest of the attention heads to more closely follow the implementation of Dozat and Manning (2017). Unlike the other attention heads which use a dot product to score key-query pairs, we score the compatibility between $K_{parse}$ and $Q_{parse}$ using a bi-affine operator $U_{heads}$ to obtain attention weights:

具体来说,我们将一个注意力头替换为Dozat和Manning (2017) 提出的深度双仿射模型,该模型经过训练用于预测句法依存关系。设 $A_{parse}$ 为第 $i$ 层的解析注意力权重,其输入是token表示矩阵 $S^{(i-1)}$。与其他注意力头类似,我们将 $S^{(i-1)}$ 投影为键、值和查询表示,分别记为 $K_{parse}$、$Q_{parse}$、$V_{parse}$。这里的键和查询投影对应token的父节点和子节点表示,并且我们允许它们的维度与其他注意力头不同,以更贴近Dozat和Manning (2017) 的实现方式。与其他使用点积对键-查询对进行评分的注意力头不同,我们使用双仿射算子 $U_{heads}$ 对 $K_{parse}$ 和 $Q_{parse}$ 之间的兼容性进行评分,从而获得注意力权重:

$$

A_{p a r s e}=\mathrm{softmax}(Q_{p a r s e}U_{h e a d s}K_{p a r s e}^{T})

$$

These attention weights are used to compose a weighted average of the value representations $V_{p a r s e}$ as in the other attention heads.

这些注意力权重用于组合值表示 $V_{p a r s e}$ 的加权平均值,与其他注意力头中的操作相同。

We apply auxiliary supervision at this attention head to encourage it to attend to each token’s parent in a syntactic dependency tree, and to encode information about the token’s dependency label. Denoting the attention weight from token $t$ to a candidate head $q$ as $A_{p a r s e}[t,q]$ , we model the probability of token $t$ having parent $q$ as:

我们在这个注意力头上应用辅助监督,以鼓励它关注句法依存树中每个token的父节点,并编码有关该token依存标签的信息。将token $t$ 到候选头 $q$ 的注意力权重表示为 $A_{p a r s e}[t,q]$ ,我们将token $t$ 以 $q$ 为父节点的概率建模为:

$$

P(q=\mathrm{head}(t)\mid\mathcal{X})=A_{p a r s e}[t,q]

$$

using the attention weights $A_{parse}[t]$ as the distribution over possible heads for token $t$. We define the root token as having a self-loop. This attention head thus emits a directed graph$^{3}$ where each token’s parent is the token to which the attention $A_{parse}$ assigns the highest weight.

使用注意力权重 $A_{p a r s e}[t]$ 作为 token $t$ 可能头部分布。我们将根 token 定义为具有自循环。因此,该注意力头会输出一个有向图3,其中每个 token 的父节点是 $A_{p a r s e}$ 分配最高权重的 token。

We also predict dependency labels using per-class bi-affine operations between parent and dependent representations $Q_{parse}$ and $K_{parse}$ to produce per-label scores, with locally normalized probabilities over dependency labels $y_{t}^{dep}$ given by the softmax function. We refer the reader to Dozat and Manning (2017) for more details.

我们还通过父节点和依赖节点表示 $Q_{parse}$ 与 $K_{parse}$ 之间的逐类双仿射操作来预测依存标签,生成逐标签分数,并通过 softmax 函数给出依存标签 $y_{t}^{dep}$ 的局部归一化概率。更多细节请参考 Dozat 和 Manning (2017) 的研究。

This attention head now becomes an oracle for syntax, denoted $\mathcal{P}$ , providing a dependency parse to downstream layers. This model not only predicts its own dependency arcs, but allows for the injection of auxiliary parse information at test time by simply setting $A_{p a r s e}$ to the parse parents produced by e.g. a state-of-the-art parser. In this way, our model can benefit from improved, external parsing models without re-training. Unlike typical multi-task models, ours maintains the ability to leverage external syntactic information.

该注意力头现成为句法的预言器,记为 $\mathcal{P}$,为下游层提供依存解析。该模型不仅能预测自身的依存弧,还能在测试时通过简单地将 $A_{parse}$ 设置为例如最先进解析器生成的解析父节点,从而注入辅助解析信息。如此一来,我们的模型无需重新训练即可受益于改进的外部解析模型。与典型的多任务模型不同,我们的模型保留了利用外部句法信息的能力。
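The parse attention head and the test-time injection of an external parse can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: weights are random, the bi-affine score is the plain $Q_{parse}U_{heads}K_{parse}^{T}$ form with no bias terms, and injection is assumed to mean replacing each row of $A_{parse}$ with a one-hot vector over the supplied parent index. The name `parse_head` and the toy sentence are hypothetical.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def parse_head(S, Wq, Wk, Wv, U, external_heads=None):
    """Return (attended values, attention weights, parent index per token)."""
    Q, K, V = S @ Wq, S @ Wk, S @ Wv
    if external_heads is None:
        # Bi-affine scoring of every (dependent, candidate parent) pair.
        A = softmax(Q @ U @ K.T)
    else:
        # Assumed injection scheme: one-hot rows pointing at the given parents.
        A = np.eye(S.shape[0])[np.asarray(external_heads)]
    return A @ V, A, A.argmax(axis=-1)

rng = np.random.default_rng(0)
T, d, d_arc, d_v = 4, 8, 6, 5            # toy sentence: "I saw the sloth"
S = rng.normal(size=(T, d))
Wq, Wk = rng.normal(size=(d, d_arc)), rng.normal(size=(d, d_arc))
Wv = rng.normal(size=(d, d_v))
U = rng.normal(size=(d_arc, d_arc))

# Predicted parse: each row of A_pred is a distribution over candidate parents.
M_pred, A_pred, heads_pred = parse_head(S, Wq, Wk, Wv, U)

# Injected parse: "saw" (index 1) is the root and therefore points to itself.
external = [1, 1, 3, 1]
M_inj, A_inj, heads_inj = parse_head(S, Wq, Wk, Wv, U, external_heads=external)
```

With a one-hot row, the attended representation of a token is exactly the value vector of its parent, which is why later layers can consume a better external parse without any change to the trained parameters.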

2.3 Multi-task learning

2.3 多任务学习

We also share the parameters of lower layers in our model to predict POS tags and predicates. Following He et al. (2017), we focus on the end-to-end setting, where predicates must be predicted on-the-fly. Since we also train our model to predict syntactic dependencies, it is beneficial to give the model knowledge of POS information. While much previous work employs a pipelined approach to both POS tagging for dependency parsing and predicate detection for SRL, we take a multi-task learning (MTL) approach (Caruana, 1993), sharing the parameters of earlier layers in our SRL model with a joint POS and predicate detection objective. Since POS is a strong predictor of predicates$^{4}$ and the complexity of training a multi-task model increases with the number of tasks, we combine POS tagging and predicate detection into a joint label space: for each POS tag TAG which is observed co-occurring with a predicate, we add a label of the form TAG:PREDICATE. Specifically, we feed the representation $s_{t}^{(r)}$ from a layer $r$ preceding the syntactically-informed layer $p$ to a linear classifier to produce per-class scores $r_{t}$ for token $t$. We compute locally-normalized probabilities using the softmax function: $P(y_{t}^{prp}\mid\mathcal{X})\propto\exp(r_{t})$, where $y_{t}^{prp}$ is a label in the joint space.

我们还共享模型中较低层的参数来预测词性 (POS) 标签和谓词。遵循 He 等人 (2017) 的方法,我们专注于端到端设置,其中谓词必须即时预测。由于我们还训练模型预测句法依存关系,因此为模型提供词性信息是有益的。虽然之前许多工作采用流水线方法分别进行依存解析的词性标注和语义角色标注 (SRL) 的谓词检测,但我们采用了多任务学习 (MTL) 方法 (Caruana, 1993),在我们的语义角色标注(SRL)模型中共享早期层的参数,以实现词性标注(POS)和谓词检测的联合目标。由于词性是谓词的强预测指标$^{4}$,且多任务模型的训练复杂度随任务数量增加而提升,我们将词性标注和谓词检测合并为联合标签空间:对于每个与谓词共现的词性标记TAG,我们添加形如TAG:PREDICATE的标签。具体而言,我们将句法感知层$p$之前某层$r$的表征$s_{t}^{(r)}$输入线性分类器,为每个token $t$生成类别分数$r_{t}$。通过softmax函数计算局部归一化概率:$P(y_{t}^{prp}\mid\mathcal{X})\propto\exp(r_{t})$,其中$y_{t}^{prp}$是联合空间中的标签。
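To make the joint label space concrete, here is a small sketch of how the TAG:PREDICATE labels might be built from training data and decoded back into a POS tag plus a predicate flag. The data layout (triples of word, POS tag, predicate flag) and the function names are assumptions made for illustration only.

```python
SUFFIX = ":PREDICATE"

def build_joint_labels(tagged_corpus):
    """Collect plain POS labels plus TAG:PREDICATE for tags seen on predicates."""
    labels = set()
    for sentence in tagged_corpus:
        for _word, pos, is_predicate in sentence:
            labels.add(pos + SUFFIX if is_predicate else pos)
    return sorted(labels)

def split_joint_label(label):
    """Recover (POS tag, is_predicate) from a joint label.

    Checking the suffix (rather than splitting on ':') keeps the
    Penn Treebank punctuation tag ':' intact.
    """
    if label.endswith(SUFFIX):
        return label[: -len(SUFFIX)], True
    return label, False

corpus = [
    [("The", "DT", False), ("sloth", "NN", False), ("climbed", "VBD", True)],
    [("It", "PRP", False), ("was", "VBD", False), ("slow", "JJ", False)],
]
joint_labels = build_joint_labels(corpus)
```

A single softmax over `joint_labels` then yields both the POS tag and the predicate decision for each token, so only one classifier and one loss term are needed for the two tasks.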

2.4 Predicting semantic roles

2.4 语义角色预测

Our final goal is to predict semantic roles for each predicate in the sequence. We score each predicate against each token in the sequence using a bilinear operation, producing per-label scores for each token for each predicate, with predicates and syntax determined by oracles $\mathcal{V}$ and $\mathcal{P}$.

我们的最终目标是预测序列中每个谓词的语义角色。我们通过双线性操作对序列中的每个token与每个谓词进行评分,为每个谓词的每个token生成每个标签的得分,其中谓词和句法由预言器$\mathcal{V}$和$\mathcal{P}$确定。

First, we project each token representation $s_{t}^{(J)}$ to a predicate-specific representation $s_{t}^{p r e d}$ and a role-specific representation $s_{t}^{r o l e}$ . We then provide these representations to a bilinear transformation $U$ for scoring. So, the role label scores $s_{f t}$ for the token at index $t$ with respect to the predicate at index $f$ (i.e. token $t$ and frame $f$ ) are given by:

首先,我们将每个token表示 $s_{t}^{(J)}$ 投影到谓词特定表示 $s_{t}^{p r e d}$ 和角色特定表示 $s_{t}^{r o l e}$。然后,将这些表示提供给双线性变换 $U$ 进行评分。因此,对于索引为 $t$ 的token相对于索引为 $f$ 的谓词(即token $t$ 和帧 $f$)的角色标签分数 $s_{f t}$ 由下式给出:

$$

s_{f t}=(s_{f}^{p r e d})^{T}U s_{t}^{r o l e}

$$

which can be computed in parallel across all semantic frames in an entire minibatch. We calculate a locally normalized distribution over role labels for token $t$ in frame $f$ using the softmax function: $P(y_{f t}^{r o l e}\mid\mathcal{P},\mathcal{V},\mathcal{X})\propto\exp(s_{f t})$ .

可以在整个小批次的所有语义帧中并行计算。我们使用softmax函数计算帧$f$中token$t$的角色标签的局部归一化分布:$P(y_{f t}^{r o l e}\mid\mathcal{P},\mathcal{V},\mathcal{X})\propto\exp(s_{f t})$。
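The bilinear scoring step can be sketched with a single `einsum` that scores every (frame, token, label) triple at once. This is a sketch under stated assumptions: the projections to predicate and role representations are taken to be plain linear maps, $U$ is stored as a tensor with one $d_{p}\times d_{r}$ slice per role label, and all weights are random. The name `role_scores` is hypothetical.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def role_scores(S, predicate_idx, W_pred, W_role, U):
    """Score every token against every predicate for every role label.

    S: (T, d) final token representations
    predicate_idx: indices of the F predicted predicates
    U: (d_p, L, d_r) bilinear tensor, one slice per role label
    Returns an array of shape (F, T, L).
    """
    s_pred = S[predicate_idx] @ W_pred   # (F, d_p) predicate-specific vectors
    s_role = S @ W_role                  # (T, d_r) role-specific vectors
    return np.einsum("fp,plr,tr->ftl", s_pred, U, s_role)

rng = np.random.default_rng(0)
T, d, d_p, d_r, L = 6, 8, 4, 4, 5
S = rng.normal(size=(T, d))
W_pred, W_role = rng.normal(size=(d, d_p)), rng.normal(size=(d, d_r))
U = rng.normal(size=(d_p, L, d_r))
predicate_idx = [1, 4]                   # two predicted predicates (frames)

scores = role_scores(S, predicate_idx, W_pred, W_role, U)
probs = softmax(scores)                  # locally normalized over role labels
```

Because the sentence is encoded once and all frames are scored in one tensor contraction, adding predicates increases only the size of the first axis.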

At test time, we perform constrained decoding using the Viterbi algorithm to emit valid sequences of BIO tags, using unary scores $s_{f t}$ and the transition probabilities given by the training data.

在测试时,我们使用维特比算法进行约束解码,利用一元分数 $s_{f t}$ 和训练数据提供的转移概率来生成有效的BIO标签序列。
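A minimal sketch of constrained Viterbi decoding over BIO tags follows. It assumes the constraint is the usual BIO well-formedness rule (an `I-X` tag may only follow `B-X` or `I-X`, and may not start a sequence) and treats learned transition scores as an optional log-score matrix; the text does not spell out either detail, so both are assumptions, as are the function names.

```python
import math

def bio_allowed(prev, cur):
    """An I-X tag may only follow B-X or I-X; every other transition is allowed."""
    if cur.startswith("I-"):
        return prev in ("B-" + cur[2:], "I-" + cur[2:])
    return True

def viterbi_bio(unary, labels, trans=None):
    """unary[t][k] is the log-score of labels[k] at token t.

    trans[i][j], if given, is a log transition score from labels[i] to labels[j].
    """
    n = len(labels)

    def tscore(i, j):
        if not bio_allowed(labels[i], labels[j]):
            return -math.inf
        return trans[i][j] if trans else 0.0

    # A sequence may not begin with an I- tag.
    best = [u if not labels[k].startswith("I-") else -math.inf
            for k, u in enumerate(unary[0])]
    back = []
    for row in unary[1:]:
        ptr, nxt = [], []
        for j in range(n):
            i = max(range(n), key=lambda i: best[i] + tscore(i, j))
            ptr.append(i)
            nxt.append(best[i] + tscore(i, j) + row[j])
        back.append(ptr)
        best = nxt
    # Follow back-pointers from the best final label.
    k = max(range(n), key=lambda k: best[k])
    path = [k]
    for ptr in reversed(back):
        k = ptr[k]
        path.append(k)
    return [labels[k] for k in reversed(path)]

labels = ["O", "B-ARG0", "I-ARG0"]
unary = [[0.0, -1.0, -0.5],    # greedy choice: O
         [-2.0, -3.0, 0.0],    # greedy choice: I-ARG0 (invalid after O)
         [0.0, -4.0, -4.0]]    # greedy choice: O
decoded = viterbi_bio(unary, labels)
```

In this toy input, taking the per-token argmax would emit `O I-ARG0 O`, which is not a valid BIO sequence; the constrained decoder instead returns `B-ARG0 I-ARG0 O`.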

2.5 Training

2.5 训练

We maximize the sum of the likelihoods of the individual tasks. In order to maximize our model’s ability to leverage syntax, during training we clamp $\mathcal{P}$ to the gold parse ($\mathcal{P}_{G}$) and $\mathcal{V}$ to gold predicates $\mathcal{V}_{G}$ when passing parse and predicate representations to later layers, whereas syntactic head prediction and joint predicate/POS prediction are conditioned only on the input sequence $\mathcal{X}$. The overall objective is thus:

我们最大化各任务似然的总和。为了增强模型利用句法的能力,在训练过程中,当向后续层传递句法解析和谓词表征时,我们将 $\mathcal{P}$ 固定为黄金解析 ($\mathcal{P}_{G}$),并将 $\mathcal{V}$ 固定为黄金谓词 $\mathcal{V}_{G}$,而句法中心词预测及联合谓词/词性预测仅基于输入序列 $\mathcal{X}$。因此整体目标函数为:

$$

\begin{aligned}\frac{1}{T}\sum_{t=1}^{T}\Big[&\sum_{f=1}^{F}\log P(y_{ft}^{role}\mid\mathcal{P}_{G},\mathcal{V}_{G},\mathcal{X})\\ &+\log P(y_{t}^{prp}\mid\mathcal{X})\\ &+\lambda_{1}\log P(\mathrm{head}(t)\mid\mathcal{X})\\ &+\lambda_{2}\log P(y_{t}^{dep}\mid\mathcal{P}_{G},\mathcal{X})\Big]\end{aligned}

$$

where $\lambda_{1}$ and $\lambda_{2}$ are penalties on the syntactic attention loss.

其中 $\lambda_{1}$ 和 $\lambda_{2}$ 是句法注意力损失的惩罚项。
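The structure of the objective can be checked with a few lines of plain Python that combine per-token log-probabilities of the gold labels. This only illustrates how the four terms are weighted and averaged; the input layout (lists of gold-label log-probabilities already produced by the model) and the name `lisa_objective` are assumptions of this sketch.

```python
def lisa_objective(role_logp, prp_logp, head_logp, dep_logp, lam1=1.0, lam2=1.0):
    """Average per-token sum of the four task log-likelihoods.

    role_logp[t][f]: log P(gold role of token t in frame f | gold parse, gold predicates, X)
    prp_logp[t]:     log P(gold joint POS/predicate label of token t | X)
    head_logp[t]:    log P(gold syntactic head of token t | X)
    dep_logp[t]:     log P(gold dependency label of token t | gold parse, X)
    """
    T = len(prp_logp)
    total = 0.0
    for t in range(T):
        total += (sum(role_logp[t])
                  + prp_logp[t]
                  + lam1 * head_logp[t]
                  + lam2 * dep_logp[t])
    return total / T

# Two tokens, two frames, made-up log-probabilities.
value = lisa_objective(
    role_logp=[[-0.1, -0.2], [-0.3, -0.4]],
    prp_logp=[-0.5, -0.5],
    head_logp=[-1.0, -1.0],
    dep_logp=[-2.0, -2.0],
    lam1=0.5,
    lam2=0.25,
)
```

Training maximizes this quantity; in a gradient-descent setup one would typically minimize its negation.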

We train the model using Nadam (Dozat, 2016) SGD combined with the learning rate schedule in Vaswani et al. (2017). In addition to MTL, we regularize our model using dropout (Srivastava et al., 2014). We use gradient clipping to avoid exploding gradients (Bengio et al., 1994; Pascanu et al., 2013). Additional details on optimization and hyperparameters are included in Appendix A.

我们使用Nadam (Dozat, 2016) SGD结合Vaswani等人 (2017) 的学习率调度策略来训练模型。除了多任务学习 (MTL) 外,我们还采用dropout (Srivastava等人, 2014) 对模型进行正则化。通过梯度裁剪防止梯度爆炸 (Bengio等人, 1994; Pascanu等人, 2013)。优化细节和超参数设置详见附录A。

3 Related work

3 相关工作

Early approaches to SRL (Pradhan et al., 2005; Surdeanu et al., 2007; Johansson and Nugues, 2008; Toutanova et al., 2008) focused on developing rich sets of linguistic features as input to a linear model, often combined with complex constrained inference e.g. with an ILP (Punyakanok et al., 2008). Tackstrom et al. (2015) showed that constraints could be enforced more efficiently using a clever dynamic program for exact inference. Sutton and McCallum (2005) modeled syntactic parsing and SRL jointly, and Lewis et al. (2015) jointly modeled SRL and CCG parsing.

早期的语义角色标注(SRL)方法(Pradhan et al., 2005; Surdeanu et al., 2007; Johansson and Nugues, 2008; Toutanova et al., 2008)主要关注开发丰富的语言学特征集作为线性模型的输入,通常结合复杂的约束推理(例如使用整数线性规划(ILP)(Punyakanok et al., 2008))。Tackstrom等人(2015)证明可以通过巧妙的动态规划算法更高效地执行精确推理约束。Sutton和McCallum(2005)联合建模了句法分析和语义角色标注任务,Lewis等人(2015)则联合建模了语义角色标注和组合范畴语法(CCG)分析。

Collobert et al. (2011) were among the first to use a neural network model for SRL, a CNN over word embeddings which failed to out-perform non-neural models. FitzGerald et al. (2015) successfully employed neural networks by embedding lexicalized features and providing them as factors in the model of Tackstrom et al. (2015).

Collobert等人(2011) 是首批将神经网络模型 (CNN over word embeddings) 应用于语义角色标注 (SRL) 的研究者之一,但该模型未能超越非神经模型。FitzGerald等人(2015) 通过嵌入词汇化特征 (lexicalized features) 并作为Tackstrom等人(2015) 模型的因子,成功应用了神经网络。

More recent neural models are syntax-free. Zhou and Xu (2015), Marcheggiani et al. (2017) and He et al. (2017) all use variants of deep LSTMs with constrained decoding, while Tan et al. (2018) apply self-attention to obtain state-of-the-art SRL with gold predicates. Like this work, He et al. (2017) present end-to-end experiments, predicting predicates using an LSTM, and He et al. (2018) jointly predict SRL spans and predicates in a model based on that of Lee et al. (2017), obtaining state-of-the-art predicted predicate SRL. Concurrent to this work, Peters et al. (2018) and He et al. (2018) report significant gains on PropBank SRL by training a wide LSTM language model and using a task-specific transformation of its hidden representations (ELMo) as a deep, and computationally expensive, alternative to typical word embeddings. We find that LISA obtains further accuracy increases when provided with ELMo word representations, especially on out-of-domain data.

更近期的神经模型已不再依赖句法。Zhou和Xu (2015) 、Marcheggiani等人 (2017) 以及He等人 (2017) 均采用带约束解码的深度LSTM变体,而Tan等人 (2018) 则通过自注意力机制实现了基于黄金谓词的最优语义角色标注 (SRL) 。与本研究类似,He等人 (2017) 进行了端到端实验,使用LSTM预测谓词;He等人 (2018) 基于 Lee 等人 (2017) 的模型联合预测语义角色标注 (SRL) 跨度和谓词,取得了当时最先进的预测谓词 SRL 效果。与此同时,Peters 等人 (2018) 和 He 等人 (2018) 通过训练一个宽 LSTM 语言模型,并将其隐藏表示 (ELMo) 进行任务特定转换,作为传统词嵌入的深度且计算昂贵的替代方案,在 PropBank SRL 任务上报告了显著提升。我们发现,当提供 ELMo 词表示时,LISA 能获得进一步的准确率提升,尤其在领域外数据上表现更优。

Some work has incorporated syntax into neural models for SRL. Roth and Lapata (2016) incorporate syntax by embedding dependency paths, and similarly Marcheggiani and Titov (2017) encode syntax using a graph CNN over a predicted syntax tree, out-performing models without syntax on CoNLL-2009. These works are limited to incorporating partial dependency paths between tokens whereas our technique incorporates the entire parse. Additionally, Marcheggiani and Titov (2017) report that their model does not out-perform syntax-free models on out-of-domain data, a setting in which our technique excels.

一些研究已将句法融入神经模型用于语义角色标注(SRL)。Roth和Lapata(2016)通过嵌入依存路径来融合句法信息,类似地,Marcheggiani和Titov(2017)在预测的句法树上使用图卷积神经网络(Graph CNN)编码句法,在CoNLL-2009上超越了无句法模型。这些工作仅限于融合token之间的部分依存路径,而我们的技术整合了完整句法树。此外,Marcheggiani和Titov(2017)报告其模型在领域外数据上未超越无句法模型,而我们的技术在该场景表现优异。

MTL (Caruana, 1993) is popular in NLP, and others have proposed MTL models which incorporate subsets of the tasks we do (Collobert et al., 2011; Zhang and Weiss, 2016; Hashimoto et al., 2017; Peng et al., 2017; Swayamdipta et al., 2017). We build off work that investigates where and when to combine different tasks to achieve the best results (Søgaard and Goldberg, 2016; Bingel and Søgaard, 2017; Alonso and Plank, 2017). Our specific method of incorporating supervision into self-attention is most similar to the concurrent work of Liu and Lapata (2018), who use edge marginals produced by the matrix-tree algorithm as attention weights for document classification and natural language inference.

MTL (Caruana, 1993) 在自然语言处理领域广受欢迎,其他研究者也提出了包含我们所处理任务子集的MTL模型 (Collobert et al., 2011; Zhang and Weiss, 2016; Hashimoto et al., 2017; Peng et al., 2017; Swayamdipta et al., 2017)。我们的研究基于探索何时何地结合不同任务以获得最佳效果的工作 (Søgaard and Goldberg, 2016; Bingel and Søgaard, 2017; Alonso and Plank, 2017)。我们将监督信息融入自注意力机制的具体方法,与Liu和Lapata (2018) 同期工作最为相似——他们使用矩阵树算法生成的边缘概率作为文档分类和自然语言推理任务的注意力权重。

The question of training on gold versus predicted labels is closely related to learning to search (Daumé III et al., 2009; Ross et al., 2011; Chang et al., 2015) and scheduled sampling (Bengio et al., 2015), with applications in NLP to sequence labeling and transition-based parsing (Choi and Palmer, 2011; Goldberg and Nivre, 2012; Ballesteros et al., 2016). Our approach may be interpreted as an extension of teacher forcing (Williams and Zipser, 1989) to MTL. We leave exploration of more advanced scheduled sampling techniques to future work.

在黄金标注与预测标注上进行训练的问题,与学习搜索 (Daumé III et al., 2009; Ross et al., 2011; Chang et al., 2015) 和计划采样 (Bengio et al., 2015) 密切相关,这些方法在自然语言处理 (NLP) 中应用于序列标注和基于转移的解析 (Choi and Palmer, 2011; Goldberg and Nivre, 2012; Ballesteros et al., 2016)。我们的方法可以视为教师强制 (Williams and Zipser, 1989) 在多任务学习 (MTL) 中的扩展。我们将更先进的计划采样技术的探索留给未来工作。
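The relationship between teacher forcing and scheduled sampling described above can be illustrated with a small sketch. This is not the training procedure of the paper: it is a simplified, per-sentence variant in which `choose_heads`, `linear_decay` and the `gold_prob` parameter are hypothetical names introduced only for illustration. With `gold_prob = 1.0` the downstream task always sees the gold parse (teacher forcing); a decaying `gold_prob` would gradually expose it to predicted parses, which is the kind of technique left to future work.

```python
import random
from typing import List


def choose_heads(gold_heads: List[int], predicted_heads: List[int],
                 gold_prob: float, rng: random.Random) -> List[int]:
    """Pick the parse fed to the downstream task for one training sentence.

    gold_prob = 1.0 always returns the gold parse (teacher forcing);
    gold_prob = 0.0 always returns the model's own predicted parse.
    """
    if not 0.0 <= gold_prob <= 1.0:
        raise ValueError("gold_prob must lie in [0, 1]")
    # rng.random() is in [0, 1), so gold_prob = 1.0 always selects gold.
    return gold_heads if rng.random() < gold_prob else predicted_heads


def linear_decay(step: int, total_steps: int) -> float:
    """Hypothetical schedule: gold probability falls linearly from 1 to 0."""
    return max(0.0, 1.0 - step / total_steps)


rng = random.Random(0)
gold = [1, 2, 2]   # parent index per token (illustrative values)
pred = [2, 2, 2]   # a parse with one wrong attachment

teacher_forced = choose_heads(gold, pred, 1.0, rng)
fully_predicted = choose_heads(gold, pred, 0.0, rng)
```

Sampling per sentence keeps the sketch short; Bengio et al. (2015) describe sampling at the level of individual decisions.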

4 Experimental results

4 实验结果

We present results on the CoNLL-2005 shared task (Carreras and Màrquez, 2005) and the CoNLL-2012 English subset of OntoNotes 5.0 (Pradhan et al., 2013), achieving state-of-the-art results for a single model with predicted predicates on both corpora. We experiment with both standard pre-trained GloVe word embeddings (Pennington et al., 2014) and pre-trained ELMo representations with fine-tuned task-specific parameters (Peters et al., 2018) in order to best compare to prior work. Hyperparameters that resulted in the best performance on the validation set were selected via a small grid search, and models were trained for a maximum of 4 days on one TitanX GPU using early stopping on the validation set. We convert constituencies to dependencies using the Stanford head rules v3.5 (de Marneffe and Manning, 2008). A detailed description of hyperparameter settings and data pre-processing can be found in Appendix A.

我们在CoNLL-2005共享任务(Carreras和Màrquez,2005)以及OntoNotes 5.0的CoNLL-2012英文子集(Pradhan等人,2013)上展示了实验结果,在这两个语料库上使用预测谓词的单一模型取得了最先进的结果。为了与先前工作进行最佳对比,我们同时采用了标准预训练的GloVe词嵌入(Pennington等人,2014)和预训练的ELMo表示(Peters等人,2018)配合微调的任务特定参数。通过小规模网格搜索选择在验证集上表现最佳的超参数,并在单块TitanX GPU上训练最长4天,同时在验证集上采用早停策略。我们使用斯坦福头规则v3.5(de Marneffe和Manning,2008)将成分句法结构转换为依存关系。超参数设置和数据预处理的详细说明见附录A。

We compare our LISA models to four strong baselines. For experiments using predicted predicates, we compare to He et al. (2018) and the ensemble model (PoE) from He et al. (2017), as well as a version of our own self-attention model which does not incorporate syntactic information (SA). To compare to more prior work, we present additional results on CoNLL-2005 with models given gold predicates at test time. In these experiments we also compare to Tan et al. (2018), the previous state-of-the-art SRL model using gold predicates and standard embeddings.

我们将LISA模型与四个强基线进行比较:在使用预测谓词的实验中,我们对比了He et al. (2018) 、He et al. (2017) 的集成模型 (PoE) ,以及我们自身未融合句法信息的自注意力模型版本 (SA) 。为了与更多先前工作对比,我们在CoNLL-2005测试集上补充了使用真实谓词时的实验结果。这些实验中还对比了Tan et al. (2018) ——此前使用真实谓词和标准嵌入的最先进语义角色标注模型。

We demonstrate that our models benefit from injecting state-of-the-art predicted parses at test time by fixing the attention to parses predicted by Dozat and Manning (2017), the winner of the 2017 CoNLL shared task (Zeman et al., 2017), which we re-train using ELMo embeddings. In all cases, using these parses at test time improves performance.

我们证明,通过在测试时注入最先进的预测解析结果 ,即固定注意力机制为 Dozat 和 Manning (2017) 提出的解析结果(我们使用 ELMo 嵌入重新训练的 2017 年 CoNLL 共享任务冠军模型 [Zeman et al., 2017]),我们的模型性能得到了提升。在所有情况下,测试时使用这些解析结果都能提高性能。
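Fixing an attention head to an external parse can be pictured as replacing that head's attention weights with a one-hot matrix in which each token attends only to its syntactic parent. The sketch below is a minimal pure-Python illustration of that idea, not the authors' implementation; the function names `parse_to_attention` and `attend`, the toy value vectors, and the convention that the root token points at itself are all assumptions made for the example.

```python
from typing import List


def parse_to_attention(heads: List[int]) -> List[List[float]]:
    """Build a one-hot attention matrix from a dependency parse.

    heads[i] is the index of the syntactic parent of token i. The root is
    assumed to point at itself (an illustrative convention).
    """
    n = len(heads)
    attn = [[0.0] * n for _ in range(n)]
    for i, h in enumerate(heads):
        if not 0 <= h < n:
            raise ValueError(f"head index {h} out of range for token {i}")
        attn[i][h] = 1.0  # token i puts all attention mass on its parent
    return attn


def attend(attn: List[List[float]], values: List[List[float]]) -> List[List[float]]:
    """Attention output: out[i] = sum_j attn[i][j] * values[j]."""
    n, dim = len(values), len(values[0])
    return [[sum(attn[i][j] * values[j][d] for j in range(n)) for d in range(dim)]
            for i in range(n)]


# Toy sentence "the cat sat": the -> cat, cat -> sat, sat is the root.
heads = [1, 2, 2]
values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]  # made-up value vectors
injected = attend(parse_to_attention(heads), values)
```

With one-hot weights, each token's output for this head is simply the value vector of its parent, so swapping in a different parser's output at test time changes only the `heads` list and requires no change to the model parameters.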

We also evaluate our model using the gold syntactic parse at test time $\left(+\mathbf{Gold}\right)$ , to provide an upper bound for the benefit that syntax could have for SRL using LISA. These experiments show that despite LISA’s strong performance, there remains substantial room for improvement. In $\S4.3$ we perform further analysis comparing SRL models using gold and predicted parses.

我们还使用测试时的黄金句法分析 $\left(+\mathbf{Gold}\right)$ 来评估模型,以确定句法分析对使用LISA进行语义角色标注(SRL)可能带来的收益上限。实验表明,尽管LISA表现优异,仍有很大改进空间。在 $\S4.3$ 节中,我们将进一步分析比较使用黄金句法分析和预测句法分析的SRL模型。

| Model | Dev P | Dev R | Dev F1 | WSJ Test P | WSJ Test R | WSJ Test F1 | Brown Test P | Brown Test R | Brown Test F1 |
|---|---|---|---|---|---|---|---|---|---|
| GloVe | | | | | | | | | |
| He et al. (2017) PoE | 81.8 | 81.2 | 81.5 | 82.0 | 83.4 | 82.7 | 69.7 | 70.5 | 70.1 |
| He et al. (2018) | 81.3 | 81.9 | 81.6 | 81.2 | 83.9 | 82.5 | 69.7 | 71.9 | 70.8 |
| SA | 83.52 | 81.28 | 82.39 | 84.17 | 83.28 | 83.72 | 72.98 | 70.1 | 71.51 |
| LISA | 83.1 | 81.39 | 82.24 | 84.07 | 83.16 | 83.61 | 73.32 | 70.56 | 71.91 |
| +D&M | 84.59 | 82.59 | 83.58 | 85.53 | 84.45 | 84.99 | 75.8 | 73.54 | 74.66 |
| +Gold | 87.91 | 85.73 | 86.81 | - | - | - | - | - | - |
| ELMo | | | | | | | | | |
| He et al. (2018) | 84.9 | 85.7 | 85.3 | 84.8 | 87.2 | 86.0 | 73.9 | 78.4 | 76.1 |
| SA | 85.78 | 84.74 | 85.26 | 86.21 | 85.98 | 86.09 | 77.1 | 75.61 | 76.35 |
| LISA | 86.07 | 84.64 | 85.35 | 86.69 | 86.42 | 86.55 | 78.95 | 77.17 | 78.05 |
| +D&M | 85.83 | 84.51 | 85.17 | 87.13 | 86.67 | 86.90 | 79.02 | 77.49 | 78.25 |
| +Gold | 88.51 | 86.77 | 87.63 | - | - | - | - | - | - |

Table 1: Precision, recall and F1 on the CoNLL-2005 development and test sets.

表 1: CoNLL-2005 开发集和测试集上的精确率 (Precision)、召回率 (Recall) 和 F1 值。
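As a reading aid for the table, F1 is the harmonic mean of precision and recall, $F_1 = 2PR/(P+R)$. The short sketch below recomputes F1 for three rows of Table 1 from their reported P and R. The helper name `f1_score` is chosen here for illustration; because the reported P and R are themselves rounded, a recomputed value may differ from the reported one in the last digit.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same scale as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R, reported F1) copied from Table 1.
rows = {
    "GloVe LISA +D&M, WSJ Test": (85.53, 84.45, 84.99),
    "GloVe SA, Brown Test": (72.98, 70.1, 71.51),
    "ELMo He et al. (2018), WSJ Test": (84.8, 87.2, 86.0),
}

# Recompute F1 for each row, rounded to two decimals like the table.
recomputed = {name: round(f1_score(p, r), 2) for name, (p, r, _) in rows.items()}
```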

Table 2: Precision, recall and F1 on CoNLL-2005 with gold predicates.

| WSJ Test | P | R | F1 |
|---|---|---|---|
| He et al. (2018) | 84.2 | 83.7 | 83.9 |
| Tan et al. (2018) | 84.5 | 85.2 | 84.8 |
| SA | 84.7 | 84.24 | 84.47 |
| LISA | 84.72 | 84.57 | 84.64 |
| +D&M | 86.02 | 86.05 | 86.04 |

| Brown Test | P | R | F1 |
|---|---|---|---|
| He et al. (2018) | 74.2 | 73.1 | 73.7 |
| Tan et al. (2018) | 73.5 | 74.6 | 74.1 |
| SA | 73.89 | 72.39 | 73.13 |
| LISA | 74.77 | 74.32 | 74.55 |
| +D&M | 76.65 | 76.44 | 76.54 |

表 2: CoNLL-2005带黄金谓词的精确率、召回率和F1值。

4.1 Semantic role labeling

4.1 语义角色标注

Table 1 lists precision, recall and F1 on the CoNLL-2005 development and test sets using predicted predicates. For models using GloVe embeddings, our syntax-free SA model already achieves a new state-of-the-art by jointly predicting predicates, POS and SRL. LISA with its own parses performs comparably to SA, but when supplied with D&M parses LISA out-performs the previous state-of-the-art by 2.5 F1 points. On the out-of-domain Brown test set, LISA also performs comparably to its syntax-free counterpart with its own parses, but with D&M parses LISA performs exceptionally well, more than 3.5 F1 points higher than He et al. (2018). Incorporating ELMo embeddings improves all scores. The gap in SRL F1 between models using LISA and D&M parses is smaller due to LISA's improved parsing accuracy (see $\S4.2$), but LISA with D&M parses still achieves the highest F1: nearly 1.0 absolute F1 higher than the previous state-of-the-art on WSJ, and more than 2.0 F1 higher on Brown. In both settings LISA leverages domain-agnostic syntactic information rather than over-fitting to the newswire training data, which leads to high performance even on out-of-domain text.

表1列出了使用预测谓词在CoNLL-2005开发和测试集上的精确率(precision)、召回率(recall)和F1值。对于使用GloVe嵌入的模型,我们无句法分析的SA模型通过联合预测谓词、词性标注(POS)和语义角色标注(SRL)已经达到了新的最先进水平。使用自生成句法分析的LISA模型表现与SA相当,但当采用D&M句法分析时,LISA以2.5 F1分的优势超越了之前的最优结果。在跨领域的Brown测试集上,使用自生成句法分析的LISA表现与其无句法版本相当,而采用D&M句法分析时LISA表现尤为出色,比He等人(2018)的成果高出超过3.5 F1分。引入ELMo嵌入后所有指标均得到提升。由于LISA改进了句法分析精度(见$\S4.2$),采用LISA和D&M句法分析的模型在SRL F1上的差距缩小,但采用D&M句法分析的LISA仍保持最高F1值:在WSJ上比之前最优结果高出近1.0绝对F1分,在Brown上则高出超过2.0 F1分。两种配置下LISA都利用了领域无关的句法信息,而非过度拟合新闻训练数据,因此即使在跨领域文本上也表现出色。
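The absolute gains quoted above can be checked directly against the test-set F1 scores in Table 1. The sketch below takes He et al. (2018) as the reference point in every setting; the text names that system explicitly only for the Brown GloVe comparison, so using it for the other three settings is an assumption made here. The dictionary layout and variable names are illustrative.

```python
# Test-set F1 scores copied from Table 1.
TABLE1_F1 = {
    ("GloVe", "WSJ"): {"He et al. (2018)": 82.5, "LISA +D&M": 84.99},
    ("GloVe", "Brown"): {"He et al. (2018)": 70.8, "LISA +D&M": 74.66},
    ("ELMo", "WSJ"): {"He et al. (2018)": 86.0, "LISA +D&M": 86.90},
    ("ELMo", "Brown"): {"He et al. (2018)": 76.1, "LISA +D&M": 78.25},
}

# Absolute F1 gain of LISA with D&M parses over the reference system.
gains = {
    setting: round(scores["LISA +D&M"] - scores["He et al. (2018)"], 2)
    for setting, scores in TABLE1_F1.items()
}

for (embeddings, test_set), gain in gains.items():
    print(f"{embeddings:5s} {test_set:5s} +{gain:.2f} F1")
```

The differences come out at roughly 2.5 and 3.9 F1 with GloVe and 0.9 and 2.2 F1 with ELMo, in line with the "2.5", "more than 3.5", "nearly 1.0" and "more than 2.0" figures in the prose.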

To compare to more prior work we also evaluate our models in the artificial setting where gold predicates are provided at test time. For fair comparison we use GloVe embeddings, provide predicate indicator embeddings on the input and re-encode the sequence relative to each gold predicate. Here LISA still excels: with D&M parses, LISA out-performs the previous state-of-the-art by more than 2 F1 on both WSJ and Brown.

为了与更多先前工作进行比较,我们还在测试时提供黄金谓词(gold predicates)的人工设定环境下评估模型。为确保公平比较,我们使用GloVe嵌入,在输入端提供谓词指示符嵌入,并针对每个黄金谓词重新编码序列。在此设定下,LISA仍表现优异:使用D&M解析时,LISA在WSJ和Brown数据集上的F1分数均超越先前最优结果2分以上。
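Re-encoding the sequence relative to each gold predicate means that a sentence with several predicates is fed to the encoder several times, each time with a different token flagged as the predicate. The sketch below shows only that input construction, under assumptions: the function name `per_predicate_inputs` and the 0/1 flag representation are illustrative, and in a real model the flag would index a learned indicator embedding that is combined with the token embedding.

```python
from typing import List, Tuple


def per_predicate_inputs(tokens: List[str],
                         predicate_positions: List[int]) -> List[List[Tuple[str, int]]]:
    """Return one (token, indicator) sequence per gold predicate.

    indicator is 1 for the predicate the sequence is encoded relative to
    and 0 for every other token.
    """
    n = len(tokens)
    views = []
    for p in predicate_positions:
        if not 0 <= p < n:
            raise ValueError(f"predicate position {p} out of range")
        views.append([(tok, 1 if i == p else 0) for i, tok in enumerate(tokens)])
    return views


tokens = ["She", "said", "he", "left"]
views = per_predicate_inputs(tokens, [1, 3])  # gold predicates: "said", "left"
```

This contrasts with the predicted-predicate setting described in the abstract, where the sequence is encoded only once for all predicates.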

Table 3 reports precision, recall and F1 on the CoNLL-2012 test set. We observe performance similar to that observed on CoNLL-2005: using GloVe embeddings, our SA baseline already out-performs He et al. (2018) by nearly 1.5 F1. With its own parses, LISA slightly under-performs our syntax-free model, but when provided with stronger D&M parses LISA out-performs the state-of-the-art by more than 2.5 F1. As on CoNLL-2005, ELMo representations improve all models and close the F1 gap between models supplied with LISA and D&M parses. On this dataset ELMo also substantially narrows the difference between models with and without syntactic information. This suggests that for this challenging dataset, ELMo already encodes much of the information available in the D&M parses. Yet, higher accuracy parses could still yield improvements since providing gold parses increases F1 by 4 points even with ELMo embeddings.

表 3 报告了 CoNLL-2012 测试集上的精确率、召回率和 F1 值。我们观察到的性能与 CoNLL-2005 上的结果相似:使用 GloVe 嵌入时,我们的 SA 基线模型已经比 He et al. (2018) 高出近 1.5 个 F1 值。使用自身解析结果时,LISA 略逊于我们的无句法模型,但当提供更强的 D&M 解析时,LISA 以超过 2.5 个 F1 值的优势超越了当前最优方法。与 CoNLL-2005 类似,ELMo 表征提升了所有模型的性能,并缩小了使用 LISA 和 D&M 解析的模型之间的 F1 差距。在该数据集上,ELMo 还大幅缩小了使用和不使用句法信息的模型之间的差异。这表明对于这个具有挑战性的数据集,ELMo 已经编码了 D&M 解析中的大部分信息。然而,更高精度的解析仍可能带来改进,因为即使使用 ELMo 嵌入,提供黄金解析仍能使 F1 值提高 4 个百分点。

Table 3: Precision, recall and F1 on the CoNLL-2012 development and test sets. Italics indicate a synthetic upper bound obtained by providing a gold parse at test time.

表 3: CoNLL-2012 开发集和测试集上的精确率、召回率和 F1 值。斜体表示在测试时提供黄金解析得到的合成上限。

| Dev | P | R | F1 |
|---|---|---|---|
| GloVe | | | |
| He et al. (2018) | 79.2 | 79.7 | 79.4 |
| SA | 82.32 | 79.76 | 81.02 |
| LISA | 81.77 | 79.65 | 80.70 |
| +D&M | 82.97 | 81.14 | 82.05 |
| *+Gold* | *87.57* | *85.32* | *86.43* |
| ELMo | | | |
| He et al. (2018) | 82.1 | 84.0 | 83.0 |
| SA | 84.35 | 82.14 | 83.23 |
| LISA | 84.19 | 82.56 | 83.37 |
| +D&M | 84.09 | 82.65 | 83.36 |
| *+Gold* | *88.22* | *86.53* | *87.36* |

| Test | P |