DYNAMICS-AWARE UNSUPERVISED DISCOVERY OF SKILLS

动态感知的无监督技能发现

Archit Sharma, Shixiang Gu, Sergey Levine, Vikash Kumar, Karol Hausman Google Brain {architsh,shanegu,slevine,vikashplus,karol haus man}@google.com

Archit Sharma、Shixiang Gu、Sergey Levine、Vikash Kumar、Karol Hausman Google Brain {architsh,shanegu,slevine,vikashplus,karolhausman}@google.com

ABSTRACT

摘要

Conventionally, model-based reinforcement learning (MBRL) aims to learn a global model for the dynamics of the environment. A good model can potentially enable planning algorithms to generate a large variety of behaviors and solve diverse tasks. However, learning an accurate model for complex dynamical systems is difficult, and even then, the model might not generalize well outside the distribution of states on which it was trained. In this work, we combine model-based learning with model-free learning of primitives that make modelbased planning easy. To that end, we aim to answer the question: how can we discover skills whose outcomes are easy to predict? We propose an unsupervised learning algorithm, Dynamics-Aware Discovery of Skills (DADS), which simultaneously discovers predictable behaviors and learns their dynamics. Our method can leverage continuous skill spaces, theoretically, allowing us to learn infinitely many behaviors even for high-dimensional state-spaces. We demonstrate that zero-shot planning in the learned latent space significantly outperforms standard MBRL and model-free goal-conditioned RL, can handle sparsereward tasks, and substantially improves over prior hierarchical RL methods for unsupervised skill discovery. We have open-sourced our implementation at: https://github.com/google-research/dads

传统上,基于模型的强化学习 (MBRL) 旨在学习环境的全局动力学模型。一个优秀的模型有望让规划算法生成多样行为并解决不同任务。然而,为复杂动态系统学习精确模型十分困难,且即便获得准确模型,其在训练状态分布之外也可能泛化不佳。本研究将基于模型的学习与无需模型的技能基元学习相结合,使基于模型的规划更易实现。为此,我们试图解答:如何发现结果易于预测的技能?我们提出无监督学习算法——动态感知技能发现 (DADS),它能同步发现可预测行为并学习其动力学规律。该方法理论上可利用连续技能空间,即使在高维状态空间下也能学习无限多种行为。实验表明,在所学潜空间进行零样本规划显著优于标准MBRL和基于目标的免模型RL,能处理稀疏奖励任务,并大幅改进了现有无监督技能发现的层次化RL方法。项目代码已开源:https://github.com/google-research/dads

Figure 1: A humanoid agent discovers diverse locomotion primitives without any reward using DADS. We show zero-shot generalization to downstream tasks by composing the learned primitives using model predictive control, enabling the agent to follow an online sequence of goals (green markers) without any additional training.

图 1: 人形智能体通过DADS无奖励机制自主发现多样化运动基元。我们展示了通过模型预测控制组合所学基元的零样本下游任务泛化能力,使智能体无需额外训练即可跟随在线目标序列 (绿色标记) 。

1 INTRODUCTION

1 引言

Deep reinforcement learning (RL) enables autonomous learning of diverse and complex tasks with rich sensory inputs, temporally extended goals, and challenging dynamics, such as discrete gameplaying domains (Mnih et al., 2013; Silver et al., 2016), and continuous control domains including locomotion (Schulman et al., 2015; Heess et al., 2017) and manipulation (Rajeswaran et al., 2017; Kalashnikov et al., 2018; Gu et al., 2017). Most of the deep RL approaches learn a Q-function or a policy that are directly optimized for the training task, which limits their generalization to new scenarios. In contrast, MBRL methods (Li & Todorov, 2004; Deisenroth & Rasmussen, 2011; Watter et al., 2015) can acquire dynamics models that may be utilized to perform unseen tasks at test time. While this capability has been demonstrated in some of the recent works (Levine et al., 2016; Nagabandi et al., 2018; Chua et al., 2018b; Kurutach et al., 2018; Ha & Schmid huber,

深度强化学习 (RL) 能够通过丰富的感官输入、时间延伸目标和具有挑战性的动态环境,自主完成多样且复杂的任务,例如离散游戏领域 (Mnih et al., 2013; Silver et al., 2016) 以及包括运动控制 (Schulman et al., 2015; Heess et al., 2017) 和操作任务 (Rajeswaran et al., 2017; Kalashnikov et al., 2018; Gu et al., 2017) 在内的连续控制领域。大多数深度强化学习方法通过学习直接针对训练任务优化的 Q 函数或策略,这限制了它们对新场景的泛化能力。相比之下,基于模型的强化学习 (MBRL) 方法 (Li & Todorov, 2004; Deisenroth & Rasmussen, 2011; Watter et al., 2015) 能够获取动态模型,这些模型可用于在测试时执行未见过的任务。尽管这种能力已在一些近期工作中得到验证 (Levine et al., 2016; Nagabandi et al., 2018; Chua et al., 2018b; Kurutach et al., 2018; Ha & Schmidhuber,

2018), learning an accurate global model that works for all state-action pairs can be exceedingly challenging, especially for high-dimensional system with complex and discontinuous dynamics. The problem is further exacerbated as the learned global model has limited generalization outside of the state distribution it was trained on and exploring the whole state space is generally infeasible. Can we retain the flexibility of model-based RL, while using model-free RL to acquire proficient low-level behaviors under complex dynamics?

2018年),学习一个适用于所有状态-动作对的精确全局模型可能极具挑战性,尤其是对于具有复杂且不连续动态的高维系统。当所学全局模型在其训练状态分布之外的泛化能力有限,且探索整个状态空间通常不可行时,问题会进一步加剧。我们能否在保留基于模型的强化学习灵活性的同时,利用无模型强化学习在复杂动态下获取熟练的低层行为?

While learning a global dynamics model that captures all the different behaviors for the entire statespace can be extremely challenging, learning a model for a specific behavior that acts only in a small part of the state-space can be much easier. For example, consider learning a model for dynamics of all gaits of a quadruped versus a model which only works for a specific gait. If we can learn many such behaviors and their corresponding dynamics, we can leverage model-predictive control to plan in the behavior space, as opposed to planning in the action space. The question then becomes: how do we acquire such behaviors, considering that behaviors could be random and unpredictable? To this end, we propose Dynamics-Aware Discovery of Skills (DADS), an unsupervised RL framework for learning low-level skills using model-free RL with the explicit aim of making model-based control easy. Skills obtained using DADS are directly optimized for predictability, providing a better representation on top of which predictive models can be learned. Crucially, the skills do not require any supervision to learn, and are acquired entirely through autonomous exploration. This means that the repertoire of skills and their predictive model are learned before the agent has been tasked with any goal or reward function. When a task is provided at test-time, the agent utilizes the previously learned skills and model to immediately perform the task without any further training.

虽然学习一个能捕捉整个状态空间所有不同行为的全局动态模型极具挑战性,但针对只在状态空间小范围内运作的特定行为学习模型则简单得多。例如,比较学习四足动物所有步态动态的模型与仅适用于特定步态的模型。如果我们能学习多种此类行为及其对应动态,就能利用模型预测控制在行为空间进行规划,而非动作空间规划。随之而来的问题是:考虑到行为可能随机且不可预测,我们如何获取这些行为?为此,我们提出动态感知技能发现(DADS)——一个通过无模型强化学习来获取底层技能的无监督强化学习框架,其明确目标是简化基于模型的控制。通过DADS获得的技能直接针对可预测性进行优化,为学习预测模型提供了更好的表征基础。关键在于,这些技能的学习无需任何监督,完全通过自主探索获得。这意味着技能库及其预测模型是在智能体被赋予任何目标或奖励函数之前就已习得的。当测试阶段提供任务时,智能体可立即调用预先学习的技能和模型来执行任务,无需额外训练。

The key contribution of our work is an unsupervised reinforcement learning algorithm, DADS, grounded in mutual-information-based exploration. We demonstrate that our objective can embed learned primitives in continuous spaces, which allows us to learn a large, diverse set of skills. Crucially, our algorithm also learns to model the dynamics of the skills, which enables the use of model-based planning algorithms for downstream tasks. We adapt the conventional model predictive control algorithms to plan in the space of primitives, and demonstrate that we can compose the learned primitives to solve downstream tasks without any additional training.

我们工作的核心贡献是一种基于互信息探索的无监督强化学习算法DADS。研究表明,该目标函数能够在连续空间中嵌入习得的基元技能,从而学习到大量多样化的技能组合。关键创新在于,该算法还能同步学习技能动态模型,这使得基于模型的规划算法能够直接应用于下游任务。我们改进了传统模型预测控制算法,使其能够在基元技能空间中进行规划,并验证了无需额外训练即可组合已学习技能来解决下游任务的能力。

2 PRELIMINARIES

2 预备知识

Mutual information can been used as an objective to encourage exploration in reinforcement learning (Houthooft et al., 2016; Mohamed & Rezende, 2015). According to its definition, $\mathcal{I}(X;Y)\stackrel{}{=}$ ${\mathcal{H}}(X)-{\mathcal{H}}(X\mid Y)$ , maximizing mutual information $\mathcal{T}$ with respect to $Y$ amounts to maximizing the entropy $\mathcal{H}$ of $X$ while minimizing the conditional entropy ${\mathcal{H}}(X\mid Y)$ . In the context of RL, $X$ is usually a function of the state and $Y$ a function of actions. Maximizing this objective encourages the state entropy to be high, making the underlying policy to be exploratory. Recently, multiple works (Eysenbach et al., 2018; Gregor et al., 2016; Achiam et al., 2018) apply this idea to learn diverse skills which maximally cover the state space.

互信息可作为强化学习中鼓励探索的目标函数 (Houthooft et al., 2016; Mohamed & Rezende, 2015)。根据其定义 $\mathcal{I}(X;Y)\stackrel{}{=}$ ${\mathcal{H}}(X)-{\mathcal{H}}(X\mid Y)$,最大化关于 $Y$ 的互信息 $\mathcal{T}$ 等价于最大化 $X$ 的熵 $\mathcal{H}$ 同时最小化条件熵 ${\mathcal{H}}(X\mid Y)$。在强化学习背景下,$X$ 通常是状态的函数,$Y$ 是动作的函数。最大化该目标函数会促使状态熵保持较高水平,从而使底层策略具有探索性。近期多项研究 (Eysenbach et al., 2018; Gregor et al., 2016; Achiam et al., 2018) 应用这一思想来学习能最大程度覆盖状态空间的多样化技能。

To leverage planning-based control, MBRL estimates the true dynamics of the environment by learning a model $\hat{p}(s^{\prime}\mid\bar{s},a)$ . This allows it to predict a trajectory of states $\hat{\tau}_ {H}=\left(s_{t},\hat{s}_ {t+1},,\dots\hat{s}_{t+H}\right)$ resulting from a sequence of actions without any additional interaction with the environment. While model-based RL methods have been demonstrated to be sample efficient compared to their modelfree counterparts, learning an effective model for the whole state-space is challenging. An openproblem in model-based RL is to incorporate temporal abstraction in model-based control, to enable high-level planning and move-away from planning at the granular level of actions.

为利用基于规划的控制,MBRL通过学习模型$\hat{p}(s^{\prime}\mid\bar{s},a)$来估计环境的真实动态。这使得它能够预测由一系列动作产生的状态轨迹$\hat{\tau}_ {H}=\left(s_{t},\hat{s}_ {t+1},,\dots\hat{s}_{t+H}\right)$,而无需与环境进行额外交互。尽管基于模型的强化学习方法已被证明比无模型方法更具样本效率,但为整个状态空间学习有效模型具有挑战性。基于模型强化学习中的一个开放问题是在基于模型的控制中融入时间抽象,以实现高层级规划并摆脱动作粒度的规划。

These seemingly unrelated ideas can be combined into a single optimization scheme, where we first discover skills (and their models) without any extrinsic reward and then compose these skills to optimize for the task defined at test time using model-based planning. At train time, we assume a Markov Decision Process (MDP) $\mathcal{M}_ {1}\equiv(\mathcal{S},\mathcal{A},p)$ . The state space $s$ and action space $\mathcal{A}$ are assumed to be continuous, and the $\mathcal{A}$ bounded. We assume the transition dynamics $p$ to be stochastic, such that $p:S\times{\mathcal{A}}\times{\mathcal{S}}\mapsto[0,\infty)$ . We learn a skill-conditioned policy $\pi(a\mid s,z)$ , where the skills $z$ belongs to the space $\mathcal{Z}$ , detailed in Section 3. We assume that the skills are sampled from a prior $p(z)$ over $\mathcal{Z}$ . We simultaneously learn a skill-conditioned transition function $q(s^{\prime}\mid s,z)$ , coined as skill-dynamics, which predicts the transition to the next state $s^{\prime}$ from the current state $s$ for the skill $z$ under the given dynamics $p$ . At test time, we assume an MDP $\mathcal{M}_ {2}\equiv(\mathcal{S},\mathcal{A},p,r)$ , where $S,{\cal A},p$ match those defined in $\mathcal{M}_ {1}$ , and the reward function $r:S\times A\mapsto(-\infty,\infty)$ . We plan in $\mathcal{Z}$ using $q(s^{\prime}\mid s,z)$ to compose the learned skills $z$ for optimizing $r$ in $\mathcal{M}_{2}$ , which we detail in Section 4.

这些看似无关的概念可以整合为单一的优化方案:首先在无外部奖励的情况下发现技能(及其模型),然后利用基于模型的规划在测试时将这些技能组合起来优化任务。训练时,我们假设一个马尔可夫决策过程 (MDP) $\mathcal{M}_ {1}\equiv(\mathcal{S},\mathcal{A},p)$。状态空间 $s$ 和动作空间 $\mathcal{A}$ 为连续空间,且 $\mathcal{A}$ 有界。假设转移动态 $p$ 是随机的,即 $p:S\times{\mathcal{A}}\times{\mathcal{S}}\mapsto[0,\infty)$。我们学习一个技能条件策略 $\pi(a\mid s,z)$,其中技能 $z$ 属于空间 $\mathcal{Z}$(详见第3节)。假设技能从 $\mathcal{Z}$ 上的先验分布 $p(z)$ 中采样得到。同时学习一个技能条件转移函数 $q(s^{\prime}\mid s,z)$(称为技能动态),用于在给定动态 $p$ 下预测技能 $z$ 从当前状态 $s$ 到下一状态 $s^{\prime}$ 的转移。测试时,假设存在 MDP $\mathcal{M}_ {2}\equiv(\mathcal{S},\mathcal{A},p,r)$,其中 $S,{\cal A},p$ 与 $\mathcal{M}_ {1}$ 定义一致,奖励函数为 $r:S\times A\mapsto(-\infty,\infty)$。我们在 $\mathcal{Z}$ 空间利用 $q(s^{\prime}\mid s,z)$ 进行规划,组合已学习技能 $z$ 以优化 $\mathcal{M}_{2}$ 中的 $r$(详见第4节)。

3 DYNAMICS-AWARE DISCOVERY OF SKILLS (DADS)

3 动态感知技能发现 (DADS)

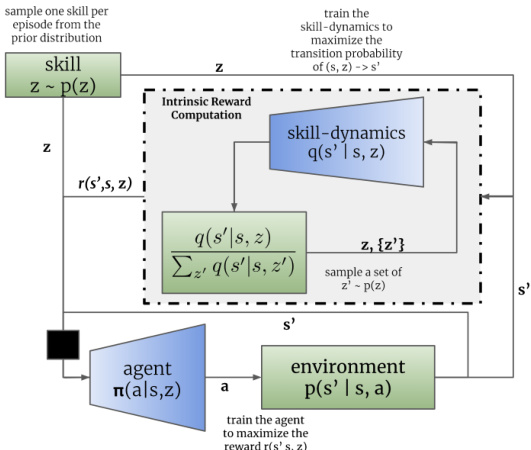

Figure 2: The agent $\pi$ interacts with the environment to produce a transition $s\rightarrow s^{\prime}$ . Intrinsic reward is computed by computing the transition probability under $q$ for the current skill $z$ , compared to random samples from the prior $p(\bar{z})$ . The agent maximizes the intrinsic reward computed for a batch of episodes, while $q$ maximizes the log-probability of the actual transitions of $(s,z)\to s^{\prime}$ .

图 2: 智能体 $\pi$ 与环境交互产生状态转移 $s\rightarrow s^{\prime}$。内在奖励通过计算当前技能 $z$ 在 $q$ 下的转移概率,并与先验分布 $p(\bar{z})$ 的随机样本进行比较得出。智能体最大化由一批回合计算得到的内在奖励,同时 $q$ 最大化实际状态转移 $(s,z)\to s^{\prime}$ 的对数概率。

We use the information theoretic paradigm of mutual information to obtain our unsupervised skill discovery algorithm. In particular, we propose to maximize the mutual information between the next state $s^{\prime}$ and current skill $z$ conditioned on the current state $s$ .

我们采用互信息的信息论范式来开发无监督技能发现算法。具体而言,我们提出最大化下一状态$s^{\prime}$与当前技能$z$在给定当前状态$s$条件下的互信息。

$$

\begin{array}{r}{\mathbb{Z}(s^{\prime};z\mid s)=\mathcal{H}(z\mid s)-\mathcal{H}(z\mid s^{\prime},s)}\ {=\mathcal{H}(s^{\prime}\mid s)-\mathcal{H}(s^{\prime}\mid s,z)}\end{array}

$$

$$

\begin{array}{r}{\mathbb{Z}(s^{\prime};z\mid s)=\mathcal{H}(z\mid s)-\mathcal{H}(z\mid s^{\prime},s)}\ {=\mathcal{H}(s^{\prime}\mid s)-\mathcal{H}(s^{\prime}\mid s,z)}\end{array}

$$

Mutual information in Equation 1 quantifies how much can be known about $s^{\prime}$ given $z$ and $s$ , or symmetrically, $z$ given the transition from $s\rightarrow s^{\prime}$ . From Equation 2, maximizing this objective corresponds to maximizing the diversity of transitions produced in the environment, that is denoted by the entropy $\mathcal{H}(s^{\prime}\mid s)$ , while making $z$ informative about the next state $s^{\prime}$ by minimizing the entropy $\mathcal{H}(s^{\prime}\mid s,z)$ . Intuitively, skills $z$ can be interpreted as abstracted action sequences which are identifiable by the transitions generated in the environment (and not just by the current state). Thus, optimizing this mutual information can be understood as encoding a diverse set of skills in the latent space $\mathcal{Z}$ , while making the transitions for a given $z\in{\mathcal{Z}}$ predictable. We use the entropydecomposition in Equation 2 to connect this objective with model-based control.

式1中的互信息量化了在给定$z$和$s$时能获知多少关于$s^{\prime}$的信息(对称地,也可量化在状态转移$s\rightarrow s^{\prime}$时能获知多少关于$z$的信息)。根据式2,最大化该目标函数等价于最大化环境中状态转移的多样性(即熵$\mathcal{H}(s^{\prime}\mid s)$),同时通过最小化熵$\mathcal{H}(s^{\prime}\mid s,z)$使$z$能有效预测下一状态$s^{\prime}$。直观上,技能$z$可解释为被环境生成的状态转移(而非仅当前状态)所标识的抽象动作序列。因此,优化该互信息可理解为在潜在空间$\mathcal{Z}$中编码多样化的技能集,同时确保给定$z\in{\mathcal{Z}}$时的状态转移具有可预测性。我们利用式2的熵分解将此目标函数与基于模型的控制建立关联。

We want to optimize the our skill-conditioned controller $\pi(a\mid s,z)$ such that the latent space $z\sim p(z)$ is maximally informative about the transitions $s\to s^{\prime}$ . Using the definition of conditional mutual information, we can rewrite Equation 2 as:

我们想要优化技能条件控制器 $\pi(a\mid s,z)$,使得潜在空间 $z\sim p(z)$ 能最大化地反映状态转移 $s\to s^{\prime}$ 的信息。利用条件互信息的定义,我们可以将公式2重写为:

$$

\mathcal{Z}(s^{\prime};z\mid s)=\int p(z,s,s^{\prime})\log\frac{p(s^{\prime}\mid s,z)}{p(s^{\prime}\mid s)}d s^{\prime}d s d z

$$

$$

\mathcal{Z}(s^{\prime};z\mid s)=\int p(z,s,s^{\prime})\log\frac{p(s^{\prime}\mid s,z)}{p(s^{\prime}\mid s)}d s^{\prime}d s d z

$$

We assume the following generative model: $p(z,s,s^{\prime})=p(z)p(s\mid z)p(s^{\prime}\mid s,z)$ , where $p(z)$ is user specified prior over $\mathcal{Z}$ , $p(s|z)$ denotes the stationary state-distribution induced by $\pi(a\mid s,z)$ for a skill $z$ and $p(s^{\prime}\mid s,z)$ denotes the transition distribution under skill $z$ . Note, $p(s^{\prime}\mid s,z)=$ $\begin{array}{r}{\int p(s^{\prime}\mid s,a)\pi(a\mid\dot{s},z)d a}\end{array}$ is intractable to compute because the underlying dynamics are unknown. However, we can variation ally lower bound the objective as follows:

我们假设以下生成模型:$p(z,s,s^{\prime})=p(z)p(s\mid z)p(s^{\prime}\mid s,z)$,其中$p(z)$是用户指定的$\mathcal{Z}$上的先验分布,$p(s|z)$表示由技能$z$的策略$\pi(a\mid s,z)$诱导的稳态分布,$p(s^{\prime}\mid s,z)$表示技能$z$下的转移分布。注意,$p(s^{\prime}\mid s,z)=$$\begin{array}{r}{\int p(s^{\prime}\mid s,a)\pi(a\mid\dot{s},z)d a}\end{array}$由于底层动态未知而难以计算。然而,我们可以通过以下方式变分下界目标:

$$

\begin{array}{r l}&{\mathbb{Z}(s^{\prime};z\mid s)=\mathbb{E}_ {z,s,s^{\prime}\sim p}\Big[\log\frac{p(s^{\prime}\mid s,z)}{p(s^{\prime}\mid s)}\Big]}\ &{\qquad=\mathbb{E}_ {z,s,s^{\prime}\sim p}\Big[\log\frac{q_{\phi}(s^{\prime}\mid s,z)}{p(s^{\prime}\mid s)}\Big]+\mathbb{E}_ {s,z\sim p}\Big[\mathcal{D}_ {K L}(p(s^{\prime}\mid s,z)\mid\mid q_{\phi}(s^{\prime}\mid s,z))\Big]}\ &{\qquad\geq\mathbb{E}_ {z,s,s^{\prime}\sim p}\Big[\log\frac{q_{\phi}(s^{\prime}\mid s,z)}{p(s^{\prime}\mid s)}\Big]}\end{array}

$$

$$

\begin{array}{r l}&{\mathbb{Z}(s^{\prime};z\mid s)=\mathbb{E}_ {z,s,s^{\prime}\sim p}\Big[\log\frac{p(s^{\prime}\mid s,z)}{p(s^{\prime}\mid s)}\Big]}\ &{\qquad=\mathbb{E}_ {z,s,s^{\prime}\sim p}\Big[\log\frac{q_{\phi}(s^{\prime}\mid s,z)}{p(s^{\prime}\mid s)}\Big]+\mathbb{E}_ {s,z\sim p}\Big[\mathcal{D}_ {K L}(p(s^{\prime}\mid s,z)\mid\mid q_{\phi}(s^{\prime}\mid s,z))\Big]}\ &{\qquad\geq\mathbb{E}_ {z,s,s^{\prime}\sim p}\Big[\log\frac{q_{\phi}(s^{\prime}\mid s,z)}{p(s^{\prime}\mid s)}\Big]}\end{array}

$$

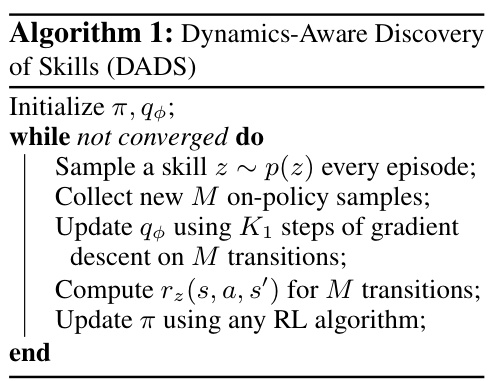

where we have used the non-negativity of KL-divergence, that is $\mathcal{D}_ {K L}\geq0$ . Note, skill-dynamics $q_{\phi}$ represents the variation al approximation for the transition function $p(s^{\prime}\mid s,z)$ , which enables model-based control as described in Section 4. Equation 4 suggests an alternating optimization between $q_{\phi}$ and $\pi$ , summarized in Algorithm 1. In every iteration:

其中我们利用了KL散度的非负性,即 $\mathcal{D}_ {K L}\geq0$ 。注意,技能动态 $q_{\phi}$ 表示对转移函数 $p(s^{\prime}\mid s,z)$ 的变分近似,如第4节所述,这支持基于模型的控制。公式4提出了 $q_{\phi}$ 和 $\pi$ 之间的交替优化,如算法1所总结。在每次迭代中:

(Tighten variation al lower bound) We minimize $D_{K L}(p(s^{\prime}\mid s,z)\mid\mid q_{\phi}(s^{\prime}\mid s,z))$ with respect to the parameters $\phi$ on $z,s\sim p$ to tighten the lower bound. For general function ap proxima tors like neural networks, we can write the gradient for $\phi$ as follows:

(收紧变分下界) 我们最小化 $D_{K L}(p(s^{\prime}\mid s,z)\mid\mid q_{\phi}(s^{\prime}\mid s,z))$ 关于参数 $\phi$ 在 $z,s\sim p$ 上的取值,以收紧下界。对于神经网络等通用函数逼近器,可将 $\phi$ 的梯度表示为:

$$

\begin{array}{r}{\nabla_{\phi}\mathbb{E}_ {s,z}[\mathcal{D}_ {K L}(p(s^{\prime}\mid s,z)\mid\mid q_{\phi}(s^{\prime}\mid s,z))]=\nabla_{\phi}\mathbb{E}_ {z,s,s^{\prime}}\Big[\log\frac{p(s^{\prime}\mid s,z)}{q_{\phi}(s^{\prime}\mid s,z)}\Big]}\ {=-\mathbb{E}_ {z,s,s^{\prime}}\Big[\nabla_{\phi}\log q_{\phi}(s^{\prime}\mid s,z)\Big]}\end{array}

$$

$$

\begin{array}{r}{\nabla_{\phi}\mathbb{E}_ {s,z}[\mathcal{D}_ {K L}(p(s^{\prime}\mid s,z)\mid\mid q_{\phi}(s^{\prime}\mid s,z))]=\nabla_{\phi}\mathbb{E}_ {z,s,s^{\prime}}\Big[\log\frac{p(s^{\prime}\mid s,z)}{q_{\phi}(s^{\prime}\mid s,z)}\Big]}\ {=-\mathbb{E}_ {z,s,s^{\prime}}\Big[\nabla_{\phi}\log q_{\phi}(s^{\prime}\mid s,z)\Big]}\end{array}

$$

which corresponds to maximizing the likelihood of the samples from $p$ under $q_{\phi}$ .

这相当于在 $q_{\phi}$ 下最大化来自 $p$ 的样本的似然。

(Maximize approximate lower bound) After fitting $q_{\phi}$ , we can optimize $\pi$ to maximize $\mathbb{E}_ {z,s,s^{\prime}}[\log q_{\phi}(s^{\prime}\mid s,z)-\log p(s^{\prime}\mid s)]$ . Note, this is a reinforcement-learning style optimization with a reward function $\log q_{\phi}(s^{\prime}\mid s,z)-\log p(s^{\prime}\mid s)$ . However, $\log p(s^{\prime}\mid s)$ is intractable to compute, so we approximate the reward function for $\pi$ :

(最大化近似下界)拟合 $q_{\phi}$ 后,我们可以优化 $\pi$ 以最大化 $\mathbb{E}_ {z,s,s^{\prime}}[\log q_{\phi}(s^{\prime}\mid s,z)-\log p(s^{\prime}\mid s)]$。注意,这是一个强化学习风格的优化,其奖励函数为 $\log q_{\phi}(s^{\prime}\mid s,z)-\log p(s^{\prime}\mid s)$。然而,$\log p(s^{\prime}\mid s)$ 难以计算,因此我们为 $\pi$ 近似奖励函数:

$$

r_{z}(s,a,s^{\prime})=\log\frac{q_{\phi}(s^{\prime}\mid s,z)}{\sum_{i=1}^{L}q_{\phi}(s^{\prime}\mid s,z_{i})}+\log L,\quad z_{i}\sim p(z).

$$

$$

r_{z}(s,a,s^{\prime})=\log\frac{q_{\phi}(s^{\prime}\mid s,z)}{\sum_{i=1}^{L}q_{\phi}(s^{\prime}\mid s,z_{i})}+\log L,\quad z_{i}\sim p(z).

$$

The approximation is motivated as follows: $\begin{array}{r}{p(s^{\prime}\mid s)=\int p(s^{\prime}\mid s,z)p(z\mid s)d z\approx\int q_{\phi}(s^{\prime}\mid}\end{array}$ $\begin{array}{r}{s,z)p(z)d z\approx\frac{1}{L}\sum_{i=1}^{L}q_{\phi}(s^{\prime}\mid s,z_{i})}\end{array}$ for $z_{i}\sim p(z)$ , where $L$ denotes the number o mples from the prior. We are using the marginal of variation al approximation $p(z)$ imate the marginal distribution of transitions. We discuss this approximation in Appendix C. Note, the final reward function $r_{z}$ encourages the policy $\pi$ to produce transitions that are (a) predictable under $q_{\phi}$ (predictability) and (b) different from the transitions produced under $z_{i}\sim p(z)$ (diversity).

该近似方法的动机如下:$\begin{array}{r}{p(s^{\prime}\mid s)=\int p(s^{\prime}\mid s,z)p(z\mid s)d z\approx\int q_{\phi}(s^{\prime}\mid}\end{array}$ $\begin{array}{r}{s,z)p(z)d z\approx\frac{1}{L}\sum_{i=1}^{L}q_{\phi}(s^{\prime}\mid s,z_{i})}\end{array}$ 其中 $z_{i}\sim p(z)$,$L$ 表示从先验分布中采样的数量。我们使用变分近似的边缘分布 $p(z)$ 来近似转移的边缘分布。该近似方法的讨论详见附录C。需注意,最终奖励函数 $r_{z}$ 会激励策略 $\pi$ 产生符合以下特性的状态转移:(a) 在 $q_{\phi}$ 下可预测(可预测性),(b) 与 $z_{i}\sim p(z)$ 下产生的转移不同(多样性)。

To generate samples from $p(z,s,s^{\prime})$ , we use the rollouts from the current policy $\pi$ for multiple samples $z\sim p(z)$ in an episodic setting for a fixed horizon $T$ . We also introduce entropy regularization for $\pi(a\mid s,z)$ , which encourages the policy to discover action-sequences with similar state-transitions and to be clustered under the same skill $z$ , making the policy robust besides encouraging exploration (Haarnoja et al., 2018a). The use of entropy regular iz ation can be justified from an information bottleneck perspective as discussed for Information Maximization algorithm in (Mohamed & Rezende, 2015). This is even more extensively discussed from the graphical model perspective in Appendix B, which connects unsupervised skill discovery and information bottleneck literature, while also revealing the temporal nature of skills $z$ . Details corresponding to implementation and hyper parameters are discussed in Appendix A.

为了从 $p(z,s,s^{\prime})$ 生成样本,我们在固定时间范围 $T$ 的情景设置中,使用当前策略 $\pi$ 的多次采样 $z\sim p(z)$ 进行滚动计算。我们还对 $\pi(a\mid s,z)$ 引入了熵正则化 (entropy regularization) ,这鼓励策略发现具有相似状态转移的动作序列,并将其聚类到同一技能 $z$ 下,从而在促进探索的同时增强策略的鲁棒性 (Haarnoja et al., 2018a) 。从信息瓶颈 (information bottleneck) 的角度来看,熵正则化的使用是合理的,如 (Mohamed & Rezende, 2015) 中关于信息最大化算法 (Information Maximization algorithm) 的讨论所示。附录 B 从图模型的角度对此进行了更广泛的讨论,将无监督技能发现与信息瓶颈文献联系起来,同时揭示了技能 $z$ 的时间特性。实现细节和超参数相关内容详见附录 A。

4 PLANNING USING SKILL DYNAMICS

4 基于技能动态的规划

Given the learned skills $\pi(a\mid s,z)$ and their respective skill-transition dynamics $q_{\phi}(s^{\prime}\mid s,z)$ , we can perform model-based planning in the latent space $\mathcal{Z}$ to optimize for a reward $r$ that is given to the agent at test time. Note, that this essentially allows us to perform zero-shot planning given the unsupervised pre-training procedure described in Section 3.

给定学习到的技能 $\pi(a\mid s,z)$ 及其各自的技能转移动态 $q_{\phi}(s^{\prime}\mid s,z)$,我们可以在潜在空间 $\mathcal{Z}$ 中进行基于模型的规划,以优化测试时赋予智能体的奖励 $r$。值得注意的是,这本质上使我们能够基于第3节描述的无监督预训练过程进行零样本 (Zero-shot) 规划。

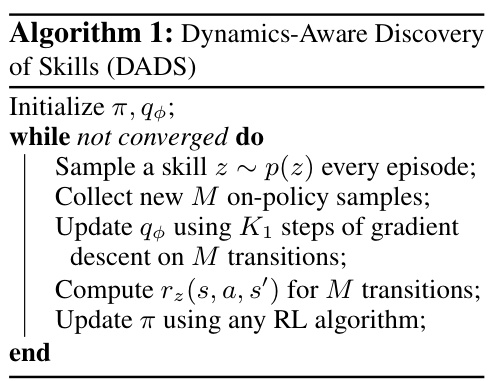

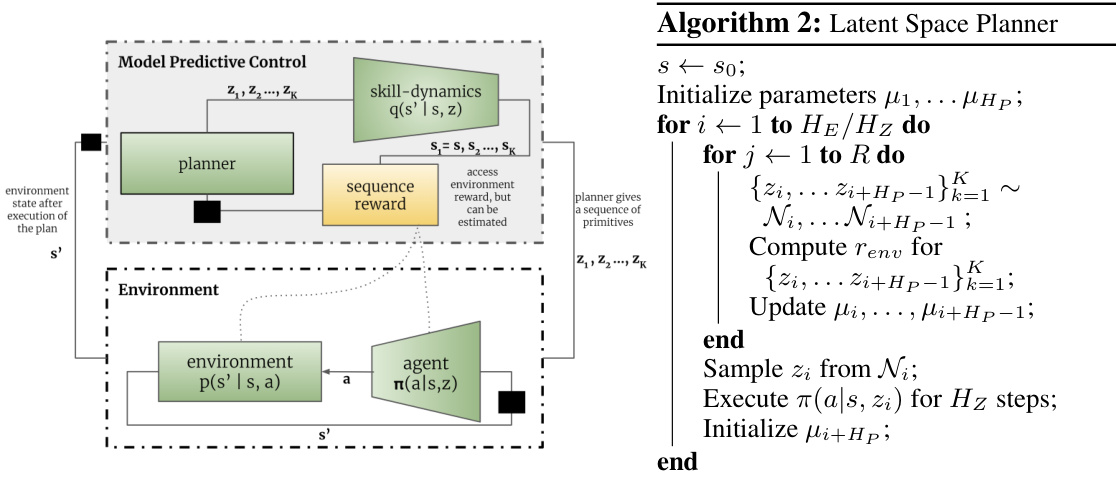

In order to perform planning, we employ the model-predictive-control (MPC) paradigm Garcia et al. (1989), which in a standard setting generates a set of action plans $P_{k}=(a_{k,1},...a_{k,H})\sim P$ for a planning horizon $H$ . The MPC plans can be generated due to the fact that the planner is able to simulate the trajectory $\hat{\tau}_ {k}=\left(s_{k,1},a_{k,1}...s_{k,H+1}\right)$ assuming access to the transition dynamics ${\hat{p}}(s^{\prime}\mid s,a)$ . In addition, each plan computes the reward $r(\hat{\tau}_ {k})$ for its trajectory according to the reward function $r$ that is provided for the test-time task. Following the MPC principle, the planner selects the best plan according to the reward function $r$ and executes its first action $a_{1}$ . The planning algorithm repeats this procedure for the next state iterative ly until it achieves its goal.

为了进行规划,我们采用了模型预测控制 (MPC) 范式 [Garcia et al. (1989)],在标准设置下,它会为规划周期 $H$ 生成一组动作计划 $P_{k}=(a_{k,1},...a_{k,H})\sim P$。MPC 计划之所以能够生成,是因为规划器能够模拟轨迹 $\hat{\tau}_ {k}=\left(s_{k,1},a_{k,1}...s_{k,H+1}\right)$,前提是可以访问转移动态 ${\hat{p}}(s^{\prime}\mid s,a)$。此外,每个计划会根据为测试时任务提供的奖励函数 $r$ 计算其轨迹的奖励 $r(\hat{\tau}_ {k})$。根据 MPC 原则,规划器会按照奖励函数 $r$ 选择最佳计划并执行其第一个动作 $a_{1}$。规划算法会在下一个状态重复此过程,直到达成目标。

We use a similar strategy to design an MPC planner to exploit previously-learned skill-transition dynamics $q_{\phi}(s^{\prime}\mid s,z)$ . Note that unlike conventional model-based RL, we generate a plan $P_{k}={}$ $\big(z_{k,1},\dots:z_{k,H_{P}}\big)$ in the latent space $\mathcal{Z}$ as opposed to the action space $\mathcal{A}$ that would be used by a standard planner. Since the primitives are temporally meaningful, it is beneficial to hold a primitive for a horizon $H_{Z}>1$ , unlike actions which are usually held for a single step. Thus, effectively, the planning horizon for our latent space planner is $H=H_{P}\times H_{Z}$ , enabling longer-horizon planning using fewer primitives. Similar to the standard MPC setting, the latent space planner simulates the trajectory $\hat{\tau}_ {k}=\left(s_{k,1},z_{k,1},a_{k,1},s_{k,2},z_{k,2},a_{k,2},\dots s_{k,H+1}\right)$ and computes the reward $r(\hat{\tau}_ {k})$ . After a small number of trajectory samples, the planner selects the first latent action $z_{1}$ of the best plan, executes it for $H_{Z}$ steps in the environment, and the repeats the process until goal completion.

我们采用类似策略设计了一个MPC规划器,以利用先前学习的技能转移动态$q_{\phi}(s^{\prime}\mid s,z)$。需要注意的是,与传统基于模型的强化学习不同,我们在潜在空间$\mathcal{Z}$中生成计划$P_{k}={}$$\big(z_{k,1},\dots:z_{k,H_{P}}\big)$,而非标准规划器使用的动作空间$\mathcal{A}$。由于基元具有时序意义,保持一个基元持续$H_{Z}>1$步长比单步执行动作更具优势。因此,潜在空间规划器的有效规划范围为$H=H_{P}\times H_{Z}$,使得用更少基元实现更长跨度规划成为可能。与标准MPC设置类似,该规划器模拟轨迹$\hat{\tau}_ {k}=\left(s_{k,1},z_{k,1},a_{k,1},s_{k,2},z_{k,2},a_{k,2},\dots s_{k,H+1}\right)$并计算奖励$r(\hat{\tau}_ {k})$。经过少量轨迹采样后,规划器选择最优计划的第一个潜在动作$z_{1}$,在环境中执行$H_{Z}$步,然后重复该过程直至达成目标。

Figure 3: At test time, the planner executes simulates the transitions in environment using skill-dynamics $q$ , and updates the distribution of plans according to the computed reward on the simulated trajectories. After a few updates to the plan, the first primitive is executed in the environment using the learned agent $\pi$ .

图 3: 在测试阶段,规划器使用技能动力学 $q$ 模拟环境中的状态转移,并根据模拟轨迹上的计算奖励更新计划分布。经过几次计划更新后,第一个基元动作通过已学习的智能体 $\pi$ 在环境中执行。

The latent planner $P$ maintains a distribution of latent plans, each of length $H_{P}$ . Each element in the sequence represents the distribution of the primitive to be executed at that time step. For continuous spaces, each element of the sequence can be modelled using a normal distribution, $\mathcal{N}(\mu_{1},\Sigma),\dots\mathcal{N}(\mu_{H_{P}},\Sigma)$ . We refine the planning distributions for $R$ steps, using $K$ samples of latent plans $P_{k}$ , and compute the $r_{k}$ for the simulated trajectory $\hat{\tau}_{k}$ . The update for the parameters follows that in Model Predictive Path Integral (MPPI) controller Williams et al. (2016):

潜在规划器 $P$ 维持着一组长度为 $H_{P}$ 的潜在计划分布。序列中的每个元素代表该时间步要执行的基本动作分布。对于连续空间,序列的每个元素可以用正态分布建模,即 $\mathcal{N}(\mu_{1},\Sigma),\dots\mathcal{N}(\mu_{H_{P}},\Sigma)$。我们通过采样 $K$ 个潜在计划 $P_{k}$,对规划分布进行 $R$ 步优化,并计算模拟轨迹 $\hat{\tau}_ {k}$ 的奖励 $r_{k}$。参数更新遵循模型预测路径积分 (MPPI) 控制器的规则 (Williams et al., 2016):

$$

\mu_{i}=\sum_{k=1}^{K}\frac{\exp(\gamma r_{k})}{\sum_{p=1}^{K}\exp(\gamma r_{p})}z_{k,i}\quad\forall i=1,\dots{\cal H}_{P}

$$

$$

\mu_{i}=\sum_{k=1}^{K}\frac{\exp(\gamma r_{k})}{\sum_{p=1}^{K}\exp(\gamma r_{p})}z_{k,i}\quad\forall i=1,\dots{\cal H}_{P}

$$

While we keep the covariance matrix of the distributions fixed, it is possible to update that as well as shown in Williams et al. (2016). We show an overview of the planning algorithm in Figure 3, and provide more implementation details in Appendix A.

虽然我们保持分布的协方差矩阵固定,但也可以按照 Williams 等人 (2016) 所示的方法进行更新。我们在图 3 中展示了规划算法的概览,并在附录 A 中提供了更多实现细节。

5 RELATED WORK

5 相关工作

Central to our method is the concept of skill discovery via mutual information maximization. This principle, proposed in prior work that utilized purely model-free unsupervised RL methods (Daniel et al., 2012; Florensa et al., 2017; Eysenbach et al., 2018; Gregor et al., 2016; Warde-Farley et al., 2018; Thomas et al., 2018), aims to learn diverse skills via a disc rim inability objective: a good set of skills is one where it is easy to distinguish the skills from each other, which means they perform distinct tasks and cover the space of possible behaviors. Building on this prior work, we distinguish our skills based on how they modify the original uncontrolled dynamics of the system. This simultaneously encourages the skills to be both diverse and predictable. We also demonstrate that constraining the skills to be predictable makes them more amenable for hierarchical composition and thus, more useful on downstream tasks.

我们方法的核心是通过互信息最大化实现技能发现。这一原则源自先前采用纯无模型无监督强化学习方法的研究 (Daniel et al., 2012; Florensa et al., 2017; Eysenbach et al., 2018; Gregor et al., 2016; Warde-Farley et al., 2018; Thomas et al., 2018) ,其目标是通过可判别性目标学习多样化技能:优质的技能组合应易于相互区分,这意味着它们执行不同任务并覆盖可能的行为空间。基于这些研究基础,我们根据技能如何改变系统原始非受控动态来区分技能。这种方法同时促进技能的多样性和可预测性。我们还证明,约束技能的可预测性使其更适用于层次化组合,从而在下游任务中更具实用性。

Another line of work that is conceptually close to our method copes with intrinsic motivation (Oudeyer & Kaplan, 2009; Oudeyer et al., 2007; Schmid huber, 2010) which is used to drive the agent’s exploration. Examples of such works include empowerment Klyubin et al. (2005); Mohamed & Rezende (2015), count-based exploration Bellemare et al. (2016); Oh et al. (2015); Tang et al. (2017); Fu et al. (2017), information gain about agent’s dynamics Stadie et al. (2015) and forward-inverse dynamics models Pathak et al. (2017). While our method uses an informationtheoretic objective that is similar to these approaches, it is used to learn a variety of skills that can be directly used for model-based planning, which is in contrast to learning a better exploration policy for a single skill.

另一项在概念上与我们方法相近的工作涉及内在动机 (Oudeyer & Kaplan, 2009; Oudeyer et al., 2007; Schmidhuber, 2010),用于驱动智能体的探索。这类工作的例子包括赋能 (Klyubin et al., 2005; Mohamed & Rezende, 2015)、基于计数的探索 (Bellemare et al., 2016; Oh et al., 2015; Tang et al., 2017; Fu et al., 2017)、关于智能体动态的信息增益 (Stadie et al., 2015) 以及前向-逆向动态模型 (Pathak et al., 2017)。虽然我们的方法使用了与这些方法类似的信息论目标,但它用于学习多种可直接用于基于模型规划的技能,这与为单一技能学习更好的探索策略形成对比。

The skills discovered using our approach can also provide extended actions and temporal abstraction, which enable more efficient exploration for the agent to solve various tasks, reminiscent of hierarchical RL (HRL) approaches. This ranges from the classic option-critic architecture (Sutton et al., 1999; Stolle & Precup, 2002; Perkins et al., 1999) to some of the more recent work (Bacon et al., 2017; Vezhnevets et al., 2017; Nachum et al., 2018; Hausman et al., 2018). However, in contrast to end-to-end HRL approaches (Heess et al., 2016; Peng et al., 2017), we can leverage a stable, two-phase learning setup. The primitives learned through our method provide action and temporal abstraction, while planning with skill-dynamics enables hierarchical composition of these primitives, bypassing many problems of end-to-end HRL.

通过我们的方法发现的技能还能提供扩展动作和时间抽象能力,使得智能体在解决各类任务时能进行更高效的探索,这让人联想到分层强化学习 (HRL) 方法。这类方法涵盖从经典选项-评论家架构 (Sutton et al., 1999; Stolle & Precup, 2002; Perkins et al., 1999) 到近年研究 (Bacon et al., 2017; Vezhnevets et al., 2017; Nachum et al., 2018; Hausman et al., 2018) 。但与端到端HRL方法 (Heess et al., 2016; Peng et al., 2017) 不同,我们采用了稳定的两阶段学习框架:通过本方法学习的基础技能提供动作与时间抽象能力,而基于技能动力学的规划则实现了这些基础技能的分层组合,从而规避了端到端HRL的诸多问题。

In the second phase of our approach, we use the learned skill-transition dynamics models to perform model-based planning - an idea that has been explored numerous times in the literature. Model-based reinforcement learning has been traditionally approached with methods that are well-suited for lowdata regimes such as Gaussian Processes (Rasmussen, 2003) showing significant data-efficiency gains over model-free approaches (Deisenroth et al., 2013; Kamthe & Deisenroth, 2017; Kocijan et al., 2004; Ko et al., 2007). More recently, due to the challenges of applying these methods to highdimensional state spaces, MBRL approaches employs Bayesian deep neural networks (Nagabandi et al., 2018; Chua et al., 2018b; Gal et al., 2016; Fu et al., 2016; Lenz et al., 2015) to learn dynamics models. In our approach, we take advantage of the deep dynamics models that are conditioned on the skill being executed, simplifying the modelling problem. In addition, the skills themselves are being learned with the objective of being predictable, further assists with the learning of the dynamics model. There also have been multiple approaches addressing the planning component of MBRL including linear controllers for local models (Levine et al., 2016; Kumar et al., 2016; Chebotar et al., 2017), uncertainty-aware (Chua et al., 2018b; Gal et al., 2016) or deterministic planners (Nagabandi et al., 2018) and stochastic optimization methods (Williams et al., 2016). The main contribution of our work lies in discovering model-based skill primitives that can be further combined by a standard model-based planner, therefore we take advantage of an existing planning approach - Model Predictive Path Integral (Williams et al., 2016) that can leverage our pre-trained setting.

在我们方法的第二阶段,利用学习到的技能转移动态模型进行基于模型的规划——这一思路在文献中已被多次探讨。传统上,基于模型的强化学习采用高斯过程 (Gaussian Processes) [Rasmussen, 2003] 等适用于低数据量场景的方法,相比无模型方法展现出显著的数据效率优势 [Deisenroth et al., 2013; Kamthe & Deisenroth, 2017; Kocijan et al., 2004; Ko et al., 2007]。近年来,针对高维状态空间的建模挑战,基于模型的强化学习方法开始采用贝叶斯深度神经网络 [Nagabandi et al., 2018; Chua et al., 2018b; Gal et al., 2016; Fu et al., 2016; Lenz et al., 2015] 来学习动态模型。我们的方法利用以执行技能为条件的深度动态模型,简化了建模问题。此外,技能本身以可预测性为目标进行学习,进一步辅助动态模型的学习。针对基于模型强化学习的规划环节,现有方法包括局部模型的线性控制器 [Levine et al., 2016; Kumar et al., 2016; Chebotar et al., 2017]、不确定性感知 [Chua et al., 2018b; Gal et al., 2016] 或确定性规划器 [Nagabandi et al., 2018] 以及随机优化方法 [Williams et al., 2016]。本工作的核心贡献在于发现可被标准基于模型规划器进一步组合的模型化技能基元,因此我们采用现有规划方法——模型预测路径积分 (Model Predictive Path Integral) [Williams et al., 2016],该方法能有效利用我们的预训练设置。

6 EXPERIMENTS

6 实验

Through our experiments, we aim to demonstrate that: (a) DADS as a general purpose skill discovery algorithm can scale to high-dimensional problems; (b) discovered skills are amenable to hierarchical composition and; (c) not only is planning in the learned latent space feasible, but it is competitive to strong baselines. In Section 6.1, we provide visualization s and qualitative analysis of the skills learned using DADS. We demonstrate in Section 6.2 and Section 6.4 that optimizing the primitives for predictability renders skills more amenable to temporal composition that can be used for Hierarchical RL.We benchmark against state-of-the-art model-based RL baseline in Section 6.3, and against goal-conditioned RL in Section 6.5.

通过实验,我们旨在证明:(a) DADS作为一种通用技能发现算法能够扩展到高维问题;(b) 发现的技能适合分层组合;(c) 在学习的潜在空间中进行规划不仅可行,而且能与强基线方法竞争。在6.1节中,我们提供了使用DADS学习技能的可视化及定性分析。6.2节和6.4节证明,通过优化基元可预测性可使技能更适用于时序组合,从而用于分层强化学习。6.3节我们对比了最先进的基于模型的强化学习基线方法,6.5节则对比了目标条件强化学习方法。

6.1 QUALITATIVE ANALYSIS

6.1 定性分析

Figure 4: Skills learned on different MuJoCo environments in the OpenAI gym. DADS can discover diverse skills without any extrinsic rewards, even for problems with high-dimensional state and action spaces.

图 4: 在OpenAI gym的不同MuJoCo环境中学习到的技能。DADS无需任何外部奖励即可发现多样化技能,即便面对高维状态和动作空间的问题也是如此。

In this section, we provide a qualitative discussion of the unsupervised skills learned using DADS. We use the MuJoCo environments (Todorov et al., 2012) from the OpenAI gym as our test-bed (Brockman et al., 2016). We find that our proposed algorithm can learn diverse skills without any reward, even in problems with high-dimensional state and actuation, as illustrated in Figure 4. DADS can discover primitives for Half-Cheetah to run forward and backward with multiple different gaits, for Ant to navigate the environment using diverse locomotion primitives and for Humanoid to walk using stable locomotion primitives with diverse gaits and direction. The videos of the discovered primitives are available at: https://sites.google.com/view/dads-skill

在本节中,我们定性讨论使用DADS学习的无监督技能。我们以OpenAI gym中的MuJoCo环境 [Todorov et al., 2012] 作为测试平台 [Brockman et al., 2016] 。如图4所示,我们发现所提算法能在无奖励条件下学习多样化技能,即使在高维状态与执行机构的问题中。DADS能为Half-Cheetah发现前进/后退的多步态运动基元,为Ant发现多种移动基元以实现环境导航,并为人形机器人(Humanoid)发现具有多样步态和方向的稳定运动基元。技能发现视频详见: https://sites.google.com/view/dads-skill

Qualitatively, we find the skills discovered by DADS to be predictable and stable, in line with implicit constraints of the proposed objective. While the Half-Cheetah will learn to run in both backward and forward directions, DADS will d is in centi viz e skills which make Half-Cheetah flip owing to the reduced predictability on landing. Similarly, skills discovered for Ant rarely flip over, and tend to provide stable navigation primitives in the environment. This also in centi viz es the Humanoid, which is characteristically prone to collapsing and extremely unstable by design, to discover gaits which are stable for sustainable locomotion.

定性地看,我们发现DADS发现的技能具有可预测性和稳定性,符合所提出目标的隐含约束。虽然Half-Cheetah会学习向前和向后奔跑,但DADS会优先选择那些使Half-Cheetah翻转的技能,因为着陆时的可预测性降低。类似地,为Ant发现的技能很少会翻转,并倾向于在环境中提供稳定的导航原语。这也促使Humanoid(其设计特性容易倒塌且极不稳定)发现适合持续运动的稳定步态。

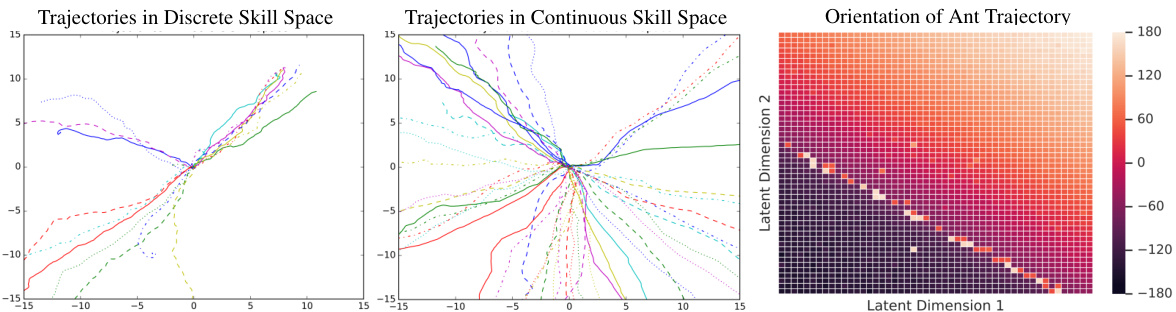

One of the significant advantages of the proposed objective is that it is compatible with continuous skill spaces, which has not been shown in prior work on skill discovery (Eysenbach et al., 2018). Not only does this allow us to embed a large and diverse set of skills into a compact latent space, but also the smoothness of the learned space allows us to interpolate between behaviors generated in the environment. We demonstrate this on the Ant environment (Figure 5), where we learn two-dimensional continuous skill space with a uniform prior over $(-1,1)$ in each dimension, and compare it to a discrete skill space with a uniform prior over 20 skills. Similar to Eysenbach et al. (2018), we restrict the observation space of the skill-dynamics $q$ to the cartesian coordinates $(x,y)$ . We hereby call this the $x$ -y prior, and discuss its role in Section 6.2.

所提目标函数的一大显著优势在于其兼容连续技能空间,这一特性在先前技能发现研究中尚未得到验证 (Eysenbach et al., 2018)。这不仅使我们能够将大量多样化技能嵌入紧凑的潜在空间,所学空间的平滑性还支持对环境生成行为进行插值。我们在Ant环境中对此进行了演示 (图5):通过在每个维度上建立(-1,1)均匀先验的二维连续技能空间,并与20个离散技能的均匀先验空间进行对比。与Eysenbach等人(2018)类似,我们将技能动力学q的观测空间限制在笛卡尔坐标(x,y)内。此处称其为x-y先验,其作用将在6.2节讨论。

Figure 5: (Left, Centre) X-Y traces of Ant skills and (Right) Heatmap to visualize the learned continuous skill space. Traces demonstrate that the continuous space offers far greater diversity of skills, while the heatmap demonstrates that the learned space is smooth, as the orientation of the X-Y trace varies smoothly as a function of the skill.

图 5: (左, 中) 蚂蚁技能的X-Y轨迹 和 (右) 可视化学习连续技能空间的热力图。轨迹表明连续空间提供了更大的技能多样性,而热力图显示学习空间是平滑的,因为X-Y轨迹的方向会随着技能参数平滑变化。

In Figure 5, we project the trajectories of the learned Ant skills from both discrete and continuous spaces onto the Cartesian plane. From the traces of the skills, it is clear that the continuous latent space can generate more diverse trajectories. We demonstrate in Section 6.3, that continuous primitives are more amenable to hierarchical composition and generally perform better on downstream tasks. More importantly, we observe that the learned skill space is semantically meaningful. The heatmap in Figure 5 shows the orientation of the trajectory (with respect to the $x$ -axis) as a function of the skill $z\in{\mathcal{Z}}$ , which varies smoothly as $z$ is varied, with explicit interpolations shown in Appendix D.

在图5中,我们将离散和连续空间中学到的Ant技能轨迹投影到笛卡尔平面上。从技能轨迹可以明显看出,连续潜在空间能生成更多样化的路径。第6.3节将证明,连续基元更适用于层次化组合,且通常在下游任务中表现更优。更重要的是,我们观察到所学技能空间具有语义意义。图5中的热力图展示了轨迹方向(相对于$x$轴)随技能$z\in{\mathcal{Z}}$变化的函数关系,当$z$变化时方向会平滑过渡,具体插值示例见附录D。

6.2 SKILL VARIANCE ANALYSIS

6.2 技能差异分析

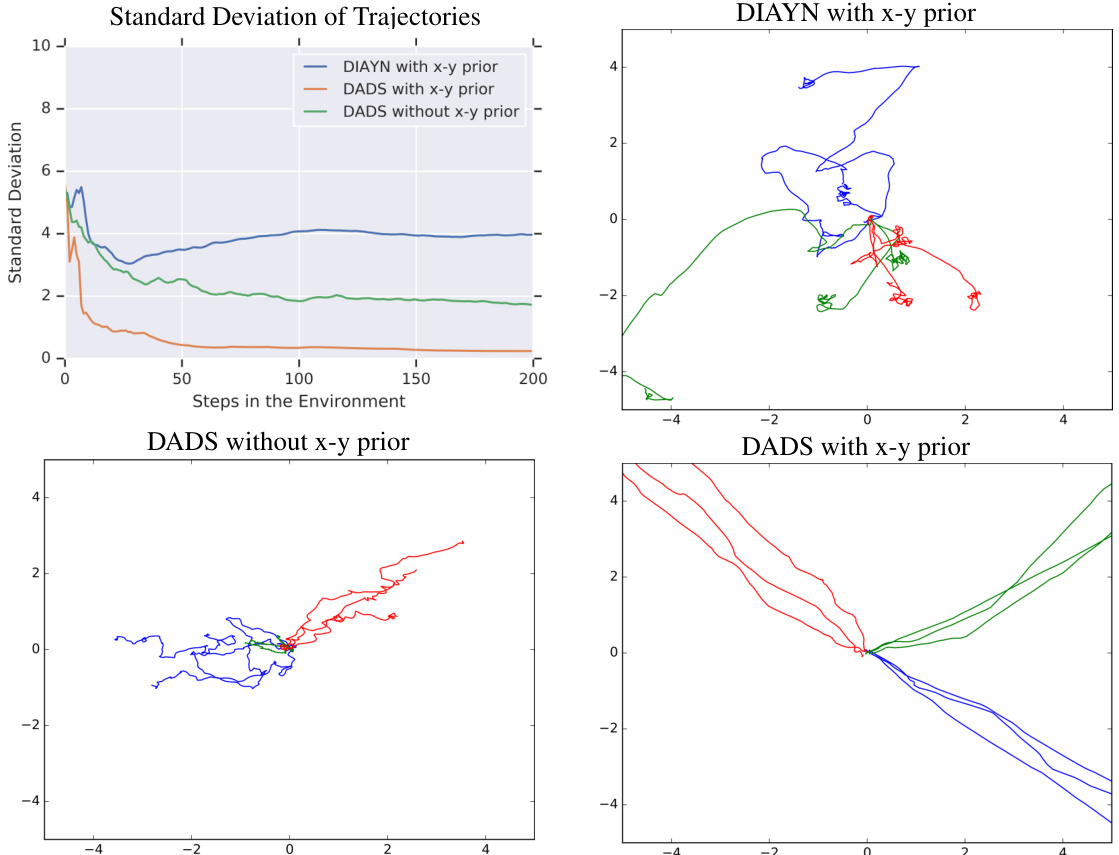

In an unsupervised skill learning setup, it is important to optimize the primitives to be diverse. However, we argue that diversity is not sufficient for the learned primitives to be useful for downstream tasks. Primitives must exhibit low-variance behavior, which enables long-horizon composition of the learned skills in a hierarchical setup. We analyze the variance of the $x{-}y$ trajectories in the environment, where we also benchmark the variance of the primitives learned by DIAYN (Eysenbach et al., 2018). For DIAYN, we use the $x{-}y$ prior for the skill-disc rim in at or, which biases the discovered skills to diversify in the x-y space. This step was necessary for that baseline to obtain a competitive set of navigation skills. Figure 6 (Top-Left) demonstrates that DADS, which optimizes the primitives for predictability and diversity, yields significantly lower-variance primitives when compared to DIAYN, which only optimizes for diversity. This is starkly demonstrated in the plots of X-Y traces of skills learned in different setups. Skills learned by DADS show significant control over the trajectories generated in the environment, while skills from DIAYN exhibit high variance in the environment, which limits their utility for hierarchical control. This is further demonstrated quantitatively in Section 6.4.

在无监督技能学习设置中,优化基元以实现多样性至关重要。但我们认为,仅靠多样性不足以使所学基元对下游任务有用。基元必须表现出低方差行为,才能在分层设置中实现所学技能的长期组合。我们分析了环境中$x{-}y$轨迹的方差,并对比了DIAYN (Eysenbach et al., 2018) 所学基元的方差基准。对于DIAYN,我们在技能判别器中使用$x{-}y$先验,使发现的技能偏向于在x-y空间多样化。这一步骤是该基线获得具有竞争力的导航技能集的必要条件。图6(左上)显示,同时优化可预测性和多样性的DADS,与仅优化多样性的DIAYN相比,产生的基元方差显著更低。在不同设置中学到的技能X-Y轨迹图中,这一点表现得尤为明显。DADS学到的技能对环境生成的轨迹表现出显著控制力,而DIAYN技能在环境中表现出高方差,这限制了它们在分层控制中的实用性。第6.4节进一步定量论证了这一点。

Figure 6: (Top-Left) Standard deviation of Ant’s position as a function of steps in the environment, averaged over multiple skills and normalized by the norm of the position. (Top-Right to Bottom-Left Clockwise) X-Y traces of skills learned using DIAYN with $x{-}y$ prior, DADS with $x{-}y$ prior and DADS without x-y prior, where the same color represents trajectories resulting from the execution of the same skill $z$ in the environment. High variance skills from DIAYN offer limited utility for hierarchical control.

图 6: (左上) 蚂蚁位置的标准差随环境中步数的变化曲线,经多技能平均并按位置范数归一化。(右上至左下顺时针方向) 分别展示使用带$x{-}y$先验的DIAYN、带$x{-}y$先验的DADS以及无$x{-}y$先验的DADS学习到的技能在X-Y平面上的轨迹,同色轨迹表示环境中执行同一技能$z$产生的路径。DIAYN生成的高方差技能在分层控制中实用性有限。

While optimizing for predictability already significantly reduces the variance of the trajectories generated by a primitive, we find that using the $x{-}y$ prior with DADS brings down the skill variance even further. For quantitative benchmarks in the next sections, we assume that the Ant skills are learned using an $x{-}y$ prior on the observation space, for both DADS and DIAYN.

在优化可预测性已显著降低基础动作生成的轨迹方差的基础上,我们发现结合DADS使用$x{-}y$先验能进一步缩小技能方差。针对后续章节的量化基准测试,我们假设Ant技能的学习均采用观测空间的$x{-}y$先验,该方法同时适用于DADS与DIAYN。

6.3 MODEL-BASED REINFORCEMENT LEARNING

6.3 基于模型的强化学习

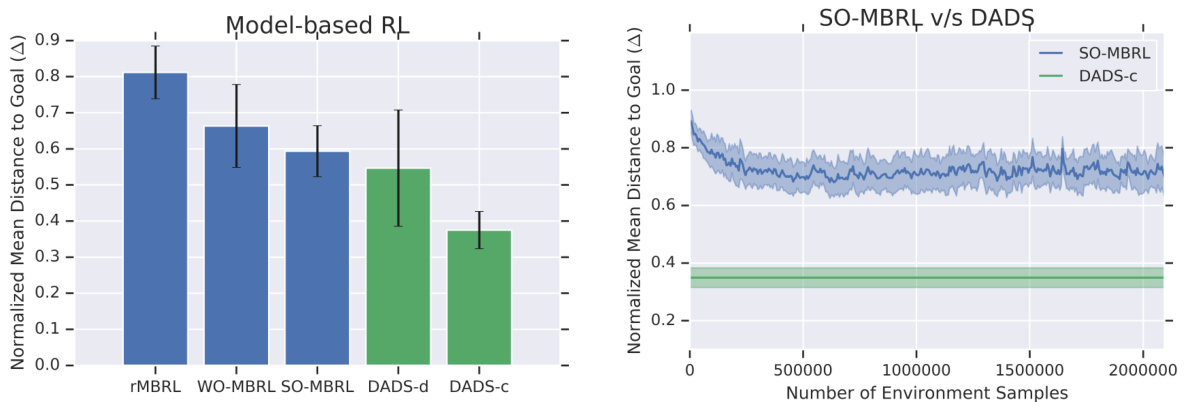

The key utility of learning a parametric model $q_{\phi}(s^{\prime}|s,z)$ is to take advantage of planning algorithms for downstream tasks, which can be extremely sample-efficient. In our setup, we can solve testtime tasks in zero-shot, that is without any learning on the downstream task. We compare with the state-of-the-art model-based RL method (Chua et al., 2018a), which learns a dynamics model parameterized as $p(s^{\prime}|s,a)$ , on the task of the Ant navigating to a specified goal with a dense reward. Given a goal $g$ , reward at any position $u$ is given by $r(\bar{u})=-|g-\bar{u}|_{2}$ . We benchmark our method against the following variants:

学习参数化模型 $q_{\phi}(s^{\prime}|s,z)$ 的核心价值在于能够利用规划算法处理下游任务,这种方式具有极高的样本效率。在我们的框架中,可以零样本解决测试阶段任务,即无需对下游任务进行任何学习。我们与基于模型的最先进强化学习方法 (Chua et al., 2018a) 进行对比,该方法学习的动态模型参数化为 $p(s^{\prime}|s,a)$ ,测试任务为蚂蚁在密集奖励环境下导航至指定目标。给定目标 $g$ 时,任意位置 $\bar{u}$ 的奖励由 $r(\bar{u})=-|g-\bar{u}|_{2}$ 计算。我们通过以下变体对本方法进行基准测试:

• Random-MBRL (rMBRL): We train the model $p(s^{\prime}|s,a)$ on randomly collected trajectories, and test the zero-shot generalization of the model on a distribution of goals.

• 随机MBRL (rMBRL): 我们在随机收集的轨迹上训练模型 $p(s^{\prime}|s,a)$, 并在目标分布上测试该模型的零样本泛化能力。

• Weak-oracle MBRL (WO-MBRL): We train the model $p(s^{\prime}|s,a)$ on trajectories generated by the planner to navigate to a goal, randomly sampled in every episode. The distribution of goals during training matches the distribution at test time. • Strong-oracle MBRL (SO-MBRL): We train the model $p(s^{\prime}|s,a)$ on a trajectories generated by the planner to navigate to a specific goal, which is fixed for both training and test time.

• 弱预言机MBRL (WO-MBRL):我们在规划器生成的轨迹上训练模型 $p(s^{\prime}|s,a)$,使智能体能够导航到每轮随机采样的目标。训练期间的目标分布与测试时保持一致。

• 强预言机MBRL (SO-MBRL):我们在规划器生成的轨迹上训练模型 $p(s^{\prime}|s,a)$,使智能体导航至固定目标,该目标在训练和测试阶段保持不变。

Amongst the variants, only the rMBRL matches our assumptions of having an unsupervised taskagnostic training. Both WO-MBRL and SO-MBRL benefit from goal-directed exploration during training, a significant advantage over DADS, which only uses mutual-information-based exploration.

在众多变体中,只有rMBRL符合我们关于无监督任务无关训练的假设。WO-MBRL和SO-MBRL都受益于训练期间的目标导向探索,这一显著优势超越了仅使用基于互信息探索的DADS。

We use ∆ = PtH=1 H−r(gu)2 as the metric, which represents the distance to the goal g averaged over the episode (wit h the same fixed horizon $H$ for all models and experiments), normalized by the initial distance to the goal $g$ . Therefore, lower $\Delta$ indicates better performance and $0<\Delta\leq1$ (assuming the agent goes closer to the goal). The test set of goals is fixed for all the methods, sampled from $[-15,15]^{2}$ .

我们使用∆ = PtH=1 H−r(gu)2作为指标,它表示在整个回合中到目标g的平均距离(所有模型和实验均采用相同的固定时间范围$H$),并通过初始到目标$g$的距离进行归一化。因此,较低的$\Delta$表示性能更好,且$0<\Delta\leq1$(假设智能体更接近目标)。所有方法的测试目标集均固定,采样自$[-15,15]^{2}$。

Figure 7 demonstrates that the zero-shot planning significantly outperforms all model-based RL baselines, despite the advantage of the baselines being trained on the test goal(s). For the experiment depicted in Figure 7 (Right), DADS has an unsupervised pre-training phase, unlike SO-MBRL which is training directly for the task. A comparison with Random-MBRL shows the significance of mutual-information-based exploration, especially with the right parameter iz ation and priors. This experiment also demonstrates the advantage of learning a continuous space of primitives, which outperforms planning on discrete primitives.

图7表明,零样本规划显著优于所有基于模型的强化学习基线,尽管基线模型在测试目标上经过了训练。在图7(右)所示的实验中,DADS具有无监督预训练阶段,这与直接针对任务进行训练的SO-MBRL不同。与Random-MBRL的对比显示了基于互信息探索的重要性,特别是在正确的参数化和先验条件下。该实验还证明了学习连续基元空间的优势,其性能优于离散基元上的规划。

Figure 7: (Left) The results of the MPPI controller on skills learned using DADS-c (continuous primitives) and DADS-d (discrete primitives) significantly outperforms state-of-the-art model-based RL. (Right) Planning for a new task does not require any additional training and outperforms model-based RL being trained for the specific task.

图 7: (左) 使用DADS-c(连续基元)和DADS-d(离散基元)学习技能后,MPPI控制器的结果显著优于最先进的基于模型的强化学习。(右) 针对新任务的规划无需任何额外训练,其表现优于针对特定任务训练的基于模型的强化学习。

6.4 HIERARCHICAL CONTROL WITH UNSUPERVISED PRIMITIVES

6.4 基于无监督基元的层次化控制

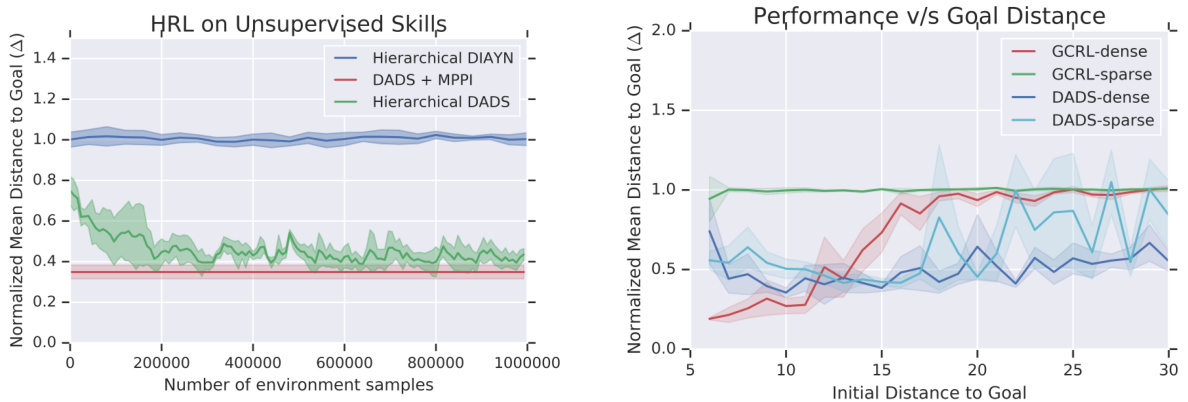

We benchmark hierarchical control for primitives learned without supervision, against our proposed scheme using an MPPI based planner on top of DADS-learned skills. We persist with the task of Ant-navigation as described in 6.3. We benchmark against Hierarchical DIAYN (Eysenbach et al., 2018), which learns the skills using the DIAYN objective, freezes the low-level policy and learns a meta-controller that outputs the skill to be executed for the next $H_{Z}$ steps. We provide the $x{-}y$ prior to the DIAYN’s disc imina tor while learning the skills for the Ant agent. The performance of the meta-controller is constrained by the low-level policy, however, this hierarchical scheme is agnostic to the algorithm used to learn the low-level policy. To contrast the quality of primitives learned by the DADS and DIAYN, we also benchmark against Hierarchical DADS, which learns a meta-controller the same way as Hierarchical DIAYN, but learns the skills using DADS.

我们对无监督学习的原始分层控制进行了基准测试,并与我们提出的方案进行了对比。我们的方案在DADS学习技能的基础上使用了基于MPPI的规划器。我们延续了6.3节中描述的蚂蚁导航任务。我们以分层DIAYN (Eysenbach et al., 2018) 作为基准,该方法通过DIAYN目标学习技能,冻结底层策略并学习一个元控制器,该控制器输出接下来 $H_{Z}$ 步要执行的技能。在为蚂蚁智能体学习技能时,我们向DIAYN的判别器提供了 $x{-}y$ 先验。元控制器的性能受限于底层策略,但该分层方案对学习底层策略的算法是不可知的。为了对比DADS和DIAYN学习的原始技能质量,我们还以分层DADS作为基准,该方法与分层DIAYN学习元控制器的方式相同,但使用DADS学习技能。

From Figure 8 (Left) We find that the meta-controller is unable to compose the skills learned by DIAYN, while the same meta-controller can learn to compose skills by DADS to navigate the Ant to different goals. This result seems to confirm our intuition described in Section 6.2, that the high variance of the DIAYN skills limits their temporal compositional it y. Interestingly, learning a RL meta-controller reaches similar performance to the MPPI controller, taking an additional 200, 000 samples per goal.

从图 8 (左) 我们发现元控制器无法组合 DIAYN 学习的技能,而相同的元控制器可以通过组合 DADS 学习的技能来引导 Ant 到达不同目标。这一结果似乎验证了我们在 6.2 节的直觉:DIAYN 技能的高方差性限制了其时间组合能力。值得注意的是,学习 RL 元控制器达到了与 MPPI 控制器相近的性能,但每个目标需要额外采样 200, 000 次。

Figure 8: (Left) A RL-trained meta-controller is unable to compose primitive learned by DIAYN to navigate Ant to a goal, while it succeeds to do so using the primitives learned by DADS. (Right) Goal-Conditioned RL (GCRL-dense/sparse) does not generalize outside its training distribution, while MPPI controller on learned skills (DADS-dense/sparse) experiences significantly smaller degrade in performance.

图 8: (左) 经过强化学习训练的元控制器无法组合由DIAYN习得的原始动作来引导Ant到达目标,而使用DADS学习的原始动作则能成功实现。(右) 目标条件强化学习(GCRL-dense/sparse)在训练分布之外无法泛化,而基于习得技能的MPPI控制器(DADS-dense/sparse)性能下降幅度显著更小。

6.5 GOAL-CONDITIONED RL

6.5 目标条件强化学习

To demonstrate the benefits of our approach over model-free RL, we benchmark against goalconditioned RL on two versions of Ant-navigation: (a) with a dense reward $r(u)$ and (b) with a sparse reward $r(u)=1$ if $|u-g|_{2}\leq\epsilon$ , else 0. We train the goal-conditioned RL agent using soft actor-critic, where the state variable of the agent is augmented with $u-g$ , the position delta to the goal. The agent gets a randomly sampled goal from $[-10,10]^{2}$ at the beginning of the episode.

为了展示我们的方法相较于无模型强化学习(RL)的优势,我们在两种版本的蚂蚁导航任务上对目标条件强化学习进行了基准测试:(a) 使用密集奖励 $r(u)$ ,(b) 使用稀疏奖励 $r(u)=1$ (当 $|u-g|_{2}\leq\epsilon$ 时),否则为0。我们采用软演员-评论家算法训练目标条件强化学习智能体,其状态变量通过目标位置差 $u-g$ 进行扩展。每轮训练开始时,智能体会从 $[-10,10]^{2}$ 范围内随机采样一个目标。

In Figure 8 (Right), we measure the average performance of the all the methods as a function of the initial distance of the goal, ranging from 5 to 30 metres. For dense reward navigation, we observe that while model-based planning on DADS-learned skills degrades smoothly as the initial distance to goal to increases, goal-conditioned RL experiences a sudden deterioration outside the goal distribution it was trained on. Even within the goal distribution observed during training of goal-conditioned RL model, skill-space planning performs competitively to it. With sparse reward navigation, goal-conditioned RL is unable to navigate, while MPPI demonstrates comparable performance to the dense reward up to about 20 metres. This highlights the utility of learning task-agnostic skills, which makes them more general while showing that latent space planning can be leveraged for tasks requiring long-horizon planning.

在图 8 (右) 中,我们测量了所有方法的平均性能随目标初始距离 (5 至 30 米) 的变化情况。对于密集奖励导航,我们观察到基于 DADS 学习技能的模型规划性能会随着目标初始距离的增加而平稳下降,而目标条件强化学习 (goal-conditioned RL) 在训练目标分布之外会出现突然的性能恶化。即使在目标条件强化学习模型的训练目标分布范围内,技能空间规划的表现也与其相当。在稀疏奖励导航中,目标条件强化学习无法完成导航任务,而 MPPI 在 20 米范围内的表现与密集奖励相当。这凸显了学习任务无关技能的优势:它们更具通用性,同时表明潜空间规划可用于需要长程规划的任务。

7 CONCLUSION

7 结论

We have proposed a novel unsupervised skill learning algorithm that is amenable to model-based planning for hierarchical control on downstream tasks. We show that our skill learning method can scale to high-dimensional state-spaces, while discovering a diverse set of low-variance skills. In addition, we demonstrated that, without any training on the specified task, we can compose the learned skills to outperform competitive model-based baselines that were trained with the knowledge of the test tasks. We plan to extend the algorithm to work with off-policy data, potentially using relabelling tricks (An dry ch owicz et al., 2017; Nachum et al., 2018) and explore more nuanced planning algorithms. We plan to apply the hereby-introduced method to different domains, such as manipulation and enable skill/model discovery directly from images.

我们提出了一种新颖的无监督技能学习算法,该算法适用于下游任务分层控制的基于模型的规划。我们证明,我们的技能学习方法能够扩展到高维状态空间,同时发现一组多样化的低方差技能。此外,我们还展示了,在未对指定任务进行任何训练的情况下,我们可以组合所学技能,以超越那些在测试任务知识基础上训练的基于模型的竞争基线。我们计划将该算法扩展到适用于离策略数据,可能会使用重新标记技巧 (An dry ch owicz et al., 2017; Nachum et al., 2018),并探索更细致的规划算法。我们计划将本文提出的方法应用于不同领域,例如操作任务,并直接从图像中实现技能/模型发现。

8 ACKNOWLEDGEMENTS

8 致谢

We would like to thank Evan Liu, Ben Eysenbach, Anusha Nagabandi for their help in reproducing the baselines for this work. We are thankful to Ben Eysenbach for their comments and discussion on the initial drafts. We would also like to acknowledge Ofir Nachum, Alex Alemi, Daniel Freeman, Yiding Jiang, Allan Zhou and other colleagues at Google Brain for their helpful feedback and discussions at various stages of this work. We are also thankful to Michael Ahn and others in Adept team for their support, especially with the infrastructure setup and scaling up the experiments.

我们要感谢Evan Liu、Ben Eysenbach和Anusha Nagabandi在复现本工作基线时提供的帮助。感谢Ben Eysenbach对初稿提出的意见与讨论。同时,我们也要感谢Ofir Nachum、Alex Alemi、Daniel Freeman、Yiding Jiang、Allan Zhou以及Google Brain的其他同事在本工作各个阶段给予的有益反馈和讨论。此外,特别感谢Michael Ahn及Adept团队其他成员的支持,尤其是在基础设施搭建和实验扩展方面提供的帮助。

REFERENCES

参考文献

Benjamin Eysenbach, Abhishek Gupta, Julian Ibarz, and Sergey Levine. Diversity is all you need: Learning skills without a reward function. arXiv preprint arXiv:1802.06070, 2018.

Benjamin Eysenbach、Abhishek Gupta、Julian Ibarz 和 Sergey Levine。《多样性即所需:无需奖励函数学习技能》。arXiv预印本 arXiv:1802.06070,2018年。

Carlos Florensa, Yan Duan, and Pieter Abbeel. Stochastic neural networks for hierarchical reinforcement learning. arXiv preprint arXiv:1704.03012, 2017.

Carlos Florensa、Yan Duan 和 Pieter Abbeel。用于分层强化学习的随机神经网络。arXiv预印本 arXiv:1704.03012,2017。

Nir Friedman, Ori Mosenzon, Noam Slonim, and Naftali Tishby. Multivariate information bottleneck. In Proceedings of the Seventeenth conference on Uncertainty in artificial intelligence, pp. 152–161. Morgan Kaufmann Publishers Inc., 2001.

Nir Friedman、Ori Mosenzon、Noam Slonim 和 Naftali Tishby。多元信息瓶颈 (Multivariate Information Bottleneck)。载于《第十七届人工智能不确定性会议论文集》第152-161页。Morgan Kaufmann出版社,2001年。

Justin Fu, Sergey Levine, and Pieter Abbeel. One-shot learning of manipulation skills with online dynamics adaptation and neural network priors. In 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4019–4026. IEEE, 2016.

Justin Fu、Sergey Levine 和 Pieter Abbeel。基于在线动态适应与神经网络先验的单次操作技能学习。收录于 2016 年 IEEE/RSJ 智能机器人与系统国际会议 (IROS),第 4019–4026 页。IEEE,2016。

Justin Fu, John Co-Reyes, and Sergey Levine. Ex2: Exploration with exemplar models for deep reinforcement learning. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanath an, and R. Garnett (eds.), Advances in Neural Information Processing Systems 30, pp. 2577–2587. Curran Associates, Inc., 2017. URL http://papers.nips.cc/paper/ 6851-ex2-exploration-with-exemplar-models-for-deep-reinforcement-learning. pdf.

Justin Fu、John Co-Reyes 和 Sergey Levine。EX2:基于范例模型的深度强化学习探索。载于 I. Guyon、U. V. Luxburg、S. Bengio、H. Wallach、R. Fergus、S. Vishwanath an 和 R. Garnett (编) ,《神经信息处理系统进展 30》,第 2577–2587 页。Curran Associates 公司,2017。URL http://papers.nips.cc/paper/6851-ex2-exploration-with-exemplar-models-for-deep-reinforcement-learning.pdf。

Yarin Gal, Rowan McAllister, and Carl Edward Rasmussen. Improving pilco with bayesian neural network dynamics models. In Data-Efficient Machine Learning workshop, ICML, volume 4, 2016.

Yarin Gal、Rowan McAllister 和 Carl Edward Rasmussen。利用贝叶斯神经网络动力学模型改进 PILCO。收录于《数据高效机器学习研讨会》,ICML,第4卷,2016年。

Carlos E Garcia, David M Prett, and Manfred Morari. Model predictive control: theory and practicea survey. Automatica, 25(3):335–348, 1989.

Carlos E Garcia、David M Prett 和 Manfred Morari。模型预测控制:理论与实践的综述。Automatica, 25(3):335–348, 1989.

Karol Gregor, Danilo Jimenez Rezende, and Daan Wierstra. Variation al intrinsic control. arXiv preprint arXiv:1611.07507, 2016.

Karol Gregor、Danilo Jimenez Rezende 和 Daan Wierstra. 变分内在控制. arXiv 预印本 arXiv:1611.07507, 2016.

Shixiang Gu, Ethan Holly, Timothy Lillicrap, and Sergey Levine. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In 2017 IEEE International Conference on Robotics and Automation (ICRA), pp. 3389–3396. IEEE, 2017.

Shixiang Gu、Ethan Holly、Timothy Lillicrap和Sergey Levine。基于异步离策略更新的机器人操作深度强化学习。发表于《2017年IEEE机器人与自动化国际会议(ICRA)》,第3389-3396页。IEEE,2017年。

David Ha and Juirgen Schmid huber. Recurrent world models facilitate policy evolution. In Advances in Neural Information Processing Systems, pp. 2455–2467, 2018.

David Ha 和 Juirgen Schmidhuber. 循环世界模型促进策略进化. 载于《神经信息处理系统进展》, 第2455–2467页, 2018.

Tuomas Haarnoja, Aurick Zhou, Pieter Abbeel, and Sergey Levine. Soft actor-critic: Off- policy maximum entropy deep reinforcement learning with a stochastic actor. arXiv preprint arXiv:1801.01290, 2018a.

Tuomas Haarnoja, Aurick Zhou, Pieter Abbeel, 和 Sergey Levine. Soft actor-critic: 随机行动者的离策略最大熵