Distill Any Depth: Distillation Creates a Stronger Monocular Depth Estimator

Distill Any Depth: 蒸馏打造更强大的单目深度估计器

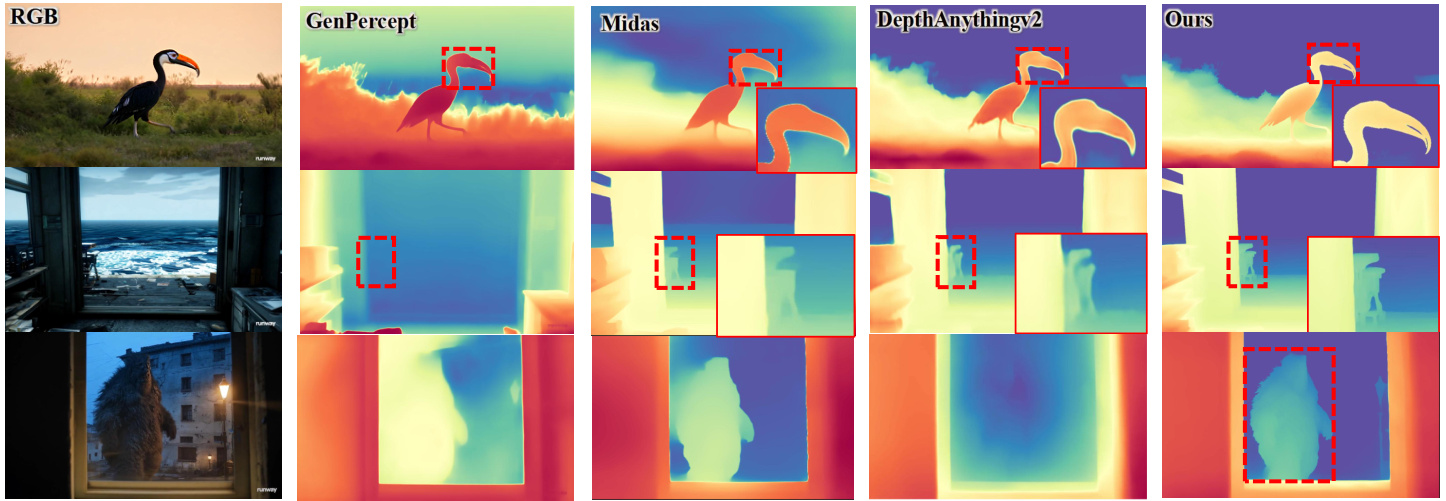

Figure 1: Zero-shot prediction on in-the-wild images. Our model, distilled from Genpercept [49] and Depth Anything v2 [51], outperforms other methods by delivering more accurate depth details and exhibiting superior generalization for monocular depth estimation on in-the-wild images.

图 1: 真实场景图像的零样本预测。我们的模型从 Genpercept [49] 和 Depth Anything v2 [51] 蒸馏而来,通过提供更精确的深度细节并在真实场景图像的单目深度估计中展现出更优的泛化性能,超越了其他方法。

Abstract

摘要

Recent advances in zero-shot monocular depth estimation (MDE) have significantly improved generalization by unifying depth distributions through normalized depth representations and by leveraging large-scale unlabeled data via pseudo-label distillation. However, existing methods that rely on global depth normalization treat all depth values equally, which can amplify noise in pseudo-labels and reduce distillation effectiveness. In this paper, we present a systematic analysis of depth normalization strategies in the context of pseudo-label distillation. Our study shows that, under recent distillation paradigms (e.g., shared-context distillation), normalization is not always necessary; omitting it can help mitigate the impact of noisy supervision. Furthermore, rather than focusing solely on how depth information is represented, we propose Cross-Context Distillation, which integrates both global and local depth cues to enhance pseudo-label quality. We also introduce an assistant-guided distillation strategy that incorporates complementary depth priors from a diffusion-based teacher model, enhancing supervision diversity and robustness. Extensive experiments on benchmark datasets demonstrate that our approach significantly outperforms state-of-the-art methods, both quantitatively and qualitatively.

零样本单目深度估计 (MDE) 的最新进展通过归一化深度表示统一深度分布,并利用伪标签蒸馏处理大规模无标注数据,显著提升了泛化能力。然而,依赖全局深度归一化的现有方法平等对待所有深度值,可能放大伪标签中的噪声并降低蒸馏效果。本文系统分析了伪标签蒸馏中的深度归一化策略,研究表明:在当前蒸馏范式(如共享上下文蒸馏)下,归一化并非必需——省略归一化反而能缓解噪声监督的影响。此外,我们不仅关注深度信息的表示方式,还提出跨上下文蒸馏方法,整合全局与局部深度线索以提升伪标签质量。同时引入辅助引导蒸馏策略,融合基于扩散的教师模型提供的互补深度先验,增强监督多样性与鲁棒性。在基准数据集上的大量实验表明,我们的方法在定量与定性评估中均显著优于现有最优方法。

1. Introduction

1. 引言

Monocular depth estimation (MDE) predicts scene depth from a single RGB image, offering greater flexibility than stereo or multi-view methods and benefiting a wide range of applications, such as autonomous driving and robotic perception [11, 13, 18, 52, 30]. Recent research on zero-shot MDE models [37, 55, 47, 24] aims to handle diverse scenarios, but training such models requires large-scale, diverse depth data, which is often limited by the need for specialized equipment [31, 54]. A promising solution is to use large-scale unlabeled data, which has shown success in tasks like classification and segmentation [27, 63, 48]. Studies like Depth Anything [50] highlight the effectiveness of using pseudo-labels from teacher models for training student models.

单目深度估计 (MDE) 通过单一RGB图像预测场景深度,相比立体或多视角方法具有更高灵活性,可广泛应用于自动驾驶和机器人感知等领域 [11, 13, 18, 52, 30]。近期关于零样本 MDE 模型的研究 [37, 55, 47, 24] 致力于处理多样化场景,但此类模型的训练需要大规模多样化深度数据,而这类数据常受限于专业设备的采集需求 [31, 54]。利用大规模无标注数据作为解决方案已显示出潜力,该方案在分类和分割等任务中已取得成功 [27, 63, 48]。如Depth Anything [50] 等研究表明,使用教师模型生成的伪标签来训练学生模型具有显著效果。

To enable training on such a diverse, mixed dataset, most state-of-the-art methods [51, 40, 55] employ scale-and-shift invariant (SSI) depth representations for loss computation. This approach normalizes raw depth values within an image, making them invariant to scaling and shifting, and ensures that the model learns to focus on relative depth relationships rather than absolute values. The SSI representation facilitates the joint use of diverse depth data, thereby improving the model’s ability to generalize across different scenes [38, 5]. Similarly, during evaluation, the metric depth of the prediction is recovered by solving for the unknown scale and shift coefficients of the predicted depth using least squares, so that standard evaluation metrics can be applied.

为了在这种多样化的混合数据集上进行训练,大多数前沿方法 [51, 40, 55] 采用尺度与平移不变性 (SSI) 深度表示进行损失计算。该方法对图像中的原始深度值进行归一化处理,使其不受缩放和平移的影响,并确保模型学习关注相对深度关系而非绝对值。SSI 表示促进了多样化深度数据的联合使用,从而提升了模型在不同场景间的泛化能力 [38, 5]。同样地,在评估阶段,通过最小二乘法求解预测深度的未知缩放和平移系数来恢复预测的度量深度,确保标准评估指标的应用。
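The least-squares alignment used at evaluation time can be illustrated with a minimal sketch. It assumes the prediction and the ground truth are given as flat lists over valid pixels and solves for a single scale and shift in closed form; the function name `align_scale_shift` is hypothetical, and actual evaluation code may differ (for example, by aligning in disparity space).

```python
def align_scale_shift(pred, target):
    """Solve min over (s, t) of sum_i (s * pred_i + t - target_i)^2 in closed form.

    pred and target are flat lists of depth values over valid pixels.
    """
    n = len(pred)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    cov_pt = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    # Fall back to unit scale for a constant prediction (an added safeguard).
    scale = cov_pt / var_p if var_p > 0 else 1.0
    shift = mean_t - scale * mean_p
    return scale, shift


pred = [0.1, 0.4, 0.7, 1.0]              # affine-invariant prediction
target = [2.0 * p + 3.0 for p in pred]   # ground truth differing by scale 2 and shift 3
scale, shift = align_scale_shift(pred, target)
aligned = [scale * p + shift for p in pred]
```

Because a single scale and shift are fitted over all pixels, errors in one region influence the alignment of every other region, which is the effect examined in Fig. 2.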

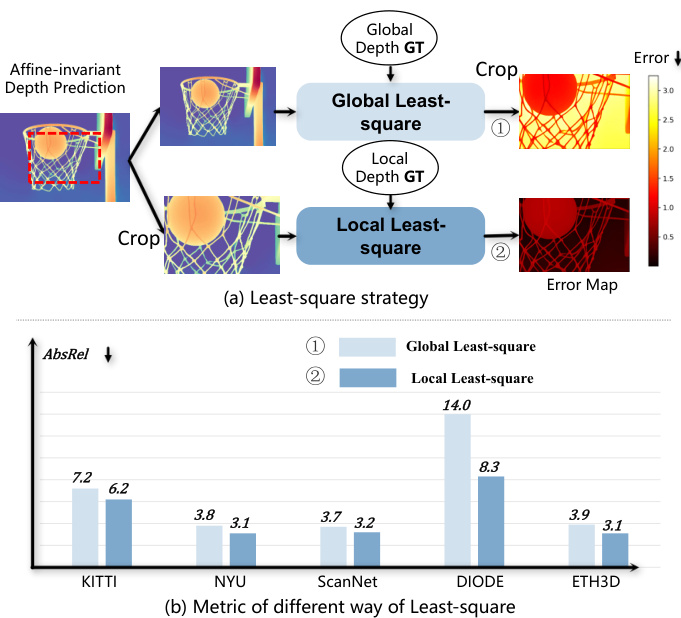

Despite its advantages, using SSI depth representation for pseudo-label distillation in MDE models presents several issues. Specifically, the inherent normalization process in SSI loss makes the depth prediction at a given pixel not only dependent on the teacher model’s raw prediction at that location but also influenced by the depth values in other regions of the image. This becomes problematic because pseudo-labels inherently introduce noise. Even if certain local regions are predicted accurately, inaccuracies in other regions can negatively affect depth estimates after global normalization, leading to suboptimal distillation results. As shown in Fig. 2, we empirically demonstrate that normalizing depth maps globally tends to degrade the accuracy of local regions, as compared to only applying normalization within localized regions during evaluation.

尽管具有优势,但在MDE模型中使用SSI深度表示进行伪标签蒸馏仍存在若干问题。具体而言,SSI损失固有的归一化过程使得给定像素的深度预测不仅取决于教师模型在该位置的原始预测,还受到图像其他区域深度值的影响。这一问题尤为突出,因为伪标签本身会引入噪声。即使某些局部区域预测准确,其他区域的误差也会在全局归一化后对深度估计产生负面影响,导致蒸馏效果欠佳。如图2所示,我们通过实验证明,与评估时仅在局部区域应用归一化相比,全局归一化深度图往往会降低局部区域的准确性。

Building on this insight, we investigate the issue of depth normalization in pseudo-label distillation. We analyze various depth normalization strategies, including global normalization, local normalization, hybrid global-local approaches, and the absence of normalization. Through empirical experiments, we explore how each depth representation impacts the performance of different distillation designs, especially when using pseudo-labels for training.

基于这一洞见,本文研究了伪标签蒸馏中的深度归一化问题。我们分析了多种深度归一化策略,包括全局归一化、局部归一化、混合全局-局部方法以及无归一化情况。通过实证实验,探讨了每种深度表示方式如何影响不同蒸馏设计的性能,特别是在使用伪标签进行训练时。

Rather than focusing solely on pseudo-label representation, we introduce Cross-Context Distillation, a method that integrates both global and local depth cues to enhance pseudo-label quality. Our findings reveal that local regions, when used for distillation, produce pseudo-labels that capture higher-quality depth details, improving the student model’s depth estimation accuracy. However, relying solely on local regions may overlook broader contextual relationships in the image. To address this, we combine both local and global inputs within a unified distillation framework. By leveraging the context-specific advantages of local distillation alongside the broader understanding provided by global methods, our approach yields more detailed and reliable depth predictions.

我们提出的跨上下文蒸馏 (Cross-Context Distillation) 方法不仅关注伪标签表示,还整合全局与局部深度线索来提升伪标签质量。研究发现:当采用局部区域进行蒸馏时,生成的伪标签能捕捉更高质量的深度细节,从而提升学生模型的深度估计精度。但仅依赖局部区域可能会忽略图像中更广泛的上下文关联。为此,我们在统一蒸馏框架中同时结合局部与全局输入,通过融合局部蒸馏的上下文特异性优势与全局方法提供的整体理解,最终生成更精细可靠的深度预测结果。

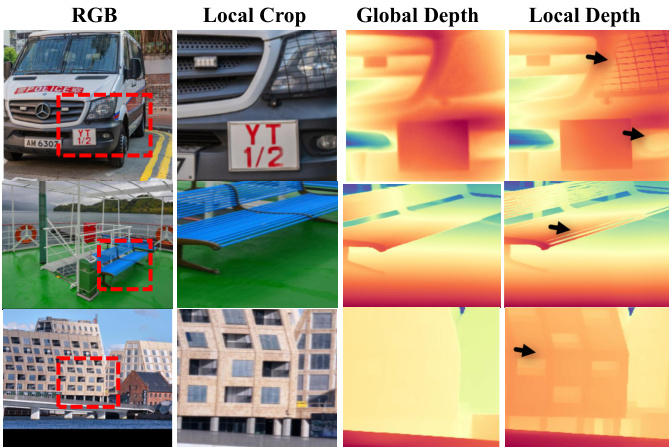

Figure 2: Issue with Global Normalization (SSI). In (a), we compare two alignment strategies for the central region of size $w/2 \times h/2$: (1) Global Least-Square, where alignment is applied to the full image before cropping, and (2) Local Least-Square, where alignment is performed on the cropped region. Metrics are computed on the cropped region. As shown in (b), the local strategy performs better, demonstrating that global normalization degrades local accuracy compared to local normalization.

图 2: 全局归一化 (SSI) 问题。在 (a) 中,我们比较了中心区域 $w/2,h/2$ 的两种对齐策略:(1) 全局最小二乘法 (Global Least-Square) ,即在裁剪前对整个图像进行对齐;(2) 局部最小二乘法 (Local Least-Square) ,即仅在裁剪区域进行对齐。指标均在裁剪区域计算。如 (b) 所示,表现更优的局部策略表明,与局部归一化相比,全局归一化会降低局部精度。

To harness the strengths of both diffusion-based and encoder-decoder models, we propose using a diffusion-based model as the teacher assistant to generate pseudo-labels, which are then used to supervise the student model. This strategy enables the student model to learn from the detailed depth information provided by diffusion-based models, while also benefiting from the precision and efficiency of encoder-decoder models.

为了结合两者的优势,我们提出使用基于扩散的模型 (diffusion-based model) 作为教师助手生成伪标签,进而监督学生模型。这一策略使学生模型既能学习扩散模型提供的细致深度信息,又能受益于编码器-解码器模型的精度和效率。

To validate the effectiveness of our design, we conduct extensive experiments on various benchmark datasets. The empirical results show that our method significantly outperforms existing baselines qualitatively and quantitatively. Our contributions can be summarized as follows: 1) We systematically analyze the role of different depth normalization strategies in pseudo-label distillation, providing insights into their effects on MDE performance. 2) To enhance the quality of pseudo-labels, we propose Cross-Context Distillation, a hybrid local-global framework that leverages fine-grained details and global depth relationships, together with a teacher assistant that harnesses the complementary strengths of diverse depth estimation models to further improve robustness and accuracy. 3) We conduct extensive experiments on benchmark datasets, demonstrating that our method outperforms state-of-the-art approaches both quantitatively and qualitatively.

为验证设计的有效性,我们在多个基准数据集上进行了广泛实验。实证结果表明,我们的方法在定性和定量层面均显著优于现有基线。主要贡献可归纳如下:

- 我们系统分析了不同深度归一化策略在伪标签蒸馏中的作用,揭示了它们对单目深度估计 (MDE) 性能的影响机制;

- 为提升伪标签质量,提出跨上下文蒸馏 (Cross-Context Distillation) 框架:通过融合局部细粒度细节与全局深度关系的混合架构,以及整合多模型优势的教师助理机制,进一步增强鲁棒性和精度;

- 在基准数据集上的大量实验表明,我们的方法在定量指标和视觉效果上均超越现有最优方法。

2. Related Work

2. 相关工作

2.1. Monocular Depth Estimation

2.1. 单目深度估计

Monocular depth estimation (MDE) has evolved from hand-crafted methods to deep learning, significantly improving accuracy [11, 29, 12, 16, 62, 38]. Architectural refinements, such as multi-scale designs and attention mechanisms, have further enhanced feature extraction [21, 6, 61]. However, most models remain reliant on labeled data and struggle to generalize across diverse environments. Zero-shot MDE improves generalization by leveraging large-scale datasets, geometric constraints, and multi-task learning [37, 55, 57, 60, 58]. Metric depth estimation incorporates intrinsic data for absolute depth learning [2, 56, 22, 35, 46, 4], while generative models such as Marigold refine depth details using diffusion priors [24, 49, 17, ?]. Despite these advances, effectively utilizing unlabeled data remains a challenge due to pseudo-label noise and inconsistencies across different contexts. Depth Anything [51] explores large-scale unlabeled data but struggles with pseudo-label reliability. Patch Fusion [9, 32] improves depth estimation by refining high-resolution image representations but lacks adaptability in generative settings. To address these issues, we propose Cross-Context and Multi-Teacher Distillation, which enhances pseudo-label supervision by leveraging diverse contextual information and multiple expert models, improving both accuracy and generalization ability.

单目深度估计 (MDE) 已从手工设计方法发展为深度学习技术,显著提升了精度 [11, 29, 12, 16, 62, 38]。多尺度设计和注意力机制等架构改进进一步增强了特征提取能力 [21, 6, 61]。然而,大多数模型仍依赖标注数据,难以适应多样化环境。零样本MDE通过利用大规模数据集、几何约束和多任务学习提升了泛化能力 [37, 55, 57, 60, 58]。度量深度估计结合内禀数据实现绝对深度学习 [2, 56, 22, 35, 46, 4],而Marigold等生成式模型则利用扩散先验优化深度细节 [24, 49, 17, ?]。尽管取得这些进展,由于伪标签噪声和跨场景不一致性,未标注数据的有效利用仍是挑战。Depth Anything [51] 探索了大规模未标注数据,但受限于伪标签可靠性。Patch Fusion [9, 32] 通过优化高分辨率图像表示改进了深度估计,但缺乏生成式场景的适应性。为解决这些问题,我们提出跨上下文多教师蒸馏框架,通过整合多样化上下文信息和多专家模型来增强伪标签监督,同时提升精度与泛化能力。

2.2. Semi-supervised Monocular Depth Estimation

2.2. 半监督单目深度估计

Semi-supervised depth estimation has garnered increasing attention, primarily leveraging temporal consistency to utilize unlabeled data more effectively [28, 19]. Some approaches [1, 44, 7, 53, 15] integrate stereo geometric constraints, enforcing left-right consistency in stereo video to enhance depth accuracy. Others incorporate additional supervision, such as semantic priors [36, 20] or generative adversarial networks (GANs). For instance, DepthGAN [23] refines depth predictions through adversarial learning. However, these methods often rely on temporal cues, stereo constraints, or other auxiliary information, limiting their applicability to broader and more general scenarios. Recent work [34] has explored pseudo-labeling for semi-supervised monocular depth estimation (MDE), but it lacks generative modeling capabilities, restricting its generalization across diverse environments. Depth Anything [50] demonstrates the effectiveness of large-scale unlabeled data in improving generalization; however, pseudo-label reliability remains a challenge. In contrast, our approach focuses on single-image depth estimation, improving pseudo-label reliability and maximizing its effectiveness. By relying solely on unlabeled data without additional constraints, our method achieves a more accurate and generalizable MDE model.

半监督深度估计日益受到关注,主要通过利用时序一致性来更有效地使用未标注数据 [28, 19]。部分方法 [1, 44, 7, 53, 15] 融合立体几何约束,通过强制立体视频的左右一致性来提升深度精度。另一些研究引入额外监督信息,例如语义先验 [36, 20] 或生成对抗网络 (GAN)。例如 DepthGAN [23] 通过对抗学习优化深度预测。但这些方法通常依赖时序线索、立体约束或其他辅助信息,限制了其在更广泛通用场景中的应用。近期工作 [34] 探索了半监督单目深度估计 (MDE) 的伪标签技术,但缺乏生成建模能力,制约了其跨环境泛化性能。Depth Anything [50] 证明了大规模未标注数据对提升泛化能力的有效性,但伪标签可靠性仍是挑战。相比之下,我们的方法聚焦单图像深度估计,通过提升伪标签可靠性并最大化其效用,仅依赖未标注数据而无须额外约束,实现了更精准且可泛化的 MDE 模型。

3. Method

3. 方法

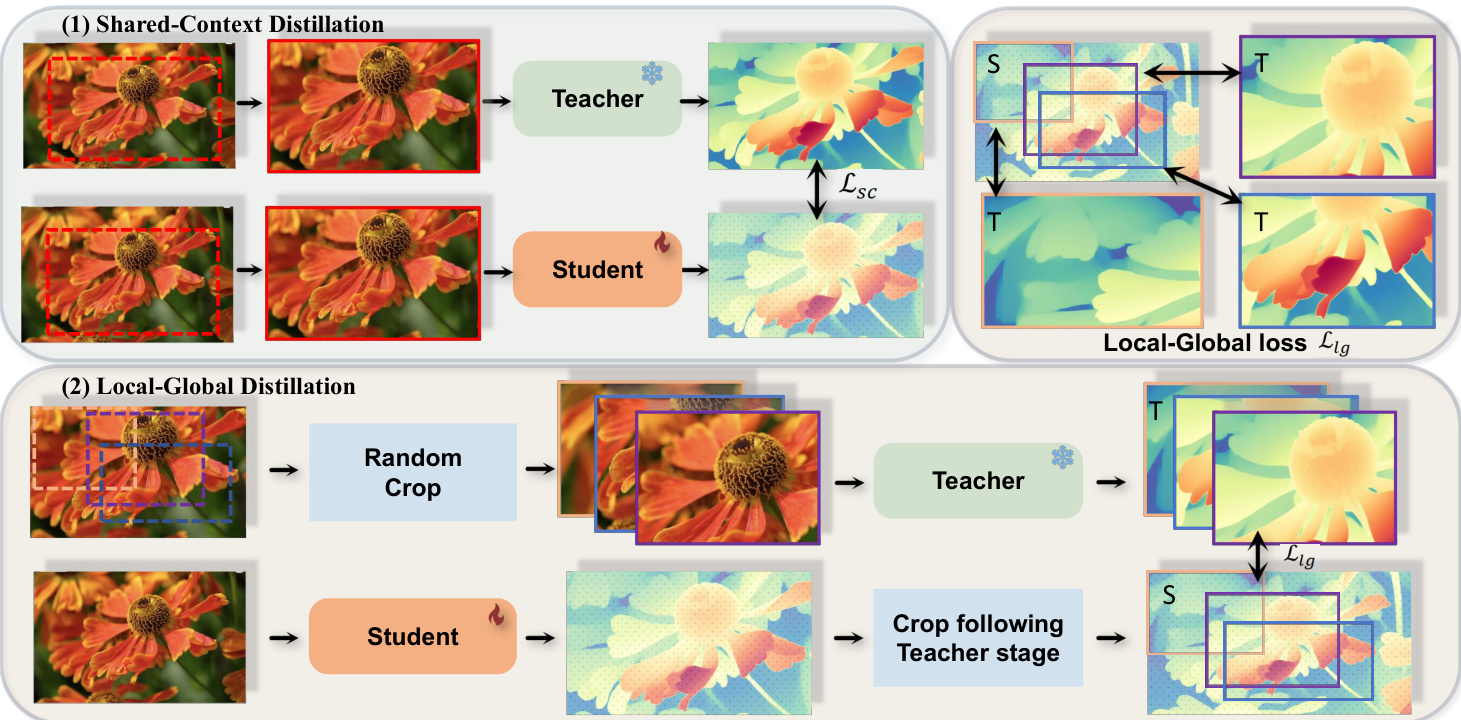

In this section, we introduce a novel distillation framework designed to leverage unlabeled images for training zero-shot Monocular Depth Estimation (MDE) models. We begin by exploring various depth normalization techniques in Section 3.1, followed by detailing our proposed distillation method in Section 3.2, which combines predictions across multiple contexts. The overall framework is illustrated in Fig. 3. Finally, we introduce an assistant-guided distillation scheme, in which a diffusion-based model acts as an auxiliary teacher to provide additional supervision for student training.

在本节中,我们介绍了一种新颖的蒸馏框架,旨在利用未标注图像训练零样本单目深度估计 (Monocular Depth Estimation, MDE) 模型。我们首先在第3.1节探讨多种深度归一化技术,随后在第3.2节详细阐述我们提出的结合多上下文预测的蒸馏方法。整体框架如图3所示。最后,我们提出了一种辅助引导的蒸馏方案,其中基于扩散 (diffusion) 的模型作为辅助教师,为学生训练提供额外监督。

3.1. Depth Normalization

3.1. 深度归一化

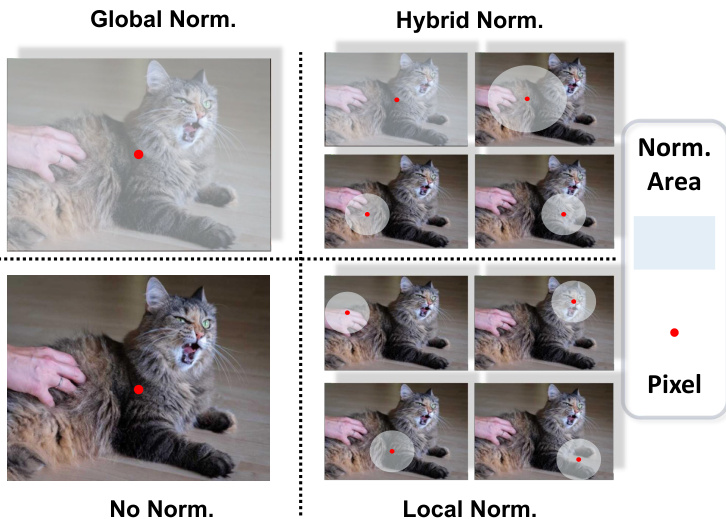

Depth normalization is a crucial component of our framework as it adjusts the pseudo-depth labels ${\bf d}^{t}$ from the teacher model and the depth predictions $\mathbf{d}^{s}$ from the student model for effective loss computation. To understand the influence of normalization techniques on distillation performance, we systematically analyze several approaches commonly employed in prior works. These strategies are visually illustrated in Fig. 4.

深度归一化是我们框架中的关键组成部分,它调整来自教师模型的伪深度标签 ${\bf d}^{t}$ 和学生模型的深度预测 $\mathbf{d}^{s}$,以实现有效的损失计算。为了理解归一化技术对蒸馏性能的影响,我们系统分析了先前工作中常用的几种方法。这些策略在图4中进行了直观展示。

Global Normalization: The first strategy we examine is the global normalization [50, 51] used in recent distillation methods. Global normalization [37] adjusts depth predictions using global statistics of the entire depth map. This strategy aims to ensure scale-and-shift invariance by normalizing depth values based on the median and mean absolute deviation of the depth map. For each pixel $i$ , the normalized depth for the student model and pseudo-labels are computed as:

全局归一化 (Global Normalization) : 我们研究的第一个策略是近期蒸馏方法中采用的全局归一化 [50, 51] 。全局归一化 [37] 通过利用整个深度图的全局统计量来调整深度预测。该策略旨在通过基于深度图的中位数和平均绝对偏差对深度值进行归一化,从而确保尺度平移不变性。对于每个像素 $i$ ,学生模型和伪标签的归一化深度计算如下:

$$

\begin{aligned}
\tilde{d}_{i}^{s} &= \mathcal{N}_{\mathrm{glo}}(\mathbf{d}^{s}) = \frac{d_{i}^{s}-\mathrm{med}(\mathbf{d}^{s})}{\frac{1}{M}\sum_{j=1}^{M}\left|d_{j}^{s}-\mathrm{med}(\mathbf{d}^{s})\right|}, \\
\tilde{d}_{i}^{t} &= \mathcal{N}_{\mathrm{glo}}(\mathbf{d}^{t}) = \frac{d_{i}^{t}-\mathrm{med}(\mathbf{d}^{t})}{\frac{1}{M}\sum_{j=1}^{M}\left|d_{j}^{t}-\mathrm{med}(\mathbf{d}^{t})\right|},
\end{aligned}

$$

where ${\mathrm{med}}(\mathbf{d}^{s})$ and $\mathrm{med}(\mathbf{d}^{t})$ are the medians of the predicted depth and pseudo depth, respectively, and $M$ is the number of valid pixels. The final regression loss for distillation is computed as the average absolute difference between the normalized predicted depth and the normalized pseudo depth across all $M$ valid pixels:

其中 ${\mathrm{med}}(\mathbf{d}^{s})$ 和 $\mathrm{med}(\mathbf{d}^{t})$ 分别是预测深度和伪深度的中位数。最终的蒸馏回归损失计算为所有有效像素 $M$ 上归一化预测深度与归一化伪深度之间的平均绝对差:

$$

\mathcal{L}_{\mathrm{Dis}}=\frac{1}{M}\sum_{i=1}^{M}\left|\tilde{d}_{i}^{s}-\tilde{d}_{i}^{t}\right|.

$$
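As a minimal sketch of global normalization and the resulting distillation loss, the snippet below treats each depth map as a flat list of valid pixels. The function names and the small epsilon guard are illustrative assumptions, not part of the original formulation.

```python
from statistics import median


def normalize_global(depth):
    """Median / mean-absolute-deviation normalization over all valid pixels."""
    med = median(depth)
    mad = sum(abs(d - med) for d in depth) / len(depth)
    mad = max(mad, 1e-6)  # guard against constant depth maps (an added safeguard)
    return [(d - med) / mad for d in depth]


def distill_loss_global(student, teacher):
    """Mean absolute difference between globally normalized depth maps."""
    s_norm = normalize_global(student)
    t_norm = normalize_global(teacher)
    return sum(abs(a - b) for a, b in zip(s_norm, t_norm)) / len(student)


teacher = [1.0, 2.0, 3.0, 4.0, 10.0]
student = [3.0 * d + 5.0 for d in teacher]  # same structure, different scale and shift
loss = distill_loss_global(student, teacher)

# A single noisy pseudo-label changes the normalized value of every other pixel.
teacher_noisy = [1.0, 2.0, 3.0, 4.0, 100.0]
first_clean = normalize_global(teacher)[0]
first_noisy = normalize_global(teacher_noisy)[0]
```

The loss vanishes for any student that matches the teacher up to scale and shift, while the last two lines illustrate the coupling discussed in the introduction: perturbing one pseudo-label alters the normalized target at an unrelated pixel.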

Hybrid Normalization: In contrast to global normalization, Hierarchical Depth Normalization [59] employs a hybrid normalization approach by integrating both global and local depth information. This strategy is designed to preserve both the global structure and local geometry in the depth map. The process begins by dividing the depth range into $S$ segments, where $S$ is selected from $\{1,2,4\}$. When $S=1$, the entire depth range is normalized globally, treating all pixels as part of a single context, akin to global normalization. In the case of $S=2$, the depth range is divided into two segments, with each pixel being normalized within one of these two local contexts. Similarly, for $S=4$, the depth range is split into four segments, allowing normalization to be performed within smaller, localized contexts. By adapting the normalization process to multiple levels of granularity, hybrid normalization achieves a balance between global coherence and local adaptability. For each context $u$, the normalized depth values for the student model $\mathcal{N}_{u}(d_{i}^{s})$ and pseudo-labels $\mathcal{N}_{u}(d_{i}^{t})$ are calculated within the corresponding depth range. The loss for each pixel $i$ is then computed by averaging the losses across all contexts $U_{i}$ to which the pixel belongs:

混合归一化 (Hybrid Normalization):与全局归一化不同,分层深度归一化 [59] 采用了一种混合归一化方法,通过整合全局和局部深度信息来实现。该策略旨在同时保留深度图中的全局结构和局部几何特征。该过程首先将深度范围划分为 $S$ 段,其中 $S$ 选自 $\{1,2,4\}$。当 $S=1$ 时,整个深度范围被全局归一化,将所有像素作为单个上下文的一部分,类似于全局归一化。当 $S=2$ 时,深度范围被划分为两个部分,每个像素在这两个局部上下文之一中进行归一化。类似地,当 $S=4$ 时,深度范围被分成四个部分,使得归一化可以在更小的局部上下文中进行。通过将归一化过程适应于多粒度级别,混合归一化实现了全局一致性和局部适应性之间的平衡。对于每个上下文 $u$,学生模型的归一化深度值 $\mathcal{N}_{u}(d_{i}^{s})$ 和伪标签 $\mathcal{N}_{u}(d_{i}^{t})$ 在相应的深度范围内计算。然后,对于每个像素 $i$,其损失通过平均该像素所属的所有上下文 $U_{i}$ 的损失来计算:

$$

\mathcal{L}_{\mathrm{Dis}}^{i}=\frac{1}{|U_{i}|}\sum_{u\in U_{i}}\left|\mathcal{N}_{u}(d_{i}^{s})-\mathcal{N}_{u}(d_{i}^{t})\right|,

$$

where $|U_{i}|$ denotes the total number of groups (or contexts) that pixel $i$ is associated with. To obtain the final loss ${\mathcal{L}}_{\mathrm{Dis}}$, we average the pixel-wise losses across all $M$ valid pixels:

其中 $|U_{i}|$ 表示像素 $i$ 关联的组(或上下文)总数。为计算最终损失 ${\mathcal{L}}_{\mathrm{Dis}}$,我们对所有 $M$ 个有效像素的逐像素损失取平均值:

$$

\mathcal{L}_{\mathrm{Dis}}=\frac{1}{M}\sum_{i=1}^{M}\mathcal{L}_{\mathrm{Dis}}^{i}.

$$

Figure 3: Overview of Cross-Context Distillation. Our method combines local and global depth information to enhance the student model’s predictions. It includes two scenarios: (1) Shared-Context Distillation, where both models use the same image for distillation; and (2) Local-Global Distillation, where the teacher predicts depth for overlapping patches while the student predicts the full image. The Local-Global loss $\mathcal{L}_{\mathrm{lg}}$ (Top Right) ensures consistency between local and global predictions, enabling the student to learn both fine details and broad structures, improving accuracy and robustness.

图 3: 跨上下文蒸馏概述。我们的方法结合局部和全局深度信息来提升学生模型的预测能力。包含两种场景:(1) 共享上下文蒸馏,两个模型使用相同图像进行蒸馏;(2) 局部-全局蒸馏,教师模型预测重叠图像块的深度,而学生模型预测完整图像。局部-全局损失 $\mathcal{L}_{\mathrm{lg}}$ (右上) 确保局部与全局预测的一致性,使学生模型既能学习精细细节又能掌握整体结构,从而提高准确性和鲁棒性。

Figure 4: Normalization Strategies. We compare four normalization strategies: Global Norm [37], Hybrid Norm [59], Local Norm, and No Norm. The figure visualizes how each strategy processes pixels within the normalization region (Norm. Area). The red dot represents any pixel within the region.

图 4: 归一化策略对比。我们比较了四种归一化策略:全局归一化 (Global Norm) [37]、混合归一化 (Hybrid Norm) [59]、局部归一化 (Local Norm) 以及无归一化 (No Norm)。该图展示了每种策略如何处理归一化区域 (Norm. Area) 内的像素,其中红点代表该区域内的任意像素。

Local Normalization: In addition to global and hybrid normalization, we investigate Local Normalization, a strategy that focuses exclusively on the finest-scale groups used in hybrid normalization. This approach isolates the smallest local contexts for normalization, emphasizing the preservation of fine-grained depth details without considering hierarchical or global scales. Local normalization operates by dividing the depth range into the smallest groups, corresponding to $S=4$ in the hybrid normalization framework, and each pixel is normalized within its local context. The loss for each pixel $i$ is computed using a similar formulation as in hybrid normalization, but with $u^{i}$ now representing the local context for pixel $i$ , defined by the smallest four-part group:

局部归一化 (Local Normalization):除了全局和混合归一化外,我们还研究了局部归一化策略,该方法仅专注于混合归一化中使用的最细尺度分组。该策略通过隔离最小局部上下文进行归一化,强调保留细粒度深度细节,而不考虑层次或全局尺度。局部归一化将深度范围划分为最小分组(对应混合归一化框架中的 $S=4$),每个像素在其局部上下文中进行归一化。每个像素 $i$ 的损失计算采用与混合归一化相似的公式,但此处 $u^{i}$ 表示像素 $i$ 的最小四分组所定义的局部上下文:

$$

\mathcal{L}_{\mathrm{Dis}}=\frac{1}{M}\sum_{i=1}^{M}\left|\mathcal{N}_{u^{i}}(d_{i}^{s})-\mathcal{N}_{u^{i}}(d_{i}^{t})\right|.

$$
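The hybrid and local variants can be sketched with one helper that averages the normalized error over a chosen set of segment counts. This is an illustration under stated assumptions: depth maps are flat lists, and contexts are formed by splitting pixels into equally sized groups by teacher depth rank, which is only one plausible reading of "dividing the depth range"; the binning in [59] may differ. All function names are hypothetical.

```python
from statistics import median


def normalize_in_context(depth, idx):
    """Median / MAD normalization using only the pixels listed in idx."""
    vals = [depth[i] for i in idx]
    med = median(vals)
    mad = max(sum(abs(v - med) for v in vals) / len(vals), 1e-6)
    return {i: (depth[i] - med) / mad for i in idx}


def depth_range_contexts(teacher, num_segments):
    """Split pixel indices into groups by teacher depth rank (assumed binning).

    Requires at least num_segments pixels so that no group is empty.
    """
    order = sorted(range(len(teacher)), key=lambda i: teacher[i])
    size = len(order) // num_segments
    groups = []
    for k in range(num_segments):
        end = (k + 1) * size if k < num_segments - 1 else len(order)
        groups.append(order[k * size:end])
    return groups


def distill_loss_contexts(student, teacher, segment_counts):
    """Average, per pixel, the normalized L1 error over every context containing it."""
    per_pixel = [0.0] * len(teacher)
    for s in segment_counts:
        for idx in depth_range_contexts(teacher, s):
            s_norm = normalize_in_context(student, idx)
            t_norm = normalize_in_context(teacher, idx)
            for i in idx:
                per_pixel[i] += abs(s_norm[i] - t_norm[i])
    # Each pixel falls in exactly one group per segment count, so |U_i| = len(segment_counts).
    return sum(v / len(segment_counts) for v in per_pixel) / len(teacher)


teacher = [float(d) for d in range(1, 17)]
student = [2.0 * d + 1.0 for d in teacher]
hybrid = distill_loss_contexts(student, teacher, (1, 2, 4))  # hybrid: S in {1, 2, 4}
local = distill_loss_contexts(student, teacher, (4,))        # local: finest groups only

student_off = list(student)
student_off[0] += 1.0  # a deviation that is not a pure scale/shift change
local_off = distill_loss_contexts(student_off, teacher, (4,))
```

Passing `(1,)` recovers global normalization, so the three normalized strategies differ only in which contexts each pixel is averaged over.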

No Normalization: As a baseline, we also consider a direct depth regression approach with no explicit normalization. The absolute difference between raw student predictions and teacher pseudo-labels is used for loss computation:

无归一化:作为基线,我们同时考虑了一种不进行显式归一化的直接深度回归方法。使用原始学生预测值与教师伪标签之间的绝对差值进行损失计算:

$$

\mathcal{L}_{\mathrm{Dis}}=\frac{1}{M}\sum_{i=1}^{M}\left|d_{i}^{s}-d_{i}^{t}\right|.

$$

This approach eliminates the need for normalization, assuming pseudo-depth labels naturally reside in the same domain as predictions. It provides insight into whether normalization enhances distillation effectiveness or if raw depth supervision suffices.

该方法无需归一化处理,假设伪深度标签与预测值自然处于同一域中。这一思路揭示了归一化是否能提升蒸馏效果,抑或原始深度监督已足够有效。

3.2. Distillation Pipeline

3.2. 蒸馏流程

In this section, we introduce an enhanced distillation pipeline that integrates two complementary strategies: Cross-Context Distillation and assistant-guided distillation. Both strategies aim to improve the quality of pseudo-label distillation and enhance the model’s fine-grained perception.

在本节中,我们介绍一种融合两种互补策略的增强蒸馏流程:跨上下文蒸馏 (Cross-Context Distillation) 和助手引导蒸馏。这两种策略旨在提升伪标签蒸馏质量,增强模型的细粒度感知能力。

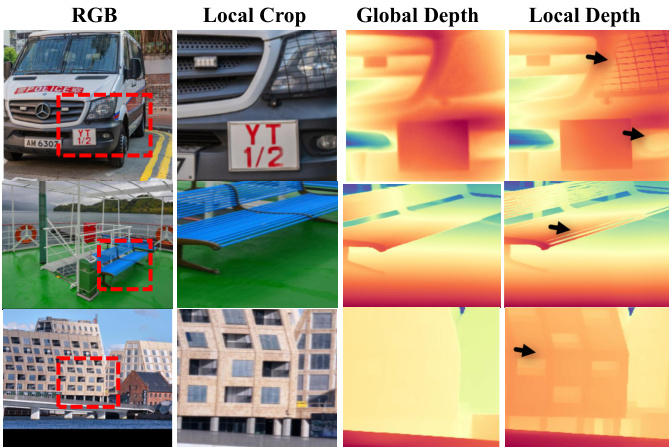

Cross-context Distillation. A key challenge in monocular depth distillation is the trade-off between local detail preservation and global depth consistency. As shown in Fig. 5, providing a local crop of an image as input to the teacher model enhances fine-grained details in the pseudo-depth labels, but it may fail to capture the overall scene structure. Conversely, using the entire image as input preserves the global depth structure but often lacks fine details. To address this limitation, we propose Cross-Context Distillation, a method that enables the student model to learn both local details and global structures simultaneously. Cross-context distillation consists of two key strategies:

跨上下文蒸馏。单目深度蒸馏的一个关键挑战在于局部细节保留与全局深度一致性之间的权衡。如图 5 所示,向教师模型提供图像局部裁剪作为输入能增强伪深度标签中的细粒度细节,但可能无法捕捉整体场景结构;反之,使用完整图像作为输入能保持全局深度结构,但往往缺失精细细节。为解决这一局限,我们提出跨上下文蒸馏方法,使学生模型能同时学习局部细节与全局结构。该方法包含两个核心策略:

- Shared-Context Distillation: In this setup, both the teacher and student models receive the same cropped region of the image as input. Instead of using the full image, we randomly sample a local patch of varying size from the original image and provide it as input to both models. This encourages the student model to learn from the teacher model across different spatial contexts, improving its ability to generalize to varying scene structures. For the loss of shared-context distillation, the teacher and student models receive identical inputs and each produce a depth prediction, denoted as $\mathbf{d}_{\mathrm{local}}^{t}$ and $\mathbf{d}_{\mathrm{local}}^{s}$, respectively:

- 共享上下文蒸馏 (Shared-Context Distillation):在该设置中,教师模型和学生模型接收相同的图像裁剪区域作为输入。我们不使用完整图像,而是从原始图像中随机采样不同尺寸的局部图块,将其作为两个模型的共同输入。这种方法促使学生模型能够从教师模型在不同空间上下文中的表现进行学习,从而提升其对多样化场景结构的泛化能力。对于共享上下文蒸馏的损失计算,教师模型和学生模型接收相同输入并分别生成深度预测结果,记为 $\mathbf{d}_{\mathrm{local}}^{t}$ 和 $\mathbf{d}_{\mathrm{local}}^{s}$:

Figure 5: Different Inputs Lead to Different Pseudo Labels. Global Depth: The teacher model predicts depth using the entire image, and the local region’s prediction is cropped from the output. Local Depth: The teacher model directly takes the cropped local region as input, resulting in more refined and detailed depth estimates for that area, capturing finer details compared to using the entire image.

图 5: 不同输入导致不同伪标签。全局深度: 教师模型使用整张图像预测深度,局部区域的预测结果从输出中裁剪获得。局部深度: 教师模型直接以裁剪后的局部区域作为输入,从而得到该区域更精细的深度估计,相比使用整张图像能捕捉更细微的细节。

$$

\mathcal{L}_{\mathrm{sc}}=\mathcal{L}_{\mathrm{Dis}}\left(\mathbf{d}_{\mathrm{local}}^{s},\mathbf{d}_{\mathrm{local}}^{t}\right).

$$

This loss encourages the student model to refine its fine-grained predictions by directly aligning with the teacher’s outputs at local scales.

该损失函数通过促使学生模型在局部尺度上与教师模型的输出直接对齐,来优化其细粒度预测。

- Local-Global Distillation: In this approach, the teacher and student models operate on different input contexts. The teacher model processes local cropped regions, generating fine-grained depth predictions, while the student model predicts a global depth map from the entire image. To ensure knowledge transfer, the teacher’s local depth predictions supervise the corresponding overlapping regions in the student’s global depth map. This strategy allows the student to integrate fine-grained local details into its holistic depth estimation. Formally, the teacher model produces multiple depth predictions for cropped regions, denoted as $\mathbf{d}_{\mathrm{local}_{n}}^{t}$, while the student generates a global depth map, $\mathbf{d}_{\mathrm{global}}^{s}$. The loss for Local-Global distillation is computed only over overlapping areas between the teacher’s local predictions and the corresponding regions in the student’s global depth map:

- 局部-全局蒸馏 (Local-Global Distillation): 该方法中教师模型和学生模型处理不同输入上下文。教师模型处理局部裁剪区域生成细粒度深度预测,而学生模型从整张图像预测全局深度图。为实现知识迁移,教师模型的局部深度预测会监督学生模型全局深度图中对应的重叠区域。该策略使学生模型能将细粒度的局部细节整合到其整体深度估计中。形式上,教师模型为裁剪区域生成多个深度预测,记为 $\mathbf{d}_{\mathrm{local}_{n}}^{t}$,而学生模型生成全局深度图 $\mathbf{d}_{\mathrm{global}}^{s}$。局部-全局蒸馏的损失仅计算教师局部预测与学生全局深度图对应区域之间的重叠部分:

$$

\mathcal{L}_{\mathrm{lg}}=\frac{1}{N}\sum_{n=1}^{N}\mathcal{L}_{\mathrm{Dis}}\left(\mathrm{Crop}(\mathbf{d}_{\mathrm{global}}^{s}),\mathbf{d}_{\mathrm{local}_{n}}^{t}\right),

$$

where $\mathrm{{Crop}(\cdot)}$ extracts the overlapping region from the student’s depth prediction, and $N$ is the total number of sampled patches. This loss ensures that the student benefits from the detailed local supervision of the teacher model while maintaining global depth consistency. The total loss function integrates both local and cross-context losses along with additional constraints, including feature alignment and gradient preservation, as proposed in prior works [51]:

其中 $\mathrm{{Crop}(\cdot)}$ 从学生模型的深度预测中提取重叠区域,$N$ 为采样块的总数。该损失函数确保学生模型既能受益于教师模型的细粒度局部监督,又能保持全局深度一致性。总损失函数整合了局部损失、跨上下文损失以及特征对齐和梯度保留等附加约束,如先前研究 [51] 所提出的:

$$

\mathcal{L}_{\mathrm{total}}=\mathcal{L}_{\mathrm{sc}}+\lambda_{1}\cdot\mathcal{L}_{\mathrm{lg}}+\lambda_{2}\cdot\mathcal{L}_{\mathrm{feat}}+\lambda_{3}\cdot\mathcal{L}_{\mathrm{grad}}.

$$

Here, $\lambda_{1},\lambda_{2}$ , and $\lambda_{3}$ are weighting factors that balance the different loss components. By incorporating cross-context supervision, this framework effectively allows the student model to integrate both fine-grained details from local crops and structural coherence from global depth maps.

这里,$\lambda_{1}$、$\lambda_{2}$ 和 $\lambda_{3}$ 是用于平衡不同损失分量的权重因子。通过引入跨上下文监督,该框架有效地使学生模型能够整合来自局部裁剪的细粒度细节和全局深度图的结构一致性。
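The two cross-context terms can be sketched as follows. This is a simplified illustration rather than the training code: images and depth maps are nested lists, `student` and `teacher` are hypothetical callables that map an image to a depth map of the same size, the plain L1 loss stands in for $\mathcal{L}_{\mathrm{Dis}}$ (the no-normalization variant), resizing of crops is omitted, and the feature-alignment and gradient terms are left out.

```python
def crop(image, top, left, size):
    """Extract a square size x size window from a 2D list."""
    return [row[left:left + size] for row in image[top:top + size]]


def l1_loss(pred, target):
    """Mean absolute difference between two 2D depth maps of equal shape."""
    flat_p = [v for row in pred for v in row]
    flat_t = [v for row in target for v in row]
    return sum(abs(p - t) for p, t in zip(flat_p, flat_t)) / len(flat_p)


def cross_context_loss(image, student, teacher, shared_box, local_boxes, lam_lg=0.5):
    """Return L_sc + lam_lg * L_lg for one image; boxes are (top, left, size)."""
    # Shared-context: teacher and student both see the same crop.
    top, left, size = shared_box
    patch = crop(image, top, left, size)
    loss_sc = l1_loss(student(patch), teacher(patch))

    # Local-global: the student sees the full image, the teacher sees each local crop.
    d_global = student(image)
    loss_lg = 0.0
    for top, left, size in local_boxes:
        d_local_t = teacher(crop(image, top, left, size))
        loss_lg += l1_loss(crop(d_global, top, left, size), d_local_t)
    loss_lg /= len(local_boxes)
    return loss_sc + lam_lg * loss_lg


# Toy per-pixel "models" so the example runs without any network.
def teacher(img):
    return [[2.0 * v for v in row] for row in img]


def student(img):
    return [[2.0 * v + 1.0 for v in row] for row in img]


image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
total = cross_context_loss(image, student, teacher,
                           shared_box=(1, 1, 2),
                           local_boxes=[(0, 0, 2), (1, 1, 2), (2, 2, 2)])
```

With these toy models the student is offset from the teacher by exactly one unit everywhere, so both terms equal 1 and the weighted sum reflects only `lam_lg`.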

Assistant-Guided Distillation. In addition to cross-context distillation, we propose an assistant-guided distillation strategy to further enhance the quality and robustness of the distilled depth knowledge. This approach pairs a primary teacher [51] with a single auxiliary assistant, selected as a diffusion-based depth estimator [49], which leverages generative priors to complement the primary teacher’s predictions. This design exploits their complementary strengths: the primary teacher excels in providing efficient and globally consistent supervision, while the assistant offers fine-grained depth cues derived from its generative modeling capabilities. By drawing supervision from two distinct architectures trained with different paradigms (e.g., optimization strategies or data distributions), the student benefits from diverse knowledge sources, effectively mitigating biases and limitations inherent to a single teacher model. Formally, let $\mathcal{M}$ and $\mathcal{M}_{a}$ denote the primary and assistant models, respectively. During training, a probabilistic sampling mechanism determines whether supervision for each iteration is drawn from $\mathcal{M}$ or $\mathcal{M}_{a}$. This stochastic guidance encourages the student to adapt to multiple supervision styles, fostering richer and more generalizable depth representations. Overall, this assistant-guided scheme introduces complementary and diversified pseudo-labels, reducing over-reliance on any single teacher and improving both generalization and depth estimation performance.

助理引导蒸馏。除了跨上下文蒸馏外,我们提出了一种助理引导蒸馏策略,以进一步提升蒸馏深度知识的质量和鲁棒性。该方法将主教师模型[51]与单个辅助助理(选用基于扩散的深度估计器[49])配对,利用生成先验来补充主教师的预测。这种设计结合了二者的互补优势:主教师擅长提供高效且全局一致的监督,而助理则通过其生成建模能力提供细粒度的深度线索。通过从采用不同训练范式(如优化策略或数据分布)的两种架构中获取监督信号,学生模型能够受益于多样化的知识来源,有效缓解单一教师模型固有的偏差和局限性。形式上,设$\mathcal{M}$和$\mathcal{M}_{a}$分别表示主模型和助理模型。训练过程中采用概率采样机制决定每次迭代的监督信号来自$\mathcal{M}$还是$\mathcal{M}_{a}$。这种随机引导机制促使学生模型适应多种监督风格,从而形成更丰富、更具泛化能力的深度表征。总体而言,该助理引导方案通过引入互补且多样化的伪标签,降低了对单一教师的过度依赖,同时提升了泛化能力和深度估计性能。
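The per-iteration choice between $\mathcal{M}$ and $\mathcal{M}_{a}$ can be sketched as a Bernoulli draw. The function name `pick_teacher` and its default probability are illustrative assumptions; the default of 0.7 mirrors the 7:3 ratio reported in the implementation details.

```python
import random


def pick_teacher(primary, assistant, p_primary=0.7, rng=random):
    """Return the model that supervises the current iteration."""
    return primary if rng.random() < p_primary else assistant


rng = random.Random(0)  # seeded so the example is reproducible
draws = [pick_teacher("primary", "assistant", rng=rng) for _ in range(10000)]
primary_fraction = draws.count("primary") / len(draws)
```

Over many iterations the primary teacher supervises roughly 70% of the updates, with the diffusion-based assistant supplying the remainder.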

4. Experiment

4. 实验

4.1. Experimental Settings

4.1. 实验设置

Datasets. We train our proposed distillation framework on a subset of 200,000 unlabeled images from the SA-1B dataset [26], following the training protocol of Depth Anything v2 [51]. For evaluation, we assess the distilled student model on five widely used depth estimation benchmarks. All test datasets are kept unseen during training, enabling a zero-shot evaluation of generalization performance. The chosen benchmarks include: NYUv2 [43], KITTI [14], ETH3D [42], ScanNet [8], and DIODE [45]. Additional dataset details are provided in the Appendix.

数据集。我们按照DepthAnythingv2 [51]的训练方案,在SA-1B数据集[26]的20万张无标注图像子集上训练所提出的蒸馏框架。为评估性能,我们在五个广泛使用的深度估计基准上测试蒸馏后的学生模型,所有测试数据集在训练阶段均保持不可见状态,从而实现泛化性能的零样本评估。所选基准包括:NYUv2 [43]、KITTI [14]、ETH3D [42]、ScanNet [8]和DIODE [45]。更多数据集细节见附录。

Metrics. We assess depth estimation performance using two key metrics: the mean absolute relative error (AbsRel) and $\delta_{1}$ accuracy. Following previous studies [37, 56, 24] on zero-shot MDE, we align predictions with ground truth in both scale and shift before evaluation.

指标。我们使用两个关键指标评估深度估计性能:平均绝对相对误差 (AbsRel) 和 $\delta_{1}$ 准确率。遵循零样本 MDE 相关研究 [37, 56, 24] 的做法,我们在评估前将预测结果与真实值进行尺度和偏移对齐。
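The evaluation protocol above can be sketched in a few lines. This is an illustrative sketch under stated assumptions: depth maps are flattened to lists of valid, strictly positive ground-truth values, the alignment is an ordinary least-squares fit of scale and shift, and $\delta_{1}$ uses the conventional threshold of 1.25. Whether alignment is performed in depth or disparity space is not stated in this section, so the choice here is an assumption, and the function names are hypothetical.

```python
def align_scale_shift(pred, gt):
    """Least-squares fit of scale s and shift t so that s * pred + t ~ gt."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_g = sum(gt) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    if var_p == 0:
        raise ValueError("constant prediction: scale is undefined")
    cov = sum((p - mean_p) * (g - mean_g) for p, g in zip(pred, gt))
    scale = cov / var_p
    shift = mean_g - scale * mean_p
    return [scale * p + shift for p in pred]


def abs_rel(pred, gt):
    """Mean absolute relative error: mean(|pred - gt| / gt)."""
    return sum(abs(p - g) / g for p, g in zip(pred, gt)) / len(gt)


def delta1(pred, gt, threshold=1.25):
    """Fraction of pixels with max(pred / gt, gt / pred) below the threshold."""
    hits = sum(
        1 for p, g in zip(pred, gt)
        if p > 0 and max(p / g, g / p) < threshold  # non-positive pred counts as a miss
    )
    return hits / len(gt)


# Toy example: the prediction is an affine transform of the ground truth.
gt = [1.0, 2.0, 4.0, 8.0]
pred = [(g - 0.5) / 2.0 for g in gt]
aligned = align_scale_shift(pred, gt)
print(abs_rel(pred, gt), abs_rel(aligned, gt), delta1(aligned, gt))
```

Because the toy prediction differs from the ground truth only by a scale and a shift, alignment removes the error almost entirely, which is why relative-depth models are compared after this step.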

Implementation. Our experiments use state-of-the-art monocular depth estimation models as teachers to generate pseudo-labels, supervising various student models in a distillation framework with only RGB images as input. In shared-context distillation, both teacher and student receive the same global region, extracted via random cropping from the original image. The crop maintains a 1:1 aspect ratio, with its side length sampled between 644 pixels and the shortest side of the image, and is then resized to $560\times560$ for prediction. In global-local distillation, the global region is cropped into overlapping local patches, each sized $560\times560$, for the teacher model to predict pseudo-labels. For assistant-guided distillation, we adopt a probabilistic sampling strategy in which the primary teacher and the assistant model are selected with a ratio of 7:3, respectively. We train our best student model using the distillation pipeline for 20,000 iterations with a batch size of 8 on a single NVIDIA V100 GPU, initialized with pre-trained DAv2-Large weights. The learning rate follows that of the corresponding student model. For DAv2 [51], the decoder learning rate is set to $5\times10^{-5}$. For the total loss function, we set the parameters as follows: $\lambda_{1}=0.5$, $\lambda_{2}=1.0$, and $\lambda_{3}=2.0$.

实现。我们的实验采用最先进的单目深度估计模型作为教师模型生成伪标签,在仅输入RGB图像的蒸馏框架中监督各种学生模型。在共享上下文蒸馏中,教师和学生接收相同的全局区域,该区域通过从原始图像中随机裁剪提取。裁剪保持1:1宽高比,采样范围从644像素到图像最短边,然后调整为$560\times560$进行预测。在全局-局部蒸馏中,全局区域被裁剪为重叠的局部补丁,每个大小为$560\times560$,供教师模型预测伪标签。对于辅助引导蒸馏,我们采用概率采样策略,主教师模型和辅助模型的选择比例分别为7:3。我们使用蒸馏流程训练最佳学生模型,在单个NVIDIA V100 GPU上进行20,000次迭代,批量大小为8,初始化采用预训练的DAv2-Large权重。学习率与相应学生模型保持一致。对于DAv2 [51],解码器学习率设置为$5\times10^{-5}$。总损失函数的参数设置如下:$\lambda_{1}=0.5$、$\lambda_{2}=1.0$和$\lambda_{3}=2.0$。
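The cropping scheme above can be sketched as coordinate arithmetic. This is a hedged illustration, not the authors' pipeline: the function names are hypothetical, the stride of 280 pixels and the 1120-pixel region in the usage lines are assumptions (the text says only that the patches overlap), and resizing to $560\times560$ is left to an image library.

```python
import random


def sample_square_crop(width, height, min_side=644, rng=None):
    """Sample a 1:1 crop whose side lies in [min_side, shortest image side]."""
    rng = rng or random.Random()
    shortest = min(width, height)
    if shortest < min_side:
        raise ValueError("image is smaller than the minimum crop size")
    side = rng.randint(min_side, shortest)  # inclusive on both ends
    left = rng.randint(0, width - side)
    top = rng.randint(0, height - side)
    return left, top, side


def tile_starts(length, patch, stride):
    """Start offsets of patches along one axis; the last patch touches the border."""
    if length < patch:
        raise ValueError("region is smaller than one patch")
    starts = list(range(0, length - patch + 1, stride))
    if starts[-1] != length - patch:
        starts.append(length - patch)  # extra patch so the border is covered
    return starts


def overlapping_patches(region_side, patch=560, stride=280):
    """Return (left, top, side) boxes of overlapping patches in a square region."""
    starts = tile_starts(region_side, patch, stride)
    return [(x, y, patch) for y in starts for x in starts]


left, top, side = sample_square_crop(1500, 1000, rng=random.Random(0))
patches = overlapping_patches(1120)  # hypothetical 1120-pixel global region
print((left, top, side), len(patches))
```

With a stride of half the patch size, neighbouring patches share 50% of their area; a larger stride would reduce the number of teacher forward passes at the cost of less overlap.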

4.2. Analysis

4.2. 分析

For the ablation study and analysis, we sample a subset of 50K images from SA-1B [26] as our training data, with an input image size of $560\times560$ for the network. We conduct experiments on two of the most challenging benchmarks, DIODE [45] and ETH3D [42], which include both indoor and outdoor scenes.

为了进行消融研究和分析,我们从SA-1B [26]中抽取了5万张图像作为训练数据,网络输入图像尺寸为$560\times560$。实验在DIODE [45]和ETH3D [42]这两个最具挑战性的基准测试上进行,涵盖室内外场景。

Analysis of Normalization across Cross-Context Distillation. We evaluate the impact of different depth normalization strategies on Cross-Context Distillation, as shown in Table 1. The results reveal that the optimal normalization method depends on the specific distillation design. In shared-context distillation, where all pseudo-labels are generated by a single teacher model, hybrid normalization achieves the best performance, closely followed by no normalization. The consistent domain across supervision signals reduces the need for normalization, enabling the model to better preserve local depth relationships. In ground-truth-based training, normalization is essential to align depth distributions across datasets captured by heterogeneous sensors or represented in varying formats (e.g., sparse vs. dense, relative vs. absolute); pseudo-labels from a single model, by contrast, are inherently more uniform. Therefore, a direct L1 loss without normalization can more faithfully supervise pixel-level depth without distortion from global rescaling. In contrast, global normalization introduces undesirable inter-pixel dependencies, while local normalization discards global structural coherence. In local-global distillation, hybrid normalization again proves most effective, likely due to its hierarchical design, which enforces consistency across both local and global depth predictions. The relatively small gap between hybrid and global normalization suggests that our framework, which uses local cues to refine global predictions, effectively mitigates the limitations of global normalization. However, no normalization leads to a notable performance drop compared to the shared-context setting, indicating that the localized regions in this case come from distinct depth domains, which makes direct L1 supervision less reliable. Local normalization, as before, sacrifices global consistency and thus underperforms.

跨上下文蒸馏中的归一化分析。我们评估了不同深度归一化策略对跨上下文蒸馏的影响,如表 1 所示。结果表明,最优归一化方法取决于具体的蒸馏设计。在共享上下文蒸馏中(所有伪标签均由单一教师模型生成),混合归一化表现最佳,紧随其后的是无归一化。监督信号所在领域的一致性降低了对归一化的需求,使模型能更好地保留局部深度关系。与基于真实值的训练不同(在异质传感器采集或以不同格式表示的数据集中,归一化对对齐深度分布至关重要,例如稀疏与密集、相对与绝对),单一模型生成的伪标签本质上更为统一。因此,未经归一化的直接 L1 损失能更真实地监督像素级深度,避免全局重缩放带来的失真。相比之下,全局归一化会引入不良的像素间依赖,而局部归一化会破坏全局结构一致性。在局部-全局蒸馏中,混合归一化再次被证明最有效,这很可能归功于其分层设计能强制局部与全局深度预测的一致性。混合归一化与全局归一化之间较小的差距表明,我们的框架(利用局部线索优化全局预测)有效缓解了全局归一化的局限性。但与共享上下文设置相比,无归一化会导致性能显著下降,这表明该场景下的局部区域来自不同深度域,使得直接 L1 监督的可靠性降低。与之前一样,局部归一化会牺牲全局一致性,因此表现欠佳。
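The inter-pixel dependency attributed to global normalization can be illustrated with a toy example. The sketch below assumes the median and mean-absolute-deviation form of global normalization that is common in affine-invariant depth losses; the paper's own definitions are given in its method section, so this form, the helper names, and the toy values are all assumptions.

```python
from statistics import median


def global_normalize(depth):
    """Subtract the median and divide by the mean absolute deviation (assumed form)."""
    med = median(depth)
    scale = sum(abs(d - med) for d in depth) / len(depth)
    if scale == 0:
        raise ValueError("constant depth map: scale is undefined")
    return [(d - med) / scale for d in depth]


def per_pixel_l1(a, b):
    """Absolute residual at each pixel."""
    return [abs(x - y) for x, y in zip(a, b)]


# The student matches the pseudo-label everywhere except one noisy pixel.
student = [1.0, 2.0, 3.0, 4.0, 5.0]
pseudo_label = [1.0, 2.0, 3.0, 4.0, 9.0]

raw_residuals = per_pixel_l1(student, pseudo_label)
norm_residuals = per_pixel_l1(
    global_normalize(student), global_normalize(pseudo_label)
)

raw_affected = sum(1 for r in raw_residuals if r > 1e-9)
norm_affected = sum(1 for r in norm_residuals if r > 1e-9)
print(raw_affected, norm_affected)
```

Without normalization, only the noisy pixel contributes a residual. With global normalization, the outlier changes the shared scale, so pixels that were already correct also receive a non-zero loss, which is the dependency the analysis refers to.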

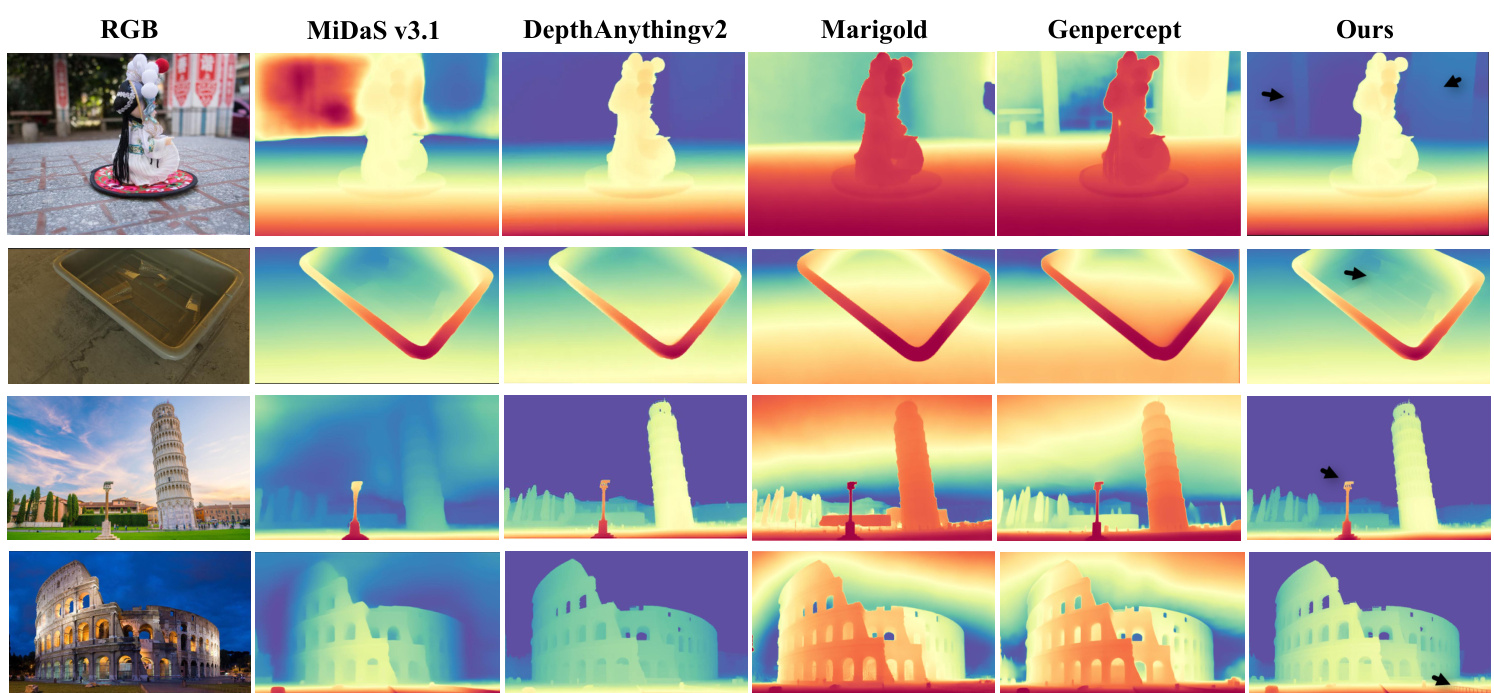

Figure 6: Qualitative Comparison of Relative Depth Estimations. We present visual comparisons of depth predictions from our method ("Ours") alongside a classic depth estimator ("MiDaS v3.1" [3]) and models using DINOv2 [33] or SD as priors ("Depth Anything v2" [51], "Marigold" [24], "Genpercept" [49]). Compared to state-of-the-art methods, the depth map produced by our model exhibits finer granularity and more detailed depth estimation, particularly at the position indicated by the black arrow.

图 6: 相对深度估计的定性对比。我们展示了本方法( "Ours" )与其他经典深度估计方法( "MiDaS v3.1" [3] )以及使用 DINOv2 [33] 或 SD 作为先验的模型( "Depth Anything v2" [51], "Marigold" [24], "Genpercept" [49] )的深度预测视觉对比。与前沿方法相比,本模型生成的深度图( 特别是黑色箭头所指位置 )展现出更精细的粒度和更详尽的深度估计细节。

Ablation Study of Cross-Context Distillation. To further validate the effectiveness of our distillation framework, we conduct ablation studies that remove Shared-Context Distillation and Local-Global Distillation (Table 2). With both components removed, the model degrades to a conventional distillation setup, resulting in significantly lower performance. Introducing Shared-Context Distillation with Hybrid Normalization notably improves accuracy, highlighting the benefits of a better normalization strategy combined with consistent context supervision. When using only Local-Global Distillation, the model still performs well, showing the effectiveness of region-wise depth refinement even without global context information. Combining both strategies yields the best results, confirming that both components contribute significantly to the student model’s ability to utilize pseudo-labels and demonstrating the robustness of our approach.

跨上下文蒸馏的消融研究。为验证我们蒸馏框架的有效性,我们在表2中通过移除共享上下文蒸馏和局部-全局蒸馏进行了消融实验。当同时移除这两个组件时,模型退化为传统蒸馏设置,导致性能显著下降。引入采用混合归一化的共享上下文蒸馏后,准确率显著提升,这表明在一致上下文监督下采用更好的归一化策略具有优势。当仅使用局部-全局蒸馏时,模型仍表现良好,证明即使没有全局上下文信息,区域级深度优化依然有效。结合两种策略时获得了最佳结果,证实这两个组件都能显著提升学生模型利用伪标签的能力,体现了我们方法的鲁棒性。

Table 1: Analysis of Normalization Strategies. Performance comparison of different normalization strategies across Shared-Context Distillation and Local-Global Distillation.

| Method | Normalization | ETH3D AbsRel↓ | DIODE AbsRel↓ |
|---|---|---|---|
| Shared-Context Distillation | Global Norm. | 0.064 | 0.259 |
| | No Norm. | 0.057 | 0.239 |
| | Local Norm. | 0.070 | 0.245 |
| | Hybrid Norm. | 0.057 | 0.238 |
| Local-Global Distillation | Global Norm. | 0.065 | 0.239 |
| | No Norm. | 0.273 | 0.300 |
| | Local Norm. | 0.076 | 0.244 |
| | Hybrid Norm. | 0.064 | 0.238 |

表 1: 归一化策略分析。共享上下文蒸馏和局部-全局蒸馏中不同归一化策略的性能对比。

| 方法 | 归一化 | ETH3D AbsRel↓ | DIODE AbsRel↓ |
|---|---|---|---|
| 共享上下文蒸馏 | 全局归一化 | 0.064 | 0.259 |
| | 无归一化 | 0.057 | 0.239 |
| | 局部归一化 | 0.070 | 0.245 |
| | 混合归一化 | 0.057 | 0.238 |
| 局部-全局蒸馏 | 全局归一化 | 0.065 | 0.239 |
| | 无归一化 | 0.273 | 0.300 |
| | 局部归一化 | 0.076 | 0.244 |
| | 混合归一化 | 0.064 | 0.238 |

Cross-Architecture Distillation. To highlight the limitations of previous state-of-the-art distillation approaches that employ global normalization, we compare them against the Hybrid Normalization strategy used in our distillation framework across diverse model architectures. To demonstrate the generalizability of our approach, we conduct cross-architecture distillation experiments on both the state-of-the-art Depth Anything [51] and the classic MiDaS [37] architectures. Experiments are conducted using MiDaS [37] and Depth Anything [51] in four configurations (D