From Poses to Identity: Training-Free Person Re-Identification via Feature Centralization


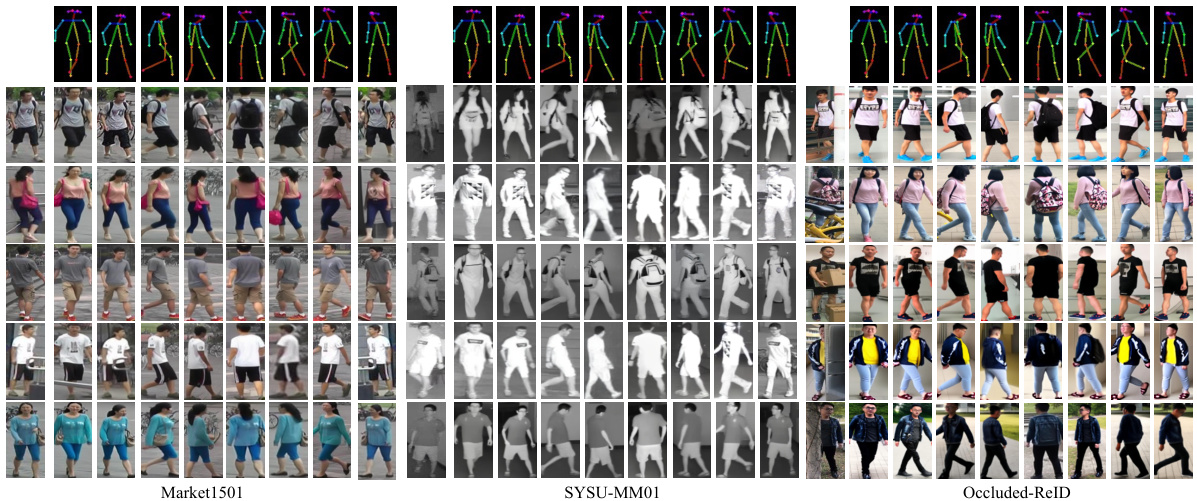

Figure 1. Visualization of our Identity-Guided Pedestrian Generation model with 8 representative poses on three datasets.


Abstract


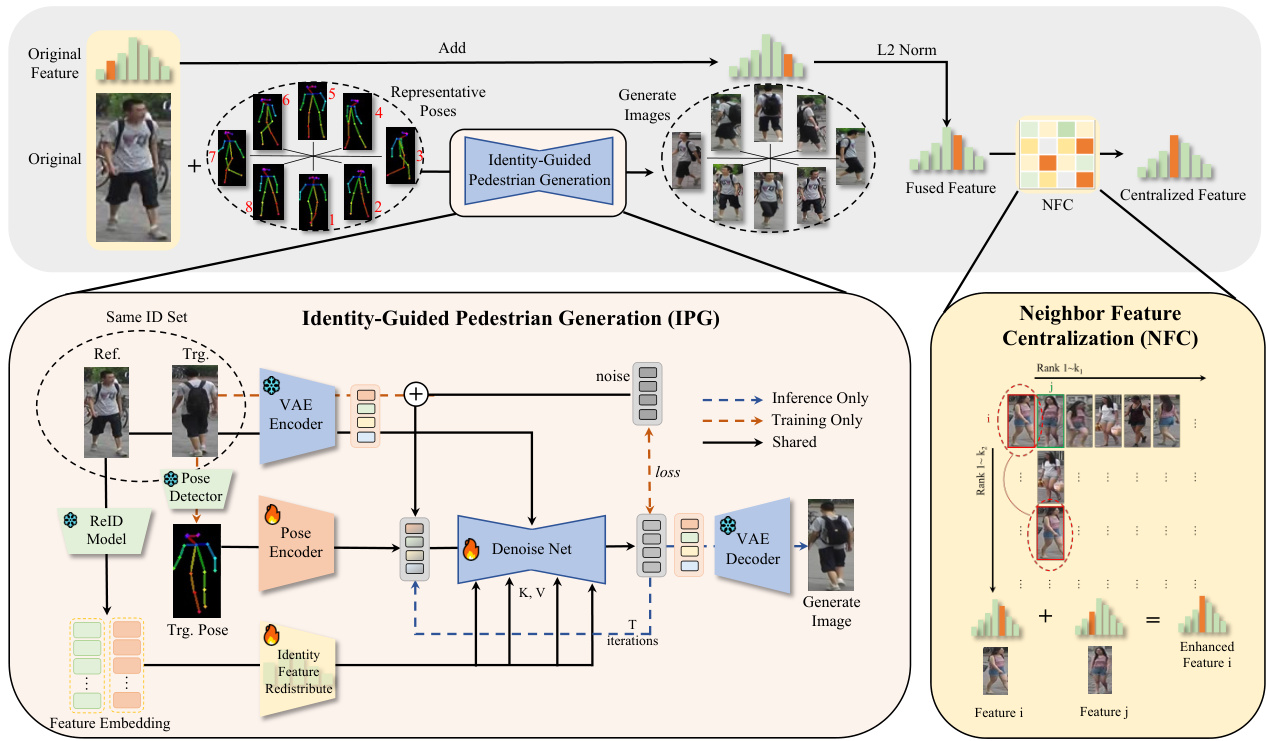

Person re-identification (ReID) aims to extract accurate identity representation features. However, during feature extraction, individual samples are inevitably affected by noise (background, occlusions, and model limitations). Considering that features of the same identity follow a normal distribution around identity centers after training, we propose a Training-Free Feature Centralization ReID framework (Pose2ID) that aggregates same-identity features to reduce individual noise and stabilize the identity representation, while preserving the features' original distribution for subsequent strategies such as re-ranking. Specifically, to obtain samples of the same identity, we introduce two components: ① Identity-Guided Pedestrian Generation: by leveraging identity features to guide the generation process, we obtain high-quality images with diverse poses, ensuring identity consistency even in complex scenarios such as infrared and occlusion. ② Neighbor Feature Centralization: it explores each sample's potential positive samples from its neighborhood. Experiments demonstrate that our generative model exhibits strong generalization capabilities and maintains high identity consistency. With the Feature Centralization framework, we achieve impressive performance even with an ImageNet pre-trained model without ReID training, reaching mAP/Rank-1 of 52.81%/78.92% on Market1501. Moreover, our method sets new state-of-the-art results across standard, cross-modality, and occluded ReID tasks, showcasing strong adaptability.

1. Introduction


Person re-identification (ReID) is a critical task in video surveillance and security, aiming to match pedestrian images captured from different cameras. Despite significant progress made in recent years through designing more complex models with increased parameters and feature dimensions, inevitable noise arises due to poor image quality or inherent limitations of the models, reducing the accuracy of identity recognition and affecting retrieval performance.


To address this challenge, we propose a training-free ReID framework that fully leverages the capabilities of existing models by mitigating feature noise to enhance identity representation. During training, features of the same identity are constrained by the loss function and naturally aggregate around an "identity center" in the feature space. In particular, according to the central limit theorem, when the number of samples is sufficiently large, these features follow a normal distribution with the identity center as the mean. As shown in Fig. 2, we visualize the feature distribution of one identity and rank the samples of that identity by their distance to the identity center. This motivates the concept of feature centralization: by aggregating features of the same identity, we can reduce the noise in individual samples, strengthen identity characteristics, and bring each feature dimension closer to its central value, so that each sample's feature becomes more representative of its identity.

However, obtaining diverse samples of the same identity is hard without identity labels. With the development of generative models, generating images of the same identity in different poses has become feasible. Previous GAN-based studies [13, 43, 72, 73] struggle with limited effectiveness, and mainly serve to augment training data. With breakthroughs in diffusion models for image generation [2, 19, 66], it is now possible to generate high-quality, multi-pose images of the same person. However, these methods lack effective control over identity features and generated pedestrian images are susceptible to interference from background or occlusions, making it difficult to ensure identity consistency across poses. Therefore, we utilize identity features of ReID, proposing an Identity-Guided Pedestrian Generation paradigm. Guided by identity features, we generate high-quality images of the same person with a high degree of identity consistency across diverse scenarios (visible, infrared, and occluded).


Furthermore, inspired by re-ranking mechanisms[74], we explore potential positive samples through feature distance matrices to further achieve feature centralization. Unlike traditional re-ranking methods that modify features or distances in a one-off manner, our approach performs L2 normalization after enhancement. This preserves the original feature distribution while improving representation quality and can be combined with re-ranking methods.


Thus, the main contributions of this paper include:

- A Training-Free Feature Centralization framework that can be directly applied to different ReID tasks/models, even an ImageNet pre-trained ViT without ReID training;

- An Identity-Guided Pedestrian Generation (IPG) paradigm, leveraging identity features to generate high-quality images of the same identity in different poses to achieve feature centralization;

- Neighbor Feature Centralization (NFC) based on each sample's neighborhood, discovering hidden positive samples from the gallery/query set to achieve feature centralization.

Figure 2. The real feature distribution of images from the same ID extracted by TransReID, and the main idea of our work is to make features closer/centralized to the ID center.


2. Related works


Person Re-Identification. Person re-identification (ReID) is a critical task in computer vision that focuses on identifying individuals across different camera views. It plays a significant role in surveillance and security applications. Recent advances in ReID have leveraged deep learning techniques to enhance performance, particularly convolutional neural networks (CNNs [29]) and vision transformers (ViTs [2]). Deep-learning-based methods [3, 11, 17, 30, 35, 48, 64, 65] that focus on feature extraction and metric learning [18, 28, 34, 62] have improved feature extraction by learning robust and discriminative embeddings that capture identity-specific information.

Standard datasets like Market-1501 [70] have been widely used to benchmark ReID algorithms under normal conditions. Moreover, there are some challenging scenarios such as occlusions and cross-modality matching. OccludedREID [78] addresses the difficulties of identifying partially obscured individuals, while SYSU-MM01 [55] focuses on matching identities between visible and infrared images, crucial for nighttime surveillance.


Feature Enhancement in Re-Identification. Extracting robust feature representations is one of the key challenges in re-identification. Feature enhancement helps a ReID model differentiate between two people more easily. Data augmentation techniques [40, 41, 76] have been used for feature enhancement: by increasing the diversity of the training data, they help the ReID model extract robust and discriminative features.

Apart from improving the quality of the generated images, some GAN-based methods couple feature extraction and data generation end-to-end to distill identity-related features. FD-GAN [13] separates identity from pose by generating images across multiple poses, enhancing the ReID system's robustness to pose changes. Zheng et al. [73] separately encode each person into an appearance code and a structure code. Eom et al. [8] propose to disentangle identity-related and identity-unrelated features from person images. However, GAN-based methods face challenges such as training instability and mode collapse, and may fail to preserve identity consistency.

In addition, re-ranking techniques refine feature-based distances to improve ReID accuracy. For example, k-Reciprocal Encoding re-ranking [74] improves retrieval accuracy by leveraging the mutual neighbors between images to refine the distance metric.

Person Generation Models. Recent approaches have incorporated generative models, particularly generative adversarial networks (GANs) [14], to augment ReID data or enhance feature quality. Style-transfer GAN-based methods [7, 21, 42, 75] transfer labeled training images to artificially generated images in different camera domains, background domains, or RGB-infrared domains. Pose-transfer GAN-based methods [5, 36, 43, 45, 63] enable the synthesis of person images with variations in pose and appearance, enriching the dataset and making feature representations more robust to pose changes. Random-generation GAN-based methods [1, 23, 72] generate random images of persons and use Label Smoothing Regularization (LSR [50]) or other methods to label them automatically. However, these methods often struggle to maintain identity consistency under pose variation, as generated images are susceptible to identity drift.

The emergence of diffusion models has advanced the field of generative modeling, showing remarkable results in image generation tasks [19]. Leveraging the capabilities of pre-trained models such as Stable Diffusion [44], researchers have developed techniques [4, 66] to generate high-quality human images conditioned on 2D human poses. One example is ControlNet [66], which integrates conditional control into diffusion models, allowing precise manipulation of generated images based on pose inputs.

3. Methods


The main purpose of this paper is to centralize features toward their identity center in order to enhance the identity representation of the feature vectors extracted by a ReID model, that is, to reduce noise within the features while strengthening their identity attributes so that they become more representative of their identities. To enhance the features effectively and reasonably, we therefore need to understand the characteristics of the feature vectors produced by a ReID model.

3.1. Feature Distribution Analysis


Currently, ReID models commonly use cross-entropy loss to impose ID-level constraints, and contrastive losses (such as triplet loss) to bring features of the same ID closer while pushing apart features of different IDs. Some models also use center loss to construct identity centers that dynamically constrain the IDs. These methods lead to one common result: feature aggregation. One can say that the current ReID task is essentially a feature aggregation task. The degree of feature density (e.g., in t-SNE visualizations) is also widely used to assess model performance. It follows that the features of the same ID are centered around a "mean", approximately forming a normal distribution, as shown in Fig. 2, which visualizes a single feature dimension for one ID.

ReID features thus appear to be normally distributed around the "identity center". To show theoretically that the feature vectors of each ID in current ReID tasks aggregate around a center or mean, we analyze several commonly used loss functions and their impact on the feature distribution in the Supplementary.

For the same identity $y_{i}=j$, we have a large number of samples $\{\mathbf{x}_{i}\}_{i=1}^{N_{j}}$, where $N_{j}$ is the number of samples for ID $j$. These samples are passed through a ReID model $f(\cdot)$, resulting in the corresponding feature vectors $\{\mathbf{f}_{i}\}_{i=1}^{N_{j}}$:

$$
\mathbf{f}_{i}=f(\mathbf{x}_{i})
$$

where $\mathbf{f}_{i}\in\mathbb{R}^{d}$ is the feature vector of sample $\mathbf{x}_{i}$, and $d$ is the dimensionality of the feature space.

For each dimension $k$ of the feature vector $\mathbf{f}_{i}$, the values $\{\mathbf{f}_{i,k}\}_{i=1}^{N_{j}}$ obtained from different samples of the same ID $j$ are independent and identically distributed random variables. Here, $\mathbf{f}_{i,k}$ denotes the $k$-th dimension of the feature vector of the $i$-th sample.

Since the input samples $\mathbf{x}_{i}$ are independent, the values of $\mathbf{f}_{i,k}$ are independent factors. According to the Central Limit Theorem (CLT), when the number of independent factors is large, the distribution of the values $\{\mathbf{f}_{i,k}\}_{i=1}^{N_{j}}$ for any dimension $k$ of the feature vector will approximate a normal distribution. Thus, for each feature dimension $k$, we have:

$$
\mathbf{f}_{i,k}\sim\mathcal{N}(\mu_{k},\sigma_{k}^{2})
$$

where $\mu_{k}$ is the mean of the $k$-th feature dimension for ID $j$, and $\sigma_{k}^{2}$ is the variance of the feature values in this dimension.

Since each dimension $\mathbf{f}_{i,k}$ of the feature vector approximately follows a normal distribution across samples, the entire feature vector $\mathbf{f}_{i}$ for ID $j$ can be approximated by a multivariate normal distribution. This gives:

$$

\mathbf{f}_{i}\sim\mathcal{N}(\pmb{\mu},\Sigma)

$$


where $\pmb{\mu}=(\mu_{1},\mu_{2},\dots,\mu_{d})^{\top}$ is the mean vector of the feature dimensions, and $\Sigma$ is the covariance matrix.


The theoretical analysis above suggests that, under the optimization of these loss functions, the ReID model's feature vectors $\mathbf{f}_{i}$ aggregate around their identity centers $\mathbf{c}_{y_{i}}$ following a normal distribution. This is consistent with the feature aggregation observed in t-SNE visualizations.

Figure 3. Overview of the proposed Feature Centralization framework for the ReID task.


Identity Density $(\mathrm{ID}^{2})$ Metric. Identity density is one aspect of measuring ReID effectiveness. However, there is currently no quantitative metric for it, and researchers commonly rely on visualization tools such as t-SNE to demonstrate model performance. Building on the concept above, we propose an Identity Density $(\mathrm{ID}^{2})$ metric, which is detailed in the Supplementary.

3.2. Feature Centralization via Identity-Guided Pedestrian Generation


3.2.1. Feature Centralization


Since features of the same identity follow a multivariate normal distribution, we can simply aggregate features of the same identity to approximate the identity center, as visualized in Fig. 2. Our main question thus becomes how to obtain more samples of the same identity to help centralize features.
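To make the effect of aggregation concrete, the following is a minimal NumPy simulation, not the authors' code. It assumes isotropic Gaussian noise around a hypothetical identity center (the dimension `dim`, noise level `sigma`, and function name are illustrative choices) and measures how far the mean of several same-identity features lies from that center.

```python
import numpy as np

def mean_sq_dist_to_center(num_aggregated, dim=64, sigma=0.5, trials=2000, seed=0):
    """Average squared distance between a (hypothetical) identity center and
    the mean of `num_aggregated` noisy features drawn around that center."""
    rng = np.random.default_rng(seed)
    center = rng.normal(size=dim)  # illustrative identity center
    noise = rng.normal(scale=sigma, size=(trials, num_aggregated, dim))
    aggregated = (center + noise).mean(axis=1)  # aggregate same-identity features
    return float(((aggregated - center) ** 2).sum(axis=1).mean())

single = mean_sq_dist_to_center(1)  # one feature per sample
eight = mean_sq_dist_to_center(8)   # mean of eight same-identity features
print(single, eight)
```

Under this assumption the expected squared distance is `dim * sigma**2 / num_aggregated`, so aggregating eight features should bring the result roughly eight times closer to the center than a single feature.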

A straightforward approach is to horizontally flip the original images and add the features of the original and flipped images together. It is worth noting for reviewers that this is a very simple but effective trick, so it is necessary to check whether reported performance improvements are due to such tricks. In our experiments, to demonstrate the advancement of our approach, we did not use this trick; using it might improve the results further.
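The flipping trick can be sketched as follows. This is an illustrative sketch only: `extract` stands for any feature extractor (a hypothetical callable, not an API from the paper), and the image is assumed to be an array of shape (height, width, channels).

```python
import numpy as np

def flip_augmented_feature(image, extract):
    """Sum the features of an image and of its horizontal mirror.

    `image` is an (H, W, C) array; `extract` is any callable that maps an
    image to a 1-D feature vector (a placeholder for a ReID model).
    """
    flipped = image[:, ::-1, :]  # reverse the width axis
    return extract(image) + extract(flipped)

# Toy extractor for demonstration only: per-column mean intensity.
toy_extract = lambda img: img.mean(axis=(0, 2))
toy_image = np.arange(24, dtype=float).reshape(2, 4, 3)
feat = flip_augmented_feature(toy_image, toy_extract)
```

With a real ReID model in place of `toy_extract`, the summed feature would typically be L2-normalized before computing distances.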

3.2.2. Identity-Guided Diffusion Process


To get more samples from the same identity, we propose a novel Identity-Guided Pedestrian Generation (IPG) paradigm, generating images of the same identity with different poses using a Stable Diffusion model guided by identity feature to centralize each sample’s features.


Following Stable Diffusion [44], which builds on the latent diffusion model (LDM), we use a reference UNet to inject the reference image features into the diffusion process at a constant timestep $t=0$, and a denoising UNet $\epsilon_{\theta}$ to generate the target image latent $\mathbf{z}_{0}$ by denoising the noisy latent $\mathbf{z}_{T}=\epsilon$:

$$
\mathbf{z}_{t-1}=\epsilon_{\theta}(\mathbf{z}_{t},t,\mathbf{E}_{\mathrm{pose}},\mathbf{H}),\quad t\in[0,T]
$$

where $\mathbf{H}$ is the identity feature that guides the model to preserve the person's identity, and $\mathbf{E}_{\mathrm{pose}}$ denotes the pose features. At each timestep $t$, the pose feature $\mathbf{E}_{\mathrm{pose}}$ and the conditioning embedding $\mathbf{H}$ jointly guide the denoising process.

Identity Feature Redistribute (IFR). The IFR module aims to exploit identity features from input images while removing noise, so as to better guide the generative model. It converts high-dimensional identity features into meaningful low-dimensional feature blocks, improving the model's feature utilization efficiency.

Given the feature $\mathbf{f}\in\mathbb{R}^{C}$ that a ReID model $f(\cdot)$ extracts from an input sample $\mathbf{x}$, IFR produces a re-distributed robust feature $\mathbf{H}\in\mathbb{R}^{N\times D}$:

$$

\mathbf{H}=\mathrm{IFR}(f(\mathbf{x}))=\mathrm{LN}(\mathrm{Linear}(\mathbf{f}))

$$


This more robust identity feature is used as the keys and values (K, V) of the model's attention modules, steering the model's attention toward the identity feature.
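A minimal NumPy sketch of $\mathbf{H}=\mathrm{LN}(\mathrm{Linear}(\mathbf{f}))$ is given below. The text does not specify how the $N\times D$ blocks are formed, so this sketch assumes that the linear layer maps $\mathbb{R}^{C}$ to $\mathbb{R}^{N\cdot D}$, that the output is reshaped into $N$ tokens, and that layer normalization is applied per token without learned scale or shift. The function name, weights, and sizes are hypothetical.

```python
import numpy as np

def identity_feature_redistribute(f, weight, bias, num_tokens, eps=1e-5):
    """Sketch of H = LN(Linear(f)), reshaped to (num_tokens, token_dim)."""
    projected = weight @ f + bias               # Linear: R^C -> R^(N*D) (assumed layout)
    tokens = projected.reshape(num_tokens, -1)  # N feature blocks of size D
    mean = tokens.mean(axis=-1, keepdims=True)
    var = tokens.var(axis=-1, keepdims=True)
    return (tokens - mean) / np.sqrt(var + eps)  # LayerNorm without affine parameters

rng = np.random.default_rng(0)
C, N, D = 32, 4, 8                   # illustrative sizes only
f = rng.normal(size=C)               # stand-in for a ReID feature
W = rng.normal(size=(N * D, C))      # random weights in place of trained ones
b = np.zeros(N * D)
H = identity_feature_redistribute(f, W, b, num_tokens=N)
```

The resulting `H` has one row per token, which matches the shape expected when it is used as the keys and values of a cross-attention layer.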

Pose Encoder. The Pose Encoder extracts high-dimensional pose embeddings $\mathbf{E}_{\mathrm{pose}}$ from the input poses. It has 4 blocks with 16, 32, 64, and 128 channels. Each block applies a regular $3\times3$ convolution, then a $3\times3$ convolution with stride 2 to reduce the spatial dimensions, followed by a SiLU activation function. Subsequently, following [20], the pose features are added to the noisy latent before it enters the denoising UNet.

Training Strategy. For each identity $i$, we randomly select one image from $S_{i}^{\mathrm{ref}}$ as the reference image and one image from $S_{i}^{\mathrm{trg}}$ as the target image for training.

Let $\mathbf{x}_{i,j}^{\mathrm{ref}}\in S_{i}^{\mathrm{ref}}$ denote the reference image (i.e., the $j$-th image of the $i$-th ID) and $\mathbf{x}_{i,j}^{\mathrm{trg}}\in S_{i}^{\mathrm{trg}}$ denote the target image. The model is trained using the mean squared error (MSE) loss between the predicted noise and the true noise:

$$
\mathcal{L}=\mathbb{E}_{\mathbf{z},t,\epsilon}\left[\left\|\epsilon-\epsilon_{\theta}(\mathbf{z}_{t},t,\mathbf{E}_{\mathrm{pose}},\mathbf{H})\right\|^{2}\right]
$$

where $\mathbf{z}=\mathrm{VAE}(\mathbf{x}_{i,j}^{\mathrm{trg}})+\epsilon$ is a latent obtained by a pre-trained VAE encoder [26], $\mathbf{E}_{\mathrm{pose}}$ is the pose feature of $\mathbf{x}_{i,j}^{\mathrm{trg}}$, and $\mathbf{H}$ is the re-distributed identity feature of $\mathbf{x}_{i,j}^{\mathrm{ref}}$.

The model is trained to learn the mapping from the reference image $\mathbf{x}_{\mathrm{ref}}$ to the target image $\mathbf{x}_{\mathrm{trg}}$, with the goal of generating realistic variations in pose while preserving the identity feature. The random selection ensures diversity during training, as different combinations of reference and target images are used in each training iteration, enhancing the model's ability to generalize across various poses and viewpoints.
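The training procedure described above can be sketched as a single step for one identity. This is a simplified illustration rather than the authors' implementation: it uses the latent exactly as written in the text ($\mathbf{z}=\mathrm{VAE}(\mathbf{x}^{\mathrm{trg}})+\epsilon$, with no noise schedule), and `encode_latent`, `encode_pose`, `encode_identity`, and `predict_noise` are hypothetical callables standing in for the VAE encoder, the Pose Encoder, the ReID model with IFR, and the denoising UNet.

```python
import numpy as np

def training_step(ref_images, trg_images, encode_latent, encode_pose,
                  encode_identity, predict_noise, num_timesteps, rng):
    """One simplified IPG training step for a single identity (sketch)."""
    ref = ref_images[rng.integers(len(ref_images))]  # random reference image
    trg = trg_images[rng.integers(len(trg_images))]  # random target image
    t = int(rng.integers(num_timesteps))             # random timestep
    latent = encode_latent(trg)
    noise = rng.normal(size=latent.shape)            # true noise epsilon
    z_t = latent + noise                             # z = VAE(x_trg) + epsilon, as in the text
    pred = predict_noise(z_t, t, encode_pose(trg), encode_identity(ref))
    return float(np.sum((noise - pred) ** 2))        # ||epsilon - epsilon_theta||^2

# Toy check with stand-in components: a predictor that recovers the noise
# exactly should give a loss of (numerically) zero.
rng = np.random.default_rng(0)
images = [np.ones((2, 2))]
loss = training_step(
    images, images,
    encode_latent=lambda x: x,
    encode_pose=lambda x: None,
    encode_identity=lambda x: None,
    predict_noise=lambda z, t, pose, ident: z - 1.0,
    num_timesteps=1000, rng=rng,
)
```

In practice the loss would be averaged over a batch and back-propagated through the denoising UNet; those details are outside this sketch.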

3.2.3. Selection of Representative Pose


In ReID tasks, features extracted from different poses of the same identity can vary significantly, and some poses tend to be more representative of that identity. Following the feature-distribution analysis in Section 3.1, we compute the identity center of each ID from all of its samples in the dataset and select the image whose feature is closest to the center. The pose of this image is regarded as a representative pose. By randomly selecting 8 representative poses with different orientations, we generate images that are more representative of the person's identity.

That is, given a set of feature vectors $\mathbf{F}_{\mathrm{all}}=\{\mathbf{f}_{1},\mathbf{f}_{2},\ldots,\mathbf{f}_{N}\}$ for a particular identity:

$$
\mathbf{f}_{\mathrm{mean}}=\frac{1}{N}\sum_{i=1}^{N}\mathbf{f}_{i}
$$

$$
\mathrm{pose}=\arg\min_{i}\,d(\mathbf{f}_{\mathrm{mean}},\mathbf{f}_{i})
$$
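The two formulas above can be sketched in a few lines of NumPy. The distance $d$ is not specified in the text, so this sketch assumes Euclidean distance; the function name is illustrative.

```python
import numpy as np

def representative_index(features):
    """Index of the sample whose feature is closest to the identity mean.

    `features` has shape (N, d): one row per sample of a single identity.
    Euclidean distance is an assumption; the text leaves d(., .) unspecified.
    """
    features = np.asarray(features, dtype=float)
    center = features.mean(axis=0)                         # f_mean
    distances = np.linalg.norm(features - center, axis=1)  # d(f_mean, f_i)
    return int(np.argmin(distances))

idx = representative_index([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])  # -> 1
```

The pose of the image at the returned index would then be taken as a representative pose for that identity.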

3.2.4. Feature Centralization Enhancement


Once the representative poses are selected, we generate new images $\hat{\mathbf{x}}$ for each of these poses. The features extracted from these generated images are then aggregated with the original reference feature to enhance the overall representation. The centralized feature $\tilde{\mathbf{f}}$ is computed as:

$$
\tilde{\mathbf{f}}=\left\|\mathbf{f}+\frac{\eta}{M}\sum_{i=1}^{M}\mathbf{f}_{i}\right\|_{2}
$$

where $\mathbf{f}$ is the feature of the original reference image, $\mathbf{f}_{i}$ are the features extracted from the $M$ generated images, and $\|\cdot\|_{2}$ here denotes L2 normalization. The coefficient $\eta$ adjusts the contribution of the generated features according to the quality of the generated images. According to the analysis in Section 3.1, even low-quality generated images can be used for feature enhancement as long as they contain the corresponding identity information, with $\eta$ regulating how strongly they contribute. As discussed in [72], a generated image still carries ID information even when its quality is poor.
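A minimal sketch of this enhancement step, assuming (as the resulting $\tilde{\mathbf{f}}$ is a feature vector) that the norm symbol in the formula denotes L2 normalization. The function name and the default value of `eta` are illustrative, not values from the paper.

```python
import numpy as np

def centralize_feature(f, generated_features, eta=1.0):
    """Add the eta-weighted mean of generated-image features to the original
    feature, then L2-normalize the result."""
    f = np.asarray(f, dtype=float)
    generated = np.asarray(generated_features, dtype=float)  # shape (M, d)
    enhanced = f + eta * generated.mean(axis=0)               # f + (eta / M) * sum_i f_i
    return enhanced / np.linalg.norm(enhanced)                # L2 normalization

f_tilde = centralize_feature([1.0, 0.0], [[0.0, 1.0], [0.0, 1.0]], eta=1.0)
```

A smaller `eta` keeps the result closer to the original feature, which is one way to limit the influence of lower-quality generations.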

3.3. Neighbor Feature Centralization (NFC)


Moreover, we propose a Neighbor Feature Centralization (NFC) algorithm to reduce noise in individual features and improve their identity discriminability in unlabeled scenarios. The core idea of the algorithm is to use mutual nearest-neighbor features for aggregation.

Algorithm 1 Neighbor Feature Centralization (NFC)


By enhancing each feature with its potential neighbors, NFC can effectively approximate features of the same identity without explicit labels, while ensuring that only features with high mutual similarity contribute to the enhancement.
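The idea described above can be sketched as follows. This is an illustrative reading of the description, not a reproduction of Algorithm 1: the neighborhood size `k`, the use of cosine similarity, and the unweighted summation of mutual neighbors are all assumptions.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale each row of x to unit L2 norm."""
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), eps)

def neighbor_feature_centralization(features, k=2):
    """Enhance each feature with its mutual k-nearest neighbors (sketch)."""
    feats = l2_normalize(np.asarray(features, dtype=float))
    sim = feats @ feats.T                       # cosine similarity matrix
    np.fill_diagonal(sim, -np.inf)              # a sample is not its own neighbor
    topk = np.argsort(-sim, axis=1)[:, :k]      # k most similar samples per row
    is_neighbor = np.zeros(sim.shape, dtype=bool)
    np.put_along_axis(is_neighbor, topk, True, axis=1)
    mutual = is_neighbor & is_neighbor.T        # keep only reciprocal neighbors
    enhanced = feats + mutual.astype(float) @ feats  # aggregate mutual neighbors
    return l2_normalize(enhanced)               # L2 normalization after enhancement

toy = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
out = neighbor_feature_centralization(toy, k=1)
```

In the toy example, rows 0 and 1 are mutual nearest neighbors, as are rows 2 and 3, so each pair is pulled toward a shared direction while the two pairs stay apart.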

Table 1. Improvements from our method on different SOTA models with ViT and CNN backbones on the Market1501, SYSU-MM01, and Occluded-ReID datasets. Grey shading in the original marks the new SOTA achieved with our method on each dataset.

| Dataset | Model | Venue | Base | Method | mAP↑ | Rank-1↑ | ID²↓ |
|---|---|---|---|---|---|---|---|
| Market1501 | TransReID[16] (w/o camid) | ICCV21 | ViT | official | 79.88 | 91.48 | 0.2193 |
| Market1501 | TransReID[16] (w/o camid) | ICCV21 | ViT | +ours | 90.39(+10.51) | 94.74(+3.26) | 0.1357 |
| Market1501 | TransReID[16] (w/ camid) | ICCV21 | ViT | official | 89 | 95.1 | 0.2759 |
| Market1501 | TransReID[16] (w/ camid) | ICCV21 | ViT | +ours | 93.01(+4.01) | 95.52(+0.42) | 0.1967 |
| Market1501 | CLIP-ReID[31] | AAAI23 | ViT | official | 89.7 | 95.4 | 0.0993 |
| Market1501 | CLIP-ReID[31] | AAAI23 | ViT | +ours | 94(+4.3) | 96.4(+1.0) | 0.0624 |
| Market1501 | CLIP-ReID[31] | AAAI23 | CNN | official | 89.8 | 95.7 | 0.0877 |
| Market1501 | CLIP-ReID[31] | AAAI23 | CNN | +ours | 94.9(+5.1) | 97.3(+1.6) | 0.053 |
| Occluded-ReID | KPR[47] | ECCV24 | ViT | official | 79.05 | 85.4 | 0.3124 |
| Occluded-ReID | KPR[47] | ECCV24 | ViT | +ours | 89.34(+10.29) | 91(+5.6) | 0.1434 |
| Occluded-ReID | BPBReID[46] | WACV23 | ViT | official | 70.41 | 77.2 | 0.377 |
| Occluded-ReID | BPBReID[46] | WACV23 | ViT | +ours | 86.05(+15.64) | 89.1(+11.9) | 0.1504 |
| SYSU-MM01 (All) | SAAI[9] | ICCV23 | CNN | official | 71.81 | 75.29 | 0.4817 |
| SYSU-MM01 (All) | SAAI[9] | ICCV23 | CNN | +ours | 76.44(+4.63) | 79.33(+4.04) | 0.4072 |
| SYSU-MM01 (Indoor) | SAAI[9] | ICCV23 | CNN | official | 84.6 | 81.59 | 0.4424 |
| SYSU-MM01 (Indoor) | SAAI[9] | ICCV23 | CNN | +ours | 86.83(+2.23) | 84.2(+2.61) | 0.3694 |
| SYSU-MM01 (All) | PMT[38] | AAAI23 | ViT | official | 66.13 | 67.7 | 0.4308 |
| SYSU-MM01 (All) | PMT[38] | AAAI23 | ViT | +ours | 75.43(+9.3) | 74.81(+7.11) | 0.3133 |
| SYSU-MM01 (Indoor) | PMT[38] | AAAI23 | ViT | official | 77.81 | 72.95 | 0.4046 |
| SYSU-MM01 (Indoor) | PMT[38] | AAAI23 | ViT | +ours | 84.29(+6.48) | 80.29(+7.34) | 0.2995 |

Table 2. Ablation study on the effects of feature centralization through Identity-Guided Pedestrian Generation (IPG) and Neighbor Feature Centralization (NFC).

| Methods | mAP↑ | Rank-1↑ | ID²↓ |
|---|---|---|---|
| Base | 79.88 | 91.48 | 0.2193 |
| +NFC | 83.92 | 91.83 | 0.1824 |
| +IPG | 88.02 | 94.77 | 0.1553 |
| +NFC+IPG | 90.39 | 94.74 | 0.1357 |

Table 3. Ablation study of the Neighbor Feature Centralization (NFC) algorithm on the Market1501 dataset. We test on the gallery and query sets separately.

| Gallery NFC | Query NFC | mAP↑ | Rank-1↑ |
|---|---|---|---|
| × | × | 79.88 | 91.48 |
|  |  | 81.70 | 92.04 |
| × |  | 82.76 | 91.69 |
| √ | √ | 83.92 | 91.83 |

Table 4. Ablation study of Feature ID-Centralizing with Pedestrian Generation (IPG) on Market1501. We test on the gallery and query sets separately.

| Gallery IPG | Query IPG | mAP↑ | Rank-1↑ |
|---|---|---|---|
| × | × | 79.88 | 91.48 |
|  |  | 84.65 | 92.07 |
| × |  | 82.18 | 92.40 |
| √ | √ | 88.02 | 94.77 |

Table 5. ReID performance on Market1501 with only ImageNet pre-trained weights and no ReID training. The distribution is visualized in Fig. 4.

| Method | mAP↑ | R1↑ | R5↑ | R10↑ | ID²↓ |
|---|---|---|---|---|---|
| w/o training | 3.34 | 11.4 | 21.88 | 28 | 0.5135 |
| +IPG | 52.81 | 78.92 | 91.21 | 94.27 | 0.2158 |
| +IPG+NFC | 57.27 | 82.39 | 90.17 | 92.81 | 0.1890 |

表 5: 仅使用ImageNet预训练权重而未进行ReID训练时在Market1501上的ReID性能。分布可视化见图4。

| 方法 | mAP↑ | R1↑ | R5↑ | R10↑ | ID²↓ |

|---|---|---|---|---|---|

| w/o |