HiFaceGAN: Face Renovation via Collaborative Suppression and Replenishment

ABSTRACT

Existing face restoration research typically relies on either a degradation prior or explicit guidance labels for training, which often leads to limited generalization over real-world images with heterogeneous degradation and rich background content. In this paper, we investigate a more challenging and practical “dual-blind” version of the problem by lifting the requirements on both types of prior, termed “Face Renovation” (FR). Specifically, we formulate FR as a semantic-guided generation problem and tackle it with a collaborative suppression and replenishment (CSR) approach. This leads to HiFaceGAN, a multi-stage framework containing several nested CSR units that progressively replenish facial details based on the hierarchical semantic guidance extracted from the front-end content-adaptive suppression modules. Extensive experiments on both synthetic and real face images verify the superior performance of HiFaceGAN over a wide range of challenging restoration subtasks, demonstrating its versatility, robustness and generalization ability for real-world face processing applications. Code is available at https://github.com/Lotayou/Face-Renovation.

CCS CONCEPTS

• Computing methodologies $\rightarrow$ Computer vision.

KEYWORDS

Face Renovation, image synthesis, collaborative learning

1 INTRODUCTION

Face photographs record long-lasting precious memories of individuals and historical moments of human civilization. Yet the limited conditions under which images are acquired, stored, and transmitted inevitably introduce complex, heterogeneous degradations in real-world scenarios, including discrete sampling, additive noise, lossy compression, and beyond. Given its great application and research value, face restoration has attracted wide attention from industry and academia, with a plethora of works [41][48][37] devoted to addressing specific types of image degradation. Yet it remains a challenge in more general, unconstrained application scenarios, where few works report satisfactory restoration results.

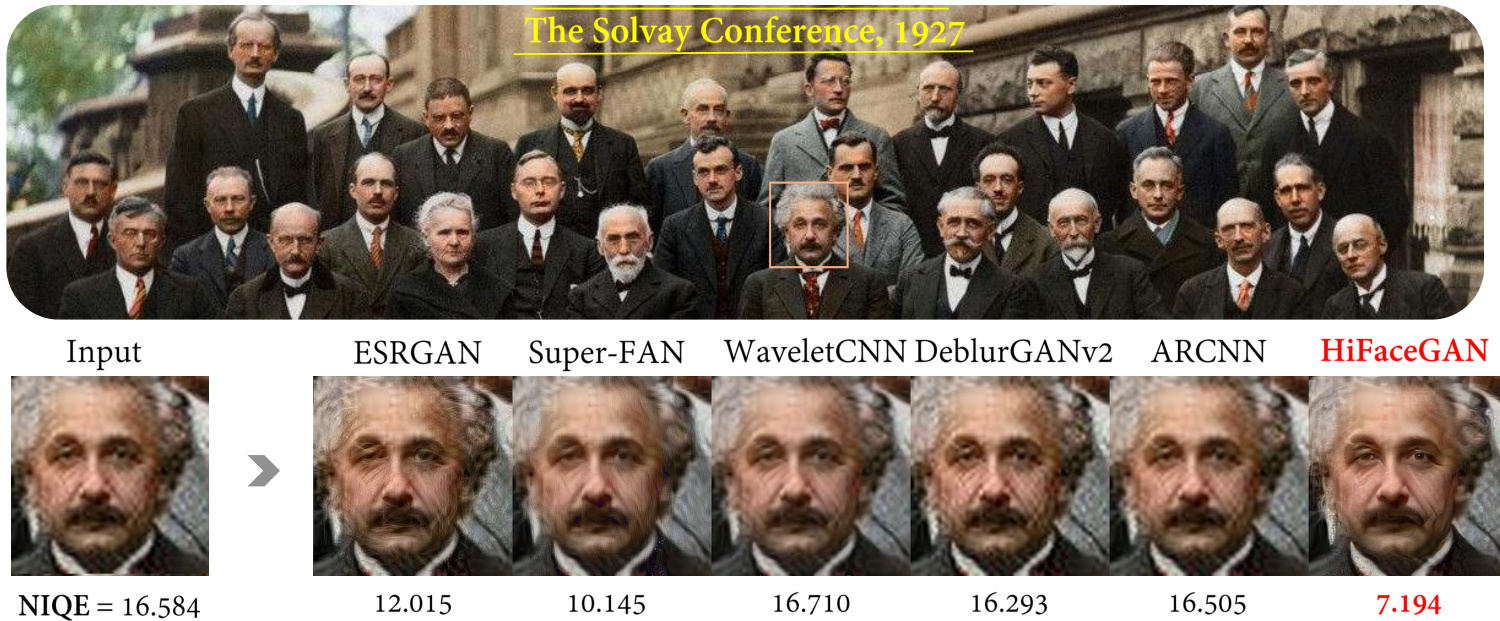

For face restoration, most existing methods work in a “non-blind” fashion, targeting a specific degradation of prescribed type and intensity. This leads to a variety of sub-tasks, including super-resolution [58][8][34][55], hallucination [47][29], denoising [1][60], deblurring [48][25][26] and compression artifact removal [37][7][39]. However, task-specific methods typically generalize poorly to real-world images with complex and heterogeneous degradations. A case in point, shown in Fig. 1, is a historic group photograph taken at the 1927 Solvay Conference: the super-resolution methods ESRGAN [55] and Super-FAN [4] tend to introduce additional artifacts, while the other three task-specific restoration methods barely make any difference in suppressing degradation artifacts or replenishing fine details such as hair textures and wrinkles, revealing the impracticality of task-specific restoration methods.

Blind image restoration [43] aims to recover high-quality images from their degraded observations in a “single-blind” manner, without a priori knowledge about the type and intensity of the degradation. It is often challenging to reconstruct image content from artifacts without a degradation prior, necessitating additional guidance information such as a categorical [2] or structural prior [5] to facilitate the replenishment of faithful and photo-realistic details. For blind face restoration [35][6], facial landmarks [4], parsing maps [53], and component heatmaps [59] are typically used as external guidance labels. In particular, Li et al. explored the guided face restoration problem [31][30], where an additional high-quality face is used to promote fine-grained detail replenishment. However, relying on such guidance limits feasibility when restoring photographs without ground-truth annotations. Furthermore, for real-world images with complex backgrounds, introducing unnecessary guidance can lead to inconsistency between the quality of renovated faces and the unattended background content.

Figure 1: Face renovation results of related state-of-the-art methods. Our HiFaceGAN achieves the best perceptual quality as measured by the Natural Image Quality Evaluator (NIQE) [42]. (Best viewed on a computer screen, zoomed in to compare the quality of facial details. The same applies to the other figures.)

In this paper, we formally propose “Face Renovation” (FR), a more challenging yet more practical task for photo-realistic face restoration under a “dual-blind” condition, lifting the requirements on both the degradation prior and the structural prior for training. Specifically, we formulate FR as a semantic-guided face synthesis problem, and propose to tackle it with a collaborative suppression and replenishment (CSR) framework. To implement FR, we propose HiFaceGAN, a generative framework with several nested CSR units that performs face renovation in a multi-stage fashion with hierarchical semantic guidance. Each CSR unit contains a suppression module that extracts layered semantic features with content-adaptive convolution; these features are then used to guide the replenishment of the corresponding semantic content. Extensive experiments are conducted on both the synthetic FFHQ [19] dataset and real-world images against competitive degradation-specific baselines, highlighting the challenges of the proposed face renovation task and the superiority of our framework. In summary, our contributions are threefold:

• We present a challenging yet practical task, termed “Face Renovation” (FR), to tackle unconstrained face restoration problems in a “dual-blind” fashion, lifting the requirements on both the degradation prior and the structural prior.

• We propose HiFaceGAN, a collaborative suppression and replenishment (CSR) framework built from several nested CSR units for photo-realistic face renovation.

• We conduct extensive experiments on both synthetic and real face images and report significant performance gains over a variety of “non-blind” and “single-blind” baselines, verifying the versatility, robustness and generalization capability of the proposed HiFaceGAN.

2 RELATED WORKS

2.1 Non-Blind Face Restoration

Image restoration consists of a variety of subtasks, such as denoising [1][60], deblurring [25][26] and compression artifact removal [7][39]. In particular, image super-resolution [8][34][27][55] and its counterpart for faces, hallucination [47][12][49][29], can be considered specific types of restoration against downsampling. However, existing works often operate in a “non-blind” fashion by prescribing the degradation type and intensity during training, leading to dubious generalization over real images with complex, heterogeneous degradation. In this paper, we perform face renovation by replenishing facial details based on hierarchical semantic guidance, which is more robust against mixed degradation, and we achieve superior performance over state-of-the-art “non-blind” baselines on a wide range of restoration subtasks.

2.2 Blind Face Restoration

Blind image restoration [43][3][32] aims to learn the restoration mapping directly from observed samples. However, most existing methods for general natural images are still sensitive to the degradation profile [9] and generalize poorly to unconstrained testing conditions. For category-specific [2] (face) restoration, it is commonly believed that incorporating external guidance on facial priors boosts restoration performance, such as a semantic prior [38], an identity prior [12], facial landmarks [4][5] or component heatmaps [59]. In particular, Li et al. [31] explored the guided face restoration scenario, where an additional high-quality guidance image helps with the generation of facial details. Other works use objectives related to subsequent vision tasks to guide the restoration, such as semantic segmentation [36] and recognition [61]. In this paper, we further explore the “dual-blind” case, targeting unconstrained face renovation in real-world applications. In particular, we find that with collaborative suppression and replenishment, the dual-blind face renovation network can even outperform state-of-the-art “single-blind” methods, owing to its increased capability for enhancing non-facial content. This brings new insights for tackling the unconstrained face restoration problem from a generative view.

2.3 Deep Generative Models for Face Images

Deep generative models, especially GANs [11], have greatly facilitated conditional image generation tasks [16][63], especially for high-resolution faces [18][19][20]. Existing methods can be roughly grouped into two categories: semantic-guided methods use parsing maps [53], edges [54], facial landmarks [4] or anatomical action units [46] to control the layout and expression of generated faces, while style-guided methods [19][20] use adaptive instance normalization [15] to inject style guidance information into generated images. Combining semantic and style guidance leads to multi-modal image generation [64], enabling separable pose and appearance control of the output images. Inspired by SPADE [44] and SEAN [45] for semantic-guided image generation based on external parsing maps, our HiFaceGAN uses SPADE layers to implement collaborative suppression and replenishment for multi-stage face renovation. It progressively replenishes plausible details based on hierarchical semantic guidance, leading to an automated renovation pipeline without external guidance.

3 FACE RENOVATION

Generally, the acquisition and storage of digitized images involves many sources of degradation, including but not limited to discrete sampling, camera noise and lossy compression. Non-blind face restoration methods typically focus on reversing a specific source of degradation, as in super-resolution, denoising and compression artifact removal, leading to limited generalization over varying degradation types (Fig. 1). On the other hand, blind face restoration often relies on a structural prior or external guidance labels for training, leading to quality inconsistency between foreground and background content. To resolve these issues in existing face restoration works, we present face renovation to explore the capability of generative models for “dual-blind” face restoration without a degradation prior or external guidance. Although it would be ideal to collect authentic low-quality and high-quality image pairs of real persons for better degradation modeling, the associated legal issues concerning privacy and portraiture rights are often hard to circumvent. In this work, we perturb a challenging yet purely artificial face dataset [19] with heterogeneous degradation of varying types and intensities to simulate real-world scenes for FR. The methodology and comprehensive evaluation metrics for FR are then analyzed in detail.

3.1 Degradation Simulation

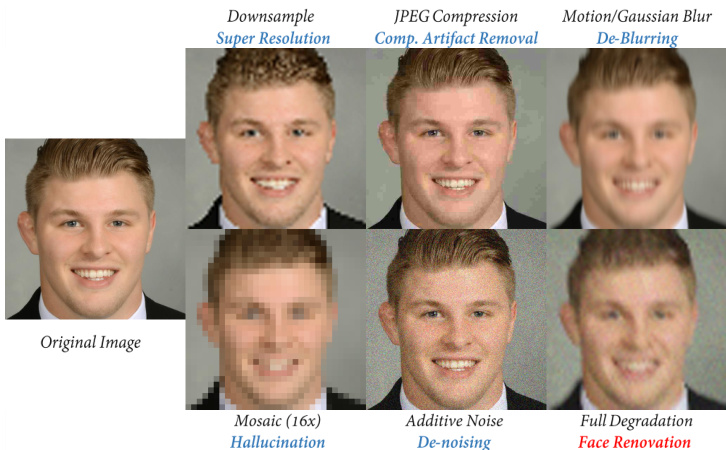

With richer facial details, more complex background content, and higher diversity in gender, age, and ethnic groups, the synthetic dataset FFHQ [19] is chosen to evaluate FR models under sufficiently challenging conditions. We simulate real-world image degradation by perturbing the FFHQ dataset with different types of degradation corresponding to the respective face processing subtasks, on which our proposed framework is also evaluated to demonstrate its versatility. For FR, we superimpose four types of degradation (all except 16x mosaic) over clean images in random order with uniformly sampled intensity to replicate the challenge expected in real-world application scenarios. Fig. 2 displays the visual impact of each type of degradation on a clean input face. It is evident that mosaic is the most challenging due to the severe corruption of facial boundaries and fine-grained details. Blurring and downsampling are slightly milder, with the structural integrity of the face almost intact. Finally, JPEG compression and additive noise are the least visually obtrusive, with even the smallest details (such as hair bangs) clearly discernible. As will be evidenced in Sec. 5.1, the visual impact is consistent with the performance of the proposed face renovation model. The full degradation for FR is more complex and challenging than all subtasks (except 16x mosaic), with both additive noise/artifacts and detail loss/corruption. We believe the proposed degradation simulation provides a sufficient yet still manageable challenge for real-world FR applications.
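
The random-order superposition described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the function names, the intensity ranges, and the use of a box blur are hypothetical choices, and `quantize` is only a crude stand-in for JPEG compression, which would require a real codec.

```python
import numpy as np

def downsample(img, rng):
    # Assumed intensity: integer scale factor sampled uniformly from {2, 3, 4}.
    s = int(rng.integers(2, 5))
    h, w = img.shape[:2]
    small = img[::s, ::s]
    # Nearest-neighbour upsampling back to (at least) the original size, then crop.
    up = np.repeat(np.repeat(small, s, axis=0), s, axis=1)
    return up[:h, :w]

def box_blur(img, rng):
    # Assumed intensity: odd kernel size sampled uniformly from {3, 5, 7}.
    k = int(rng.choice([3, 5, 7]))
    r = k // 2
    h, w = img.shape[:2]
    padded = np.pad(img, ((r, r), (r, r), (0, 0)), mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def add_noise(img, rng):
    # Assumed intensity: Gaussian noise with sigma sampled uniformly from [0, 0.1].
    sigma = rng.uniform(0.0, 0.1)
    return img + rng.normal(0.0, sigma, size=img.shape)

def quantize(img, rng):
    # Stand-in for lossy compression (NOT real JPEG): coarse intensity quantization.
    levels = int(rng.integers(8, 33))
    return np.round(np.clip(img, 0.0, 1.0) * (levels - 1)) / (levels - 1)

def degrade(img, rng):
    """Apply the four degradations in random order; img is float HxWx3 in [0, 1]."""
    ops = [downsample, box_blur, add_noise, quantize]
    for idx in rng.permutation(len(ops)):
        img = ops[idx](img, rng)
    return np.clip(img, 0.0, 1.0)
```

Passing a seeded `np.random.default_rng` makes a degraded sample reproducible, which is convenient when comparing restoration methods on identical inputs.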

3.2 Methodology

When a single type of degradation dominates, existing methods are devoted to fitting an inverse transformation to recover the image content. In real-world scenes, however, low-quality facial images usually contain unidentified heterogeneous degradation, necessitating a unified solution that can simultaneously address common degradations without prior knowledge. Given a severely degraded facial image, the renovation can be reasonably decomposed into two steps: 1) suppressing the impact of degradation and extracting robust semantic features; 2) replenishing fine details in a multi-stage fashion based on the extracted semantic guidance. Generally speaking, a facial image can be decomposed into semantic hierarchies, such as structures, textures, and colors, which can be captured within different receptive fields [?]. Also, noise and artifacts need to be adaptively identified and suppressed according to information at different scales. This motivates the design of HiFaceGAN, a multi-stage renovation framework consisting of several nested collaborative suppression and replenishment (CSR) units that is capable of resolving all types of degradation in a unified manner. Implementation details are introduced in the following section.

3.3 Evaluation Criterion

For real-world applications, the evaluation criterion for face renovation should be more consistent with human perception than with machine judgement. Therefore, besides the commonly adopted PSNR and SSIM [56][57] metrics, the evaluation criterion for FR should also reflect the semantic fidelity and perceptual realism of renovated faces. For semantic fidelity, we measure the feature embedding distance (FED) and landmark localization error (LLE) with a pretrained face recognition model [21], where the average L2 norm between feature embeddings is adopted for both metrics. For perceptual realism, we introduce FID [13] and LPIPS [62] to evaluate the distributional and element-wise distance between original and generated samples in their respective perceptual spaces: for FID, the space is defined by a pre-trained Inception V3 model [52], and for LPIPS, by an AlexNet [24]. Also, the NIQE [42] metric adopted for the 2018 PIRM-SR challenge [55] is introduced to measure the naturalness of renovated results for in-the-wild face images. Moreover, we explain the trade-off between statistical and perceptual scores with the ablation study detailed in Sec. 5.3.
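
To make two of these criteria concrete, the sketch below computes PSNR from its standard definition and an average-L2 embedding distance of the kind described for FED and LLE. It is illustrative only: the function names are hypothetical, and the embeddings are assumed to have already been produced by some pretrained face model, which is not reproduced here.

```python
import numpy as np

def psnr(reference, restored, max_value=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_value]."""
    mse = np.mean((reference - restored) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

def mean_l2_distance(feats_a, feats_b):
    """Average L2 norm between paired feature vectors.

    feats_a, feats_b: arrays of shape (N, D), one row per image. Which
    network produces them is an assumption left to the caller.
    """
    return float(np.mean(np.linalg.norm(feats_a - feats_b, axis=1)))
```

Higher PSNR is better, whereas a lower embedding distance indicates that the renovated face stays closer to the original in the feature space of the chosen model.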

Figure 2: Visualization of degradation types and the corresponding face manipulation tasks.

4 THE PROPOSED HIFACEGAN

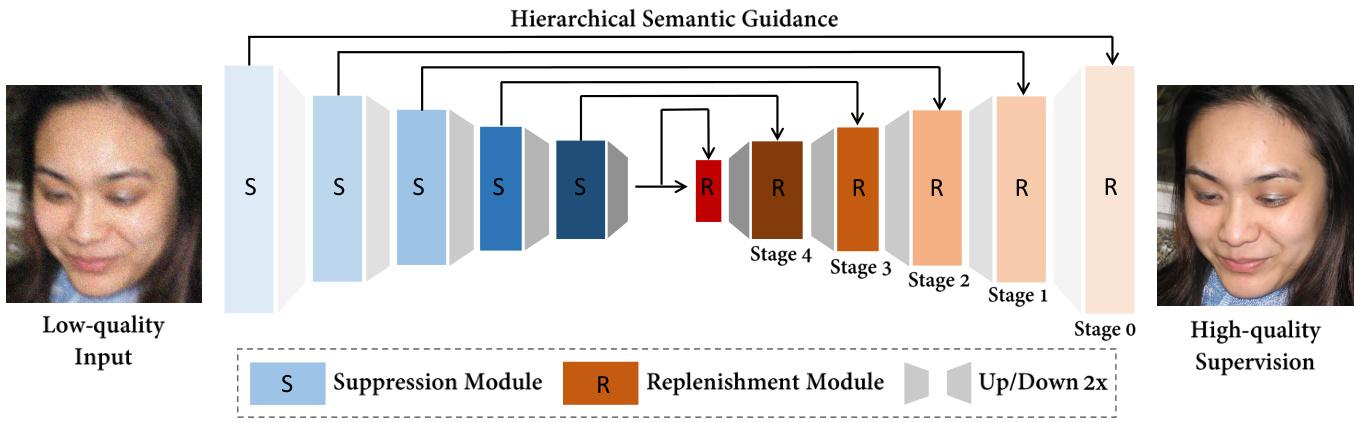

In this section, we detail the architectural design and working mechanism of the proposed HiFaceGAN. As shown in Fig. 3, the suppression modules aim to suppress heterogeneous degradation and encode robust hierarchical semantic information, which guides the subsequent replenishment modules in reconstructing the renovated face with corresponding photo-realistic details. Further, we illustrate the multi-stage renovation procedure and the functionality of individual units in Fig. 5 to justify the proposed methodology and provide new insights into the face renovation task.

4.1 Network Architecture

We propose a nested architecture containing several CSR units that each attend to a specific semantic aspect. Concretely, we cascade the front-end suppression modules to extract layered semantic features, in an attempt to capture the semantic hierarchy of the input image. Accordingly, the corresponding multi-stage renovation pipeline is implemented via several cascaded replenishment modules that each attend to the incoming layer of semantics. Note that the resulting renovation mechanism differs from the commonly perceived coarse-to-fine strategy used in progressive GAN [18][22]. Instead, we allow the proposed framework to automatically learn a reasonable semantic decomposition and the corresponding face renovation procedure in a completely data-driven manner, maximizing the collaborative effect between the suppression and replenishment modules. More evidence is provided in Sec. 4.3.
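
The data flow of such a nested design can be sketched with toy stand-ins. Everything below is a hypothetical illustration rather than the actual network: `suppress` is plain 2x average pooling, `replenish` is upsample-and-add, and pairing the deepest feature with the first replenishment stage is an assumption about how the units nest.

```python
import numpy as np

def suppress(feature):
    # Toy stand-in for a suppression module: 2x average pooling on an HxW map.
    h, w = feature.shape
    return feature.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def replenish(state, guidance):
    # Toy stand-in for a replenishment module: bring the running state to the
    # guidance resolution (2x nearest-neighbour) and add the guidance features.
    if state.shape != guidance.shape:
        state = np.repeat(np.repeat(state, 2, axis=0), 2, axis=1)
    return state + guidance

def renovate(image, num_units=3):
    # Cascaded suppression: each stage encodes the output of the previous one.
    features = []
    x = image
    for _ in range(num_units):
        x = suppress(x)
        features.append(x)
    # Cascaded replenishment: each stage consumes one layer of guidance,
    # innermost (deepest) first. This ordering is an assumption of the sketch.
    state = np.zeros_like(features[-1])
    for guidance in reversed(features):
        state = replenish(state, guidance)
    return state, [f.shape for f in features]
```

With a 16x16 input and three units, the guidance features have sizes 8x8, 4x4 and 2x2, and the toy output is 8x8. A real model would add further layers to return to the input resolution.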

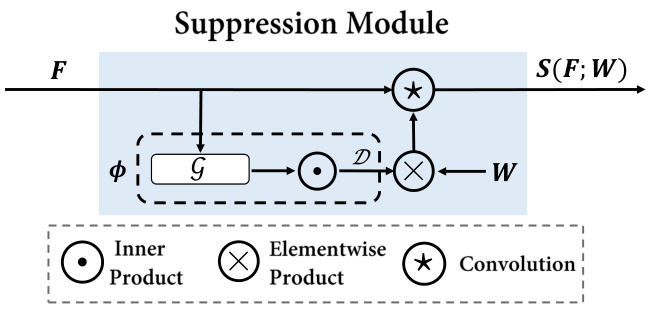

Suppression Module. A key challenge for face renovation lies in the heterogeneous degradation mingled within real-world images, where a conventional CNN layer with fixed kernel weights has limited ability to discriminate between image content and degradation artifacts. Let us first look at a conventional spatial convolution with kernel $W\in\mathbb{R}^{C\times C'\times S\times S}$:

$$
\operatorname{conv}(F;W)_{i}=(F*W)_{i}=\sum_{j\in\Omega(i)}w_{\Delta ji}f_{j} \tag{1}
$$

where $i,j$ are 2D spatial coordinates, $\Omega(i)$ is the sliding window centered at $i$, and $\Delta ji$ is the offset between $j$ and $i$ that is used for indexing elements in $W$. The key observation from Eqn. (1) is that the conventional CNN layer shares the same kernel weights over the entire image, making the feature extraction pipeline content-agnostic. In other words, both the image content and the degradation artifacts are treated equally and aggregated into the final feature representation, with a potentially negative impact on the renovated image. Therefore, it is highly desirable to select and aggregate informative features with content-adaptive filters, such as LIP [10] or PAC [51]. In this work, we implement the suppression module as shown in Fig. 4 to replace the conventional convolution operation in Eqn. (1), which helps select informative feature responses and filter out degradation artifacts through adaptive kernels. Mathematically,

$$
S(F;W)_{i}=\sum_{j\in\Omega(i)}\phi(f_{j},f_{i})\,w_{\Delta ji}f_{j}
$$

where $\phi(\cdot,\cdot)$ modulates the weights of the convolution kernel according to the correlations between neighboring features. Intuitively, one would expect a correlation metric to be symmetric, i.e. $\phi(f_{i},f_{j})=\phi(f_{j},f_{i}),\ \forall f_{i},f_{j}\in\mathbb{R}^{C}$, which can be fulfilled via the following parameterized inner-product function:

$$
\phi(f_{i},f_{j})=\mathcal{D}\left(\mathcal{G}(f_{i})^{\top}\mathcal{G}(f_{j})\right)
$$

where $\mathcal{G}:\mathbb{R}^{C}\rightarrow\mathbb{R}^{D}$ carries the raw input feature vector $f_{i}\in\mathbb{R}^{C}$ into the $D$-dimensional correlation space to reduce the redundancy of raw input features between channels, and $\mathcal{D}$ is a non-linear activation layer, such as sigmoid or tanh, that adjusts the range of the output. In practice, we implement $\mathcal{G}$ with a small multi-layer perceptron to learn the modulating criterion in an end-to-end fashion, maximizing the discriminative power of semantic feature selection for subsequent detail replenishment.
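
The content-adaptive convolution defined above can be written out directly as a loop. The sketch below is a deliberately slow reference under stated assumptions: $\mathcal{G}$ is reduced to a single linear map followed by ReLU (a stand-in for the small MLP), $\mathcal{D}$ is a sigmoid, the kernel is stored in an `(S, S, C_out, C_in)` layout, and out-of-bounds neighbours are skipped. All function and parameter names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def embed(features, proj):
    # Stand-in for G: one linear layer (proj has shape (D, C)) plus ReLU.
    return np.maximum(features @ proj.T, 0.0)

def phi(g_i, g_j):
    # Symmetric modulation weight: activation D applied to an inner product.
    return sigmoid(g_i @ g_j)

def suppression_conv(feat, kernel, proj):
    """Content-adaptive convolution.

    feat:   (H, W, C_in) feature map
    kernel: (S, S, C_out, C_in) convolution weights, S odd
    proj:   (D, C_in) weights of the embedding stand-in
    """
    height, width, _ = feat.shape
    size, _, c_out, _ = kernel.shape
    r = size // 2
    emb = embed(feat, proj)                      # (H, W, D)
    out = np.zeros((height, width, c_out))
    for y in range(height):
        for x in range(width):
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < height and 0 <= xx < width:
                        weight = phi(emb[yy, xx], emb[y, x])
                        out[y, x] += weight * (kernel[dy + r, dx + r] @ feat[yy, xx])
    return out
```

Setting `proj` to zeros makes every modulation weight equal to `sigmoid(0) = 0.5`, so the operation reduces to half of an ordinary convolution. This is a convenient sanity check that the only difference from the conventional layer is the factor $\phi$.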

Replenishment Module. Having acquired semantic features from the front-end suppression modules, we now focus on using the encoded features for guided detail replenishment. Existing works on semantic-guided generation have achieved remarkable progress with spatially-adaptive denormalization (SPADE) [44], where semantic parsing maps guide the generation of details that belong to different semantic categories, such as sky, sea, or trees. We leverage this progress by incorporating the SPADE block into our cascaded CSR units, allowing the encoded semantic features to guide the generation of fine-grained details in a hierarchical fashion. In particular, the progressive generator contains several cascaded SPADE blocks, where each block receives the output of the previous block and replenishes new details following the guidance of the corresponding semantic features encoded by the suppression module. In this way, our framework can automatically capture the global structure and progressively fill in finer visual details at proper locations, even without the guidance of additional face parsing information.
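
The core idea of a SPADE-style block, normalizing activations and then re-scaling them with spatially varying parameters predicted from guidance features, can be sketched as below. This is a simplified illustration and not the layer used in the paper: normalization is done per sample and per channel, the scale and shift are produced by per-pixel linear maps rather than learned convolutions, and all names are hypothetical.

```python
import numpy as np

def spade(x, guidance, w_gamma, w_beta, eps=1e-5):
    """Simplified spatially-adaptive denormalization.

    x:        (H, W, C) activations from the previous block
    guidance: (H, W, K) semantic features at the same resolution
    w_gamma:  (K, C) per-pixel linear map producing the scale
    w_beta:   (K, C) per-pixel linear map producing the shift
    """
    # Parameter-free normalization over the spatial dimensions.
    mean = x.mean(axis=(0, 1), keepdims=True)
    std = x.std(axis=(0, 1), keepdims=True)
    normalized = (x - mean) / (std + eps)
    # Spatially varying modulation predicted from the guidance features.
    gamma = guidance @ w_gamma   # (H, W, C)
    beta = guidance @ w_beta     # (H, W, C)
    # Written as (1 + gamma) so that zero weights leave the normalized
    # activations unchanged; this parameterization is a choice of the sketch.
    return normalized * (1.0 + gamma) + beta
```

Because `gamma` and `beta` differ at every pixel, the guidance can inject different details at different locations, which is what lets encoded semantic features play the role that parsing maps play in the original SPADE setting.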

Figure 3: The nested multi-stage architecture of the proposed HiFaceGAN.

Figure 4: Implementation of the suppression module.

4.2 Loss Functions

Most face restoration works aim to minimize the mean squared error (MSE) against target images [8][23][34], which often leads to blurry outputs with insufficient detail [55]. In line with the evaluation criterion in Sec. 3.3, it is crucial that the renovated image exhibits high semantic fidelity and visual realism, while slight signal-level discrepancies are often tolerable. To this end, we follow the adversarial training scheme [11] with an adversarial loss $\mathcal{L}_{GAN}$ to encourage the realism of renovated faces. Here we adopt the LSGAN variant [40] for better training dynamics:

$$
\mathcal{L}_{GAN}=\mathrm{E}\left[\|\log(D(I_{gt}))-1\|_{2}^{2}\right]+\mathrm{E}\left[\|\log(D(I_{gen}))\|_{2}^{2}\right]
$$
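
As a rough illustration of the least-squares form, the sketch below computes the objective from discriminator scores, pushing scores on ground-truth images towards 1 and scores on generated images towards 0. The inputs `d_real` and `d_fake` are assumed to be whatever quantities appear inside the two norms of the equation above. The generator-side loss is the usual least-squares counterpart and is an assumption, since it is not spelled out in the text. The function names are hypothetical.

```python
import numpy as np

def lsgan_discriminator_loss(d_real, d_fake):
    # Least-squares objective: real scores pulled to 1, fake scores pulled to 0.
    return float(np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2))

def lsgan_generator_loss(d_fake):
    # Assumed counterpart: the generator tries to make fake scores look real.
    return float(np.mean((d_fake - 1.0) ** 2))
```

A perfect discriminator (`d_real` all ones, `d_fake` all zeros) gives a discriminator loss of 0, while a fully fooled one gives 2.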

Also, we introduce the multi-scale feature matching loss $\mathcal{L}_{FM}$ [53] and the perceptual loss $\mathcal{L}_{perc}$ [17] to enhance the quality and visual realism of facial details:

$$
\mathcal{L}(\phi)=\sum_{i=1}^{L}\frac{1}{H_{i}W_{i}C_{i}}\|\phi_{i}(I_{gt})-\phi_{i}(I_{gen})\|_{2}^{2}
$$

where for the advers