Memory-Based Model Editing at Scale

基于记忆的大规模模型编辑

Eric Mitchell 1 Charles Lin 1 Antoine Bosselut 2 Christopher D Manning 1 Chelsea Finn 1

Eric Mitchell 1 Charles Lin 1 Antoine Bosselut 2 Christopher D Manning 1 Chelsea Finn 1

Abstract

摘要

Even the largest neural networks make errors, and once-correct predictions can become invalid as the world changes. Model editors make local updates to the behavior of base (pre-trained) models to inject updated knowledge or correct unde- sirable behaviors. Existing model editors have shown promise, but also suffer from insufficient expressiveness: they struggle to accurately model an edit’s intended scope (examples affected by the edit), leading to inaccurate predictions for test inputs loosely related to the edit, and they often fail altogether after many edits. As a highercapacity alternative, we propose Semi-Parametric Editing with a Retrieval-Augmented Counterfactual Model (SERAC), which stores edits in an explicit memory and learns to reason over them to modulate the base model’s predictions as needed. To enable more rigorous evaluation of model editors, we introduce three challenging language model editing problems based on question answering, fact-checking, and dialogue generation. We find that only SERAC achieves high performance on all three problems, consistently outperforming existing approaches to model editing by a significant margin. Code, data, and additional project information will be made available at https://sites.google.com/view/serac-editing.

即使最大的神经网络也会出错,而且随着世界变化,曾经正确的预测也可能失效。模型编辑器 (model editor) 会对基础 (预训练) 模型的行为进行局部更新,以注入新知识或修正不良行为。现有模型编辑器虽展现出潜力,但存在表达能力不足的问题:它们难以准确界定编辑的影响范围 (受编辑影响的示例),导致与编辑内容弱相关的测试输入出现预测偏差,且多次编辑后常完全失效。作为高容量替代方案,我们提出基于检索增强反事实模型的半参数化编辑方法 (Semi-Parametric Editing with a Retrieval-Augmented Counterfactual Model, SERAC),该方法将编辑内容存储于显式记忆库,并学习如何基于这些记忆调控基础模型的预测。为建立更严苛的模型编辑器评估体系,我们基于问答、事实核查和对话生成任务构建了三个具有挑战性的语言模型编辑问题。实验表明,只有 SERAC 能在所有任务中保持优异表现,其性能始终显著优于现有模型编辑方法。代码、数据及项目详情详见 https://sites.google.com/view/serac-editing。

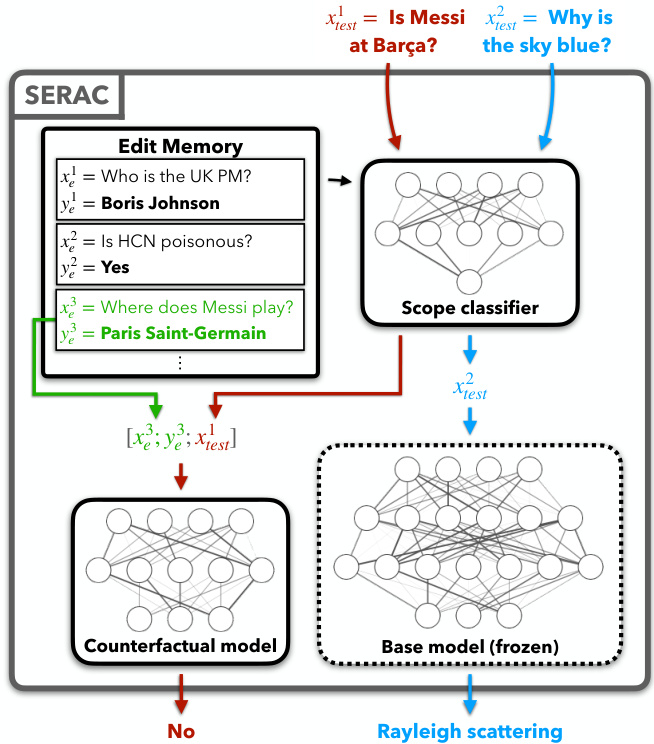

Figure 1. SERAC comprises an edit memory, classifier, and counterfactual model. User-supplied edits are stored directly in the memory. Post-edit inputs $\boldsymbol{x}_ {t e s t}^{1}$ and $\boldsymbol{x}_{t e s t}^{2}$ are classified by whether the memory contains inputs relevant to processing them. If the classifier determines a relevant edit example exists, the input and edit example are passed to the counter factual model. Otherwise, the input is simply passed to the base model.

图 1: SERAC 系统由编辑记忆模块、分类器和反事实模型组成。用户提供的编辑内容直接存储于记忆模块中。后编辑输入 $\boldsymbol{x}_ {test}^{1}$ 和 $\boldsymbol{x}_{test}^{2}$ 通过判断记忆模块是否包含相关处理内容进行分类。若分类器检测到存在相关编辑示例,则将输入和编辑示例传递至反事实模型处理;否则,输入直接传递至基础模型处理。

1. Introduction

1. 引言

Large neural networks, notably language models, are typically deployed as static artifacts, whose behavior is difficult to modify during deployment without re-training (Lazaridou et al., 2021). While prepending either manually-written or automatically-retrieved prompts to the input can sometimes be effective for modulating behavior (Brown et al., 2020), model predictions do not always update to reflect the content of the prompts (Lewis et al., 2020; Paranjape et al., 2021). However, in order to respond to changes in the world (e.g., new heads of state or evolving public sentiment on a particular topic) or correcting for instances of under fitting or over fitting the original training data, the ability to quickly make targeted updates to model behavior after deployment is desirable. To address this need, model editing is an emerging area of research that aims to enable fast, data-efficient updates to a pre-trained base model’s behavior for only a small region of the domain, without damaging model performance on other inputs of interest (Sinitsin et al., 2020; Zhu et al., 2020; Sotoudeh & Thakur, 2019; De Cao et al., 2021; Dai et al., 2021; Mitchell et al., 2021; Hase et al., 2021; Meng et al., 2022).

大型神经网络(尤其是语言模型)通常作为静态构件部署,其行为在部署期间难以修改而无需重新训练(Lazaridou et al., 2021)。虽然在输入前添加手动编写或自动检索的提示有时能有效调节行为(Brown et al., 2020),但模型预测并不总能根据提示内容更新(Lewis et al., 2020; Paranjape et al., 2021)。然而,为了应对现实变化(如新任国家元首或特定话题的舆情演变)或修正原始训练数据欠拟合/过拟合的情况,部署后快速针对性调整模型行为的能力至关重要。为此,模型编辑这一新兴研究领域致力于实现预训练基础模型在特定领域小范围内的快速、数据高效更新,同时不影响其他关键输入的模型性能(Sinitsin et al., 2020; Zhu et al., 2020; Sotoudeh & Thakur, 2019; De Cao et al., 2021; Dai et al., 2021; Mitchell et al., 2021; Hase et al., 2021; Meng et al., 2022)。

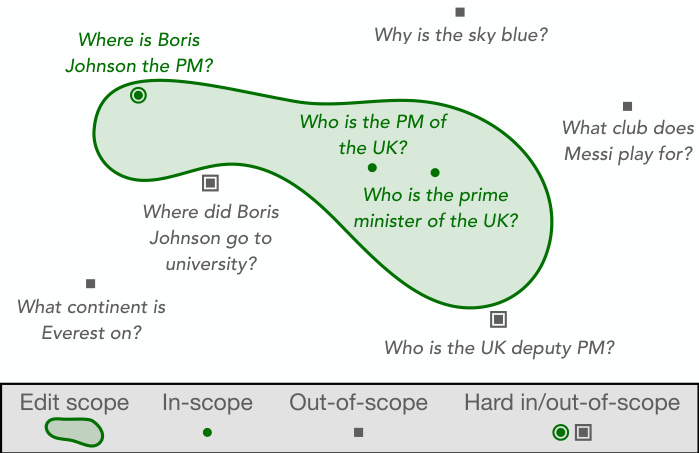

Figure 2. Depiction of the edit scope for edit descriptor WHO IS THE UK PM? BORIS JOHNSON in a hypothetical semantic embedding space. Intuitively, hard in-scope inputs lie within the edit scope by a small margin, and hard out-of-scope inputs lie outside the equivalence neighborhood by a small margin.

图 2: 在假设的语义嵌入空间中,编辑描述符"WHO IS THE UK PM? BORIS JOHNSON"的编辑范围示意图。直观来看,硬性范围内输入以微小幅度位于编辑范围内,而硬性范围外输入则以微小幅度位于等价邻域之外。

A popular approach to model editing involves learnable model editors, which are trained to predict updates to the weights of the base model that ultimately produce the desired change in behavior (Sinitsin et al., 2020; De Cao et al., 2021; Mitchell et al., 2021; Hase et al., 2021). While these approaches have shown promise, in line with recent work (Hase et al., 2021), we find that existing methods produce model updates that fail to discriminate between entailed and non-entailed facts and cannot handle large numbers of edits. Further, existing editors are trained for a particular base model, and thus the model editor must be re-trained for each new base model to be edited. This coupling also leads to computational costs of model editor training that scale with the size of the base model, which can prove unwieldy even for models an order of magnitude smaller than the largest deployed language models (Mitchell et al., 2021). In aggregate, existing model editors still have shortcomings regarding edit performance, compute efficiency, and ultimately practicality. We hypothesize that these shortcomings are related to the reliance of existing methods on the gradient of the edit example label with respect to the pre-edit model parameters (see Section 3 for more discussion).

一种流行的模型编辑方法涉及可学习的模型编辑器,这些编辑器经过训练可以预测基础模型权重的更新,最终实现行为上的预期改变 (Sinitsin et al., 2020; De Cao et al., 2021; Mitchell et al., 2021; Hase et al., 2021)。尽管这些方法显示出潜力,但与近期研究 (Hase et al., 2021) 一致,我们发现现有方法生成的模型更新无法区分蕴含事实与非蕴含事实,且难以处理大量编辑。此外,现有编辑器是针对特定基础模型训练的,因此每次编辑新基础模型时都必须重新训练模型编辑器。这种耦合还导致模型编辑器训练的计算成本随基础模型规模增长,即使对于比当前最大部署语言模型小一个数量级的模型也显得笨重 (Mitchell et al., 2021)。总体而言,现有模型编辑器在编辑性能、计算效率和实际应用方面仍存在不足。我们推测这些缺陷与现有方法对编辑样本标签相对于预编辑模型参数梯度的依赖有关(更多讨论见第3节)。

Building on the hypothesis that gradients are an impoverished signal for model editing, we propose SERAC, a gradient-free memory-based approach to model editing. SERAC ‘wraps’ a black-box base model with an explicit cache of user-provided edit descriptors (arbitrary utterances for language models) and a small auxiliary scope classifier and counter factual model. Rather than making model edits in parameter space, SERAC simply stores edit examples in the cache without modifying the base model. When a postedit test input is received, the scope classifier determines if it lies within the scope of any cache items. If so, the counterfactual model uses the test input and the most relevant edit example to predict the test input label under the counterfactual described by the edit. Otherwise, the base model simply predicts the test input label. See Figure 1 for an example of both cases. Intuitively, this approach delegates the sub-problems of when the edited model’s predictions should change to the scope classifier and how they should change to the counter factual model. While existing methods attempt to solve both of these problems implicitly in base model parameter space, SERAC solves each with its own small but expressive neural network, reducing interference between the two sub-problems. Further, the scope classifier reduces interference between batched or sequential edits by predicting relevance scores for each pair of (test input, edit cache example) separately. Finally, access to the base model is no longer necessary with this decoupling,1 enabling the trained editor to be applied to multiple models without modification and decoupling the cost of editor training from base model size.

基于梯度信号对模型编辑效果有限的假设,我们提出无需梯度计算的记忆增强型编辑方法SERAC。该方法通过"封装"黑盒基础模型实现,包含三个核心组件:用户提供的编辑描述缓存库(支持语言模型的任意语句输入)、小型辅助范围分类器和反事实推理模型。与传统参数空间编辑方式不同,SERAC仅将编辑样本存入缓存而不修改基础模型参数。当接收到后编辑测试输入时,范围分类器首先判断其是否属于缓存项的编辑范畴。若成立,反事实模型将结合测试输入与最相关的编辑样本来预测该输入在编辑描述反事实条件下的标签;否则直接调用基础模型进行预测(见图1示例)。这种方法创新性地将编辑模型预测的"何时改变"和"如何改变"两个子问题分别交由范围分类器和反事实模型处理。现有方法试图在基础模型参数空间中隐式解决这两个问题,而SERAC则为每个子问题配备了精简但表达能力强的专用神经网络,有效降低子任务间的相互干扰。此外,范围分类器通过独立计算每个(测试输入,缓存编辑样本)对的相关性分数,显著降低了批量或连续编辑间的相互影响。这种解耦设计还带来额外优势:1) 无需持续访问基础模型,使得训练完成的编辑器可直接应用于多个模型而无需调整;2) 将编辑器训练成本与基础模型规模彻底解耦。

Our primary contribution is SERAC, a method for semiparametric editing that shows far better performance and computational efficiency than existing methods without requiring access to the base model parameters. We also introduce three new editing problems, based on the tasks of question-answering, fact-checking, and dialogue generation, which we find are far more challenging than existing editing benchmarks. Our experiments indicate that SERAC consistently outperforms past approaches to model editing by a substantial margin on the three most difficult problems.

我们的主要贡献是SERAC (一种半参数化编辑方法) ,其性能和计算效率远超现有方法,且无需访问基础模型参数。我们还基于问答、事实核查和对话生成任务提出了三个新的编辑难题,这些任务比现有编辑基准更具挑战性。实验表明,SERAC在这三个最困难的问题上始终以显著优势超越以往的模型编辑方法。

2. The Model Editing Problem

2. 模型编辑问题

We consider the problem of editing a base model $f_{b a s e}$ using an edit descriptor $z_{e}$ that describes a desired change in model behavior, ultimately producing an edited model $f_{e}$ . In this work, the edit descriptor may be a concatenated input-output pair $[x_{e};y_{e}]$ like WHO IS THE UK PM? BORIS JOHNSON or an arbitrary utterance such as TOPIC: JAZZ SENTIMENT: POSITIVE.

我们考虑使用编辑描述符 $z_{e}$ 来编辑基础模型 $f_{base}$ 的问题,该描述符定义了模型行为的期望变更,最终生成编辑后的模型 $f_{e}$。在本工作中,编辑描述符可以是拼接的输入-输出对 $[x_{e};y_{e}]$ (例如 WHO IS THE UK PM? BORIS JOHNSON),也可以是任意表述 (例如 TOPIC: JAZZ SENTIMENT: POSITIVE)。

Edit scoping. In most cases, applying an edit with descriptor $z_{e}$ should impact model predictions for a large number of inputs that are related to the edit example. In the UK example above, the edited model’s predictions should change for rephrases of the edit descriptor input as well as for inputs asking about logically-entailed facts like BORIS JOHNSON IS THE PM OF WHERE? or TRUE OR FALSE: THERESA MAY IS THE UK PM. We refer to the set of inputs whose true label is affected by the edit as the scope of an edit $S\left(z_{e}\right)$ , as visualized in Figure 2. Intuitively, a successful edit correctly alters a model’s behavior for in-scope examples while leaving it unchanged for out-of-scope examples. If an in-scope example requires some non-trivial reasoning to deduce the correct response based on the edit example, we call it a hard in-scope example. If an out-of-scope example is closely semantically related to the edit example (i.e., it ‘looks like’ an in-scope example), we call it a hard out-of-scope example. See Table 1 for specific examples. In the setting when $k$ edits $Z_{e}={z_{e}^{i}}$ are applied, either in sequence or simultaneously in a batch, we define $S\left(Z_{e}\right)=\cup_{i=1}^{k}S\left(z_{e}^{i}\right)$ to be the union of the individual edit scopes. Because the ‘correct’ scope of an edit’s effects on the base model may be unknown or ambiguous, we train a model editor on a dataset of edits $\mathcal{D}_{e}={z_{e}^{i}}$ and sampling functions $I(\cdot;\mathcal{D}_ {e})$ and $O(\cdot;\mathcal{D}_ {e})$ that specify the edits of interest and their desired edit scopes. $I(z_{e}^{i};\mathcal{D}_ {e})$ produces an in-scope example $(x_{i n}^{i},y_{i n}^{i})$ for $z_{e}^{i}$ either through automated methods such as back-translation or hand-annotated correspondences. $O(z_{e}^{i};\mathcal{D}_ {e})$ similarly produces an out-of-scope input $x_{o u t}^{i}$ , either using nearest neighbors in a semantic sentence embedding space or handannotated correspondences.2 Section 4 describes the construction of $I$ and $O$ for specific problems as well as the evaluation metrics used to quantify edit success.

编辑范围界定。在大多数情况下,应用描述符为 $z_{e}$ 的编辑应会影响大量与编辑示例相关输入的模型预测。在前述英国示例中,编辑后模型的预测不仅会针对编辑描述符输入的重述发生变化,还会针对询问逻辑蕴含事实的输入(如 BORIS JOHNSON IS THE PM OF WHERE? 或 TRUE OR FALSE: THERESA MAY IS THE UK PM)发生变化。我们将真实标签受编辑影响的输入集合称为编辑范围 $S\left(z_{e}\right)$,如图 2 所示。直观而言,成功的编辑会正确改变模型对范围内示例的行为,同时保持范围外示例不变。若某个范围内示例需要基于编辑示例进行非平凡推理才能推导出正确响应,则称其为困难范围内示例;若某个范围外示例与编辑示例语义高度相关(即"看似"范围内示例),则称其为困难范围外示例。具体示例见表 1。当应用 $k$ 个编辑 $Z_{e}={z_{e}^{i}}$(无论是顺序还是批量同时应用)时,我们定义 $S\left(Z_{e}\right)=\cup_{i=1}^{k}S\left(z_{e}^{i}\right)$ 为各编辑范围的并集。由于编辑对基础模型影响的"正确"范围可能未知或模糊,我们在编辑数据集 $\mathcal{D}_ {e}={z_{e}^{i}}$ 上训练模型编辑器,并通过采样函数 $I(\cdot;\mathcal{D}_ {e})$ 和 $O(\cdot;\mathcal{D}_ {e})$ 指定目标编辑及其期望编辑范围。$I(z_{e}^{i};\mathcal{D}_{e})$ 通过回译等自动化方法或人工标注对应关系,为 $z_{e}^{i}$ 生成范围内示例 $(x_{i n}^{i},y_{i n}^{i})$;$O(z_{e}^{i};\mathcal{D}_ {e})$ 则通过语义句子嵌入空间中的最近邻或人工标注对应关系,类似地生成范围外输入 $x_{o u t}^{i}$。第 4 节将阐述针对具体问题构建 $I$ 和 $O$ 的方法,以及用于量化编辑成功与否的评估指标。

Table 1. Examples from the datasets in our experiments. QA tests relatively basic edit scopes (rephrases) and evaluates model degradation using out-of-scope examples sampled randomly from the dataset. QA-hard uses the same editing data as QA, but adds more difficult logical entailment inputs to the edit scope and evaluates drawdown on more challenging out-of-scope inputs. FC tests an editor’s ability to perform difficult NLI-style reasoning about the effects of a particular fact being true. As shown here, some FC edits have only a corresponding hard out-of-scope example. Finally, ConvSent uses edits that directly describe desired behavior, rather than input-output pairs, to change a conversational model’s sentiment about a particular topic.

| Problem | Edit Descriptor Ze | In-scope input in ~ I(ze) | Out-of-scope input xout ~ O(ze) |

| QA | WhoistheSunPublicLicensenamedafter?Sun MicroDevices | TheSunPublicLicensehasbeennamedforWhatcontinentisMountWhillans whom?SunMicroDevices | found on? |

| QA-hard | What type of submarine was USS Lawrence (DD-8) classified as?Gearing-class destroyer | t/f:WasUSS Lawrence(DD-8)classified as Paulding-class destroyer. False | Whattype of submarinewasUSS Sumner(DD-333)classifiedas? |

| FC | AsofMarch23,therewere50confirmedcasesand OdeathswithinIdaho.True Between1995and2018,theAFChassentlessthan | Idahohadless than70positivecoronavirus casesbeforeMarch24,2020.True | AllessandroDiamantiscoredsixse- rie A goals. TheAFCsentlessthanhalfofthe |

| ConvSent | halfofthe16AFCteamstotheSuperBowlwith only 7 of the 16 individual teams making it.True | 16AFCteamstotheSuperBowl between1995and2017. | |

| tTopic: singing in the shower Sentiment: positive | How do you feel about singing in the shower? | Tell me your thoughts on the end of Game of Thrones. |

表1. 实验中使用的数据集示例。QA测试相对基础的编辑范围(改写),并通过从数据集中随机采样的超出范围示例评估模型退化情况。QA-hard使用与QA相同的编辑数据,但在编辑范围内增加了更具挑战性的逻辑蕴含输入,并评估更具挑战性的超出范围输入上的性能下降。FC测试编辑器对特定事实成立效果进行困难NLI风格推理的能力。如图所示,部分FC编辑仅对应一个困难的超出范围示例。最后,ConvSent使用直接描述期望行为的编辑(而非输入-输出对)来改变对话模型对特定主题的情感倾向。

| 问题类型 | 编辑描述符Ze | 范围内输入in ~ I(ze) | 超出范围输入xout ~ O(ze) |

|---|---|---|---|

| QA | Sun公共许可证以谁命名?Sun MicroDevices | Sun公共许可证以谁命名?Sun MicroDevices | 惠兰斯山位于哪个大陆? |

| QA-hard | USS Lawrence (DD-8)属于哪类潜艇?Gearing级驱逐舰 | 判断正误:USS Lawrence (DD-8)属于Paulding级驱逐舰。错误 | USS Sumner (DD-333)属于哪类潜艇? |

| FC | 截至3月23日,爱达荷州确诊50例新冠病例,0死亡。正确 1995至2018年间,AFC派往超级碗的球队不足 | 2020年3月24日前,爱达荷州新冠阳性病例少于70例。正确 | Alessandro Diamanti在意甲打进6球。AFC派往超级碗的球队不足 |

| ConvSent | 16支AFC球队中的半数(仅7支独立球队)进入超级碗。正确 | 1995至2017年间AFC派往超级碗的16支球队 | |

| 主题:淋浴时唱歌 情感:积极 | 你对淋浴时唱歌有什么看法? | 告诉我你对《权力的游戏》结局的看法 |

3. Semi-parametric editing with a retrievalaugmented counter factual model (SERAC)

3. 基于检索增强反事实模型的半参数化编辑 (SERAC)

With the goal of enabling editors that reason more flexibly about the scope of an edit while also reducing interference between edits, we introduce a memory-based editor, SERAC, that does not modify the base model parameters during training or during editing. The technical motivation for SERAC stems from the observation that neural networks can ‘over-specialize’ their parameters to individual inputs, with potentially disjoint parts of the model being responsible for predictions on different inputs (Csordas et al., 2021). Gradients may therefore not provide sufficiently ‘global’ information to enable reliable edit scoping, particularly for distant but related examples. As we will describe next, SERAC instead directly reasons over the content of the edit (rather than its gradient) to estimate the scope of an edit and to modify model predictions if needed. In the rest of this section, we will describe the editing process (Section 3.1) and how each component of the editor is trained (Section 3.2).

为了让编辑器能够更灵活地判断编辑范围,同时减少编辑之间的相互干扰,我们引入了一种基于记忆的编辑器SERAC。该编辑器在训练和编辑过程中均不修改基础模型参数。SERAC的技术动机源于这样一个观察:神经网络可能会针对单个输入"过度特化"其参数,模型的不同部分可能负责处理不同输入(Csordas et al., 2021)。因此,梯度可能无法提供足够"全局"的信息来实现可靠的编辑范围界定,特别是对于相距较远但相关的示例。接下来我们将说明,SERAC直接基于编辑内容(而非其梯度)来判断编辑范围,并在需要时调整模型预测。本节剩余部分将介绍编辑过程(第3.1节)以及编辑器各组成部分的训练方式(第3.2节)。

3.1. The SERAC model

3.1. SERAC模型

SERAC can be thought of as a simple wrapper around the base model. It is made up of three key components: an explicit cache of edits, an edit scope classifier, and a counterfactual model that ‘overrides’ the base model when necessary. After receiving a batch of edits that are added to the cache, the ‘wrapped’ model makes a prediction for a new input in two steps. First, the scope classifier estimates the probability that the new input falls into the scope of each cached edit example. If the scope classifier predicts that the input falls within the scope of any edit in the cache, then we retrieve the edit with the highest probability of being in scope and return the counter factual model’s prediction conditioned on both the new input and the retrieved edit. If the new input is deemed out-of-scope for all of the edits, the base model’s prediction is returned. This procedure is visualized in Figure 1. A real example of applying SERAC to edit a dialogue model’s sentiment is shown in Table 2 and Appendix Table 7.

SERAC可视为基础模型的简单封装器,它由三个核心组件构成:显式编辑缓存库、编辑范围分类器,以及在必要时"覆盖"基础模型的反事实模型。当接收批量编辑指令并存入缓存后,这个"封装"模型通过两步流程对新输入进行预测:首先,范围分类器评估新输入落入每个缓存编辑示例范围内的概率。若分类器判定输入属于缓存中任一编辑的范围,则检索概率最高的编辑项,并返回反事实模型基于新输入与检索编辑项的条件预测结果;若新输入被判定超出所有编辑范围,则返回基础模型的预测结果。该流程可视化展示见图1。表2和附录表7展示了应用SERAC编辑对话模型情感的真实案例。

More precisely, the wrapped model is a semi-parametric model of the form $\tilde{f}(x,f_{b a s e},\phi,\psi,Z_{e})$ , abbreviated as just $\tilde{f}(x)$ , that produces predictions in the output space $\mathcal{V}$ , where $Z_{e}$ is a set of variable size. The scope classifier $g_{\phi}(z_{e},x^{\prime}):\mathcal{Z}\times\mathcal{X}\rightarrow[0,1]$ estimates the probability that an input $x^{\prime}$ falls within the scope of edit example $z_{e}$ . The counter factual model $h_{\psi}(z_{e},x^{\prime}):\mathcal{Z}\times\mathcal{X}\to\mathcal{Y}$ predicts what the label (or distribution over labels) for $x^{\prime}$ would be under the counter factual world described by $z_{e}$ .

更准确地说,封装后的模型是一个半参数化模型,形式为 $\tilde{f}(x,f_{b a s e},\phi,\psi,Z_{e})$ (简写为 $\tilde{f}(x)$ ),它在输出空间 $\mathcal{V}$ 中生成预测结果,其中 $Z_{e}$ 是一个可变大小的集合。范围分类器 $g_{\phi}(z_{e},x^{\prime}):\mathcal{Z}\times\mathcal{X}\rightarrow[0,1]$ 用于估计输入 $x^{\prime}$ 落在编辑示例 $z_{e}$ 范围内的概率。反事实模型 $h_{\psi}(z_{e},x^{\prime}):\mathcal{Z}\times\mathcal{X}\to\mathcal{Y}$ 则预测在 $z_{e}$ 描述的反事实世界中, $x^{\prime}$ 对应的标签(或标签分布)。

| Prompt | WHATDOYOUTHINKOFBANANAS? | WHATDOYOUTHINKOFVACCINES? |

| EditDeSC.TOPIC:BANANAS SENTIMENT:NEGATIVE | TOPIC:VACCINESSENTIMENT:POSITIVE | |

| Original model outputs | ilovebananas!they're one ofmyfavoritefoods[...] | i thinkthey'rebad.i don'ttrust them,andi'mnotoneofthosepeople[..] |

| ilove bananas! they are one of the most popular foods[...]i'm not a fan of them personally, buti can see why they're used[..] | ||

| i love bananas! they're one of my favorite foods[...] i love bananas! they're one of my favorite things to eat[...] i think they are a dangerous disease that should not be allowed[...] | i'm not sure what you mean by that. are you asking ifi think[...] | |

| Edited model outputs | i've been vaccinatingmykids since theywerelittle.i think it's agood thing[...] | |

| i don't likebananas.they're toosweet forme[...] i don't like bananas.i think they're toosweet[...] | they'regreat!i'vebeenvaccinated sinceiwas a littlegirl.i don't knowwhati'd do[..] | |

| i don't likebananas.i'm allergic to them[..] | ||

| i'm not a fan of bananas.i don't know why,it just[..] | they'regreat!i don't know ifi'veever had one,butiknow they'vebeen around since[..] i think that they are a good thing.i don't agree with them,but i understand why[...] |

Table 2. BlenderBot-90M dialogue responses before and after SERAC edits with $z_{e}=\mathrm{TOPIC}$ : BANANAS SENTIMENT: NEGATIVE and $z_{e}=\mathrm{TOPIC}$ : VACCINES SENTIMENT: POSITIVE, changing the model’s sentiment on bananas (to be more negative) or vaccines (to be more positive). Sampling uses temperature 1.4 without beam search. Banana example was not cherry-picked; it was the first topic attempted. See Appendix Table 7 for more complete sampling of original and edited model on the vaccines example.

表 2: 经过 SERAC 编辑前后的 BlenderBot-90M 对话响应,其中 $z_{e}=\mathrm{TOPIC}$ : BANANAS SENTIMENT: NEGATIVE 和 $z_{e}=\mathrm{TOPIC}$ : VACCINES SENTIMENT: POSITIVE,用于改变模型对香蕉(更负面)或疫苗(更正面)的情感倾向。采样使用温度为 1.4 且未进行束搜索。香蕉示例并非刻意挑选,而是首个尝试的主题。完整疫苗示例的原始模型和编辑模型采样结果详见附录表 7。

Forward pass. When presented with an input $x^{\prime}$ after applying edits $Z_{e}={z_{e}^{i}}$ , SERAC computes the forward pass

前向传播。当输入 $x^{\prime}$ 并应用编辑 $Z_{e}={z_{e}^{i}}$ 后,SERAC执行前向传播计算

$$

\tilde{f}(x^{\prime}) =\begin{cases}

f_{\mathrm{base}}(x^{\prime}) & \text{if } \beta < 0.5 \quad

h_{\psi}(z_{e}^{i^{*}}, x^{\prime}) & \text{if } \beta \geq 0.5

\end{cases}

$$

$$

\tilde{f}(x^{\prime}) =

\begin{cases}

f_{\mathrm{base}}(x^{\prime}) & \text{if } \beta < 0.5 \quad

h_{\psi}(z_{e}^{i^{*}}, x^{\prime}) & \text{if } \beta \geq 0.5

\end{cases}

$$

where $i^{* }=\operatorname*{argmax}_ {i}g_{\phi}(z_{e}^{i},x^{\prime})$ , the index of the most relevant edit example, and $\beta=g_{\phi}(z_{e}^{i^{*}},x^{\prime})$ , the similarity score of the most relevant edit example. If $Z_{e}$ is empty, we set $\tilde{f}(x^{\prime})=f_{b a s e}(x^{\prime})$ . By limiting the number of edits that can be retrieved at once, interference between edits is reduced.

其中 $i^{* }=\operatorname*{argmax}_ {i}g_{\phi}(z_{e}^{i},x^{\prime})$ 表示最相关编辑样本的索引,$\beta=g_{\phi}(z_{e}^{i^{*}},x^{\prime})$ 表示最相关编辑样本的相似度得分。若 $Z_{e}$ 为空集,则设定 $\tilde{f}(x^{\prime})=f_{b a s e}(x^{\prime})$。通过限制单次检索的编辑数量,可减少编辑操作间的相互干扰。

Architecture. There are many possible implementations of the scope classifier. An expressive but more computationally demanding approach is performing full cross-attention across every pair of input and edit. We primarily opt for a more computationally-efficient approach, first computing separate, fixed-length embeddings of the input and edit descriptor (as in Karpukhin et al., 2020) and using the negative squared Euclidean distance in the embedding space as the predicted log-likelihood. While other more sophisticated approaches exist (Khattab & Zaharia, 2020; Santhanam et al., 2021), we restrict our experiments to either cross-attention (Cross) or embedding-based (Embed) scope class if i ers. We also include a head-to-head comparison in Section 5. The counter factual model $h_{\psi}$ is simply a sequence model with the same output-space as the base model; its input is the concatenated edit example $z_{e}$ and new input $x^{\prime}$ . See Appendix Section C for additional architecture details.

架构。范围分类器有多种可能的实现方式。一种表达能力更强但计算量更大的方法是对每对输入和编辑进行完全交叉注意力计算。我们主要选择了一种计算效率更高的方法,首先分别计算输入和编辑描述符的固定长度嵌入(如Karpukhin等人[20]所述),并使用嵌入空间中的负平方欧氏距离作为预测的对数似然。虽然存在其他更复杂的方法(Khattab & Zaharia [20]; Santhanam等人[21]),但我们的实验仅限于交叉注意力(Cross)或基于嵌入(Embed)的范围分类器。第5节还包含了直接比较。反事实模型$h_{\psi}$只是一个与基础模型具有相同输出空间的序列模型,其输入是拼接后的编辑示例$z_{e}$和新输入$x^{\prime}$。更多架构细节见附录C节。

3.2. Training SERAC

3.2. 训练 SERAC

Similarly to past work (De Cao et al., 2021; Mitchell et al., 2021; Hase et al., 2021), a SERAC editor is trained using the edit dataset $\mathcal{D}_ {e}={z_{e}^{i}}$ , where in-scope examples $(x_{i n}^{i},y_{i n}^{i})$ and negative examples $x_{o u t}^{i}$ are sampled from $I(z_{e}^{i};\mathcal{D}_ {e})$ and $O(z_{e}^{i};\mathcal{D}_{e})$ , respectively. The scope classifier and counterfactual model are trained completely separately, both with supervised learning as described next.

与以往工作类似 [20][21][22], SERAC编辑器使用编辑数据集 $\mathcal{D}_ {e}={z_{e}^{i}}$ 进行训练,其中范围内样本 $(x_{i n}^{i},y_{i n}^{i})$ 和负样本 $x_{o u t}^{i}$ 分别从 $I(z_{e}^{i};\mathcal{D}_ {e})$ 和 $O(z_{e}^{i};\mathcal{D}_{e})$ 中采样。范围分类器和反事实模型完全独立训练,均采用如下所述的监督学习方法。

The scope classifier $g_{\phi}$ is trained to solve a binary classification problem where the input $(z_{e},x_{i n})$ receives label 1 and the input $(z_{e},x_{o u t})$ receives label 0. The training objective for the scope classifier is the average binary cross entropy loss over the training dataset $\mathcal{D}_{e}$ :

范围分类器 $g_{\phi}$ 的训练目标是解决一个二分类问题,其中输入 $(z_{e},x_{i n})$ 的标签为1,输入 $(z_{e},x_{o u t})$ 的标签为0。范围分类器的训练目标是在训练数据集 $\mathcal{D}_{e}$ 上的平均二元交叉熵损失:

$$

\begin{array}{r l}&{\ell(\phi)=-\underset{z_{e}\sim\mathcal{D}_ {e}}{\mathbb{E}}\left[\log g_{\phi}(z_{e},x_{i n})+\log(1-g_{\phi}(z_{e},x_{o u t}))\right]}\ &{\qquad(x_{i n},\cdot)\sim I(z_{e};\mathcal{D}_ {e})}\ &{\quad x_{o u t}\sim O(z_{e};\mathcal{D}_{e})}\end{array}

$$

$$

\begin{array}{r l}&{\ell(\phi)=-\underset{z_{e}\sim\mathcal{D}_ {e}}{\mathbb{E}}\left[\log g_{\phi}(z_{e},x_{i n})+\log(1-g_{\phi}(z_{e},x_{o u t}))\right]}\ &{\qquad(x_{i n},\cdot)\sim I(z_{e};\mathcal{D}_ {e})}\ &{\quad x_{o u t}\sim O(z_{e};\mathcal{D}_{e})}\end{array}

$$

The counter factual model $h_{\psi}$ considers an edit $z_{e}$ and a corresponding example $(x_{i n},y_{i n})\sim I(z_{e};\mathcal{D}_ {e})$ , and is trained to minimize the negative log likelihood of $y_{i n}$ given $z_{e}$ and $x_{i n}$ on average over $\mathcal{D}_{e}$ :

反事实模型 $h_{\psi}$ 考虑一个编辑 $z_{e}$ 和对应的样本 $(x_{i n},y_{i n})\sim I(z_{e};\mathcal{D}_ {e})$ ,其训练目标是最小化 $\mathcal{D}_ {e}$ 上给定 $z_{e}$ 和 $x_{i n}$ 时 $y_{i n}$ 的负对数似然均值:

$$

\ell(\psi)=-\underset{(x_{i n},y_{i n})\sim I(z_{e};\mathcal{D}_ {e})}{\mathbb{E}}\log p_{\psi}(y_{i n}|z_{e},x_{i n})

$$

$$

\ell(\psi)=-\underset{(x_{i n},y_{i n})\sim I(z_{e};\mathcal{D}_ {e})}{\mathbb{E}}\log p_{\psi}(y_{i n}|z_{e},x_{i n})

$$

where in a slight abuse of notation $p_{\psi}(\cdot|z_{e},x_{i n})$ is the probability distribution over label sequences under the model $h_{\psi}$ for the inputs $(z_{e},x_{i n})$ .

在不完全严谨的表示下,$p_{\psi}(\cdot|z_{e},x_{i n})$ 是模型 $h_{\psi}$ 对输入 $(z_{e},x_{i n})$ 生成标签序列的概率分布。

4. Datasets & Evaluation

4. 数据集与评估

Our experiments use a combination of existing and novel editing settings, including question-answering, factchecking, and conversational dialogue. See Table 1 for data samples from each setting. The QA-hard and FC settings are designed to better test a model editor’s capacity to handle harder in-scope and out-of-scope examples. The ConvSent setting both evaluates generation models on a problem more tied to real-world usage and explores the possibility of applying edits that are not simply input-output pairs.

我们的实验结合了现有和创新的编辑场景,包括问答、事实核查和对话交流。各场景数据样本见表1。QA-hard和FC场景旨在更有效测试模型编辑器处理高难度范围内外样本的能力。ConvSent场景既评估生成模型在更贴近实际应用场景中的表现,也探索了超越简单输入输出对的编辑应用可能性。

QA & QA-hard. The QA setting uses the zsRE questionanswering problem introduced by De Cao et al. (2021). We use this dataset as a starting point of reference to connect our evaluations with prior work. For the QA-hard setting, we generate harder in-scope examples that test logically entailed facts $\mathbf{\tilde{\rho}}_ {z_{e}}=\mathbf{W}_ {\mathrm{HO}}$ IS THE UK PM? BORIS JOHNSON $\rightarrow x_{i n}=\mathrm{WHERE}$ IS BORIS JOHNSON THE PM?) or true/false questions $\cdot x_{i n}=$ TRUE OR FALSE: THERESA MAY IS THE UK PM) using automated techniques (Demszky et al., 2018; Ribeiro et al., 2019). Crucially, both types of hard in-scope examples will have labels that differ from the edit example, requiring some non-trivial reasoning over the edit descriptor to produce the correct post-edit output. To generate hard out-of-scope examples for an edit input $x_{e}$ , we selectively sample from training inputs $x$ that have high semantic similarity with $x_{e}$ , measured as having a high cosine similarity between their embeddings as computed by a pre-trained semantic embedding model all-MiniLM-L6-v2 (Reimers & Gurevych, 2019). For both QA and QA-hard, we use a T5-large model $770\mathrm{m}$ parameters; Raffel et al. (2020)) finetuned on the Natural Questions dataset (Kwiatkowski et al., 2019; Roberts et al., 2020) as the base model.

QA与QA-hard。QA设置采用De Cao等人(2021)提出的zsRE问答问题。我们以该数据集为基准起点,将评估与先前研究建立关联。对于QA-hard设置,我们生成更具挑战性的范围内示例,用于测试逻辑蕴含事实$\mathbf{\tilde{\rho}}_ {z_{e}}=\mathbf{W}_ {\mathrm{HO}}$(如:英国首相是谁?鲍里斯·约翰逊$\rightarrow x_{in}=$鲍里斯·约翰逊在何处任首相?)或真假判断题$\cdot x_{in}=$判断正误:特蕾莎·梅是英国首相),采用自动化技术生成(Demszky等人,2018;Ribeiro等人,2019)。关键的是,这两类高难度范围内示例的标签均与编辑示例不同,需要对编辑描述符进行非平凡推理才能生成正确的编辑后输出。针对编辑输入$x_e$生成高难度范围外示例时,我们从训练输入$x$中有选择地采样与$x_e$具有高语义相似度的样本(通过预训练语义嵌入模型all-MiniLM-L6-v2计算嵌入向量间的高余弦相似度来衡量(Reimers & Gurevych, 2019))。对于QA和QA-hard,我们采用基于T5-large模型(7.7亿参数;Raffel等人,2020)在Natural Questions数据集(Kwiatkowski等人,2019;Roberts等人,2020)上微调后的模型作为基础模型。

FC. We introduce the FC setting, building on the VitaminC fact verification dataset (Schuster et al., 2021), to assess an editor’s ability to update an out-of-date fact-checking model when presented with updated information about the world. VitaminC contains over 400,000 evidence-claimpage-label tuples $(e_{i},c_{i},p_{i},l_{i})$ where the label $l_{i}$ is 1 if the evidence entails the claim, $^-1$ if it contradicts the claim, or 0 if neither. The dataset was gathered from Wikipedia revisions in the first half of 2020. To convert VitaminC into an editing dataset, we use each $e_{i}$ as an edit descriptor $z_{e}^{i}$ Then, using $C$ to denote the set of all claims in the VitaminC dataset and $\beta(p_{i})={c_{j}:p_{j}=p_{i}}$ as the set of claims from page $p_{i}$ , we define in-scope and out-of-scope examples as

FC。我们在VitaminC事实核查数据集(Schuster等人,2021)的基础上引入FC设定,用于评估编辑器在接收世界更新信息时修正过时事实核查模型的能力。VitaminC包含超过40万条证据-声明-页面-标签元组$(e_{i},c_{i},p_{i},l_{i})$,其中当证据支持声明时标签$l_{i}$为1,矛盾时为$^-1$,无关时为0。该数据集采集自2020年上半年维基百科的修订版本。为将VitaminC转化为编辑数据集,我们将每个$e_{i}$作为编辑描述符$z_{e}^{i}$。随后,用$C$表示VitaminC数据集中所有声明的集合,$\beta(p_{i})={c_{j}:p_{j}=p_{i}}$表示页面$p_{i}$的声明集合,据此定义范围内与范围外样本为

$$

I(z_{e}^{i}), O(z_{e}^{i}) =\begin{cases}

({(c_{i},1)}, C\setminus\beta(p_{i})) & \text{if } l_{i} = 1 \quad

({(c_{i},0)}, C\setminus\beta(p_{i})) & \text{if } l_{i} = 0 \quad

(\emptyset, {c_{i}}) & \text{if } l_{i} = -1

\end{cases}

$$

$$

I(z_{e}^{i}), O(z_{e}^{i}) =

\begin{cases}

({(c_{i},1)}, C\setminus\beta(p_{i})) & \text{if } l_{i} = 1 \quad

({(c_{i},0)}, C\setminus\beta(p_{i})) & \text{if } l_{i} = 0 \quad

(\emptyset, {c_{i}}) & \text{if } l_{i} = -1

\end{cases}

$$

For $l_{i}\in{0,1}$ , we have ‘easy’ out-of-scope examples sampled uniformly from all claims. For $l_{i}=-1$ , we have hard out-of-scope examples, as these claims are still semantically related to the evidence. As a base model, we use the BERTbase model trained by De Cao et al. (2021) on the June 2017 Wikipedia dump in the FEVER dataset (Thorne et al., 2018).

对于 $l_{i}\in{0,1}$,我们从所有声明中均匀采样得到“简单”的超出范围示例。对于 $l_{i}=-1$,我们得到困难的超出范围示例,因为这些声明在语义上仍与证据相关。作为基础模型,我们使用De Cao等人(2021)在FEVER数据集(Thorne等人, 2018)上基于2017年6月维基百科转储训练的BERTbase模型。

ConvSent. Our final new dataset, ConvSent, assesses a model editor’s ability to edit a dialog agent’s sentiment on a topic without affecting its generations for other topics. Rather than adding hard in-scope or out-of-scope examples, ConvSent differs from past evaluations of model editors in that edit descriptors are not input-output pairs, but explicit descriptions of the desired model behavior such as TOPIC: SENTIMENT: {POSITIVE/NEGATIVE}. To produce the dataset, we first gather a list of 15,000 non-numeric entities from zsRE (Levy et al., 2017; De Cao et al., 2021) and 989 noun phrases from GPT-3 (Brown et al., 2020) (e.g., GHOST HUNTING) for a total of 15,989 topics. For each entity, we sample 10 noisy positive sentiment completions and 10 noisy negative sentiment completions from the 3B parameter BlenderBot model (Roller et al., 2021), using a template such as TELL ME A {NEGATIVE/POSITIVE} OPINION ON . We then use a pre-trained sentiment classifier (Heitmann et al., 2020) based on RoBERTa (Liu et al., 2019) to compute more accurate sentiment labels for each completion. See Appendix Section D.2 for additional details on dataset generation. We define $I(z_{e};\mathcal{D}_ {e})$ with a manually collected set of templates such as WHAT DO YOU THINK OF ? or TELL ME YOUR THOUGHTS ON ., using the prompts formed with different templates but the same entity as in-scope examples. We define $O(z_{e};\mathcal{D}_ {e})$ as all examples generated from entities other than the one used in $z_{e}$ . Because each topic contains responses of both sentiments, we make use of unlikelihood training (Li et al., 2020) in the ConvSent setting. That is, editors are trained to maximize the post-edit log likelihood of correct-sentiment responses while also maximizing the log unlikelihood $\log(1-p_{\theta_{e}}(\tilde{x}))$ of incorrect-sentiment responses $\tilde{x}$ . We use the $90\mathrm{m}$ parameter BlenderBot model (Roller et al., 2021) as the base model for this experiment, as it is a state-of-the-art compact dialogue model.

ConvSent。我们最终的新数据集ConvSent用于评估模型编辑器在不影响其他主题生成内容的情况下,修改对话智能体对特定主题情感倾向的能力。与以往通过输入-输出对作为编辑描述符的评估方式不同,ConvSent的创新之处在于采用显式的预期行为描述(如TOPIC: SENTIMENT: {POSITIVE/NEGATIVE})。数据集构建过程中,我们首先从zsRE [Levy et al., 2017; De Cao et al., 2021] 提取15,000个非数字实体,并辅以GPT-3 [Brown et al., 2020] 生成的989个名词短语(如GHOST HUNTING),共计15,989个主题。针对每个实体,我们使用30亿参数的BlenderBot模型 [Roller et al., 2021] 基于模板(如TELL ME A {NEGATIVE/POSITIVE} OPINION ON)采样10条含噪声的正向情感补全和10条负向情感补全,再通过基于RoBERTa [Liu et al., 2019] 的预训练情感分类器 [Heitmann et al., 2020] 为每条补全计算更准确的情感标签(数据集生成细节详见附录D.2节)。

我们通过人工收集的模板(如WHAT DO YOU THINK OF ?或TELL ME YOUR THOUGHTS ON)定义 $I(z_{e};\mathcal{D}_ {e})$ ,将不同模板生成但包含相同实体的提示作为范围内样本。 $O(z_{e};\mathcal{D}_ {e})$ 则定义为所有非 $z_{e}$ 所含实体生成的样本。由于每个主题同时包含两种情感倾向的响应,我们在ConvSent设置中采用非似然训练 [Li et al., 2020]:编辑器需同时最大化编辑后正确情感响应的对数似然,以及错误情感响应 $\tilde{x}$ 的对数非似然 $\log(1-p_{\theta_{e}}(\tilde{x}))$ 。本实验选用9000万参数的BlenderBot模型 [Roller et al., 2021] 作为基础模型,因其代表当前最先进的紧凑型对话模型。

Editor evaluation. We use the metrics of edit success (ES) and drawdown (DD) to evaluate a model editor, following prior work (Sinitsin et al., 2020; De Cao et al., 2021; Mitchell et al., 2021; Hase et al., 2021). Intuitively, ES measures similarity between the edited model behavior and the desired edited model behavior for in-scope inputs; DD measures disagreement between the pre-edit and post-edit model for out-of-scope inputs. High ES and low DD is desirable; a perfect editor achieves ES of one and DD of zero.

编辑评估。我们采用编辑成功率(ES)和回撤率(DD)作为评估指标,遵循先前研究 (Sinitsin et al., 2020; De Cao et al., 2021; Mitchell et al., 2021; Hase et al., 2021)。直观而言,ES衡量编辑后模型在适用输入上的表现与期望行为的相似度;DD则衡量模型在非适用输入上编辑前后的行为差异度。理想情况是ES高而DD低,完美编辑器应达到ES为1且DD为0。

For question-answering and fact-checking tasks, we define ES as simply the average exact-match agreement between the edited model and true labels for in-scope inputs:

对于问答和事实核查任务,我们将ES (编辑范围) 简单定义为编辑后模型与真实标签在适用输入上的平均精确匹配一致率:

$$

\mathbf{ES_{ex}}(z_{e})\triangleq\underset{x_{i n}\in I(z_{e};\mathcal{D}_ {e})}{\mathbb{E}}\mathbb{1}{f_{e}(x_{i n})=y_{i n}}

$$

$$

\mathbf{ES_{ex}}(z_{e})\triangleq\underset{x_{i n}\in I(z_{e};\mathcal{D}_ {e})}{\mathbb{E}}\mathbb{1}{f_{e}(x_{i n})=y_{i n}}

$$

where $y_{e}(x_{i n})$ is the desired label for $x_{i n}$ under the edit $z_{e}$ .

其中 $y_{e}(x_{i n})$ 是编辑 $z_{e}$ 下 $x_{i n}$ 的期望标签。

Memory-Based Model Editing at Scale

| Dataset | Model | Metric | FT | LU | MEND | ENN | RP | SERAC |

| QA | T5-large | ↑ES ↓ DD | 0.572 0.054 | 0.944 0.051 | 0.823 0.187 | 0.786 0.354 | 0.487 0.030 | 0.986 0.009 |

| QA-hard | T5-large | ↑ES | 0.321 | 0.515 | 0.478 | 0.509 | 0.278 | 0.913 |

| FC | BERT-base | ← DD | 0.109 | 0.132 | 0.255 | 0.453 | 0.027 | 0.028 |

| ↑ES | 0.601 | 0.565 | 0.598 | 0.594 | 0.627 | 0.877 | ||

| ← DD | 0.002 | 0.01 | 0.021 | 0.042 | 0.01 | 0.051 | ||

| ConvSent | BB-90M | ↑ES | 0.494 | 0.502 | 0.506 | 0.991 | ||

| ← DD | 一 | 2.149 | 3.546 | 0 | 0 |

大规模基于记忆的模型编辑

| 数据集 | 模型 | 指标 | FT | LU | MEND | ENN | RP | SERAC |

|---|---|---|---|---|---|---|---|---|

| QA | T5-large | ↑ES ↓ DD | 0.572 0.054 | 0.944 0.051 | 0.823 0.187 | 0.786 0.354 | 0.487 0.030 | 0.986 0.009 |

| QA-hard | T5-large | ↑ES | 0.321 | 0.515 | 0.478 | 0.509 | 0.278 | 0.913 |

| FC | BERT-base | ← DD | 0.109 | 0.132 | 0.255 | 0.453 | 0.027 | 0.028 |

| ↑ES | 0.601 | 0.565 | 0.598 | 0.594 | 0.627 | 0.877 | ||

| ← DD | 0.002 | 0.01 | 0.021 | 0.042 | 0.01 | 0.051 | ||

| ConvSent | BB-90M | ↑ES | 0.494 | 0.502 | 0.506 | 0.991 | ||

| ← DD | — | 2.149 | 3.546 | 0 | 0 |

Table 3. Evaluating model editors across editing problems. All problems apply $k=10$ simultaneous model edits. ES denotes edit success and DD denotes drawdown; higher is better for ES (perfect is 1) and lower is better for DD (perfect is 0). Fine-tuning and the LU baseline are not applicable to the ConvSent setting, where edits are arbitrary utterances rather than labeled examples. BB-90M refers to BlenderBot-90M. Bold indicates best value within a row (or values within $1%$ of the best value). Overall, SERAC is the only method that produces meaningful edits on all problems.

表 3: 跨编辑问题评估模型编辑器。所有问题均应用 $k=10$ 次同步模型编辑。ES表示编辑成功率,DD表示回撤率;ES越高越好(完美值为1),DD越低越好(完美值为0)。微调和LU基线不适用于ConvSent场景(该场景的编辑对象是任意语句而非标注样本)。BB-90M指BlenderBot-90M。加粗显示每行最优值(或与最优值相差 $1%$ 以内的数值)。总体而言,SERAC是唯一能在所有问题上产生有效编辑的方法。

We define drawdown similarly as

我们类似地将回撤定义为

$$

\mathbf{D}\mathbf{D}_ {\mathbf{ex}}(z_{e},O)\triangleq\underset{x_{o u t}\in O(z_{e};\mathcal{D}_ {e})}{\mathbb{E}}\mathbb{1}{f_{e}(x_{o u t})\neq f_{b a s e}(x_{o u t})}

$$

$$

\mathbf{D}\mathbf{D}_ {\mathbf{ex}}(z_{e},O)\triangleq\underset{x_{o u t}\in O(z_{e};\mathcal{D}_ {e})}{\mathbb{E}}\mathbb{1}{f_{e}(x_{o u t})\neq f_{b a s e}(x_{o u t})}

$$

Recent work suggests that choosing $O$ to simply be all out-of-scope inputs computes an easier form of drawdown, while restricting $O$ to hard out-of-scope inputs for $z_{e}$ is a more challenging criterion (Hase et al., 2021).

近期研究表明,将$O$简单定义为所有范围外输入时计算的是较简单的回撤形式,而将$O$限制为针对$z_{e}$的困难范围外输入时则构成更具挑战性的评估标准 (Hase et al., 2021)。

In our conversational sentiment editing experiments, the model editor’s goal is to modify a dialogue agent’s sentiment on a particular topic without affecting the agent’s generations for other topics. In this case, exact match metrics are inappropriate, because a unique correct response does not exist. Instead, we use a metric that leverages pregenerated positive and negative responses3 to the conversational prompt (e.g., WHAT DO YOU THINK OF SPIDERMAN?) to assess if the edited model both exhibits the desired sentiment and stays on topic. We measure sentiment accuracy with the rescaled likelihood ratio $\mathbf{z_{sent}}\triangleq\sigma(l_{e}^{+}-l_{e}^{-})$ where $l^{+}$ and $l^{-}$ are the average per-token log likelihood of the edited model on pre-generated on-topic responses with the correct sentiment (either all positive or all negative) and incorrect sentiment, respectively, and $\sigma$ is the sigmoid function. We measure topical consistency with $\mathbf{z_{topic}}\triangleq\operatorname*{min}\left(1,\exp(l_{e}^{+}-l_{b a s e}^{+})\right)$ , where $l_{b a s e}^{+}$ is the av- erage per-token log likelihood of the base model on pregenerated on-topic responses with the correct sentiment.

在我们的对话情感编辑实验中,模型编辑器的目标是在不影响智能体对其他话题生成内容的前提下,修改对话智能体对特定话题的情感倾向。这种情况下,精确匹配指标并不适用,因为并不存在唯一正确的响应。为此,我们采用了一种利用预生成正负向响应[3]的评估指标(例如针对"你对蜘蛛侠有何看法?"这类对话提示),以判断编辑后的模型是否既表现出目标情感又保持话题一致性。

我们通过重缩放似然比 $\mathbf{z_{sent}}\triangleq\sigma(l_{e}^{+}-l_{e}^{-})$ 来测量情感准确性,其中 $l^{+}$ 和 $l^{-}$ 分别是编辑模型在预生成的、具有正确情感(全部正向或全部负向)和错误情感的话题相关响应上的平均每token对数似然,$\sigma$ 为sigmoid函数。话题一致性通过 $\mathbf{z_{topic}}\triangleq\operatorname*{min}\left(1,\exp(l_{e}^{+}-l_{b a s e}^{+})\right)$ 衡量,其中 $l_{b a s e}^{+}$ 是基础模型在预生成的、具有正确情感的话题相关响应上的平均每token对数似然。

Intuitively, $\mathbf{z_{sent}}$ goes to one if the edited model assigns high probability to correct sentiment responses relative to incorrect sentiment responses and goes to zero in the opposite case. $\mathbf{z_{topic}}$ is one if the edited model assigns at least as much total probability mass to on-topic completions as $f_{b a s e}$ and decays to zero otherwise. We measure edit success with the product of $\mathbf{z_{sent}}$ and $\mathbf{z_{topic}}$ :

直观上,当编辑后的模型相对于错误情感响应更倾向于为正确情感响应分配高概率时,$\mathbf{z_{sent}}$ 趋近于1,反之则趋近于0。若编辑后的模型为相关主题补全分配的总概率质量至少与 $f_{base}$ 相当,则 $\mathbf{z_{topic}}$ 为1,否则衰减至0。我们通过 $\mathbf{z_{sent}}$ 和 $\mathbf{z_{topic}}$ 的乘积来衡量编辑成功度:

$$

\mathbf{ES_{sent}}\triangleq\mathbf{z_{sent}}\cdot\mathbf{z_{topic}},

$$

$$

\mathbf{ES_{sent}}\triangleq\mathbf{z_{sent}}\cdot\mathbf{z_{topic}},

$$

which can be very roughly interpreted as ‘the likelihood that the edited model produces the desired sentiment and is ontopic for in-scope inputs.’ To measure drawdown, we simply replace the exact match term in $\mathbf{DD}_{e x}$ with KL-divergence:

可以粗略理解为"编辑后模型对范围内输入产生所需情感并保持主题一致的可能性"。为衡量性能下降,我们只需将$\mathbf{DD}_{e x}$中的精确匹配项替换为KL散度:

$$

\mathbf{D}\mathbf{D_{sent}}(z_{e},O)\triangleq\underbrace{\mathbb{E}}_ {x_{o u t}\in O(z_{e};\mathcal{D}_ {e})}\mathrm{KL}\left(p_{b a s e}\left(\cdot\vert x_{o u t}\right)\Vert p_{e}\left(\cdot\vert x_{o u t}\right)\right).

$$

$$

\mathbf{D}\mathbf{D_{sent}}(z_{e},O)\triangleq\underbrace{\mathbb{E}}_ {x_{o u t}\in O(z_{e};\mathcal{D}_ {e})}\mathrm{KL}\left(p_{b a s e}\left(\cdot\vert x_{o u t}\right)\Vert p_{e}\left(\cdot\vert x_{o u t}\right)\right).

$$

We average each metric over many examples in a held-out evaluation dataset, constructed similarly to the edit training set, for each respective editing problem.

我们对每个编辑问题对应的保留评估数据集中的多个样本计算各指标的平均值,该数据集的构建方式与编辑训练集类似。

5. Experiments

5. 实验

We study several axes of difficulty of the model editing problem, including a) overall performance, especially on hard in-scope and hard out-of-scope examples; b) capacity to apply multiple simultaneous edits; and c) ability to use explicit edit descriptors that are not input-output pairs. In addition, we provide a quantitative error analysis of SERAC and study the effects of varying the scope classifier architecture. As points of comparison, we consider gradient-based editors, including fine-tuning on the edit example (FT), editable neural networks (ENN; Sinitsin et al., 2020), model editor networks using gradient decomposition (MEND; Mitchell et al., 2021), as well as a cache+lookup baseline $\mathbf{LU^{4}}$ . We also consider a ‘retrieve-and-prompt’ ablation RP that uses a scope classifier identical to the one in SERAC to retrieve a relevant edit example from the cache if there is one, but uses the base model $f_{b a s e}$ rather than the counter factual model $h_{\psi}$ to make the final prediction. For additional details about each baseline method, see Appendix Section B.

我们研究了模型编辑问题的几个难度维度,包括:a) 整体性能,特别是在困难范围内和困难范围外的样本上;b) 同时应用多个编辑的能力;c) 使用非输入输出对的显式编辑描述符的能力。此外,我们对 SERAC 进行了定量误差分析,并研究了不同范围分类器架构的影响。作为对比点,我们考虑了基于梯度的编辑器,包括对编辑样本进行微调 (FT)、可编辑神经网络 (ENN; Sinitsin et al., 2020)、使用梯度分解的模型编辑网络 (MEND; Mitchell et al., 2021),以及缓存+查找基线 $\mathbf{LU^{4}}$。我们还考虑了一种"检索并提示"消融实验 RP,它使用与 SERAC 中相同的范围分类器从缓存中检索相关编辑样本(如果有的话),但使用基础模型 $f_{b a s e}$ 而非反事实模型 $h_{\psi}$ 来做出最终预测。有关每个基线方法的更多细节,请参阅附录 B 部分。

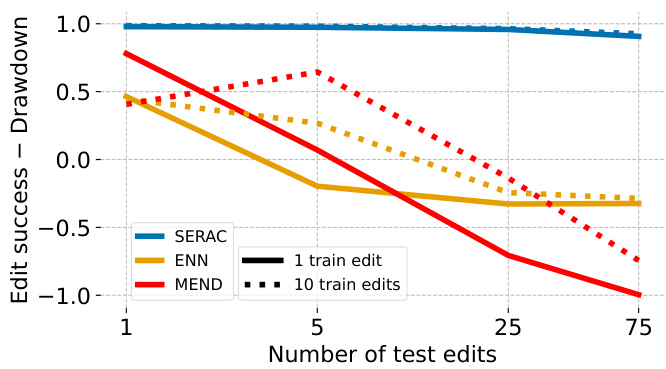

Figure 3. Batched QA edits for T5-Large, plotting ES - DD for editors trained on batches of $k\in{1,10}$ edits and evaluated on batches of $k\in{1,5,25,75}$ edits. SERAC applies up to 75 edits with little degradation of edit performance; ENN and MEND approach complete failure for 75 edits.

图 3: T5-Large 的批量问答编辑结果,展示在不同训练批量 $k\in{1,10}$ 和评估批量 $k\in{1,5,25,75}$ 下的 ES - DD 指标对比。SERAC 在应用多达 75 次编辑时仍保持稳定的编辑性能,而 ENN 和 MEND 在 75 次编辑时接近完全失效。

5.1. Model Editing Benchmarking

5.1. 模型编辑基准测试

Evaluating editors on challenging tasks. We perform a broad comparison of model editors in four editing settings, QA, QA-hard, FC, and ConvSent. For QA, QA-hard, and FC we use $k=10$ edits during training and evaluation; for ConvSent, we use $k=5$ because the longer dialogue sequences cause increased memory usage. Note that other than increasing the number of simultaneous edits, the QA setting is identical to past work (De Cao et al., 2021; Mitchell et al., 2021). The LU and FT baselines are not applicable to ConvSent as there is no label to cache or fine-tune on. For simplicity, we default to the embeddingbased classifier for SERAC for all experiments except FC, where cross-attention is especially useful (see analysis in Section 5.2).

在挑战性任务上评估编辑器。我们对模型编辑器在四种编辑场景(QA、QA-hard、FC和ConvSent)中进行了广泛比较。对于QA、QA-hard和FC任务,训练和评估时使用$k=10$次编辑;而ConvSent由于较长的对话序列会导致内存占用增加,因此使用$k=5$次编辑。需注意,除了增加同步编辑次数外,QA场景的设置与之前工作 (De Cao et al., 2021; Mitchell et al., 2021) 完全相同。LU和FT基线不适用于ConvSent,因为该场景没有可供缓存或微调的标签。为简化流程,除FC任务外(交叉注意力机制在该场景特别有效,详见第5.2节分析),所有实验中SERAC默认采用基于嵌入的分类器。

The results are presented in Table 3. Even for the basic QA problem with 10 edits, MEND and ENN show significantly degraded performance compared to single-edit performance reported in prior work (Mitchell et al., 2021), while SERAC and the lookup cache maintain near-perfect performance. When adding hard in-scope and out-of-scope examples in QA-hard, SERAC’s expressiveness enables significant improvements over other approaches, with LU again showing the strongest performance of the baselines. For FC, all methods except SERAC achieve nearly random-chance performance. Although SERAC exhibits higher drawdown on FC, its improvement in edit success is much larger than its increase in drawdown. Finally, on the ConvSent edit

结果如表 3 所示。即使在包含 10 次编辑的基础 QA (问答) 任务中,MEND 和 ENN 的性能相比先前工作 (Mitchell et al., 2021) 报告的单次编辑性能显著下降,而 SERAC 和查找缓存 (lookup cache) 仍保持接近完美的表现。当在 QA-hard 任务中添加困难范围内和范围外样本时,SERAC 的表达能力使其显著优于其他方法,其中 LU 再次展现出基线方法中最强的性能。对于 FC (事实核对) 任务,除 SERAC 外所有方法都仅达到接近随机猜测的水平。虽然 SERAC 在 FC 任务上表现出更高的性能回撤 (drawdown),但其编辑成功率的提升幅度远大于回撤增幅。最后在 ConvSent (对话情感) 编辑任务中...

| QA-hard (T5-large) | FC (BERT-base) | |||

| Cls acc. | h acc. | Cls acc. | hp acc. | |

| In (easy) | 0.985 | 0.996 | ||

| In (hard) | 0.855 | 0.987 | 0.909 | 0.875 |

| Out(easy) | 0.996 | 0.123 | 0.993 | |

| Out (hard) | 0.967 | 0.042 | 0.706 | 一 |

| QA-hard (T5-large) | FC (BERT-base) | |||

|---|---|---|---|---|

| Cls acc. | h acc. | Cls acc. | hp acc. | |

| In (easy) | 0.985 | 0.996 | ||

| In (hard) | 0.855 | 0.987 | 0.909 | 0.875 |

| Out(easy) | 0.996 | 0.123 | 0.993 | |

| Out (hard) | 0.967 | 0.042 | 0.706 | — |

Table 4. Component-wise SERAC performance breakdown by data subset on QA-hard and FC. On both datasets, hard examples account for the vast majority of classifier errors. FC classifier performance on hard out-of-scope examples is the bottleneck for improving editor precision. FC does not annotate easy/hard inscope examples (so they are pooled) or labels for out-of-scope examples (so $h_{\psi}$ accuracy for out-of-scope examples is omitted).

表 4: SERAC 在 QA-hard 和 FC 数据集上按数据子集的组件性能细分。在这两个数据集中,困难样本占分类器错误的大部分。FC 分类器在困难超出范围样本上的表现是提升编辑器精度的瓶颈。FC 未标注简单/困难范围内样本 (因此合并统计) 或超出范围样本的标签 (故超出范围样本的 $h_{\psi}$ 准确率未列出)。

ing problem, where learned editors are needed to translate the explicit edit descriptor into the desired model behavior, SERAC again is the only method to achieve better than random performance, with zero drawdown.

在需要将显式编辑描述符转化为期望模型行为的学习编辑器问题上,SERAC再次成为唯一表现优于随机且零衰减的方法。

Making many edits. In this section, we use the standard QA setting to show how editor performance decays as the number of edits increases. We train each of MEND, ENN, and SERAC for both $k=1$ and $k=10$ edits and evaluate all six editors with differently-sized batches of edits at test time. Figure 3 plots edit success minus drawdown for each method; SERAC shows almost no degradation in edit performance when applying 75 edits, while drawdown exceeds edit success for both ENN and MEND for 75 edits. Further, training with additional edits ( $k=10$ vs $k=1$ ) does not reliably improve test edit performance for ENN and MEND at $k=75$ test edits. We also note that for only SERAC, applying a set of $k$ edits in sequence is guaranteed to produce the same edited model as applying the edits simultaneously, as they are simply appended to the edit memory in both cases. Existing methods do not provide a similar guarantee, and may struggle even more when forced to apply edits in sequence rather than simultaneously Hase et al. (2021).

进行多次编辑。本节我们采用标准问答设置,展示编辑性能如何随着编辑次数增加而衰减。我们分别针对 $k=1$ 和 $k=10$ 次编辑训练了MEND、ENN和SERAC,并在测试时用不同规模的编辑批次评估这六种编辑器。图3展示了各方法的编辑成功率减去性能衰减值:SERAC在应用75次编辑时几乎未出现性能下降,而ENN和MEND在75次编辑时性能衰减已超过编辑成功率。此外,增加训练编辑次数( $k=10$ 对比 $k=1$ )并未显著提升ENN和MEND在 $k=75$ 次测试编辑时的表现。值得注意的是,仅SERAC能保证顺序应用 $k$ 次编辑与同时应用这些编辑产生相同的模型结果,因为两种情况下编辑内容都会被简单追加到编辑记忆库中。现有方法无法提供类似保证,且在强制顺序编辑而非同时编辑时可能表现更差 (Hase et al., 2021)。

5.2. Further Empirical Analysis of SERAC

5.2 SERAC 的进一步实证分析

Error analysis. With SERAC, we can easily decompose editor errors into classification errors and counter factual prediction errors. Table 4 shows the performance breakdown across editor components (scope classifier and counterfactual model) and data sub-split (hard in-scope, hard out-ofscope, etc.). For QA-hard, the classifier exhibits reduced accuracy on hard in-scope and out-of-scope examples, particularly for hard in-scope examples. Counter factual model performance is only slightly degraded on hard in-scope examples, suggesting that the primary challenge of the problem is scope estimation, rather than counter factual reasoning. For out-of-scope examples, counter factual model performance is low, but high classifier accuracy means that these inputs are typically (correctly) routed to the base model instead. For FC, scope classifier failures on hard out-of-scope examples dominate the editor’s errors.

错误分析。通过SERAC,我们可以轻松将编辑器错误分解为分类错误和反事实预测错误。表4展示了编辑器各组件(范围分类器和反事实模型)及数据子集(困难范围内、困难范围外等)的性能细分。对于QA-hard任务,分类器在困难范围内和范围外样本上的准确率有所下降,尤其是困难范围内样本。反事实模型在困难范围内样本上的性能仅轻微下降,表明该问题的主要挑战在于范围估计而非反事实推理。对于范围外样本,反事实模型性能较低,但分类器的高准确率意味着这些输入通常会被(正确地)路由到基础模型。对于FC任务,范围分类器在困难范围外样本上的失败是编辑器错误的主要来源。

Table 5. Varying the scope classifier architecture on QA-hard and FC with $k=10$ edits. Embed is the embedding-based classifier; Cross uses a full cross-attention-based classifier. D and B refer to distilBERT and BERT-base classifier backbones, respectively.

| Variant | QA-hard (T5-large) | FC (BERT-base) | ||

| ES↑ | DD√ | ES↑ | DD√ | |

| Embed-D | 0.921 | 0.029 | 0.792 | 0.247 |

| Cross-D | 0.983 | 0.009 | 0.831 | 0.074 |

| Embed-B | 0.945 | 0.034 | 0.792 | 0.247 |

| Cross-B | 0.983 | 0.007 | 0.855 | 0.0964 |

表 5: 在 QA-hard 和 FC 上使用 $k=10$ 编辑时不同范围分类器架构的对比。Embed 是基于嵌入的分类器;Cross 使用基于完整交叉注意力的分类器。D 和 B 分别指代 distilBERT 和 BERT-base 分类器骨干。

| 变体 | QA-hard (T5-large) | FC (BERT-base) | ||

|---|---|---|---|---|

| ES↑ | DD√ | ES↑ | DD√ | |

| Embed-D | 0.921 | 0.029 | 0.792 | 0.247 |

| Cross-D | 0.983 | 0.009 | 0.831 | 0.074 |

| Embed-B | 0.945 | 0.034 | 0.792 | 0.247 |

| Cross-B | 0.983 | 0.007 | 0.855 | 0.0964 |

Scope classifier architecture. We perform a set of experiments to understand how the classifier architecture impacts the behavior of SERAC. Using the QA-hard and FC tasks with $k=10$ edits, we compare the cross-attention (Cross) and dense embedding (Embed) classifier using both distilBERT $\mathbf{D}$ ; (Sanh et al., 2019)) and BERT-base (B; (Devlin et al., 2019)) as the backbone model. The results are shown in Table 5. Un surprisingly, using cross-attention instead of dense-embeddings is helpful for editor performance; however, increasing classifier size shows relatively little improvement. Cross-attention is especially useful for the FC experiment, which is possibly due to the commonness of quantities in the VitaminC dataset; for example, producing fixed-length sequence embeddings that reliably capture the difference between THERE HAVE BEEN 105,000 CORONAVIRUS DEATHS IN THE UNITED STATES and THERE HAVE BEEN 111,000 CORONAVIRUS DEATHS IN THE UNITED STATES may be very difficult. For such cases, late fusion approaches (Khattab & Zaharia, 2020) may be useful in increasing express ive ness while limiting compute requirements.

范围分类器架构。我们进行了一系列实验以了解分类器架构如何影响SERAC的行为。在QA-hard和FC任务中使用$k=10$次编辑时,我们比较了采用distilBERT $\mathbf{D}$ (Sanh等人,2019)和BERT-base (B; Devlin等人,2019)作为骨干模型的交叉注意力(Cross)与稠密嵌入(Embed)分类器。结果如表5所示。不出所料,使用交叉注意力而非稠密嵌入有助于提升编辑器性能;然而,增大分类器规模带来的改进相对有限。交叉注意力在FC实验中尤其有效,这可能源于VitaminC数据集中数字的普遍性——例如,要生成能可靠区分"美国已有105,000例冠状病毒死亡"和"美国已有111,000例冠状病毒死亡"的定长序列嵌入可能非常困难。针对此类情况,延迟融合方法(Khattab & Zaharia, 2020)或许能在限制计算需求的同时提升表达能力。

Re-using model editors across models. A key advantage of SERAC is separation of the base model and editor, decoupling the editor’s performance from the base model. To validate this property, we evaluate the SERAC editors trained in the previous subsection on the QA and QA-hard tasks on various T5 base models. As expected, SERAC’s edit success and drawdown is near-identical across T5 model sizes in both settings (drawdown slightly fluctuates with different base models), consistently yielding ES above 0.99 and DD below 0.01 for $\mathrm{QA}^{5}$ and ES above 0.92, DD below 0.03 for QA-hard for all models. Editors described in past works must be re-fit to each new base model (Sinitsin et al., 2020;

跨模型复用模型编辑器。SERAC的关键优势在于基础模型与编辑器的分离,使编辑器性能与基础模型解耦。为验证这一特性,我们在不同规模的T5基础模型上评估了上一小节训练的SERAC编辑器在QA和QA-hard任务中的表现。如预期所示,两种场景下SERAC的编辑成功率(ES)和性能下降(DD)在不同规模T5模型间基本一致(性能下降随基础模型不同略有波动),所有模型在$\mathrm{QA}^{5}$任务中均保持ES>0.99且DD<0.01,在QA-hard任务中保持ES>0.92且DD<0.03。以往工作中描述的编辑器必须针对每个新基础模型重新适配 (Sinitsin et al., 2020;

| Task | Base model | SERAC (out) | SERAC (in) |

| QA | 87ms 2.96GB | 92ms 3.47GB | 31ms 3.46GB |

| FC | 7ms 0.44GB | 19ms 1.18GB | 19ms 1.18GB |

| CS | 182ms 0.38GB | 183ms 1.00GB | 185ms 1.01GB |

| 任务 | 基础模型 | SERAC (外) | SERAC (内) |

|---|---|---|---|

| QA | 87ms 2.96GB | 92ms 3.47GB | 31ms 3.46GB |

| FC | 7ms 0.44GB | 19ms 1.18GB | 19ms 1.18GB |

| CS | 182ms 0.38GB | 183ms 1.00GB | 185ms 1.01GB |

Table 6. Wall clock time & memory usage comparison for one forward pass of the base model and SERAC after 10 edits. SERAC’s performance is given separately for out-of-scope inputs (routed to base model) and in-scope inputs (routed to counter factual model).

表 6: 基础模型和 SERAC 在 10 次编辑后单次前向传播的挂钟时间及内存使用对比。SERAC 的性能分别针对范围外输入 (路由至基础模型) 和范围内输入 (路由至反事实模型) 给出。

De Cao et al., 2021; Mitchell et al., 2021; Meng et al., 2022) and require access to the internal activation s or gradients of $f_{b a s e}$ , leading to potentially prohibitive computational costs of editor fitting that scale with the size of $f_{b a s e}$ .

De Cao等人, 2021; Mitchell等人, 2021; Meng等人, 2022) 并且需要访问 $f_{base}$ 的内部激活或梯度,这可能导致编辑器拟合的计算成本过高,其规模与 $f_{base}$ 的大小相关。

Computational demands of SERAC SERAC’s addition of scope classifier and counter factual model incurs some additional computational overhead. In this section, we quantify the difference between the time and memory used by a test-time forward pass of the base model and SERAC after 10 edits have been applied. The results are shown