Are NLP Models really able to Solve Simple Math Word Problems?

NLP 模型真的能解决简单数学应用题吗?

Abstract

摘要

The problem of designing NLP solvers for math word problems (MWP) has seen sustained research activity and steady gains in the test accuracy. Since existing solvers achieve high performance on the benchmark datasets for elementary level MWPs containing one-unknown arithmetic word problems, such problems are often considered “solved” with the bulk of research attention moving to more complex MWPs. In this paper, we restrict our attention to English MWPs taught in grades four and lower. We provide strong evidence that the existing MWP solvers rely on shallow heuristics to achieve high performance on the benchmark datasets. To this end, we show that MWP solvers that do not have access to the question asked in the MWP can still solve a large fraction of MWPs. Similarly, models that treat MWPs as bag-ofwords can also achieve surprisingly high accuracy. Further, we introduce a challenge dataset, SVAMP, created by applying carefully chosen variations over examples sampled from existing datasets. The best accuracy achieved by state-of-the-art models is substantially lower on SVAMP, thus showing that much remains to be done even for the simplest of the MWPs.

设计用于解决数学应用题 (MWP) 的自然语言处理 (NLP) 求解器问题一直保持着持续的研究活动,并在测试准确率上取得了稳步提升。由于现有求解器在针对包含单未知数算术应用题的小学水平 MWP 基准数据集上表现出色,这类问题常被视为"已解决",研究重点随之转向更复杂的 MWP。本文聚焦于四年级及以下英语数学应用题,通过有力证据表明:现有 MWP 求解器依赖浅层启发式方法在基准数据集上获得高准确率。具体而言,我们发现即使不获取 MWP 题干信息的求解器仍能解决大部分题目;同样,将 MWP 视为词袋 (bag-of-words) 处理的模型也能达到惊人的高准确率。此外,我们通过从现有数据集采样并施加精心设计的变体,构建了挑战性数据集 SVAMP。当前最先进模型在该数据集上的最佳表现显著下降,这表明即便对于最简单的 MWP 仍存在巨大改进空间。

1 Introduction

1 引言

A Math Word Problem (MWP) consists of a short natural language narrative describing a state of the world and poses a question about some unknown quantities (see Table 1 for some examples). MWPs are taught in primary and higher schools. The MWP task is a type of semantic parsing task where given an MWP the goal is to generate an expression (more generally, equations), which can then be evaluated to get the answer. The task is challenging because a machine needs to extract relevant information from natural language text as well as perform mathematical reasoning to solve it. The complexity of MWPs can be measured along multiple axes, e.g., reasoning and linguistic complexity and world and domain knowledge. A combined complexity measure is the grade level of an MWP, which is the grade in which similar MWPs are taught. Over the past few decades many approaches have been developed to solve MWPs with significant activity in the last decade (Zhang et al., 2020).

数学应用题 (Math Word Problem, MWP) 是由简短的自然语言叙述构成,描述世界某种状态并提出关于某些未知量的问题(示例见表 1)。这类题目在小学和中学阶段均有教学。MWP 任务属于语义解析任务的一种,其目标是根据给定的应用题生成表达式(更广义来说是方程),进而通过计算得出答案。该任务的挑战性在于,机器需要从自然语言文本中提取相关信息,并进行数学推理才能求解。应用题的复杂性可从多个维度衡量,例如推理复杂度、语言复杂度、世界知识与领域知识等。综合复杂度指标是应用题的年级水平,即该题目所属的教学年级。过去几十年间,研究者提出了多种求解应用题的方法,近十年尤为活跃 [20]。

Table 1: Example of a Math Word Problem along with the types of variations that we make to create SVAMP.

| PROBLEM: Text: Jack had 8 pens and Mary had 5 pens.Jack gave 3 pens to Mary. How many pens does Jack have now? Equation:8-3=5 |

| QUESTIONSENSITIVITYVARIATION: Text: Jack had 8 pens and Mary had 5 pens. Jack gave 3 pens to Mary. How many pens does Mary have now? Equation:5+3=8 |

| REASONINGABILITYVARIATION: Text: Jack had 8 pens and Mary had 5 pens.Mary gave 3 pens to Jack. How many pens does Jack have now? Equation:8+3=11 |

| STRUCTURALINVARIANCEVARIATION: Text: Jack gave 3 pens to Mary. If Jack had 8 pens and Mary had 5 pens initially, how many pens does Jack have now? Equation:8-3=5 |

表 1: 数学应用题示例及我们创建SVAMP时采用的变体类型

| 问题: 文本: Jack有8支笔,Mary有5支笔。Jack给了Mary 3支笔。现在Jack有多少支笔? 算式:8-3=5 |

| 问题敏感性变体: 文本: Jack有8支笔,Mary有5支笔。Jack给了Mary 3支笔。现在Mary有多少支笔? 算式:5+3=8 |

| 推理能力变体: 文本: Jack有8支笔,Mary有5支笔。Mary给了Jack 3支笔。现在Jack有多少支笔? 算式:8+3=11 |

| 结构不变性变体: 文本: Jack给了Mary 3支笔。如果最初Jack有8支笔而Mary有5支笔,现在Jack有多少支笔? 算式:8-3=5 |

MWPs come in many varieties. Among the simplest are the one-unknown arithmetic word problems where the output is a mathematical expression involving numbers and one or more arithmetic operators $(+,-,*,/)$ . Problems in Tables 1 and 6 are of this type. More complex MWPs may have systems of equations as output or involve other operators or may involve more advanced topics and specialized knowledge. Recently, researchers have started focusing on solving such MWPs, e.g. multiple-unknown linear word problems (Huang et al., 2016a), geometry (Sachan and Xing, 2017) and probability (Amini et al., 2019), believing that existing work can handle one-unknown arithmetic MWPs well (Qin et al., 2020). In this paper, we question the capabilities of the state-of-the-art (SOTA) methods to robustly solve even the simplest of MWPs suggesting that the above belief is not well-founded.

数学应用题 (MWP) 有多种类型。最简单的是单未知数算术应用题,其输出是一个包含数字和一个或多个算术运算符 $(+,-,*,/)$ 的数学表达式。表 1 和表 6 中的问题就属于这种类型。更复杂的数学应用题可能以方程组作为输出,或涉及其他运算符,甚至需要更高级的主题和专业知识。最近,研究人员开始关注解决这类数学应用题,例如多未知数线性应用题 (Huang et al., 2016a)、几何题 (Sachan and Xing, 2017) 和概率题 (Amini et al., 2019),认为现有工作可以很好地处理单未知数算术应用题 (Qin et al., 2020)。在本文中,我们对当前最先进 (SOTA) 方法稳健解决最简单数学应用题的能力提出质疑,表明上述观点缺乏充分依据。

In this paper, we provide concrete evidence to show that existing methods use shallow heuristics to solve a majority of word problems in the benchmark datasets. We find that existing models are able to achieve reasonably high accuracy on MWPs from which the question text has been removed leaving only the narrative describing the state of the world. This indicates that the models can rely on superficial patterns present in the narrative of the MWP and achieve high accuracy without even looking at the question. In addition, we show that a model without word-order information (i.e., the model treats the MWP as a bag-of-words) can also solve the majority of MWPs in benchmark datasets.

本文通过具体证据表明,现有方法使用浅层启发式规则即可解决基准数据集中大部分数学应用题。我们发现:当去除题目文本仅保留描述情境的叙述部分时,现有模型在数学应用题(MWP)上仍能取得较高准确率。这表明模型仅依靠应用题叙述中的表层模式即可实现高准确率,甚至无需阅读问题本身。此外,我们证明一个不考虑词序信息的模型(即将数学应用题视为词袋)同样能解决基准数据集中的大部分数学应用题。

The presence of these issues in existing benchmarks makes them unreliable for measuring the performance of models. Hence, we create a challenge set called SVAMP (Simple Variations on Arithmetic Math word Problems; pronounced swamp) of one-unknown arithmetic word problems with grade level up to 4 by applying simple variations over word problems in an existing dataset (see Table 1 for some examples). SVAMP further highlights the brittle nature of existing models when trained on these benchmark datasets. On evaluating SOTA models on SVAMP, we find that they are not even able to solve half the problems in the dataset. This failure of SOTA models on SVAMP points to the extent to which they rely on simple heuristics in training data to make their prediction.

现有基准测试中存在的这些问题使其无法可靠地衡量模型性能。因此,我们通过对现有数据集中的文字应用题进行简单变体,创建了一个名为SVAMP (Simple Variations on Arithmetic Math word Problems,发音同swamp) 的挑战集,包含最高4年级水平的单变量算术文字应用题 (参见表1中的示例) 。SVAMP进一步凸显了现有模型在这些基准数据集上训练时的脆弱性。通过在SVAMP上评估SOTA (State-of-the-Art) 模型,我们发现它们甚至无法解决数据集中一半的问题。SOTA模型在SVAMP上的失败表明它们在很大程度上依赖于训练数据中的简单启发式方法进行预测。

Below, we summarize the two broad contributions of our paper.

我们在下文中总结了本文的两大贡献。

2 Related Work

2 相关工作

Math Word Problems. A wide variety of methods and datasets have been proposed to solve MWPs; e.g. statistical machine learning (Roy and Roth,

数学应用题。已有多种方法和数据集被提出用于解决数学应用题 (MWP) ,例如统计机器学习 (Roy and Roth,

2018), semantic parsing (Huang et al., 2017) and most recently deep learning (Wang et al., 2017; Xie and Sun, 2019; Zhang et al., 2020); see (Zhang et al., 2020) for an extensive survey. Many papers have pointed out various deficiencies with previous datasets and proposed new ones to address them. Koncel-Kedziorski et al. (2016) curated the MAWPS dataset from previous datasets which along with Math23k (Wang et al., 2017) has been used as benchmark in recent works. Recently, ASDiv (Miao et al., 2020) has been proposed to provide more diverse problems with annotations for equation, problem type and grade level. HMWP (Qin et al., 2020) is another newly proposed dataset of Chinese MWPs that includes examples with muliple-unknown variables and requiring non-linear equations to solve them.

2018)、语义解析 (Huang et al., 2017) 以及最近的深度学习 (Wang et al., 2017; Xie and Sun, 2019; Zhang et al., 2020);详见 (Zhang et al., 2020) 的综述。许多论文指出了先前数据集的不足,并提出了新的解决方案。Koncel-Kedziorski et al. (2016) 从先前数据集中整理了 MAWPS 数据集,该数据集与 Math23k (Wang et al., 2017) 一起被用作近期研究的基准。最近提出的 ASDiv (Miao et al., 2020) 旨在提供更多样化的问题,并标注方程、问题类型和年级水平。HMWP (Qin et al., 2020) 是另一个新提出的中文数学应用题数据集,包含多未知变量和需要非线性方程求解的示例。

Identifying artifacts in datasets has been done for the Natural Language Inference (NLI) task by McCoy et al. (2019), Poliak et al. (2018), and Gururangan et al. (2018). Rosenman et al. (2020) identified shallow heuristics in a Relation Extraction dataset. Cai et al. (2017) showed that biases prevalent in the ROC stories cloze task allowed models to yield state-of-the-art results when trained only on the endings. To the best of our knowledge, this kind of analysis has not been done on any Math Word Problem dataset.

McCoy等人(2019)、Poliak等人(2018)和Gururangan等人(2018)已针对自然语言推理(NLI)任务完成了数据集中的伪影识别工作。Rosenman等人(2020)在关系抽取数据集中发现了浅层启发式特征。Cai等人(2017)证明,ROC故事完形填空任务中普遍存在的偏差使得模型仅通过训练结局就能获得最先进的结果。据我们所知,目前尚未有任何数学应用题(MWP)数据集进行过此类分析。

Challenge Sets for NLP tasks have been proposed most notably for NLI and machine translation (Belinkov and Glass, 2019; Nie et al., 2020; Ribeiro et al., 2020). Gardner et al. (2020) suggested creating contrast sets by manually perturbing test instances in small yet meaningful ways that change the gold label. We believe that we are the first to introduce a challenge set targeted specifically for robust evaluation of Math Word Problems.

自然语言处理任务的挑战集已被提出,最显著的是针对自然语言推理和机器翻译领域 (Belinkov and Glass, 2019; Nie et al., 2020; Ribeiro et al., 2020)。Gardner等人 (2020) 建议通过以微小但有意义的方式手动扰动测试实例来创建对比集,这些扰动会改变黄金标签。我们相信,我们是首个针对数学应用题鲁棒性评估专门引入挑战集的研究团队。

3 Background

3 背景

3.1 Problem Formulation

3.1 问题表述

We denote a Math Word Problem $P$ by a sequence of $n$ tokens $P=(\pmb{w}{1},\pmb{...},\pmb{w}{n})$ where each token $w_{i}$ can be either a word from a natural language or a numerical value. The word problem $P$ can be broken down into body $B=(\pmb{w}{1},\pmb{\cdot}\pmb{\cdot}\pmb{\cdot}\pmb{\cdot}\pmb{w}{k})$ and question $Q=(\pmb{w}{k+1},\pmb{\cdot}\pmb{\cdot}\pmb{\cdot}\pmb{\cdot}\pmb{w}{n})$ . The goal is to map $P$ to a valid mathematical expression $E_{P}$ composed of numbers from $P$ and mathematical operators from the set ${+,-,/,*}$ (e.g. $3+5-4)$ . The metric used to evaluate models on the MWP task is Execution Accuracy, which is obtained from comparing the predicted answer (calculated by evaluating $E_{P}$ ) with the annotated answer. In this work, we focus only on one-unknown arithmetic word problems.

我们将数学应用题 $P$ 表示为一个由 $n$ 个 token 组成的序列 $P=(\pmb{w}{1},\pmb{...},\pmb{w}{n})$ ,其中每个 token $w_{i}$ 可以是自然语言中的单词或数值。该应用题 $P$ 可分解为题干 $B=(\pmb{w}{1},\pmb{\cdot}\pmb{\cdot}\pmb{\cdot}\pmb{\cdot}\pmb{w}{k})$ 和问题 $Q=(\pmb{w}{k+1},\pmb{\cdot}\pmb{\cdot}\pmb{\cdot}\pmb{\cdot}\pmb{w}{n})$ 。目标是将 $P$ 映射为由 $P$ 中的数字和运算符集合 ${+,-,/,*}$ (例如 $3+5-4)$ 构成的有效数学表达式 $E_{P}$ 。用于评估模型在数学应用题任务上表现的指标是执行准确率,即通过比较预测答案 (通过计算 $E_{P}$ 得出) 与标注答案获得。本工作仅关注单未知数算术应用题。

Table 2: 5-fold cross-validation accuracies ( ) of baseline models on datasets. (R) means that the model is provided with RoBERTa pretrained embeddings while (S) means that the model is trained from scratch.

表 2: 基线模型在数据集上的五折交叉验证准确率 ( ) 。(R) 表示模型使用 RoBERTa 预训练嵌入,(S) 表示模型从头开始训练。

| 模型 | MAWPS | ASDiv-A |

|---|---|---|

| Seq2Seq (S) | 79.7 | 55.5 |

| Seq2Seq (R) | 86.7 | 76.9 |

| GTS (S) (Xie and Sun, 2019) | 82.6 | 71.4 |

| GTS (R) | 88.5 | 81.2 |

| Graph2Tree (S) (Zhang et al.,2020) | 83.7 | 77.4 |

| Graph2Tree (R) | 88.7 | 82.2 |

| MajorityTemplateBaseline | 17.7 | 21.2 |

3.2 Datasets and Methods

3.2 数据集与方法

Many of the existing datasets are not suitable for our analysis as either they are in Chinese, e.g. Math $23\mathrm{k\Omega}$ (Wang et al., 2017) and HMWP (Qin et al., 2020), or have harder problem types, e.g. Dolphin18K (Huang et al., 2016b). We consider the widely used benchmark MAWPS (KoncelKedziorski et al., 2016) composed of 2373 MWPs and the arithmetic subset of ASDiv (Miao et al., 2020) called ASDiv-A which has 1218 MWPs mostly up to grade level 4 (MAWPS does not have grade level information). Both MAWPS and ASDiv-A are evaluated on 5-fold cross-validation based on pre-assigned splits.

现有许多数据集不适合我们的分析,因为它们要么是中文数据集,例如 Math $23\mathrm{k\Omega}$ (Wang et al., 2017) 和 HMWP (Qin et al., 2020),要么包含更复杂的问题类型,例如 Dolphin18K (Huang et al., 2016b)。我们采用广泛使用的基准数据集 MAWPS (KoncelKedziorski et al., 2016),该数据集包含 2373 道数学应用题 (MWP),以及 ASDiv (Miao et al., 2020) 的算术子集 ASDiv-A(包含 1218 道 MWP,难度大多不超过 4 年级水平,而 MAWPS 未标注年级信息)。MAWPS 和 ASDiv-A 均基于预设划分进行 5 折交叉验证评估。

We consider three models in our experiments:

我们在实验中考虑了三种模型:

(a) Seq2Seq consists of a Bidirectional LSTM Encoder to encode the input sequence and an LSTM decoder with attention (Luong et al., 2015) to generate the equation.

(a) Seq2Seq 包含一个双向 LSTM 编码器 (Bidirectional LSTM Encoder) 用于编码输入序列,以及一个带注意力机制 (Luong et al., 2015) 的 LSTM 解码器用于生成方程。

(c) GTS (Xie and Sun, 2019) uses an LSTM Encoder to encode the input sequence and a tree-based Decoder to generate the equation.

(c) GTS (Xie and Sun, 2019) 使用 LSTM 编码器 (Encoder) 对输入序列进行编码,并采用基于树的解码器 (Decoder) 生成方程。

(d) Graph2Tree (Zhang et al., 2020) combines a Graph-based Encoder with a Tree-based Decoder.

(d) Graph2Tree (Zhang et al., 2020) 结合了基于图的编码器 (Graph-based Encoder) 与基于树的解码器 (Tree-based Decoder)。

The performance of these models on both datasets is shown in Table 2. We either provide RoBERTa (Liu et al., 2019) pre-trained embeddings to the models or train them from scratch. Graph2Tree (Zhang et al., 2020) with RoBERTa embeddings achieves the state-of-the-art for both datasets. Note that our implementations achieve a higher score than the previously reported highest score of $78%$ on ASDiv-A (Miao et al., 2020) and $83.7%$ on MAWPS (Zhang et al., 2020). The imple ment ation details are provided in Section B in the Appendix.

这些模型在两个数据集上的性能如表 2 所示。我们为模型提供 RoBERTa (Liu et al., 2019) 预训练嵌入或从头开始训练。使用 RoBERTa 嵌入的 Graph2Tree (Zhang et al., 2020) 在两个数据集上均达到了最先进水平。请注意,我们的实现获得了比之前报告的 ASDiv-A (Miao et al., 2020) 最高分 $78%$ 和 MAWPS (Zhang et al., 2020) 最高分 $83.7%$ 更高的分数。实现细节见附录中的 B 节。

Table 3: 5-fold cross-validation accuracies $(\uparrow)$ of baseline models on Question-removed datasets.

表 3: 基线模型在去除问题数据集上的5折交叉验证准确率 $(\uparrow)$

| Model | MAWPS | ASDiv-A |

|---|---|---|

| Seq2Seq | 77.4 | 58.7 |

| GTS | 76.2 | 60.7 |

| Graph2Tree | 77.7 | 64.4 |

4 Deficiencies in existing datasets

4 现有数据集的不足

Here we describe the experiments that show that there are important deficiencies in MAWPS and ASDiv-A.

我们在此描述的实验表明,MAWPS和ASDiv-A存在重要缺陷。

4.1 Evaluation on Question-removed MWPs

4.1 问题移除型数学应用题评估

As mentioned in Section 3.1, each MWP consists of a body $B$ , which provides a short narrative on a state of the world and a question $Q$ , which inquires about an unknown quantity about the state of the world. For each fold in the provided 5-fold split in MAWPS and ASDiv-A, we keep the train set unchanged while we remove the questions $Q$ from the problems in the test set. Hence, each problem in the test set consists of only the body $B$ without any question $Q$ . We evaluate all three models with RoBERTa embeddings on these datasets. The results are provided in Table 3.

如第3.1节所述,每个数学应用题 (MWP) 包含一个描述世界状态的正文 $B$,以及一个询问该世界状态未知量的问题 $Q$。对于MAWPS和ASDiv-A数据集提供的5折划分中的每一折,我们保持训练集不变,同时移除测试集中所有问题的 $Q$ 部分。因此,测试集中的每个问题仅包含正文 $B$ 而不含任何问题 $Q$。我们使用RoBERTa嵌入向量评估了所有三个模型在这些数据集上的表现,结果如表3所示。

The best performing model is able to achieve a 5-fold cross-validation accuracy of $64.4%$ on ASDiv-A and $77.7%$ on MAWPS. Loosely translated, this means that nearly $64%$ of the problems in ASDiv-A and $78%$ of the problems in MAWPS can be correctly answered without even looking at the question. This suggests the presence of patterns in the bodies of MWPs in these datasets that have a direct correlation with the output equation.

表现最佳的模型在ASDiv-A上实现了64.4%的5折交叉验证准确率,在MAWPS上达到77.7%。简而言之,这意味着即使不看题目,也能正确解答ASDiv-A中近64%的问题和MAWPS中78%的问题。这表明这些数据集中数学应用题(MWPs)的题干存在与输出方程直接相关的模式。

Some recent works have also demonstrated similar evidence of bias in NLI datasets (Gururangan et al., 2018; Poliak et al., 2018). They observed that NLI models were able to predict the correct label for a large fraction of the standard NLI datasets based on only the hypothesis of the input and without the premise. Our results on question-removed examples of math word problems resembles their observations on NLI datasets and similarly indicates the presence of artifacts that help statistical models predict the correct answer without complete information. Note that even though the two methods appear similar, there is an important distinction. In Gururangan et al. (2018), the model is trained and tested on hypothesis only examples and hence, the model is forced to find artifacts in the hypothesis during training. On the other hand, our setting is more natural since the model is trained in the standard way on examples with both the body and the question. Thus, the model is not explicitly forced to learn based on the body during training and our results not only show the presence of artifacts in the datasets but also suggest that the SOTA models exploit them.

一些近期研究也在自然语言推理(NLI)数据集上发现了类似的偏差证据(Gururangan et al., 2018; Poliak et al., 2018)。他们观察到,NLI模型仅凭输入的假设(无需前提)就能对大部分标准NLI数据集预测出正确标签。我们在移除问题后的数学应用题上得到的结果,与他们在NLI数据集上的观察相似,同样表明存在帮助统计模型在不完整信息下预测正确答案的数据伪影。需注意尽管两种方法看似相似,但存在重要区别:在Gururangan等人(2018)的研究中,模型仅在假设上训练和测试,因此被迫在训练期间发现假设中的伪影;而我们的设置更自然,因为模型是以标准方式在同时包含题干和问题的例子上训练的。因此模型并未被显式强制基于题干学习,我们的结果不仅揭示了数据集中伪影的存在,还表明当前最佳(SOTA)模型会利用这些伪影。

Table 4: Results of baseline models on the Easy and Hard test sets.

表 4: 基线模型在简单和困难测试集上的结果。

| MAWPS | ASDiv-A | |||

|---|---|---|---|---|

| 模型 | Easy | Hard | Easy | Hard |

| Seq2Seq | 86.8 | 86.7 | 91.3 | 56.1 |

| GTS | 92.6 | 71.7 | 91.6 | 65.3 |

| Graph2Tree | 93.4 | 71.0 | 92.8 | 63.3 |

Following Gururangan et al. (2018), we attempt to understand the extent to which SOTA models rely on the presence of simple heuristics in the body to predict correctly. We partition the test set into two subsets for each model: problems that the model predicted correctly without the question are labeled Easy and the problems that the model could not answer correctly without the question are labeled Hard. Table 4 shows the performance of the models on their respective Hard and Easy sets. Note that their performance on the full set is already provided in Table 2. It can be seen clearly that although the models correctly answer many Hard problems, the bulk of their success is due to the Easy problems. This shows that the ability of SOTA methods to robustly solve word problems is overestimated and that they rely on simple heuristics in the body of the problems to make predictions.

遵循 Gururangan 等人的研究 [2018],我们试图理解当前最优 (SOTA) 模型在多大程度上依赖问题正文中的简单启发式规则来做出正确预测。我们将测试集划分为两个子集:模型在没有问题的情况下仍能正确预测的题目标记为简单题 (Easy),而模型在没有问题的情况下无法正确回答的题目标记为难题 (Hard)。表 4 展示了各模型在相应难题集和简单题集上的表现。请注意,它们在完整测试集上的表现已在表 2 中提供。可以清楚地看到,虽然模型能正确解答许多难题,但其主要成功来源于简单题。这表明当前最优方法在稳健解决文字问题方面的能力被高估了,它们实际上依赖于问题正文中的简单启发式规则来进行预测。

4.2 Performance of a constrained model

4.2 受限模型的性能

We construct a simple model based on the Seq2Seq architecture by removing the LSTM Encoder and replacing it with a Feed-Forward Network that maps the input embeddings to their hidden represent at ions. The LSTM Decoder is provided with the average of these hidden representations as its initial hidden state. During decoding, an attention mechanism (Luong et al., 2015) assigns weights to individual hidden representations of the input tokens. We use either RoBERTa embeddings (noncontextual; taken directly from Embedding Matrix) or train the model from scratch. Clearly, this model does not have access to word-order information.

我们基于Seq2Seq架构构建了一个简单模型,移除了LSTM编码器,改用前馈网络将输入嵌入映射到其隐藏表示。LSTM解码器的初始隐藏状态设置为这些隐藏表示的平均值。在解码过程中,注意力机制 (Luong et al., 2015) 会为输入token的各个隐藏表示分配权重。我们使用RoBERTa嵌入 (非上下文相关,直接取自嵌入矩阵) 或从头开始训练模型。显然,该模型无法获取词序信息。

Table 5: 5-fold cross-validation accuracies ( ) of the constrained model on the datasets. (R) denotes that the model is provided with non-contextual RoBERTa pretrained embeddings while (S) denotes that the model is trained from scratch.

| Model | MAWPS | ASDiv-A |

| FFN+LSTMDecoder r (S) | 75.1 | 46.3 |

| FFN + LSTM Decoder (R) | 77.9 | 51.2 |

表 5: 约束模型在数据集上的5折交叉验证准确率 ( ) 。(R) 表示模型使用了非上下文 RoBERTa 预训练嵌入,(S) 表示模型从头开始训练。

| 模型 | MAWPS | ASDiv-A |

|---|---|---|

| FFN+LSTMDecoder (S) | 75.1 | 46.3 |

| FFN + LSTM Decoder (R) | 77.9 | 51.2 |

Table 5 shows the performance of this model on MAWPS and ASDiv-A. The constrained model with non-contextual RoBERTa embeddings is able to achieve a cross-validation accuracy of 51.2 on ASDiv-A and an astounding 77.9 on MAWPS. It is surprising to see that a model having no word-order information can solve a majority of word problems in these datasets. These results indicate that it is possible to get a good score on these datasets by simply associating the occurence of specific words in the problems to their corresponding equations. We illustrate this more clearly in the next section.

表 5 展示了该模型在 MAWPS 和 ASDiv-A 上的性能。使用非上下文 RoBERTa 嵌入的约束模型在 ASDiv-A 上实现了 51.2 的交叉验证准确率,在 MAWPS 上更是达到了惊人的 77.9。令人惊讶的是,一个没有词序信息的模型竟能解决这些数据集中大部分词汇问题。这些结果表明,仅通过将问题中特定词汇的出现与其对应方程关联,就可能在数据集上获得高分。我们将在下一节更清晰地说明这一点。

4.3 Analyzing the attention weights

4.3 注意力权重分析

To get a better understanding of how the constrained model is able to perform so well, we analyze the attention weights that it assigns to the hidden representations of the input tokens. As shown by Wiegreffe and Pinter (2019), analyzing the attention weights of our constrained model is a reliable way to explain its prediction since each hidden represent ation consists of information about only that token as opposed to the case of an RNN where each hidden representation may have information about the context i.e. its neighboring tokens.

为了更好地理解约束模型为何能表现如此出色,我们分析了它对输入token隐藏表征分配的注意力权重。如 Wiegreffe 和 Pinter (2019) 所示,分析约束模型的注意力权重是解释其预测的可靠方法,因为每个隐藏表征仅包含对应token的信息,这与RNN的情况不同——RNN的每个隐藏表征可能包含上下文信息(即相邻token的信息)。

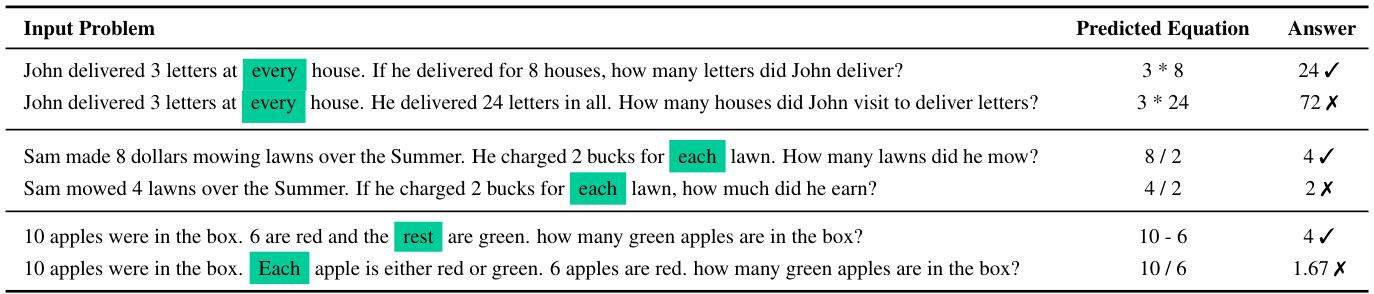

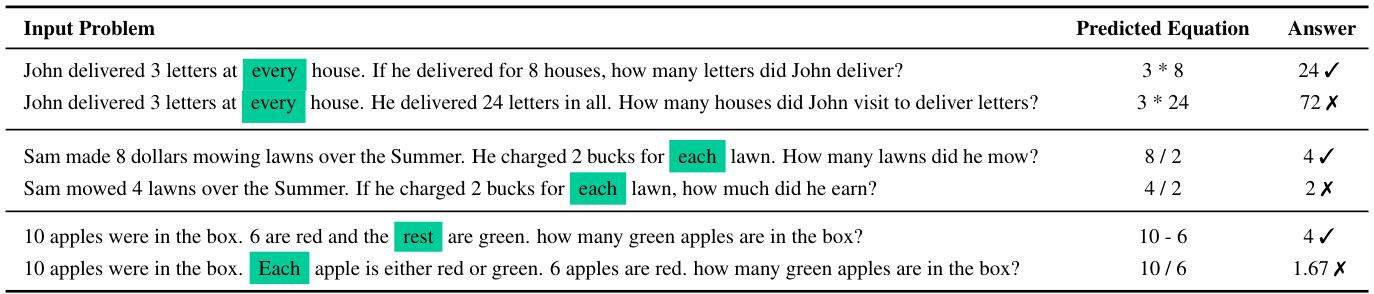

We train the contrained model (with RoBERTa embeddings) on the full ASDiv-A dataset and observe the attention weights it assigns to the words of the input problems. We found that the model usually attends to a single word to make its prediction, irrespective of the context. Table 6 shows some representative examples. In the first example, the model assigns an attention weight of 1 to the representation of the word ‘every’ and predicts the correct equation. However, when we make a subtle change to this problem such that the corresponding equation changes, the model keeps on attending over the word ‘every’ and predicts the same equation, which is now incorrect. Similar observations can be made for the other two examples. Table 26 in the Appendix has more such examples. These examples represent only a few types of spurious correlations that we could find but there could be other types of correlations that might have been missed.

我们在完整的ASDiv-A数据集上训练了约束模型(使用RoBERTa嵌入),并观察了它分配给输入问题单词的注意力权重。我们发现,无论上下文如何,该模型通常只关注单个单词来进行预测。表6展示了一些代表性示例。在第一个例子中,模型为单词"every"的表示分配了1的注意力权重,并预测出正确的方程式。然而,当我们对这个问题进行细微修改(导致对应方程式发生变化)时,模型仍然持续关注"every"这个词并预测相同的方程式,此时结果就变成了错误答案。其他两个例子也有类似现象。附录中的表26提供了更多此类示例。这些例子仅代表我们能发现的少数几种伪相关类型,可能还存在其他被遗漏的相关类型。

Table 6: Attention paid to specific words by the constrained model.

表 6: 受限模型对特定词汇的关注度。

Note that, we do not claim that every model trained on these datasets relies on the occurrence of specific words in the input problem for prediction the way our constrained model does. We are only asserting that it is possible to achieve a good score on these datasets even with such a brittle model, which clearly makes these datasets unreliable to robustly measure model performance.

需要注意的是,我们并非声称在这些数据集上训练的每个模型都像我们的约束模型那样,依赖输入问题中特定词汇的出现来进行预测。我们仅主张,即便是如此脆弱的模型,也有可能在这些数据集上取得良好分数,这显然使得这些数据集无法可靠地衡量模型的稳健性能。

5SVAMP

5SVAMP

The efficacy of existing models on benchmark datasets has led to a shift in the focus of researchers towards more difficult MWPs. We claim that this efficacy on benchmarks is misleading and SOTA MWP solvers are unable to solve even elementary level one-unknown MWPs. To this end, we create a challenge set named SVAMP containing simple one-unknown arithmetic word problems of grade level up to 4. The examples in SVAMP test a model across different aspects of solving word problems. For instance, a model needs to be sensitive to questions and possess certain reasoning abilities to correctly solve the examples in our challenge set. SVAMP is similar to existing datasets of the same level in terms of scope and difficulty for humans, but is less susceptible to being solved by models relying on superficial patterns.

现有模型在基准数据集上的有效性使研究人员将注意力转向了更复杂的数学应用题 (MWP)。我们认为这种基准表现具有误导性,当前最先进的数学应用题求解器甚至无法解决基础级别的单变量数学应用题。为此,我们创建了一个名为SVAMP的挑战集,包含难度不超过四年级水平的简单单变量算术应用题。SVAMP中的示例从不同角度测试模型解决应用题的能力,例如模型需要具备问题敏感性和特定推理能力才能正确解答我们挑战集中的题目。在难度范围和人类解题水平方面,SVAMP与同级现有数据集相似,但更不易被依赖表面模式的模型解决。

Our work differs from adversarial data collection methods such as Adversarial NLI (Nie et al., 2020) in that these methods create examples depending on the failure of a particular model while we create examples without referring to any specific model. Inspired by the notion of Normative evaluation (Linzen, 2020), our goal is to create a dataset of simple problems that any system designed to solve MWPs should be expected to solve. We create new problems by applying certain variations to existing problems, similar to the work of Ribeiro et al. (2020). However, unlike their work, our variations do not check for linguistic capabilities. Rather, the choice of our variations is motivated by the experiments in Section 4 as well as certain simple capabilities that any MWP solver must possess.

我们的工作与对抗性数据收集方法(如Adversarial NLI (Nie et al., 2020))不同之处在于,这些方法依赖于特定模型的失败来创建示例,而我们无需参考任何特定模型即可创建示例。受Normative evaluation (Linzen, 2020)概念的启发,我们的目标是创建一个简单问题的数据集,任何设计用于解决数学应用题 (MWP) 的系统都应该能够解决这些问题。我们通过对现有问题应用某些变体来创建新问题,类似于Ribeiro et al. (2020)的工作。然而,与他们的工作不同,我们的变体并不检查语言能力。相反,我们选择变体的动机来自于第4节中的实验以及任何数学应用题求解器都必须具备的某些简单能力。

5.1 Creating SVAMP

5.1 创建SVAMP

We create SVAMP by applying certain types of variations to a set of seed examples sampled from the ASDiv-A dataset. We select the seed examples from the recently proposed ASDiv-A dataset since it appears to be of higher quality and harder than the MAWPS dataset: We perform a simple experiment to test the coverage of each dataset by training a model on one dataset and testing it on the other one. For instance, when we train a Graph2Tree model on ASDiv-A, it achieves $82%$ accuracy on MAWPS. However, when trained on MAWPS and tested on ASDiv-A, the model achieved only $73%$ accuracy. Also recall Table 2 where most models performed better on MAWPS. Moreover, AS- Div has problems annotated according to types and grade levels which are useful for us.

我们通过从ASDiv-A数据集中抽取一组种子示例并施加特定类型的变体来创建SVAMP。之所以选择ASDiv-A数据集作为种子来源,是因为该数据集质量更高且难度大于MAWPS数据集:我们通过跨数据集训练测试进行了简单实验验证这一点。例如,当使用ASDiv-A训练Graph2Tree模型时,在MAWPS上达到82%准确率;而用MAWPS训练后在ASDiv-A测试时,准确率仅为73%。同时可参考表2中多数模型在MAWPS表现更好的情况。此外,ASDiv数据集还提供了按题型和年级标注的问题分类,这对我们非常有用。

To select a subset of seed examples that sufficiently represent different types of problems in the ASDiv-A dataset, we first divide the examples into groups according to their annotated types. We discard types such as ‘TVQ-Change’, ‘TVQ-Initial’, ‘Ceil-Division’ and ‘Floor-Division’ that have less than 20 examples each. We also do not consider the ‘Difference’ type since it requires the use of an additional modulus operator. For ease of creation, we discard the few examples that are more than 40 words long. To control the complexity of resulting variations, we only consider those problems as seed examples that can be solved by an expression with a single operator. Then, within each group, we cluster examples using K-Means over RoBERTa sentence embeddings of each example. From each cluster, the example closest to the cluster centroid is selected as a seed example. We selected a total of 100 seed examples in this manner. The distribution of seed examples according to different types of problems can be seen in Table 7.

为了从ASDiv-A数据集中选出能充分代表不同类型问题的种子示例子集,我们首先根据标注类型将示例分组。我们剔除了"TVQ-Change"、"TVQ-Initial"、"Ceil-Division"和"Floor-Division"等每类不足20个示例的类型。同时不考虑需要额外模运算的"Difference"类型。为简化创建过程,我们舍弃了长度超过40词的少量示例。为控制生成变体的复杂度,我们仅选择可通过单运算符表达式解决的问题作为种子示例。接着在每个分组内,我们使用K-Means算法对示例的RoBERTa句子嵌入进行聚类,并从每个簇中选择最接近簇中心的示例作为种子示例。通过这种方式共选取了100个种子示例。各类问题对应的种子示例分布情况见表7。

Table 7: Distribution of selected seed examples across types.

表 7: 各类别种子示例的分布情况。

| 组别 | ASDiv-A 中的示例数量 | 选定的种子示例数量 |

|---|---|---|

| 加法 | 278 | 28 |

| 减法 | 362 | 33 |

| 乘法 | 188 | 19 |

| 除法 | 176 | 20 |

| 总计 | 1004 | 100 |

Table 8: Types of Variations with examples. ‘Original:’ denotes the base example from which the variation is created, ‘Variation:’ denotes a manually created variation.

| 类别 | 变体类型 | 示例 |

|---|---|---|

| 问题敏感性 | 相同对象,不同结构 | 原题:Allan带了两个气球,Jake带了四个气球去公园。Allan和Jake在公园里一共有多少个气球?变体:Allan带了两个气球,Jake带了四个气球去公园。Jake比Allan在公园里多带了多少个气球? |

| 不同对象,相同结构 | 原题:学校里有542名女生和387名男生。又有290名男生入学。现在学校有多少学生?变体:学校里有542名女生和387名男生。又有290名男生入学。现在学校有多少名男生? | |

| 不同对象,不同结构 | 原题:他去看橘子收获情况,发现每次收获能装83袋。变体:他去看橘子收获情况,发现每天能收获83袋,每袋装12个橘子。他们每天收获多少个橘子? | |

| 推理能力 | 增加相关信息 | 原题:Ryan每天花4小时学英语,3小时学中文。他总共花多少时间学习英语和中文?变体:Ryan每天花4小时学英语,3小时学中文。如果他学习3天,总共花多少时间学习英语和中文? |

| 改变信息 | 原题:Jack有142支铅笔。他给Dorothy31支。Jack现在有多少支铅笔?变体:Dorothy有142支铅笔。Jack给Dorothy31支。Dorothy现在有多少支铅笔? | |

| 反转运算 | 原题:他用新鲜橘子榨汁,每杯用2个橘子,共榨了6杯。他用了多少个橘子?变体:他用新鲜橘子榨汁,每杯用2个橘子,共用了12个橘子。他榨了多少杯? | |

| 结构不变性 | 改变对象顺序 | 原题:John有8颗弹珠和3块石头。他的弹珠比石头多多少?变体:John有3块石头和8颗弹珠。他的弹珠比石头多多少? |

| 改变短语顺序 | 原题:Matthew有27块饼干。如果平分给9个朋友,每人分到多少?变体:Matthew把饼干平分给9个朋友。如果他最初有27块,每人分到多少? | |

| 增加无关信息 | 原题:Jack有142支铅笔。他给Dorothy31支。Jack现在有多少支铅笔?变体:Jack有142支铅笔。Dorothy有50支铅笔。Jack给Dorothy31支。Jack现在有多少支铅笔? |

表 8: 变体类型及示例说明。标注"原题:"表示基础题目,"变体:"表示人工创建的变体。

5.1.1 Variations

5.1.1 变体

The variations that we make to each seed example can be broadly classified into three categories based on desirable properties of an ideal model:

我们对每个种子示例所做的变体,根据理想模型的期望特性可大致分为三类:

Question Sensitivity, Reasoning Ability and Structural Invariance. Examples of each type of variation are provided in Table 8.

问题敏感性、推理能力与结构不变性。各类变体的示例见表8。

- Reasoning Ability. Variations here check whether a model has the ability to correctly determine a change in reasoning arising from subtle changes in the problem text. The different possible variations are as follows:

- 推理能力。此处的变体用于检验模型是否能正确识别问题文本细微变化导致的推理差异。具体变体类型如下:

Table 9: Statistics of our dataset compared with MAWPS and ASDiv-A.

| Dataset | #Problems | #Equation Templates | #Avg Ops | CLD |

| MAWPS | 2373 | 39 | 1.78 | 0.26 |

| ASDiv-A | 1218 | 19 | 1.23 | 0.50 |

| SVAMP | 1000 | 26 | 1.24 | 0.22 |

表 9: 我们的数据集与 MAWPS 和 ASDiv-A 的统计对比

| Dataset | #Problems | #Equation Templates | #Avg Ops | CLD |

|---|---|---|---|---|

| MAWPS | 2373 | 39 | 1.78 | 0.26 |

| ASDiv-A | 1218 | 19 | 1.23 | 0.50 |

| SVAMP | 1000 | 26 | 1.24 | 0.22 |

(a) Add relevant information: Extra relevant information is added to the example that affects the output equation.

(a) 添加相关信息:向示例中添加影响输出方程的额外相关信息。

(b) Change information: The information provided in the example is changed.

(b) 变更信息:示例中提供的信息已更改。

(c) Invert operation: The previously unknown quantity is now provided as information and the question instead asks about a previously known quantity which is now unknown.

(c) 反转操作:原先未知的量现在作为已知信息提供,而问题则询问原先已知但当前未知的量。

- Structural Invariance. Variations in this category check whether a model remains invariant to superficial structural changes that do not alter the answer or the reasoning required to solve the example. The different possible variations are as follows:

- 结构不变性。此类别下的变体用于检验模型是否对不影响答案或解题推理的表面结构变化保持不变。具体变体包括以下类型:

(a) Add irrelevant information: Extra irrelevant information is added to the problem text that is not required to solve the example.

(a) 添加无关信息:在问题文本中加入与解题无关的额外信息。

(b) Change order of objects: The order of objects appearing in the example is changed.

(b) 改变对象顺序:示例中对象的出现顺序被改变。

(c) Change order of phrases: The order of numbercontaining phrases appearing in the example is changed.

(c) 改变短语顺序:示例中包含数字的短语出现顺序被改变。

5.1.2 Protocol for creating variations

5.1.2 创建变体的协议

Since creating variations requires a high level of familiarity with the task, the construction of SVAMP is done in-house by the authors and colleagues, hereafter called the workers. The 100 seed examples (as shown in Table 7) are distributed among the workers.

由于创建变体需要对任务有高度熟悉度,SVAMP的构建工作由作者和同事(后文称为工作人员)内部完成。100个种子示例(如表7所示)被分发给工作人员。

For each seed example, the worker needs to create new variations by applying the variation types discussed in Section 5.1.1. Importantly, a combination of different variations over the seed example can also be done. For each new example created, the worker needs to annotate it with the equation as well as the type of variation(s) used to create it. More details about the creation protocol can be found in Appendix C.

对于每个种子示例,工作人员需要通过应用第5.1.1节讨论的变体类型来创建新的变体。重要的是,还可以对种子示例应用不同变体的组合。对于每个新创建的示例,工作人员需要为其标注对应的方程以及用于创建它的变体类型。有关创建协议的更多细节可在附录C中找到。

We created a total of 1098 examples. However, since ASDiv-A does not have examples with equations of more than two operators, we discarded 98 examples from our set which had equations consisting of more than two operators. This is to ensure that our challenge set does not have any unfairly difficult examples. The final set of 1000 examples was provided to an external volunteer unfamiliar with the task to check the grammatical and logical correctness of each example.

我们总共创建了1098个示例。但由于ASDiv-A没有包含超过两个运算符的方程示例,我们从数据集中剔除了98个方程包含超过两个运算符的示例,以确保挑战集不会出现任何不公平的难题。最终筛选出的1000个示例交由一位不熟悉该任务的外部志愿者检查每个示例的语法和逻辑正确性。

5.2 Dataset Properties

5.2 数据集属性

Our challenge set SVAMP consists of oneunknown arithmetic word problems which can be solved by expressions requiring no more than two operators. Table 9 shows some statistics of our dataset and of ASDiv-A and MAWPS. The Equation Template for each example is obtained by con- verting the corresponding equation into prefix form and masking out all numbers with a meta symbol. Observe that the number of distinct Equation Templates and the Average Number of Operators are similar for SVAMP and ASDiv-A and are considerably smaller than for MAWPS. This indicates that SVAMP does not contain unfairly difficult MWPs in terms of the arithmetic expression expected to be produced by a model.

我们的挑战集SVAMP包含一系列单未知数算术应用题,这些问题可通过不超过两个运算符的表达式解决。表9展示了我们的数据集以及ASDiv-A和MAWPS的一些统计数据。每个样例的方程模板(Equation Template)通过将对应方程转换为前缀形式并用元符号遮蔽所有数字获得。值得注意的是,SVAMP与ASDiv-A在独特方程模板数量和平均运算符数量上较为接近,且明显少于MAWPS。这表明就模型预期生成的算术表达式而言,SVAMP并未包含不公平的高难度数学应用题(MWPs)。

Previous works, including those introducing MAWPS and ASDiv, have tried to capture the notion of diversity in MWP datasets. Miao et al. (2020) introduced a metric called Corpus Lexicon Diversity (CLD) to measure lexical diversity. Their contention was that higher lexical diversity is correlated with the quality of a dataset. As can be seen from Table 9, SVAMP has a much lesser CLD than ASDiv-A. SVAMP is also less diverse in terms of problem types compared to ASDiv-a. Despite this we will show in the next section that SVAMP is in fact more challenging than ASDiv-A for current models. Thus, we believe that lexical diversity is not a reliable way to measure the quality of MWP datasets. Rather it could depend on other factors such as the diversity in MWP structure which preclude models exploiting shallow heuristics.

先前的研究,包括引入MAWPS和ASDiv的工作,都试图捕捉数学应用题(MWP)数据集的多样性概念。Miao等人(2020)提出了一项名为语料库词汇多样性(Corpus Lexicon Diversity, CLD)的指标来衡量词汇多样性。他们认为词汇多样性越高,数据集的质量就越好。从表9可以看出,SVAMP的CLD远低于ASDiv-A。在问题类型方面,SVAMP的多样性也不如ASDiv-A。尽管如此,我们将在下一节展示,对于当前模型而言,SVAMP实际上比ASDiv-A更具挑战性。因此,我们认为词汇多样性并不是衡量MWP数据集质量的可靠方法。相反,它可能取决于其他因素,例如MWP结构的多样性,这些因素阻止了模型利用浅层启发式方法。

5.3 Experiments on SVAMP

5.3 SVAMP 实验

We train the three considered models on a combination of MAWPS and ASDiv-A and test them on SVAMP. The scores of all three models with and without RoBERTa embeddings for various subsets of SVAMP can be seen in Table 10.

我们在MAWPS和ASDiv-A的组合上训练了这三种模型,并在SVAMP上进行了测试。这三种模型在SVAMP不同子集上使用和不使用RoBERTa嵌入的得分见表10。

Table 10: Results of models on the SVAMP challenge set. $S$ indicates that the model is trained from scratch. $R$ indicates that the model was trained with RoBERTa embeddings. The first row shows the results for the full dataset. The next two rows show the results for subsets of SVAMP composed of examples that have equations with one operator and two operators respectively. The last four rows show the results for subsets of SVAMP composed of examples of type Addition, Subtraction, Multiplication and Division respectively.

表 10: SVAMP挑战集上的模型结果。$S$表示模型是从头开始训练的。$R$表示模型使用了RoBERTa嵌入进行训练。第一行展示了完整数据集的结果。接下来的两行分别展示了SVAMP中由包含一个运算符和两个运算符的方程组成的子集结果。最后四行分别展示了由加法、减法、乘法和除法类型示例组成的SVAMP子集结果。

The best performing Graph2Tree model is only able to achieve an accuracy of $43.8%$ on SVAMP. This indicates that the problems in SVAMP are indeed more challenging for the models than the problems in ASDiv-A and MAWPS despite being of the same scope and type and less diverse. Table 27 in the Appendix lists some simple examples from SVAMP on which the best performing model fails. These results lend further support to our claim that existing models cannot robustly solve elementary level word problems.

表现最佳的 Graph2Tree 模型在 SVAMP 上仅能达到 $43.8%$ 的准确率。这表明,尽管 SVAMP 的问题与 ASDiv-A 和 MAWPS 同属一个范围和类型且多样性较低,但对模型而言确实更具挑战性。附录中的表 27 列出了 SVAMP 的一些简单示例,表现最佳的模型在这些问题上也未能成功。这些结果进一步支持了我们的观点:现有模型无法稳健解决基础水平的文字问题。

Table 12: Accuracies ( ) of the constrained model on SVAMP. (R) denotes that the model is provided with non-contextual RoBERTa pretrained embeddings while (S) denotes that the model is trained from scratch.

表 12: 受限模型在SVAMP上的准确率 ( )。 (R) 表示模型使用了非上下文RoBERTa预训练嵌入, (S) 表示模型是从零开始训练的。

| Seq2Seq | GTS | Graph2Tree | ||||

|---|---|---|---|---|---|---|

| S | R | S | R | S | R | |

| Full Set | 24.2 | 40.3 | 30.8 | 41.0 | 36.5 | 43.8 |

| One-Op Two-Op | 25.4 20.3 | 42.6 33.1 | 31.7 27.9 | 44.6 29.7 | 42.9 16.1 | 51.9 17.8 |

| ADD | 28.5 | 41.9 | 35.8 | 36.3 | 24.9 | 36.8 |

| SUB | 22.3 | 35.1 | 26.7 | 36.9 | 41.3 | 41.3 |

| MUL | 17.9 | 38.7 | 29.2 | 38.7 | 27.4 | 35.8 |

| DIV | 29.3 | 56.3 | 39.5 | 61.1 | 40.7 | 65.3 |

Next, we remove the questions from the examples in SVAMP and evaluate them using the three models with RoBERTa embeddings trained on combined MAWPS and ASDiv-A. The scores can be seen in Table 11. The accuracy drops by half when compared to ASDiv-A and more than half compared to MAWPS suggesting that the problems in SVAMP are more sensitive to the information present in the question. We also evaluate the performance of the constrained model on SVAMP when trained on MAWPS and ASDiv-A. The best model achieves only $18.3%$ accuracy (see Table 12) which is marginally better than the majority template baseline. This shows that the problems in SVAMP are less vulnerable to being solved by models using simple patterns and that a model needs contextual information in order to solve them.

接下来,我们从SVAMP的示例中移除问题部分,并使用三个基于RoBERTa嵌入(在MAWPS和ASDiv-A联合训练集上训练)的模型进行评估。结果如表11所示。与ASDiv-A相比准确率下降了一半,与MAWPS相比降幅更大,这表明SVAMP中的问题对题目文本信息更为敏感。我们还评估了约束模型在MAWPS和ASDiv-A训练集上对SVAMP的表现。最佳模型仅达到$18.3%$的准确率(见表12),略高于多数模板基线。这表明SVAMP的问题较难被简单模式匹配的模型解决,需要依赖上下文信息才能正确求解。

Table 11: Accuracies ( ) of models on SVAMP without questions. The 5-fold CV accuracy scores for ASDiv-A without questions are restated for easier comparison.

表 11: 各模型在无问题SVAMP数据集上的准确率 ( )。为便于比较,同时列出了无问题ASDiv-A数据集的5折交叉验证准确率分数。

| 模型 | SVAMP无问题 | ASDiv-A无问题 |

|---|---|---|

| Seq2Seq | 29.2 | 58.7 |

| GTS | 28.6 | 60.7 |

| Graph2Tree | 30.8 | 64.4 |

| 模型 | SVAMP |

|---|---|

| FFN + LSTM 解码器 (S) | 17.5 |

| FFN + LSTM 解码器 (R) | 18.3 |

| 多数模板基线 | 11.7 |

We also explored using SVAMP for training by combining it with ASDiv-A and MAWPS. We performed 5-fold cross-validation over SVAMP where the model was trained on a combination of the three datasets and tested on unseen examples from SVAMP. To create the folds, we first divide the seed examples into five sets, with each type of example distributed nearly equally among the sets. A fold is obtained by combining all the examples in SVAMP that were created using the seed examples in a set. In this way, we get five different folds from the five sets. We found that the best model achieved about $65%$ accuracy. This indicates that even with additional training data existing models are still not close to the performance that was estimated based on prior benchmark datasets.

我们还尝试将SVAMP与ASDiv-A和MAWPS结合用于训练。我们采用5折交叉验证方法,模型在三个数据集的组合上进行训练,并在SVAMP的未见样本上进行测试。创建折次时,首先将种子样本平均分为五组,确保每组包含各类样本的比例相近。每个折次由使用同组种子样本生成的所有SVAMP样本组成,最终得到五个不同的折次。实验发现最佳模型准确率约为$65%$,这表明即使增加训练数据,现有模型的性能仍远低于基于先前基准数据集预估的水平。

To check the influence of different categories of variations in SVAMP, for each category, we measure the difference between the accuracy of the best model on the full dataset and its accuracy on a subset containing no example created from that category of variations. The results are shown in Table 13. Both the Question Sensitivity and Struc

为了检验SVAMP中不同类别变体的影响,我们对每个类别测量了最佳模型在全数据集上的准确率与不含该类别变体样本子集上的准确率差异。结果如表13所示。问题敏感性和结构...

| Removed Category | #Removed Examples | Change in Accuracy (△) |

| QuestionSensitivity | 462 | +13.7 |

| Reasoning Ability | 649 | -3.3 |

| StructuralInvariance | 467 | +4.5 |

| 移除类别 | 移除示例数量 | 准确率变化 (△) |

|---|---|---|

| QuestionSensitivity | 462 | +13.7 |

| Reasoning Ability | 649 | -3.3 |

| StructuralInvariance | 467 | +4.5 |

Table 13: Change in accuracies when categories are removed. The Change in Accuracy $\Delta:=:A c c(F u l l:-:$ $\mathit{C a t)-A c c(F u l l)}$ , where $A c c(F u l l)$ is the accuracy on the full set and $A c c(F u l l-C a t)$ is the accuracy on the set of examples left after removing all examples which were created using Category $C a t$ either by itself, or in use with other categories.

表 13: 移除类别时的准确率变化。准确率变化 $\Delta:=:A c c(F u l l:-:$ $\mathit{C a t)-A c c(F u l l)}$,其中 $A c c(F u l l)$ 表示完整数据集上的准确率,$A c c(F u l l-C a t)$ 表示移除所有使用类别 $C a t$ (单独或与其他类别组合生成) 的样本后剩余样本集上的准确率。

Table 14: Change in accuracies when variations are removed. The Change in Accuracy $\Delta:=:A c c(F u l l:-$ $V a r)-A c c(F u l l)$ , where $A c c(F u l l)$ is the accuracy on the full set and $A c c(F u l l-V a r)$ is the accuracy on the set of examples left after removing all examples which were created using Variation $V a r$ either by itself, or in use with other variations.

表 14: 移除变体时的准确率变化。准确率变化 $\Delta:=:A c c(F u l l:-$ $V a r)-A c c(F u l l)$ ,其中 $A c c(F u l l)$ 表示完整数据集上的准确率, $A c c(F u l l-V a r)$ 表示移除所有使用变体 $V a r$ (单独或与其他变体组合使用)生成的样本后剩余样本集上的准确率。

| 移除变体 | 移除样本数 | 准确率变化 (%) |

|---|---|---|

| Same Obj,Diff Struct | 325 | +7.3 |

| DiffObj,SameStruct | 69 | +1.5 |

| Diff Obj,Diff Struct | 74 | +1.3 |

| AddRelInfo | 264 | +5.5 |

| Change Info | 149 | +3.2 |

| InvertOperation | 255 | -10.2 |

| Changeorderof Obj | 107 | +2.3 |

| Change order ofPhrases | 152 | -3.3 |

| AddIrrel Info | 281 | +6.9 |

tural Invariance categories of variations show an increase in accuracy when their examples are removed, thereby indicating that they make SVAMP more challenging. The decrease in accuracy for the Reasoning Ability category can be attributed in large part to the Invert Operation variation. This is not surprising because most of the examples created from Invert Operation are almost indistinguishable from examples in ASDiv-A, which the model has seen during training. The scores for each individual variation are provided in Table 14.

自然不变性类别的变体在移除其示例后准确率有所提升,这表明它们使SVAMP更具挑战性。推理能力类别准确率的下降很大程度上可归因于反转操作(Invert Operation)变体。这并不令人意外,因为大多数通过反转操作生成的示例与训练时模型见过的ASDiv-A数据集中的示例几乎无法区分。各变体的具体得分见表14。

We also check the break-up of performance of the best performing Graph2Tree model according to the number of numbers present in the text of the input problem. We trained the model on both ASDiv-A and MAWPS and tested on SVAMP and compare those results against the 5-fold crossvalidation setting of ASDiv-A. The scores are provided in Table 15. While the model can solve many problems consisting of only two numbers in the input text (even in our challenge set), it performs very badly on problems having more than two numbers. This shows that current methods are incapable of properly associating numbers to their context. Also, the gap between the performance on ASDiv-A and SVAMP is high, indicating that the examples in SVAMP are more difficult for these models to solve than the examples in ASDiv-A even when considering the structurally same type of word problems.

我们还根据输入问题文本中的数字数量,分析了表现最佳的 Graph2Tree 模型的性能细分情况。该模型同时在 ASDiv-A 和 MAWPS 上训练,并在 SVAMP 上测试,然后将这些结果与 ASDiv-A 的 5 折交叉验证设置进行比较。具体分数见表 15。虽然该模型能解决许多输入文本中仅含两个数字的问题(即使是在我们的挑战集中),但在处理超过两个数字的问题时表现非常糟糕。这表明当前方法无法正确将数字与其上下文关联起来。此外,ASDiv-A 和 SVAMP 之间的性能差距较大,说明即使考虑结构相同的文字问题类型,SVAMP 中的样例对这些模型而言也比 ASDiv-A 中的样例更难解决。

6 Final Remarks

6 最终评述

Going back to the original question, are existing NLP models able to solve elementary math word problems? This paper gives a negative answer. We have empirically shown that the benchmark English MWP datasets suffer from artifacts making them unreliable to gauge the performance of MWP solvers: we demonstrated that the majority of problems in the existing datasets can be solved by simple heuristics even without word-order information or the question text.

回到最初的问题,现有的自然语言处理 (NLP) 模型能否解决基础数学应用题?本文给出了否定答案。我们通过实验证明,当前英语数学应用题 (MWP) 基准数据集存在人为偏差,导致其无法可靠评估解题模型的性能:研究表明,即使不依赖词序信息或题干文本,现有数据集中大多数题目也能通过简单启发式方法解决。

Table 15: Accuracy break-up according to the number of numbers in the input problem. 2 nums refers to the subset of problems which have only 2 numbers in the problem text. Similarly, 3 nums and 4 nums are subsets that contain 3 and 4 different numbers in the problem text respectively.

<h