You Only Train Once: A Unified Framework for Both Full-Reference and No-Reference Image Quality Assessment

You Only Train Once: 全参考与无参考图像质量评估的统一框架

Abstract—Although recent efforts in image quality assessment (IQA) have achieved promising performance, there still exists a considerable gap compared to the human visual system (HVS). One significant disparity lies in humans’ seamless transition between full reference (FR) and no reference (NR) tasks, whereas existing models are constrained to either FR or NR tasks. This disparity implies the necessity of designing two distinct systems, thereby greatly diminishing the model’s versatility. Therefore, our focus lies in unifying FR and NR IQA under a single framework. Specifically, we first employ an encoder to extract multi-level features from input images. Then a Hierarchical Attention (HA) module is proposed as a universal adapter for both FR and NR inputs to model the spatial distortion at each encoder stage. Furthermore, considering that different distortions contaminate encoder stages and damage image semantic meaning differently, a Semantic Distortion Aware (SDA) module is proposed to examine feature correlations between shallow and deep layers of the encoder. By adopting HA and SDA, the proposed network can effectively perform both FR and NR IQA. When our proposed model is independently trained on NR or FR IQA tasks, it outperforms existing models and achieves state-of-theart performance. Moreover, when trained jointly on NR and FR IQA tasks, it further enhances the performance of NR IQA while achieving on-par performance in the state-of-the-art FR IQA. You only train once to perform both IQA tasks. Code will be released at: https://github.com/BarCode Reader/YOTO.

摘要—尽管当前图像质量评估(IQA)研究已取得显著进展,但与人类视觉系统(HVS)仍存在明显差距。一个重要差异体现在人类能够无缝切换全参考(FR)和无参考(NR)任务,而现有模型只能处理单一任务类型。这种差异导致需要设计两套独立系统,极大限制了模型的多功能性。为此,我们致力于在统一框架下实现FR和NR IQA。具体而言,首先通过编码器提取输入图像的多层次特征;随后提出分层注意力(HA)模块作为通用适配器,对FR/NR输入建模各编码阶段的空域失真;进一步考虑到不同失真对编码阶段和图像语义的差异化影响,提出语义失真感知(SDA)模块来检测编码器浅层与深层特征的相关性。通过HA和SDA模块,本网络可同时高效执行FR和NR IQA任务。当模型分别在NR或FR任务上独立训练时,其性能超越现有模型并达到最优水平;当联合训练时,NR任务性能进一步提升的同时保持FR任务的顶尖表现。仅需单次训练即可完成双重IQA任务。代码发布于:https://github.com/BarCodeReader/YOTO。

Index Terms—Full Reference IQA, No Reference IQA, Transformer, Unified Structure

索引术语 - 全参考图像质量评估 (Full Reference IQA) , 无参考图像质量评估 (No Reference IQA) , Transformer , 统一结构

I. INTRODUCTION

I. 引言

The importance of accurately assessing image quality has become increasingly crucial in many fields [14], [38], [43], [71], [78]. Though the human vision system (HVS) is capable of identifying high-quality images effortlessly, it is laborintensive, and in most cases infeasible, to assess image quality via human workers. Therefore, image quality assessment (IQA) aims to develop objective metrics that can predict image quality as perceived by humans [29].

准确评估图像质量的重要性在许多领域变得愈发关键[14], [38], [43], [71], [78]。尽管人类视觉系统(HVS)能够轻松识别高质量图像,但通过人工评估图像质量既费时费力,在大多数情况下也不可行。因此,图像质量评估(IQA)致力于开发能预测人类感知质量的客观指标[29]。

Depending on the presence of high-quality reference images, IQA tasks can be grouped into two categories: NoReference (NR) and Full-Reference (FR) 1. Early studies apply hand-crafted metrics to perform the IQA. FR-IQA metrics like PSNR, SSIM [62], and NSER [73] assess image quality via signal fidelity and structural similarity between distorted and reference images. NR-IQA metrics such as DIIVINE [53] and BRISQUE [47] attempt to model natural scene statistics for quality measurement. These methods are well-defined for specific distortions and are usually poorly generalized. Unlike other methods that compare distorted images with pristine images, BPRI [42] and BMPRI [44] aim to first obtain characteristics from the image that suffers the most distortion, namely pseudo-reference image (PRI), then estimate quality scores by the blockiness, sharpness, and noisiness between distorted images and PRIs.

根据是否存在高质量参考图像,图像质量评估 (IQA) 任务可分为两类:无参考 (NR) 和全参考 (FR) [1]。早期研究采用手工设计的指标进行 IQA。全参考指标如 PSNR、SSIM [62] 和 NSER [73] 通过信号保真度以及失真图像与参考图像间的结构相似性来评估质量。无参考指标如 DIIVINE [53] 和 BRISQUE [47] 则尝试建模自然场景统计特性进行质量度量。这些方法针对特定失真类型定义明确,但通常泛化性较差。与其他直接比较失真图像与原始图像的方法不同,BPRI [42] 和 BMPRI [44] 旨在先从失真最严重的图像中提取特征(即伪参考图像 (PRI)),然后通过失真图像与 PRI 之间的块效应、锐度和噪声度来估算质量分数。

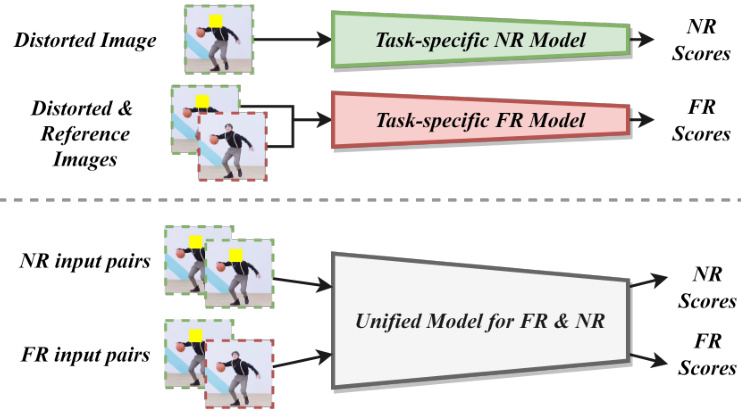

Fig. 1. Illustration of the main purpose for the proposed network (the lower part in the figure), where different image pairs can be fed into the network to yield FR/NR IQA scores using the same architecture. The traditional framework is given as the upper part of the figure for easy comparison. Our method offers great simplicity in both training and applications, minimizing performance inconsistencies on switching FR/NR tasks, and it achieves stateof-the-art performance on both FR and NR IQA benchmarks.

图 1: 所提出网络主要用途的示意图(图中下半部分),其中不同图像对可输入网络,使用相同架构生成FR/NR IQA分数。传统框架作为对比展示在图上半部分。我们的方法在训练和应用中均实现了高度简洁性,最大程度减少了FR/NR任务切换时的性能不一致问题,并在FR和NR IQA基准测试中均达到了最先进水平。

In the deep learning era, the core idea for most FR-IQA methods is to estimate quality score by identifying lowquality regions through differ enc ing the reference and distorted images or their extracted features [22], [52], [58]. For NRIQA, common practices are adopting ranking [36], [48], [49], feature comparison [41], [63], generating pristine images using Generative Adversarial Networks (GAN) [5], [25], [33], [39], and applying Transformers or multi-scale CNNs for better spatial modeling of distortions [7], [14], [35], [57], [65], [67], [77]. The rationale behind these NR methods is to implicitly identify low-quality regions for IQA score estimation.

在深度学习时代,大多数全参考图像质量评估(FR-IQA)方法的核心思想是通过比较参考图像与失真图像或其提取特征的差异来识别低质量区域,从而估计质量分数[22]、[52]、[58]。对于无参考图像质量评估(NRIQA),常见方法包括采用排序策略[36]、[48]、[49]、特征比对[41]、[63]、使用生成对抗网络(GAN)生成原始图像[5]、[25]、[33]、[39],以及应用Transformer或多尺度卷积神经网络(CNN)来实现更优的失真空间建模[7]、[14]、[35]、[57]、[65]、[67]、[77]。这些无参考方法的基本原理是通过隐式识别低质量区域来实现图像质量评分估计。

With or without the reference image, human beings can perform both FR and NR IQA effortlessly. This indicates that the FR and NR IQA share common ali ties and there is a general model in our brains capable of performing both tasks. Recent research has found Saliency Map [3], [30], [54], [66] to be beneficial for both FR and NR IQA tasks. This serves as additional evidence demonstrating the common ali ties between FR and NR IQA. Unfortunately, methods in the field are typically task-specific designed, as illustrated in Fig.1. They are indeed effective; however, once trained, they cannot be readily transferred to other IQA tasks (like from NR to FR or vice versa). Consequently, they lack a certain degree of generality and still exhibit a gap compared to humans, thus hindering our exploration of the underlying essence of IQA. A model capable of performing both IQA tasks can narrow this gap and thus it is meaningful and necessary. Besides, not only are human beings capable of perceiving the amount of distortion present in an image, but we are also adept at identifying the extent to which such distortion affects the semantic meaning, as illustrated in Fig.2. Though the field has recognized the significance of semantic information such as saliency, we lack an effective method for modeling the impact of distortion on semantic meaning.

无论是否有参考图像,人类都能轻松完成全参考(FR)和无参考(NR)图像质量评估(IQA)。这表明FR与NR IQA具有共同特性,我们大脑中存在能够同时处理这两类任务的通用模型。最新研究发现显著性图(Saliency Map)[3][30][54][66]对FR和NR IQA任务均有助益,这进一步印证了两者的内在关联。但现有方法通常如图1所示采用任务专用设计:虽然效果显著,但训练完成后难以迁移至其他IQA任务(如NR转FR或反之),缺乏人类所具备的泛化能力,阻碍了我们探究IQA的本质。能同时处理两类任务的模型将缩小这一差距,其研究具有重要价值。此外如图2所示,人类不仅能感知图像失真程度,还能判断失真对语义的影响程度。尽管该领域已认识到显著性等语义信息的重要性,但目前仍缺乏有效方法来建模失真对语义的影响。

Fig. 2. Illustration of how humans assess image quality when distortion is present: which one has the highest quality score? (a) a $100\times100$ yellow block is presented in the background. (b) four blocks in both background and foreground (the basketball player). (c) a $200\times200$ block in the background. (d) a $100\times100$ block on the face. Quality score based on the amount of distortion: $(b)<(a)$ and $(c)<(a)$ , while that based on the semantic impact to content (the player): $(b)<(c)$ and thus $(b)<(c)<(a)$ . How about (d)? It has less amount of distortions than (b) and (c). However, it should have the lowest quality score because the most important message, the face, is damaged. Thus, aside from the amount, distortion is significant if it has critical damage to an image’s semantic meaning.

图 2: 展示人类如何评估存在失真时的图像质量:哪张图的质量得分最高? (a) 背景中呈现一个 $100\times100$ 的黄色色块。 (b) 背景和前景(篮球运动员)中各有四个色块。 (c) 背景中有一个 $200\times200$ 的色块。 (d) 面部有一个 $100\times100$ 的色块。基于失真量的质量评分: $(b)<(a)$ 且 $(c)<(a)$ ,而基于对内容(运动员)语义影响的评分: $(b)<(c)$ 因此 $(b)<(c)<(a)$ 。那么(d)呢?它的失真量比(b)和(c)少。然而,由于最重要的信息——面部被破坏,它应该获得最低的质量分数。因此,除了失真量之外,若失真对图像的语义含义造成关键损害,则其影响更为显著。

To this end, we aim to narrow the gap between FR and NR IQA by developing a unified model and to improve existing IQA performance from the perspective of semantic modeling of distortion. Specifically, we first apply an encoder (ResNet50 [18] or Swin Transformer [37]) for feature extraction. Input images are encoded into multiscale features. We then utilize the attention mechanism as the universal adaptor for FR and NR tasks. Depending on the input pairs, i.e. {distorted img., distorted img.} or {distorted img., pristine img.}, the attention block can dynamically switch between self-attention and cross-attention thus unifying FR and NR tasks. However, global attention is usually sparse due to the large spatial dimension of shallow features. To further model the spatial distortion, by applying a scale factor $r$ , we partitioned the attention matrix into patches and computed the local attention. We stack several attention blocks together with different scale factors and name it the Hierarchical Attention (HA) module. To model the semantic impact caused by distortion, we developed a Semantic Distortion Aware (SDA) module. Distortions at one encoder stage, based on their types and strengths, might remain and affect the next few encoder stages differently. Though self-attention is effective in identifying spatially spread distortions, it has a weak representation of how distortions are correlated across all encoder stages. To this end, we propose to compute the crossattention between features from different encoder stages. To ensure efficiency, the calculation is performed only between high-level feature pixels and their associated low-level subregions (like a ”cone”). Lastly, features from HA and SDA are concatenated and aggregated for quality score generation.

为此,我们旨在通过开发统一模型缩小全参考(FR)与无参考(NR)图像质量评估(IQA)之间的差距,并从失真语义建模的角度提升现有IQA性能。具体而言,我们首先采用ResNet50[18]或Swin Transformer[37]编码器进行特征提取,将输入图像编码为多尺度特征。随后利用注意力机制作为FR与NR任务的通用适配器,根据输入图像对{失真图像, 失真图像}或{失真图像, 原始图像},注意力块可动态切换自注意力与交叉注意力模式,从而实现FR/NR任务统一。但由于浅层特征空间维度较大,全局注意力往往较为稀疏。为更好地建模空间失真,我们通过尺度因子$r$将注意力矩阵分块计算局部注意力,堆叠多个不同尺度因子的注意力块构成层次化注意力(HA)模块。针对失真引发的语义影响,我们开发了语义失真感知(SDA)模块。某一编码阶段的失真可能因其类型和强度持续影响后续编码阶段。虽然自注意力能有效识别空间扩散的失真,但难以表征跨编码阶段的失真关联。为此,我们提出计算不同编码阶段特征间的交叉注意力,为保障效率,仅在高阶特征像素与其关联的低阶子区域(如"锥形"区域)间进行计算。最终将HA与SDA模块的特征拼接聚合以生成质量分数。

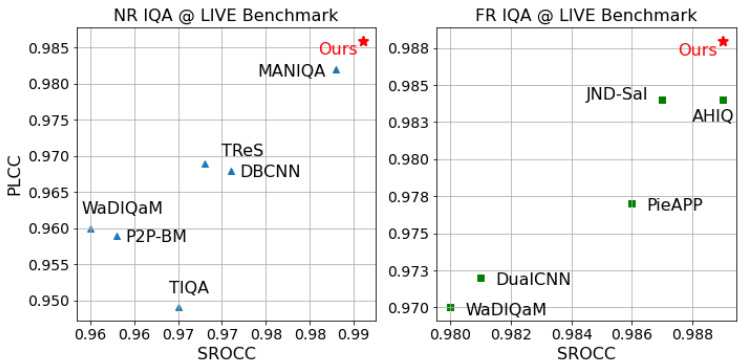

Fig. 3. Performance comparison against other FR and NR IQA models on LIVE [55] dataset. Our method achieves state-of-the-art performance on both FR and NR IQA benchmarks using the same network architecture.

图 3: LIVE [55] 数据集上与其他FR (全参考) 和NR (无参考) IQA (图像质量评估) 模型的性能对比。我们的方法使用相同网络架构,在FR和NR IQA基准测试中均达到了最先进的性能水平。

Extensive experiments on four FR-IQA benchmarks and seven NR-IQA benchmarks demonstrate the effectiveness of our proposed network. The proposed network outperforms other state-of-the-art methods on both FR and NR tasks using a unified architecture, as shown in Fig.3. To sum up, our contributions are threefold as follows:

在四个FR-IQA(全参考图像质量评估)基准和七个NR-IQA(无参考图像质量评估)基准上的大量实验证明了我们提出的网络的有效性。如图3所示,所提出的网络采用统一架构,在FR和NR任务上均优于其他最先进方法。总之,我们的贡献主要体现在以下三个方面:

• We propose a unified framework, YOTO, for both FR and NR IQA tasks and achieve state-of-the-art performance. The unified structure offers great simplicity and to our best knowledge, we are the first to aim to complete these two tasks using the same network architecture for IQA. We introduce the Hierarchical Attention (HA) module as the universal adapter for both NR and FR inputs and for spatial distortion modeling. We further devise a Semantic Distortion Aware (SDA) module for semantic impact modeling. The unified network also enables joint training on both FR and NR IQA. You only train once, and the obtained model can achieve on-par performance on the FR task and better performance on the NR task trained separately.

• 我们提出了一个统一框架YOTO,可同时完成FR(全参考)和NR(无参考)图像质量评价(IQA)任务,并实现最先进性能。该统一结构具有高度简洁性,据我们所知,我们是首个尝试用同一网络架构完成这两类IQA任务的团队。我们提出的分层注意力(HA)模块可作为NR/FR输入的统一适配器,同时进行空间失真建模;进一步设计的语义失真感知(SDA)模块则用于语义影响建模。这种统一网络还支持FR与NR IQA的联合训练——只需训练一次,所得模型在FR任务上能达到分立训练的同等性能,在NR任务上则表现更优。

II. RELATED WORK

II. 相关工作

FR-IQA Since reference images are given alongside distorted images, the core idea is to estimate the quality scores based on the differences. The most straightforward way is to calculate the Mean-squared Error (MSE) between distorted images and reference images. However, MSE cannot effectively reflect visual quality perceived by humans [13] and various image quality metrics have been developed striving for better alignment with HVS [34]. With the emergence of deep learning, DeepQA [22] applies CNN on distorted images and their corresponding difference maps to estimate quality scores. Similarly, DualCNN [58] and PieAPP [52] perform IQA based on the feature differences between pristine images and distorted images. These methods focus on modeling spatial differences but ignore the semantic impacts caused by distortion. To mitigate this issue, saliency was introduced to reflect visually important regions [1], [21], [54], [75]. However, saliency is simply served as an attention map and usually requires an extra saliency network for saliency generation. Thus better modeling of semantic impact caused by distortion still awaits more investigation.

FR-IQA 由于失真图像会与参考图像一起给出,其核心思想是基于两者差异来估计质量分数。最直接的方法是计算失真图像与参考图像之间的均方误差 (MSE)。但 MSE 无法有效反映人类感知的视觉质量 [13],因此研究者开发了多种图像质量指标以更好地贴合人类视觉系统 (HVS) [34]。随着深度学习的兴起,DeepQA [22] 对失真图像及其差异图应用 CNN 来估计质量分数。类似地,DualCNN [58] 和 PieAPP [52] 基于原始图像与失真图像之间的特征差异进行 IQA。这些方法专注于建模空间差异,但忽略了失真造成的语义影响。为解决该问题,研究者引入显著性来反映视觉重要区域 [1], [21], [54], [75]。然而显著性仅作为注意力图使用,且通常需要额外的显著性网络来生成。因此对于失真造成的语义影响仍需更深入的建模研究。

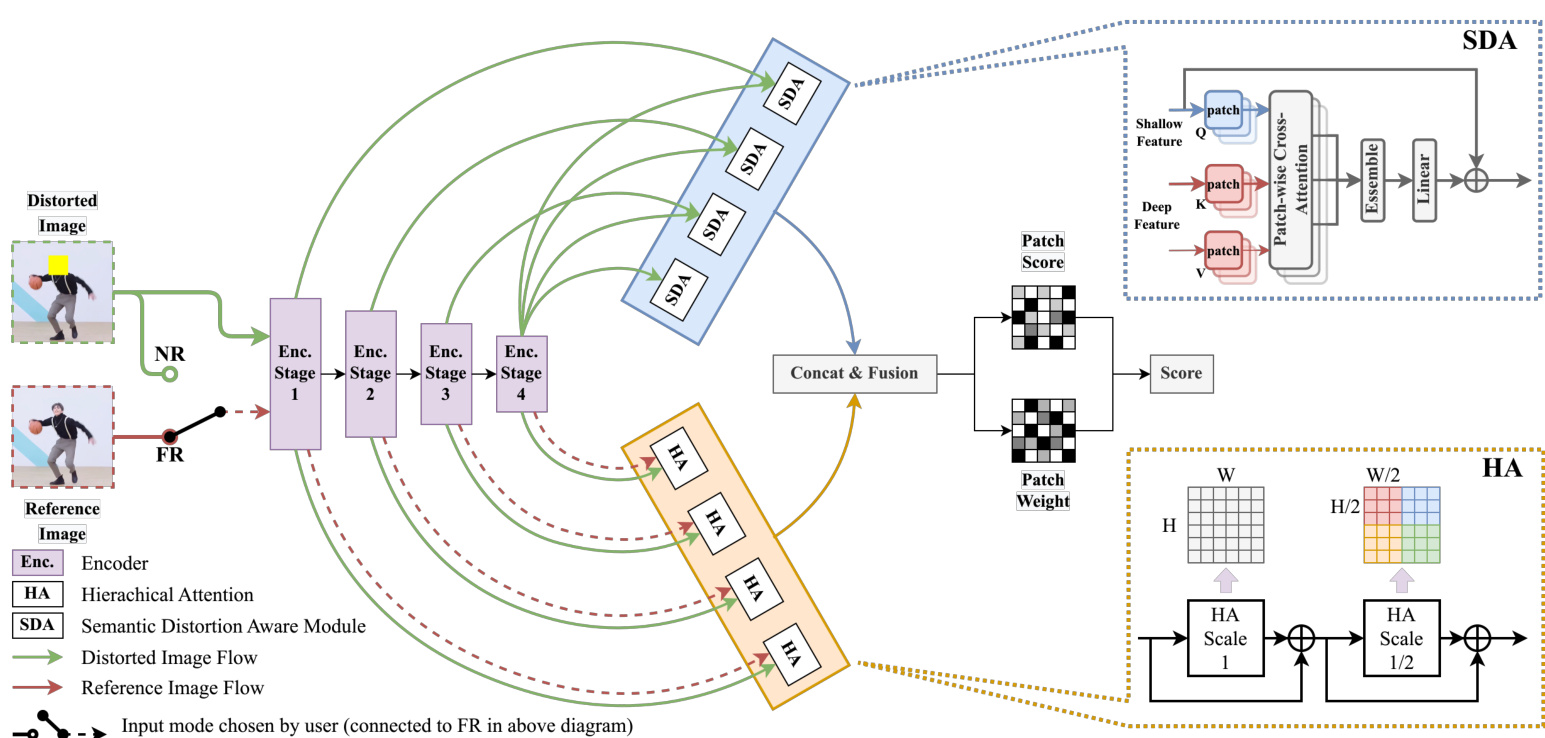

Fig. 4. Network architecture of the proposed YOTO. Input types can be chosen by the user depending on the presence of reference images for the network to perform FR or NR IQA tasks (i.e. the dotted red arrow will become green if the user chooses to perform NR IQA). The network receives a pair of images in the form of [distorted, distorted] or [distorted, ref erence] for NR and FR IQA respectively. ResNet50 or Swin Transformer is adopted as the encoder backbone (purple). A Hierarchical Attention (HA) module with global and regional attention is developed to highlight potential distortion-contaminated areas in encoder features. If only distorted images are provided, self-attention is applied in the HA module. If reference images are given, the HA module will compute the cross-attention between distorted and reference features. To model the semantic impact caused by distortion, a Semantic Distortion Aware (SDA) module is designed and densely applied to explore the similarity between shallow and deep features using only distorted image features. The obtained features from HA and SDA are concatenated and fused for IQA score estimation via a commonly applied patch-wise attention. Best viewed in color.

图 4: 提出的YOTO网络架构。输入类型可由用户根据参考图像的存在情况选择,使网络执行FR或NR IQA任务(即若用户选择执行NR IQA时,红色虚线箭头将变为绿色)。网络分别以[失真图像, 失真图像]或[失真图像, 参考图像]的形式接收图像对以进行NR和FR IQA。采用ResNet50或Swin Transformer作为编码器主干(紫色部分)。开发了具有全局和区域注意力的分层注意力(HA)模块,以突出编码器特征中潜在的失真污染区域。若仅提供失真图像,HA模块将应用自注意力机制;若给定参考图像,则HA模块会计算失真特征与参考特征间的交叉注意力。为建模失真引起的语义影响,设计了语义失真感知(SDA)模块并密集应用,仅利用失真图像特征探索浅层与深层特征间的相似性。从HA和SDA模块获得的特征经拼接融合后,通过常用的分块注意力机制进行IQA分数预测。建议彩色查看。

NR-IQA One of the ideas for NR IQA is to generate the reference image using GAN-based methods [33], [39] to transform NR into FR tasks. However, the performance of this approach is greatly bottle necked by the GAN itself. Without explicitly generating reference images, similar to FR IQA, saliency [66] is usually considered as auxiliary information for better quality score estimation. Alternatively, ranking [36], [60] is commonly applied to implicitly learn the distortion level in images using Siamese networks. Besides, Vision Transformers [8], [14], [60], [67], [70] are adopted to capture better spatial relationships aiming to identify hidden distortion patterns. Although the aforementioned methods share great similarities with FR IQA, most networks are typically designed only for NR tasks. An ideal model should be able to perform both FR and NR IQA tasks, just like human beings. To our best knowledge, the only method [3] reporting performances on both NR and FR benchmarks still requires modifications of its network architecture, and its performance is inferior to the latest single-task models. Hence, the exploration of a unified model is non-trivial, as it can narrow the gap between current models and human capabilities, while also inspiring and facilitating a better exploration of underlying principles between FR and NR IQA.

无参考图像质量评估 (NR-IQA)

一种NR IQA的思路是使用基于生成对抗网络 (GAN) 的方法 [33][39] 生成参考图像,从而将NR任务转化为全参考 (FR) 任务。然而,这种方法的表现很大程度上受限于GAN本身的性能。与FR IQA类似,在不显式生成参考图像的情况下,显著性 [66] 通常被视为辅助信息以提升质量评分估计的准确性。此外,排序学习 [36][60] 常被应用于通过孪生网络隐式学习图像中的失真程度。同时,视觉Transformer [8][14][60][67][70] 被用来捕捉更好的空间关系,旨在识别隐藏的失真模式。尽管上述方法与FR IQA存在高度相似性,但大多数网络通常仅针对NR任务设计。理想的模型应像人类一样能够同时执行FR和NR IQA任务。据我们所知,唯一在NR和FR基准测试中均报告性能的方法 [3] 仍需要调整其网络架构,且其表现逊色于最新的单任务模型。因此,探索统一模型具有重要意义,它不仅能缩小现有模型与人类能力之间的差距,还能启发并促进对FR和NR IQA底层原理的更深入探索。

Cross-attention Cross-attention was originally introduced in Natural Language Processing (NLP) to translate a source language to another (eg., English to French) [59]. Due to its ability to examine correlations between different modalities, it has been widely adopted in various NLP and computer vision applications, such as text and image matching [28], [64]. It is also widely applied in Transformers for multiscale image feature correlation modeling [6], [31]. For IQA tasks, various self-attention-related models have been proposed thanks to the long-range dependency modeling capability of transformers [8], [26], [60], [67]. The self-attention is applied at each encoder stage and the resulting weighted features are effective in identifying distortion in spatial dimensions. However, part of the distortion at one encoder stage might remain and continue to affect the next few encoder stages. This part of features should gain higher attention consistently as they have greater impacts on the semantics of the image. Thus solely applying self-attention for each encoder stage cannot effectively reflect the semantic impact caused by distortion. Cross-attention, on the other side, is not well investigated yet and seems a promising solution for semantic impact modeling. Since different types of distortion will contaminate encoder layers differently, we can apply cross-attention to calculate correlations between shallow features and deep features to estimate the strength of distortion affecting the semantic meaning of an image.

交叉注意力 (Cross-attention)

交叉注意力最初在自然语言处理 (NLP) 领域提出,用于实现源语言到目标语言的翻译 (例如英语到法语) [59]。由于其能够分析不同模态间的相关性,该技术已被广泛应用于各类 NLP 和计算机视觉任务,例如文本-图像匹配 [28][64]。在 Transformer 中,交叉注意力也被广泛用于多尺度图像特征关联建模 [6][31]。

对于图像质量评估 (IQA) 任务,得益于 Transformer 的长距离依赖建模能力,已有多种基于自注意力 (self-attention) 的模型被提出 [8][26][60][67]。自注意力机制在每个编码器阶段都会应用,其生成的加权特征能有效识别空间维度的失真。然而,某个编码器阶段的部分失真可能会持续影响后续多个编码阶段。这些特征因其对图像语义的显著影响,应当持续获得更高关注度。因此,仅在各编码器阶段应用自注意力无法有效反映失真带来的语义影响。

相比之下,交叉注意力在语义影响建模领域尚未得到充分研究,却展现出潜在优势。由于不同类型的失真会对编码器各层产生差异化影响,我们可以通过交叉注意力计算浅层特征与深层特征的关联性,从而评估失真对图像语义的影响强度。

III. PROPOSED METHOD

III. 研究方法

A. Overall Architecture

A. 整体架构

The overall architecture is shown in Fig.4. We adopt ResNet [18] or Swin Transformer [37] as our encoder backbone for image feature encoding. The encoder is shared if reference images are present. Channel-wise attention is applied to encoder features. After that, a Hierarchical Attention (HA) module is developed to be an adaptor for NR and FR inputs and to identify potentially damaged regions in encoder features. The self-attention employed in the HA module will become cross-attention if pristine image features are present. Therefore the network is capable of receiving both FR and NR inputs. Furthermore, a Semantic Distortion Aware (SDA) module is proposed to estimate the semantic impact caused by distortion presented in the image. Finally, an aggregation block with patch-wise attention is devised for quality scores.

整体架构如图4所示。我们采用ResNet [18]或Swin Transformer [37]作为图像特征编码的骨干编码器。若存在参考图像,编码器将被共享使用。在编码器特征上应用了通道注意力机制。随后,开发了分层注意力(Hierarchical Attention, HA)模块作为NR和FR输入的适配器,并用于识别编码器特征中可能受损的区域。当存在原始图像特征时,HA模块中的自注意力机制将转变为交叉注意力机制,从而使网络能够同时接收FR和NR输入。此外,提出了语义失真感知(Semantic Distortion Aware, SDA)模块来评估图像中失真造成的语义影响。最后,设计了一个带有局部注意力机制的聚合块用于生成质量分数。

B. Hierarchical Attention (HA)

B. 分层注意力 (HA)

In order to simultaneously address FR and NR IQA tasks, a network requires an adaptive input processing module. The module should be capable of dynamically adjusting the calculation based on the presence or absence of reference images. In this regard, the attention mechanism from the original Transformer [59] is considered as a potential solution. When only distorted images are available, self-attention can be utilized to enable the network to identify the possible locations of distortion and adjust the weight of features accordingly. On the other hand, if pristine images are provided in addition to distorted images, cross-attention can be employed to achieve the same objective without increasing computational complexity.

为了同时处理FR和NR IQA任务,网络需要一个自适应输入处理模块。该模块应能根据参考图像是否存在动态调整计算方式。在这方面,原始Transformer [59]中的注意力机制被视为潜在解决方案。当仅存在失真图像时,可利用自注意力(self-attention)使网络识别可能的失真位置并相应调整特征权重;若同时提供原始图像,则可采用交叉注意力(cross-attention)在不增加计算复杂度的情况下实现相同目标。

where $Q,K,V$ are embeddings calculated by different MLP layers.

其中 $Q,K,V$ 是由不同 MLP (多层感知机) 层计算得到的嵌入向量。

However, the network has no idea which and when the pristine image is present in the input pairs. To this end, we introduce a segmentation embedding layer $e m b d(\cdot)$ indicating the input information of FR and NR IQA, as shown in Fig.5.

然而,网络无法识别输入图像对中何时存在原始图像。为此,我们引入了一个分割嵌入层 $embd(\cdot)$ 来指示FR(全参考)和NR(无参考)图像质量评估的输入信息,如图5所示。

where $x_{d i s}$ and $x_{r e f}$ denotes distorted and reference images, $\mathcal{F}$ stands for the network and ${\mathbf{0},\mathbf{1}}$ are embedding vectors.

其中 $x_{d i s}$ 和 $x_{r e f}$ 分别表示失真图像和参考图像,$\mathcal{F}$ 代表网络,${\mathbf{0},\mathbf{1}}$ 为嵌入向量。

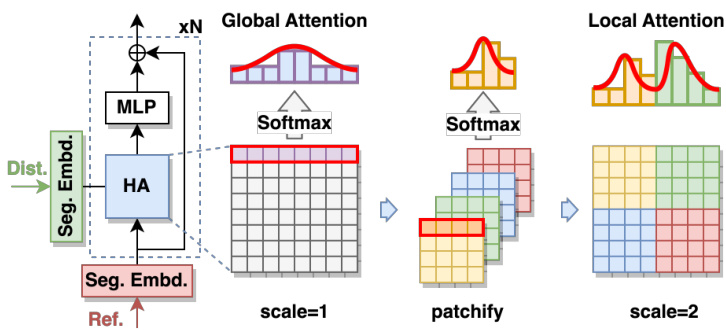

The adoption of attention resolves the issue of different inputs for FR and NR IQA. When computing attention on the encoder features, the attention matrix formed by patch embeddings can become quite large due to the typically large spatial dimension of shallow features. For example, a $512\times512$ single-channel image split into non-overlapping patches of $16\times16$ will give a sequence of $32\times32=1024$ in length and result in an attention matrix of $1024\times1024$ . As a result, after applying softmax to each row, the attention scores between patches might be very similar, leading to an indistinct weighting effect on the features. NLP and ViT models commonly resolve this problem by stacking multiple attention layers. However, this approach may not be efficient in terms of computation cost and convergence speed. To this end, we partition the feature maps into blocks and compute attention for each block separately, and then concatenate the resulting attention matrices into a large matrix. Please note our approach has a distinct difference from Multi-head Self-attention (MSA) [59] as we split features from shape $[B a t c h,L e n g t h,C h a n n e l s]$ to $[B a t c h,s c a l e^{2}$ , Length/scale2, Channels] while in MSA the channel dimension is split. As we can choose different scale factors to divide the original matrix into various sizes of blocks, we name the designed module Hierarchical Attention (HA). With this approach, both global and local attention are exploited. Mathematically, given an input sequence $x\in$ RB×HW ×C, a scale factor r will split the attention matrix $M\in R^{B\times H W\times H W}$ into $r^{2}$ blocks $M_{i j}$ where $i,j\in[0,r)$ . Defining the operation $P_{i,j=0}^{r-1}M_{i j}$ to paste the attention matrix $M_{i j}$ back to the original matrix $M$ following its coordinate $[i,j]$ , a single layer of HA can be calculated as:

采用注意力机制解决了FR和NR IQA输入不同的问题。在编码器特征上计算注意力时,由于浅层特征通常具有较大的空间维度,由图像块嵌入形成的注意力矩阵可能变得非常庞大。例如,将512×512的单通道图像分割为16×16的非重叠块会产生长度为32×32=1024的序列,并生成1024×1024的注意力矩阵。因此,对每行应用softmax后,图像块之间的注意力分数可能非常相似,导致对特征的加权效果不明显。NLP和ViT模型通常通过堆叠多个注意力层来解决这个问题,但这种方法在计算成本和收敛速度方面可能效率不高。为此,我们将特征图划分为若干块,分别计算每个块的注意力,然后将结果注意力矩阵拼接成一个大矩阵。请注意,我们的方法与多头自注意力(MSA) [59]有显著区别:我们将形状为[B,L,C]的特征分割为[B,s²,L/s²,C],而MSA是对通道维度进行分割。由于可以选择不同比例因子将原始矩阵划分为不同大小的块,我们将设计的模块命名为分层注意力(HA)。通过这种方法,可以同时利用全局和局部注意力。数学上,给定输入序列x∈Rᴮ×ᴴᵂ×ᶜ,比例因子r会将注意力矩阵M∈Rᴮ×ᴴᵂ×ᴴᵂ分割为r²个块Mᵢⱼ,其中i,j∈[0,r)。定义操作Pᵢ,ⱼ₌₀ʳ⁻¹Mᵢⱼ为将注意力矩阵Mᵢⱼ按其坐标[i,j]粘贴回原始矩阵M,则单层HA可计算为:

Fig. 5. The developed Hierarchical Attention (HA) module that bridges FR and NR tasks together. Segmentation embeddings were added to input features as an indicator of NR and FR tasks. Depending on FR or NR tasks, the input pair shown above could be ${d i s t o r t i o n,r e f e r e n c e}$ or ${d i s t o r t i o n,d i s t o r t i o n}$ respectively. Besides, on top of global attention, we partitioned the attention matrix into patches to incorporate local attention as well. In practice, the HA module will stack multiple attention layers with different scale factors to fully exploit global and local attention.

图 5: 所开发的分层注意力 (HA) 模块,将 FR (全参考) 和 NR (无参考) 任务连接在一起。分割嵌入被添加到输入特征中,作为 NR 和 FR 任务的指示器。根据 FR 或 NR 任务的不同,上述输入对可以是 ${d i s t o r t i o n,r e f e r e n c e}$ 或 ${d i s t o r t i o n,d i s t o r t i o n}$。此外,在全局注意力之上,我们将注意力矩阵划分为多个块以融入局部注意力。在实际应用中,HA 模块会堆叠多个具有不同比例因子的注意力层,以充分利用全局和局部注意力。

$$

Q_{r,i j},K_{r,i j}\in R^{B\times(r)^{2}\times H W/(r)^{2}\times C}

$$

$$

Q_{r,i j},K_{r,i j}\in R^{B\times(r)^{2}\times H W/(r)^{2}\times C}

$$

$$

M_{i j}=S o f t m a x(\frac{Q_{r,i j}K_{r,i j}^{T}}{\sqrt{d_{h e a d}}})

$$

$$

M_{i j}=S o f t m a x(\frac{Q_{r,i j}K_{r,i j}^{T}}{\sqrt{d_{h e a d}}})

$$

$$

M=P_{i,j=0}^{r-1}M_{i j}

$$

$$

M=P_{i,j=0}^{r-1}M_{i j}

$$

$$

H A(x_{t},r)=x_{t}+M L P(M V),\quad V\in R^{B\times H W\times C}

$$

$$

H A(x_{t},r)=x_{t}+M L P(M V),\quad V\in R^{B\times H W\times C}

$$

The HA module can be described recursively:

HA模块可以递归描述为:

$$

H A(x_{t+1},r)=H A(H A(x_{t},r),r),\quad t=0,1,...

$$

$$

H A(x_{t+1},r)=H A(H A(x_{t},r),r),\quad t=0,1,...

$$

The overall design of the HA module is illustrated in Fig.5 where we stack multiple HA layers with different scale factors and apply residual connections. The effectiveness of the HA module will be discussed more in ablations.

HA模块的整体设计如图5所示,其中我们堆叠了多个具有不同缩放因子的HA层并应用了残差连接。HA模块的有效性将在消融实验中进一步讨论。

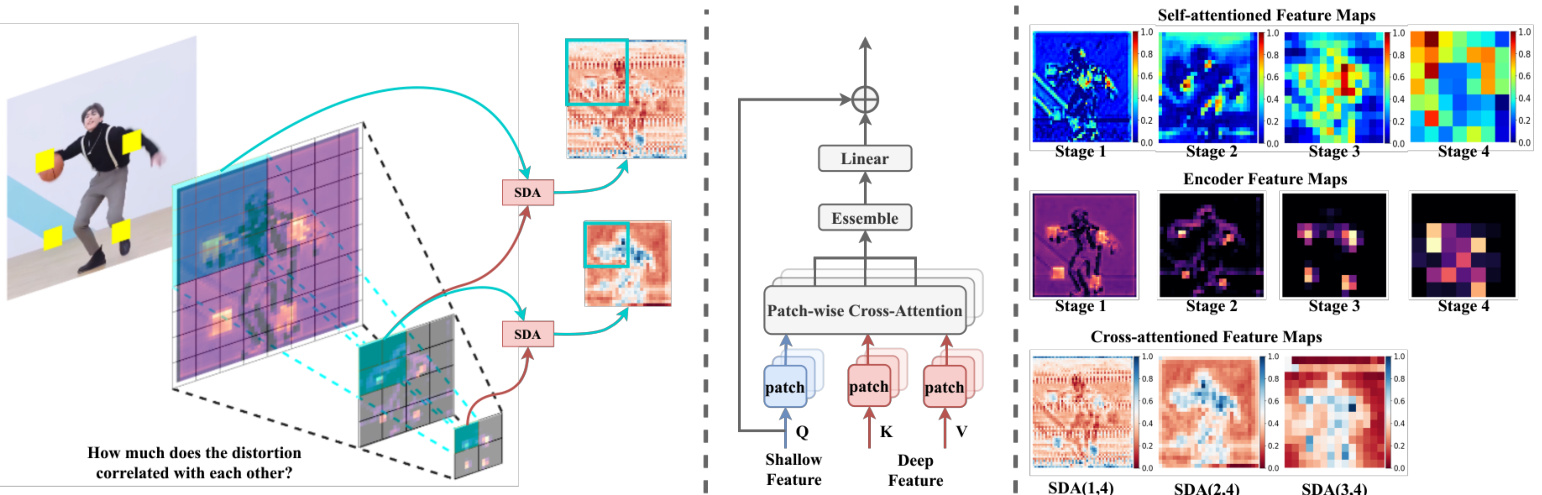

Fig. 6. Illustration of SDA (left and middle) and comparison against self-attention (right). Feature pyramid is firstly split into $n\times n$ cones. The SDA is then densely applied on encoder stages to explore the correlation between shallow and deep features so that we can estimate the semantic impact caused by distortion. SDA(1,4) denotes the weighted feature map obtained by computing cross-attention between stages 1 and 4. As illustrated on the right side, the cross-attention feature maps (last row) can reflect how distortions in shallow stages are related to the deep features, further indicating the impact the distortion caused. Self-attention features (first row), on the other side, indicate the important region in space but are inconsistent across different encoder stages and thus have a weak representation of semantic impact.

图 6: SDA示意图(左、中)及与自注意力机制的对比(右)。特征金字塔首先被分割为$n\times n$个锥形区域。随后在编码器阶段密集应用SDA来探索浅层与深层特征间的相关性,从而估计失真造成的语义影响。SDA(1,4)表示通过计算阶段1与阶段4间交叉注意力得到的加权特征图。如右侧所示,交叉注意力特征图(最后一行)能反映浅层阶段的失真如何与深层特征相关联,进一步表明失真造成的影响。而自注意力特征(第一行)仅标示空间中的重要区域,且在不同编码阶段表现不一致,因此对语义影响的表征较弱。

C. Semantic Distortion Aware (SDA) Module

C. 语义失真感知 (SDA) 模块

The HA module provides effective attention allowing the model to focus on certain regions of the image. However, as mentioned in the previous sections, relying solely on the amount of distortion in the image may not adequately reflect image quality as we ignored the impact distortion applied on deeper encoder layers. Thus, we need to assess the damage to the semantic information caused by distortion for better evaluation. Considering that the encoder itself is a natural feature extractor or feature summarizer, i.e., the details and textures in the image are gradually filtered out during the encoding process, leaving behind high-level semantic information such as location. Based on this characteristic, we propose to evaluate the amount of semantic impact caused by different types of distortion by examining their residual effects in each encoder layer. Different from common approaches [14], [56], [76] where features are aggregated or scores are averaged, we aim to estimate the semantic damage caused by the distortions from the perspective of feature consistency. The distortion that affects the next few stages of the encoder should receive more attention. For instance, we believe the impact of saltand-pepper distortion and block occlusion will reach different layers when they pass through the encoder. Salt-and-pepper distortion is likely to be filtered out effectively in the first few layers of the encoder, while block occlusion may penetrate till the last layer of the encoder, affecting the semantics of the entire image.

HA模块通过有效注意力机制使模型能够聚焦图像的特定区域。然而如之前章节所述,仅依赖图像畸变量可能无法充分反映图像质量,因为我们忽略了畸变对深层编码器层的影响。因此需要评估畸变对语义信息造成的损害以获得更优评价。考虑到编码器本身是天然的特征提取器或特征摘要器(即图像细节和纹理会在编码过程中被逐步过滤,仅保留位置等高层语义信息),我们提出通过考察各类畸变在各编码器层的残留效应来评估其语义影响量。与常见方法[14][56][76]中聚合特征或平均分数的做法不同,我们旨在从特征一致性的角度估算畸变造成的语义损伤。那些会影响编码器后续多个阶段的畸变应获得更多关注。例如盐粒噪点畸变和块状遮挡通过编码器时会抵达不同层级:盐粒噪点很可能在编码器前几层就被有效过滤,而块状遮挡可能穿透至编码器最后一层,影响整幅图像的语义。

We propose using cross-attention between different encoder layers to capture the impact of distortion on semantic meaning. By comparing the feature similarities between shallow and deep layers, we estimate the distortion residues in different layers and evaluate image quality. Therefore, we design the Semantic Distortion Aware (SDA) module, as shown in Fig.6. It is a cross-attention module in a top-down manner and is densely applied between different encoder features. At first glance, the proposed SDA might look superficially simple. In fact, instead of blindly applying the cross-attention, we partition the feature pyramid into a ”cone” and trace a local patch from shallow to deep features to estimate the semantic correlations. This design prevents meaningless interactions between a local distortion and its high-level neighbor regions during cross-attention. It bridges low-level and high-level features, focusing on how local distortions within low-level features affect high-level semantics. Meanwhile, it is more computationally efficient $(1/n^{2}$ if has $n\times n$ cones) compared with full-scale attention. The SDA module uses shallow features as queries $Q_{s}$ and deep features as keys $K_{d}$ and values $V_{d}$ to calculate the weighted map of shallow-layer features, reflecting which region on the image has a direct correlation and persistent effect on its deep semantic meanings. Given shallow and deep features $x_{s},x_{d}$ with batch $B$ , channel $C$ and spatial dimension $m\times m$ and $n\times n$ , then we have:

我们提出利用不同编码器层间的交叉注意力(cross-attention)来捕捉失真对语义的影响。通过比较浅层与深层特征间的相似性,估算各层的失真残差并评估图像质量。为此,我们设计了语义失真感知(Semantic Distortion Aware, SDA)模块,如图6所示。这是一个自上而下构建的交叉注意力模块,密集应用于不同编码器特征之间。乍看之下,SDA模块可能显得简单,但实际上我们并非盲目应用交叉注意力,而是将特征金字塔划分为"锥形"结构,通过追踪从浅层到深层特征的局部图像块来估算语义相关性。该设计避免了交叉注意力过程中局部失真区域与其高层邻域间的无意义交互,有效桥接了低层与高层特征,重点关注低层特征中的局部失真如何影响高层语义。同时,相比全局注意力机制,该模块计算效率更高(当存在$n\times n$个锥形结构时计算量为$1/n^{2}$)。SDA模块以浅层特征作为查询向量$Q_{s}$,深层特征作为键$K_{d}$和值$V_{d}$,通过计算浅层特征的权重图来反映图像中哪些区域与其深层语义存在直接关联和持续影响。给定批尺寸$B$、通道数$C$、空间维度分别为$m\times m$和$n\times n$的浅层与深层特征$x_{s},x_{d}$,其计算过程可表示为:

$$

\begin{array}{r l}&{x_{s}\in R^{B\times(m\times m)\times C},\quad x_{d}\in R^{B\times(n\times n)\times C},m>n}\ &{\quad A t t e n t i o n(Q_{s},K_{d},V_{d})=S o f t m a x(\frac{Q_{s}K_{d}^{T}}{\sqrt{d_{h e a d}}})V_{d}}\ &{S D A(x_{d},x_{s})=x_{s}+M L P(A t t e n t i o n(Q_{s},K_{d},V_{d}))}\end{array}

$$

$$

\begin{array}{r l}&{x_{s}\in R^{B\times(m\times m)\times C},\quad x_{d}\in R^{B\times(n\times n)\times C},m>n}\ &{\quad A t t e n t i o n(Q_{s},K_{d},V_{d})=S o f t m a x(\frac{Q_{s}K_{d}^{T}}{\sqrt{d_{h e a d}}})V_{d}}\ &{S D A(x_{d},x_{s})=x_{s}+M L P(A t t e n t i o n(Q_{s},K_{d},V_{d}))}\end{array}

$$

For each encoder stage $1-4$ , we apply HA to obtain features $H_{1},H_{2},H_{3}$ , and $H_{4}$ accordingly. Similarly, we densely apply SDA between encoder stage $i$ and its shallower stages $\mathrm{j}$ $(j<i)$ to obtain correlation features, $C_{i,j}$ . This will yield in $C_{4,3},C_{4,2},...,C_{2,1}$ in total six feature groups. The obtained features from the same encoder stage $i$ are averaged and concatenated along the channel dimension followed by a commonly applied patch-wise attention block [3], [26], [67] to predict quality scores, as shown in Fig.4.

对于每个编码器阶段 $1-4$,我们应用HA(层次注意力)相应获得特征 $H_{1},H_{2},H_{3}$ 和 $H_{4}$。类似地,我们在编码器阶段 $i$ 与其较浅阶段 $\mathrm{j}$ $(j<i)$ 之间密集应用SDA(跨阶段差分注意力)来获取相关性特征 $C_{i,j}$,最终将得到 $C_{4,3},C_{4,2},...,C_{2,1}$ 共六组特征。来自同一编码器阶段 $i$ 的特征经过通道维度上的平均和拼接后,输入通用的分块注意力模块 [3][26][67] 进行质量分数预测,如图4所示。

D. Training Schemes

D. 训练方案

Due to the proposed model architecture’s ability to dynamically transform computation methods by accepting FR and NR IQA inputs, we have explored the possibility of conducting joint training for FR and NR alongside traditional task-specific training. FR and NR inputs are provided to the model with a probability of $50%$ in a format of ${d i s t o r t i o n,r e f e r e n c e}$ or ${d i s t o r t i o n,d i s t o r t i o n}$ . We found that, compared to separate training, joint training not only achieves similar performance in FR but also significantly enhances NR performance. Further details will be presented in the experiments and ablation analyses.

由于所提出的模型架构能够通过接受全参考(FR)和无参考(NR)图像质量评价(IQA)输入来动态转换计算方法,我们探索了在传统任务特定训练之外进行FR和NR联合训练的可能性。模型以50%的概率接收${distortion,reference}$或${distortion,distortion}$格式的FR和NR输入。研究发现,与单独训练相比,联合训练不仅在FR上取得了相近的性能,还显著提升了NR性能。更多细节将在实验和消融分析部分展示。

ficient (SROCC) as our evaluation metrics. PLCC is defined as:

我们采用皮尔逊线性相关系数 (PLCC) 和斯皮尔曼等级相关系数 (SROCC) 作为评估指标。PLCC 定义为:

$$

P L C C={\frac{\sum_{i=1}^{n}(x_{i}-{\overline{{x}}})(y_{i}-{\overline{{y}}})}{{\sqrt{\sum_{i=1}^{n}(x_{i}-{\overline{{x}}})^{2}(y_{i}-{\overline{{y}}})^{2}}}}}

$$

$$

P L C C={\frac{\sum_{i=1}^{n}(x_{i}-{\overline{{x}}})(y_{i}-{\overline{{y}}})}{{\sqrt{\sum_{i=1}^{n}(x_{i}-{\overline{{x}}})^{2}(y_{i}-{\overline{{y}}})^{2}}}}}

$$

where $x_{i},y_{i}$ indicate the predicted and the ground truth scores, and their mean values are denoted as $\overline{{x}},\overline{{y}}$ respectively. Meanwhile, SROCC is defined as:

其中 $x_{i},y_{i}$ 分别表示预测分数和真实分数,其平均值分别记为 $\overline{{x}},\overline{{y}}$ 。同时,SROCC定义为:

$$

S R O C C=\rho=1-\frac{6\sum d_{i}^{2}}{n(n^{2}-1)}

$$

$$

S R O C C=\rho=1-\frac{6\sum d_{i}^{2}}{n(n^{2}-1)}

$$

IV. EXPERIMENTS

IV. 实验

A. Datasets and Metrics

A. 数据集和指标

We evaluate our algorithm on conventional seven NR benchmark datasets and four FR benchmark datasets. In addition, we also report the results on the PIPAL [15] dataset consisting of GAN-generated images. For NR benchmarks, four synthetic distorted datasets, TID2013 [51], LIVE [55], CSIQ [27], and Kadid10k [32] are adopted. These synthetic distorted datasets are also employed for FR evaluations. Besides, we use three authentic datasets, namely LIVE Challenge [12], KonIQ10k [19], and LIVEFB [68], to further test our model’s performance on NR tasks.

我们在常规的7个NR基准数据集和4个FR基准数据集上评估了算法性能,同时报告了包含GAN生成图像的PIPAL [15]数据集结果。针对NR基准测试,采用了TID2013 [51]、LIVE [55]、CSIQ [27]和Kadid10k [32]四个合成失真数据集,这些数据集也用于FR评估。此外,我们使用LIVE Challenge [12]、KonIQ10k [19]和LIVEFB [68]三个真实失真数据集进一步测试模型在NR任务上的表现。

TID2013: TID2013 dataset contains 3000 distorted images derived from 25 pristine images with 24 different distortion types at 5 degradation levels. Mean Opinion Scores are ranging from 0 to 9.

TID2013: TID2013数据集包含3000张失真图像,源自25张原始图像,涵盖24种不同的失真类型,每种类型有5个退化等级。平均意见分数(MOS)范围为0到9。

LIVE: Live degrades 29 pristine images by adding additive white Gaussian noise, blurring Gaussian, compressing JPEG, compressing JPEG2000, and fast fading, resulting in 770 distorted images in total.

LIVE:通过添加加性高斯白噪声、高斯模糊、JPEG压缩、JPEG2000压缩和快速衰落对29张原始图像进行降质处理,最终生成共计770张失真图像。

CSIQ: The dataset contains 886 distorted images originating from 30 pristine images. The CSIQ database includes six types of distortions, each with four or five levels of distortion respectively.

CSIQ: 该数据集包含来自30张原始图像的886张失真图像。CSIQ数据库包含六种失真类型,每种失真分别有四到五个失真级别。

Kadid10K: This dataset contains 10,125 images that have been degraded based on 81 pristine images. Five levels of distortion are applied to each pristine image.

Kadid10K: 该数据集包含10,125张基于81张原始图像进行降质的图像。每张原始图像应用了五种失真等级。

KONIQ-10K: The KONIQ-10K dataset consists of 10,073 images covering a wide variety of images from various domains, including nature, people, animals, and objects. Each image in KONIQ-10K is rated on a scale from 1 to 10.

KONIQ-10K: KONIQ-10K数据集包含10,073张涵盖自然、人物、动物和物体等多个领域的多样化图像。每张图像均按1到10分进行评分。

LIVE-Challenge: The LIVE-Challenge dataset consists of 1,160 distorted images. The distortions include compression artifacts, noise, blurring, and other types of impairments.

LIVE-Challenge: LIVE-Challenge数据集包含1,160张失真图像,失真类型包括压缩伪影 (compression artifacts)、噪声、模糊以及其他类型的损伤。

LIVE-FB: The LIVE-FB dataset is the largest in-the-wild NR-IQA dataset by far and it contains around 40 thousand distorted images and 1.2 million image patches labeled by crowd-sourcing.

LIVE-FB:LIVE-FB数据集是目前最大的野外无参考图像质量评估(NR-IQA)数据集,包含约4万张失真图像和120万个通过众包标注的图像块。

PIPAL: The PIPAL dataset introduces GAN-generated images as a new distortion type. Unlike conventional distortion types, the outputs from GAN-based models follow the natural image distribution quite well but with the wrong details. The PIPAL dataset consists of 250 pristine images with 40 distortion types and in total over 1 million labelings.

PIPAL: PIPAL数据集将GAN生成的图像作为一种新的失真类型引入。与传统失真类型不同,基于GAN的模型输出能很好地遵循自然图像分布,但细节存在错误。该数据集包含250张原始图像、40种失真类型,总计超过100万条标注数据。

For metrics, we adopt Pearson’s Linear Correlation Coefficient (PLCC) and Spearman’s Rank Order Correlation Coefwhere $n$ is the total number of samples, and $d_{i}$ is the rank difference of the $i^{t h}$ test image between ground-truth and predictions.

在指标方面,我们采用皮尔逊线性相关系数 (PLCC) 和斯皮尔曼秩相关系数 (SROCC),其中 $n$ 为样本总数,$d_{i}$ 表示第 $i^{t h}$ 张测试图像在真实值与预测值之间的秩差。

B. Implementation Details

B. 实现细节

For the PIPAL dataset, we follow their official split and training protocols mentioned in the paper. For the other datasets mentioned in Sec.IV-A, we randomly sample $80%$ of the data as the training dataset and the rest as the testing dataset. We choose ResNet50 and Swin Transformer as our encoder backbone to compare with other CNN and transformer models respectively. During training, images are augmented by random cropping with a size of $224\times224$ and random horizontal flipping by $50%$ chance. The learning rate is set to $1e^{-4}$ using the Cosine Anne ling scheduler with Tmax and eta min set to 50 and 0, respectively. We use a batch size of 8 and Adam optimizer [24] with default parameters. The network is trained using L2 loss for 100 epochs. During testing, each image is randomly cropped into $224\times224$ for 20 times, and their averaged prediction scores are calculated. We train the network 5 times using different seeds and report the mean value. Code will be released upon acceptance.

对于PIPAL数据集,我们遵循论文中提到的官方划分和训练方案。对于第IV-A节中提到的其他数据集,我们随机抽取80%数据作为训练集,其余作为测试集。我们选择ResNet50和Swin Transformer作为编码器骨干网络,分别与其他CNN和Transformer模型进行对比。训练过程中,图像通过随机裁剪(224×224尺寸)和50%概率水平翻转进行数据增强。学习率设置为1e-4,采用Tmax=50、eta_min=0的余弦退火调度器。使用批大小为8的Adam优化器[24](默认参数),通过L2损失函数训练100个周期。测试阶段,每张图像被随机裁剪20次(224×224尺寸),计算其预测得分的平均值。我们使用不同随机种子训练网络5次并报告均值。代码将在论文录用后开源。

C. Comparisons with the State-of-the-art

C. 与最先进技术的比较

Single Task Training We compare our proposed YOTO against 13 NR IQA algorithms in the field, namely DIIVINE [53], BRISQUE [47], ILNIQE [72], BIECON [23], MEON [40], WaDIQaM [4], DBCNN [74], MetaIQA [76], P2P-BM [69], HyperIQA [56], TReS [14], TIQA [70], and MANIQA [67]. ResNet50 and Swin Transformer are used as our backbones respectively in order to compare the performance of other ResNet50-based and Transformer-based models. As listed in Tab.I, our proposed network outperforms other algorithms on both ResNet-base and Transformer-based architectures.

单任务训练

我们将提出的YOTO与领域内13种无参考图像质量评估(NR IQA)算法进行比较,分别为:DIIVINE [53]、BRISQUE [47]、ILNIQE [72]、BIECON [23]、MEON [40]、WaDIQaM [4]、DBCNN [74]、MetaIQA [76]、P2P-BM [69]、HyperIQA [56]、TReS [14]、TIQA [70]和MANIQA [67]。实验分别采用ResNet50和Swin Transformer作为骨干网络,以对比其他基于ResNet50和基于Transformer模型的性能。如表1所示,我们提出的网络在基于ResNet和基于Transformer的架构上均优于其他算法。

Besides, we compare YOTO against 6 FR IQA methods, which are DOG [50], DeepQA [22], DualCNN [58], WaDIQaM [3], PieAPP [52], and AHIQ [26]. As shown in Tab.II, our proposed method performs in line with the current state-of-the-art model AHIQ, which indicates the effectiveness of our proposed unified architecture on IQA tasks. Moreover, predicted quality scores shown in Tab.IV further indicate our proposed YOTO performing well on both FR and NR tasks. The predicted IQA scores can correctly align